by Contributed | May 25, 2021 | Technology

This article is contributed. See the original author and article here.

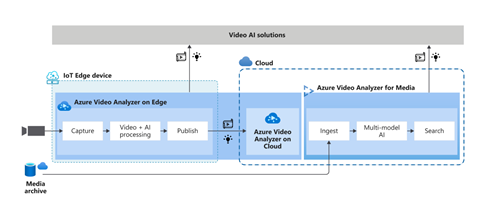

At Microsoft Build 2021, we are pleased to announce the availability of Azure Video Analyzer as an Azure Applied AI Service. Azure Video Analyzer (AVA) is an evolution of Live Video Analytics (LVA), which was launched several months ago. As an Azure Applied AI service, AVA provides a platform for solution builders to build human-centric, semi-autonomous business environments with video analytics. AVA enables businesses to reduce the cost of their business operations using existing video cameras that are already deployed in their business environments.

Many businesses take a manual approach to monitoring mundane business operations. This approach is prone to errors and missed events, both because people make mistakes and because assessing the criticality of a visual event sometimes requires correlating multiple signals in real time. AVA, in conjunction with other Azure services, addresses this challenge by providing a mechanism for businesses to automate mundane visual observations of operations and enable employees to focus on the critical tasks at hand.

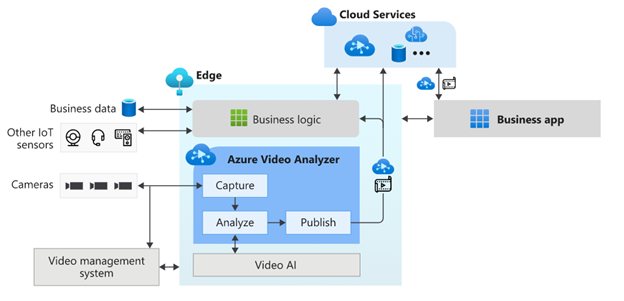

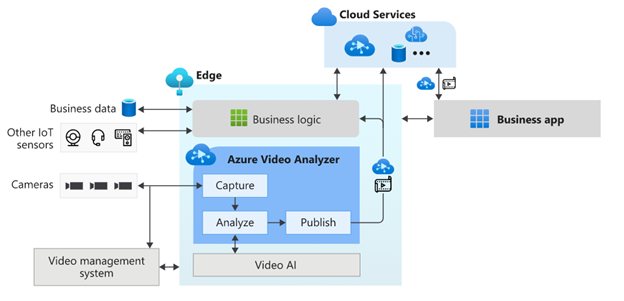

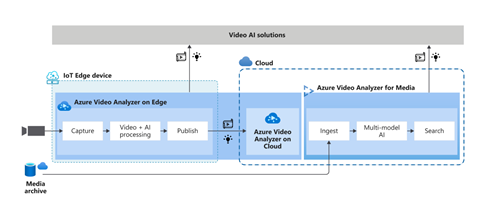

AVA is a hybrid service spanning the edge and the cloud. The edge component of AVA is available via the Azure Marketplace and is referred to as “Azure Video Analyzer Edge”; it can be used on any x64 or ARM64 Linux device. AVA Edge enables capturing live video from any RTSP-enabled video sensor, analyzing live video using the AI of your choice, and publishing results to edge or cloud applications. AVA Cloud Service is a managed cloud platform that offers REST APIs for secure management of the AVA Edge module and for video management functionality. This provides the flexibility to build video analytics-enabled IoT applications that can be operated locally from within a business environment as well as from a centralized remote corporate location.

AVA can be used with Microsoft-provided AI services such as Cognitive Services Custom Vision and Spatial Analysis, as well as AI services provided by companies such as Intel. It is also possible to build and integrate a custom AI service that incorporates open-source custom AI models. Business logic that correlates AI insights from AVA with signals from other IoT sensors can be integrated to drive custom business workflows.

AVA has been used by companies such as DOW Inc., Lufthansa CityLine, and Telstra to solve problems such as chemical leak detection, optimizing aircraft turnaround, and traffic analytics. AVA has enabled DOW to get closer than ever to reaching their safety goal of zero safety-related incidents. In the case of Lufthansa CityLine, AVA has enabled human coordinators to make better data-driven decisions to reduce aircraft turnaround time, thus reducing costs and improving customer satisfaction significantly. Telstra has been able to unlock new 5G business opportunities with the combination of Azure Video Analyzer, Azure Percept, and Azure Stack Edge.

AVA offers all the capabilities that were available in LVA and more. A short summary of some of the prominent capabilities follows:

Process live video at the Edge

AVA Edge can be deployed on any Azure IoT Edge-enabled x64 or ARM64 Linux device. Live video from existing cameras can be directed to AVA and processed on the edge device within the business environment’s network. In turn, this provides organizations with a solution that accommodates limited bandwidth and potential internet connectivity issues, all while maintaining the privacy of their environments.

Analyze video with AI of choice

As mentioned earlier, AVA can be integrated with Microsoft-provided AI or with AI that is custom built to solve a niche problem, such as Intel’s OpenVINO™ DL Streamer, which provides capabilities such as object detection (a person, a vehicle, or a bike), object classification (vehicle attributes) and object tracking (person, vehicle and bike).

Azure AI is based on Microsoft’s Responsible AI Principles, and the Transparency Note provides additional guidance on designing responsible AI integrations.

Flexible video workflows

In addition to the existing building blocks provided by LVA, the current release of AVA enables a variety of live video workflows via new building blocks such as Object Tracker, Line Crossing, Cognitive Services extension processor and Video Sink.
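To give a feel for what a line-crossing building block computes, here is a minimal, self-contained sketch in Python. It is not AVA's implementation and uses no AVA API: the function names, the normalized coordinates, and the idea of feeding it the centre points of a tracked object are all assumptions made for illustration. It simply counts how many times a track of points strictly crosses a line segment, using the standard orientation (signed area) test.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]


def _side(a: Point, b: Point, p: Point) -> float:
    """Signed area test: > 0 if p is left of a->b, < 0 if right, 0 if collinear."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def segments_cross(p1: Point, p2: Point, q1: Point, q2: Point) -> bool:
    """True if segment p1-p2 strictly crosses segment q1-q2 (touching does not count)."""
    d1 = _side(q1, q2, p1)
    d2 = _side(q1, q2, p2)
    d3 = _side(p1, p2, q1)
    d4 = _side(p1, p2, q2)
    return d1 * d2 < 0 and d3 * d4 < 0


def count_line_crossings(track: Sequence[Point], line: Tuple[Point, Point]) -> int:
    """Count how many consecutive steps of a tracked object's path cross the line."""
    a, b = line
    return sum(
        segments_cross(track[i], track[i + 1], a, b)
        for i in range(len(track) - 1)
    )


if __name__ == "__main__":
    # Hypothetical vertical line in the middle of a frame (normalized coordinates).
    doorway = ((0.5, 0.0), (0.5, 1.0))
    # Hypothetical centre points of one tracked object across four frames.
    walk_through = [(0.2, 0.5), (0.4, 0.5), (0.6, 0.5), (0.8, 0.5)]
    print(count_line_crossings(walk_through, doorway))
```

In a real pipeline the track would come from an object tracker running upstream, which is why the two building blocks are typically used together.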

Simplify app development with easy-to-use widgets

AVA provides a video playback widget which simplifies app development with a secure video player and enables visualization of AI metadata overlaid on video.

Azure Video Analyzer offers all these capabilities and more. Take a look at the Azure Video Analyzer product page and documentation page to learn more.

by Contributed | May 25, 2021 | Dynamics 365, Microsoft 365, Technology

The past year has brought dramatic change to almost every facet of our lives. We have seen the same disruption play out across every industry, from retail to healthcare to financial services. Customer expectations are fundamentally altered, and it’s hard to imagine things going back to the way they once were.

As organizations work to stay relevant in this new world, they need digital solutions and a strong software development strategy to meet the evolving needs of their customers. That means they need developers.

But for developers, the way we have thought about development and product management has changed little in the past 50 years. Although we have moved from binary to assembly to third-generation languages, we have not seen a fundamental shift.

As organizations look for industry-specific digital solutions tailored for their space, developers need to increasingly shift their mindset from coding to composing clouds and components.

The post Build differentiated SaaS apps with Microsoft Industry Clouds appeared first on Microsoft Dynamics 365 Blog.

Brought to you by Dr. Ware, Microsoft Office 365 Silver Partner, Charleston SC.

by Contributed | May 25, 2021 | Technology

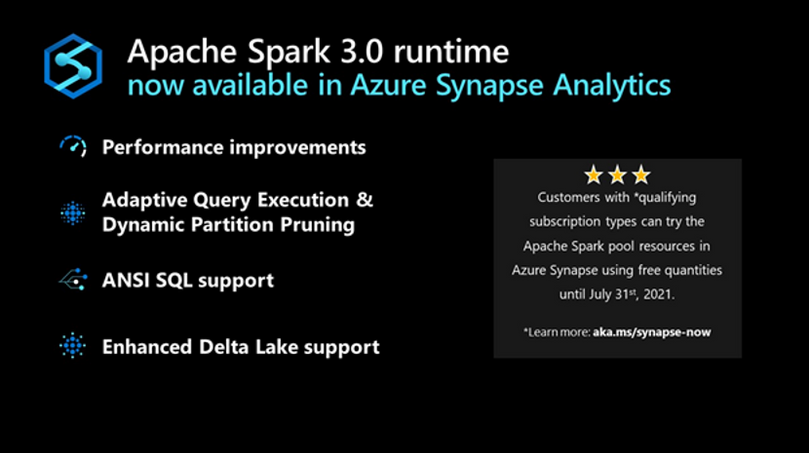

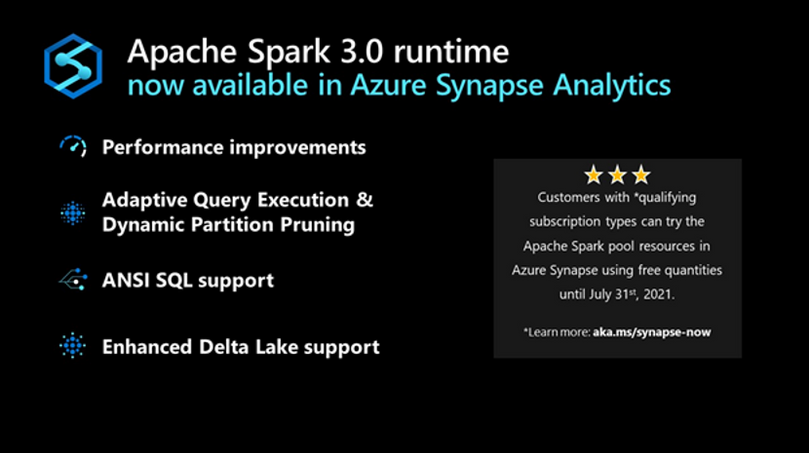

Starting today, the Apache Spark 3.0 runtime is available in Azure Synapse. This version builds on top of existing open-source and Microsoft-specific enhancements to include the additional improvements listed below. The combination of these enhancements results in significantly faster processing than open-source Spark 3.0.2 and 2.4.

The public preview announced today starts with the foundation based on the open-source Apache Spark 3.0 branch with subsequent updates leading up to a Generally Available version derived from the latest 3.1 branch.

Performance Improvements

In large-scale distributed systems, performance is never far from the top of mind; “to do more with the same” or “to do the same with less” are always key measures. In addition to the Azure Synapse performance improvements announced recently, Spark 3 brings new enhancements and the opportunity for the performance engineering team to do even more great work.

Predicate Pushdown and more efficient Shuffle Management build on the common performance patterns/optimizations that are often included in releases. The Azure Synapse specific optimizations in these areas have been ported over to augment the enhancements that come with Spark 3.

Adaptive Query Execution (AQE)

There is an attribute of data processing jobs run by data-intensive platforms like Apache Spark that differentiates them from more traditional data processing systems like relational databases. It is the volume of data and subsequently the length of the job to process it. It’s not uncommon for queries/data processing steps to take hours or even days to run in Spark. This presents unique challenges and opportunities to take a different approach to optimize and access the data. Over several days the query plan shape can change as estimates of data volume, skew, cardinality, etc., are replaced with actual measurements.

Adaptive Query Execution (AQE) in Azure Synapse provides a framework for dynamic optimization that brings significant performance improvement to Spark workloads and gives valuable time back to data and performance engineering teams by automating manual tasks.

AQE assists with:

- Shuffle partition tuning: This is a major source of manual work data teams deal with today.

- Join strategy optimization: This requires human review today and deep knowledge of query optimization to tune the types of joins used based on actual rather than estimated data.
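The two bullets above can be sketched as a toy model in plain Python. This is not Spark's code, and the function names and numbers are made up for illustration. In open-source Spark 3.0 the real behaviour is switched on with settings such as `spark.sql.adaptive.enabled` and `spark.sql.adaptive.coalescePartitions.enabled`; whether and how Azure Synapse exposes them is not stated in this post. The sketch only shows the underlying idea: once a shuffle stage has run, actual partition sizes are known, so small adjacent partitions can be merged and a join can be switched to a broadcast when one side turns out to be small.

```python
from typing import List


def coalesce_partitions(sizes_mb: List[int], target_mb: int) -> List[List[int]]:
    """Greedily merge adjacent shuffle partitions until adding one more would exceed the target."""
    groups: List[List[int]] = []
    current: List[int] = []
    current_size = 0
    for index, size in enumerate(sizes_mb):
        # Close the current group if this partition would push it over the target.
        if current and current_size + size > target_mb:
            groups.append(current)
            current, current_size = [], 0
        current.append(index)
        current_size += size
    if current:
        groups.append(current)
    return groups


def choose_join_strategy(small_side_mb: float, broadcast_threshold_mb: float = 10.0) -> str:
    """Pick a join type from the measured (not estimated) size of the smaller input."""
    if small_side_mb <= broadcast_threshold_mb:
        return "broadcast_hash_join"
    return "sort_merge_join"


if __name__ == "__main__":
    # Six shuffle partitions with very uneven actual sizes, in MB (hypothetical numbers).
    print(coalesce_partitions([10, 20, 30, 90, 5, 5], target_mb=64))
    # -> [[0, 1, 2], [3], [4, 5]]
    print(choose_join_strategy(4.0))    # -> broadcast_hash_join
    print(choose_join_strategy(250.0))  # -> sort_merge_join
```

The oversized partition (index 3) is left on its own here; handling skew by splitting such partitions is a separate optimization that this sketch does not model.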

Dynamic Partition Pruning

One of the common optimizations in high-scale query processors is eliminating the reading of certain partitions, with the adage that the less you read, the faster you go. However, not all partition elimination can be done as part of query optimization; some require execution time optimization. This feature is so critical to the performance that we added a version of this to the Apache Spark 2.4 codebase used in Azure Synapse. This is also built into the Spark 3.0 runtime now available in Azure Synapse.
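As a rough illustration of why some partition elimination can only happen at execution time, consider a fact table partitioned by date that is joined to a small date dimension with a filter on it. The set of matching dates is only known once the dimension filter has actually run. The toy Python below (hypothetical table contents and function name, not Spark code) evaluates the dimension filter first and then scans only the fact partitions whose key survived.

```python
from typing import Callable, Dict, List

Row = Dict[str, object]


def partitions_to_scan(
    fact_partitions: Dict[str, List[Row]],
    dim_rows: List[Row],
    dim_predicate: Callable[[Row], bool],
    join_key: str,
) -> Dict[str, List[Row]]:
    """Return only the fact partitions whose partition key survives the dimension filter."""
    # Step 1 (run time): evaluate the filter on the small dimension table.
    surviving_keys = {row[join_key] for row in dim_rows if dim_predicate(row)}
    # Step 2: skip every fact partition that cannot contribute to the join.
    return {
        key: rows for key, rows in fact_partitions.items() if key in surviving_keys
    }


# Hypothetical fact table, partitioned by date.
SALES_BY_DATE: Dict[str, List[Row]] = {
    "2021-05-23": [{"amount": 10}],
    "2021-05-24": [{"amount": 20}, {"amount": 5}],
    "2021-05-25": [{"amount": 7}],
}

# Hypothetical date dimension.
DATES: List[Row] = [
    {"date": "2021-05-23", "is_weekend": True},
    {"date": "2021-05-24", "is_weekend": False},
    {"date": "2021-05-25", "is_weekend": False},
]

if __name__ == "__main__":
    weekday_sales = partitions_to_scan(
        SALES_BY_DATE, DATES, lambda d: not d["is_weekend"], "date"
    )
    print(sorted(weekday_sales))  # only the two weekday partitions are read
```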

ANSI SQL

Over the last 25+ years, SQL has become and continues to be one of the de-facto languages for data processing; even when using languages such as Python, C#, R, Scala, these frequently just expose a SQL call interface or generate SQL code.

One of SQL’s challenges as a language, going back to its earliest days, has been that different vendors’ implementations (including Spark SQL) are incompatible with each other. ANSI SQL is generally seen as the common definition across all implementations, so using ANSI SQL minimizes rework and relearning. As part of Apache Spark 3, there has been a big push to improve ANSI compatibility within Spark SQL.

With these changes in place in Azure Synapse, the majority of folks who are familiar with some variant of SQL will feel very comfortable and productive in the Spark 3 environment.

Pandas

While we tend to focus on high-scale algorithms and APIs when working on a platform like Apache Spark, that does not diminish the value of highly popular and heavily used local-only APIs like pandas. In fact, for some time, Spark has included support for User Defined Functions (UDFs), which make it easier and more scalable to run these local-only libraries rather than just running them in the driver process.

Given that ~70% of all API calls on Spark are Python, supporting the language APIs is critical to maximize existing skills. In Spark 3, the UDF capability has been upgraded to use a capability only available in newer versions of Python: type hints. When combined with a new UDF implementation, with support for new Pandas UDF APIs and types, this release supports existing skills in a more performant environment.

Accelerator aware scheduling

The sheer volume of data and the richness of required analysis have made ML a core workload for systems such as Apache Spark. While it has been possible to use GPUs together with Spark for some time, Spark 3 includes optimization in the scheduler, a core part of the system, brought in from the Hydrogen project to support more efficient use of (hardware) accelerators. For hardware-accelerated Spark workloads running in Azure Synapse, there has been deep collaboration with Nvidia to deliver specific optimizations on top of their hardware and some of their dedicated APIs for running GPUs in Spark.

Delta Lake

Delta Lake is one of the most popular projects that can be used to augment Apache Spark. Azure Synapse uses the Linux Foundation open-source implementation of Delta Lake. Unfortunately, when running on Spark 2.4, the highest version of Delta Lake that is supported is Delta Lake 0.6.1. Adding support for Spark 3 means that newer versions of Delta Lake can be used with Azure Synapse. Currently, Azure Synapse is shipping with support for Linux Foundation Delta Lake 0.8.

The biggest enhancements in 0.8 versus 0.6.1 are primarily around the SQL language and some of the APIs. It is now possible to perform most DDL and DML operations without leaving the Spark SQL language/environment. In addition, there have been significant enhancements to the MERGE statement/API (one of the most powerful capabilities of Delta Lake) expanding scope and capability.
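For readers who have not used MERGE before, the basic "upsert" semantics can be sketched in a few lines of plain Python. This is a toy model of the simplest MERGE shape (update when matched, insert when not matched), not Delta Lake's API or implementation; the table contents and the `merge_upsert` name are made up for illustration, and the richer clauses mentioned above are not modelled.

```python
from typing import Dict, List

Row = Dict[str, object]


def merge_upsert(target: Dict[object, Row], source: List[Row], key: str) -> Dict[object, Row]:
    """Toy MERGE: update rows whose key matches, insert rows whose key does not."""
    result = {k: dict(v) for k, v in target.items()}  # leave the input untouched
    for row in source:
        row_key = row[key]
        if row_key in result:
            # WHEN MATCHED THEN UPDATE: source columns overwrite target columns.
            result[row_key].update(row)
        else:
            # WHEN NOT MATCHED THEN INSERT
            result[row_key] = dict(row)
    return result


if __name__ == "__main__":
    customers = {
        1: {"id": 1, "name": "Ada", "city": "London"},
        2: {"id": 2, "name": "Lin", "city": "Oslo"},
    }
    updates = [
        {"id": 2, "city": "Bergen"},                    # existing key: update
        {"id": 3, "name": "Sam", "city": "Charleston"},  # new key: insert
    ]
    merged = merge_upsert(customers, updates, key="id")
    print(merged[2]["city"], sorted(merged))
```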

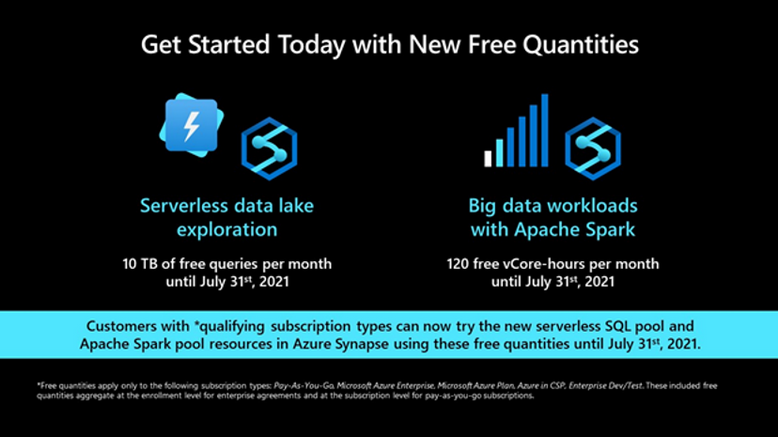

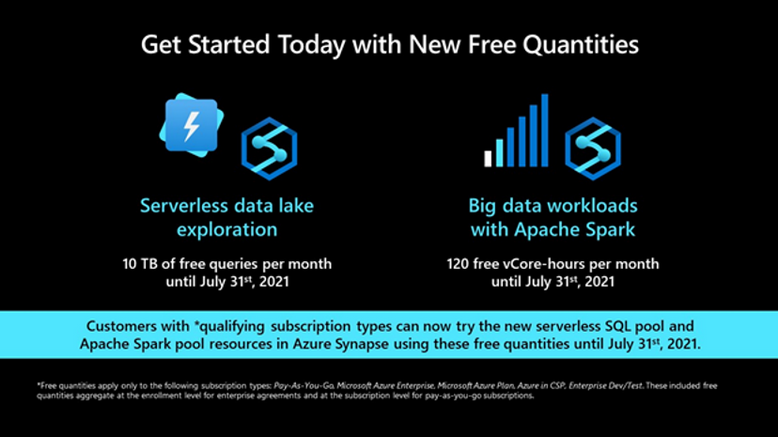

Get Started Today

Customers with *qualifying subscription types can now try the Apache Spark pool resources in Azure Synapse using free quantities until July 31st, 2021 (up to 120 free vCore-hours per month).

by Contributed | May 25, 2021 | Technology

Meet the New Standard in Workflow

Today marks a new chapter for integration at Microsoft – the General Availability (GA) of Logic Apps Standard, our new single-tenant offering: a flexible, containerized, modern cloud-scale workflow engine you can run anywhere. Today, integration is more important than ever. It connects organizations with their most valuable assets – customers, business partners and their employees. It makes things happen, seamlessly and silently, to power experiences we take for granted: APIs being called by your TV to browse must-watch shows or catch the latest weather, snagging a bargain on your favorite website (with all the stock checking, order fulfillment and credit card charging running as backend workflows), booking vacations when that was a thing, keeping us all safe by scheduling vaccine appointments on our phones, and checking in with friends and family wherever we are. The list goes on. Integration is everything.

Breaking Through the Cloud Barrier

Logic Apps has always been central to our industry-leading modern cloud integration platform – Azure Integration Services. But it was stuck in the cloud, our cloud. We know that business can’t always be bounded like this, and integration needs to be pervasive and accessible, connecting to where things are today, where they need to be tomorrow and where they might be in the future. For that, you need to be able to extend the reach of your network using an integration platform that can truly meet you where you are. Welcome Logic Apps Standard, our born-in-the-cloud integration engine that can now be deployed anywhere – our cloud, your cloud, their cloud, on-premises or edge. And your laptop or dev machine for local development. Windows, Linux or Mac. Anywhere.

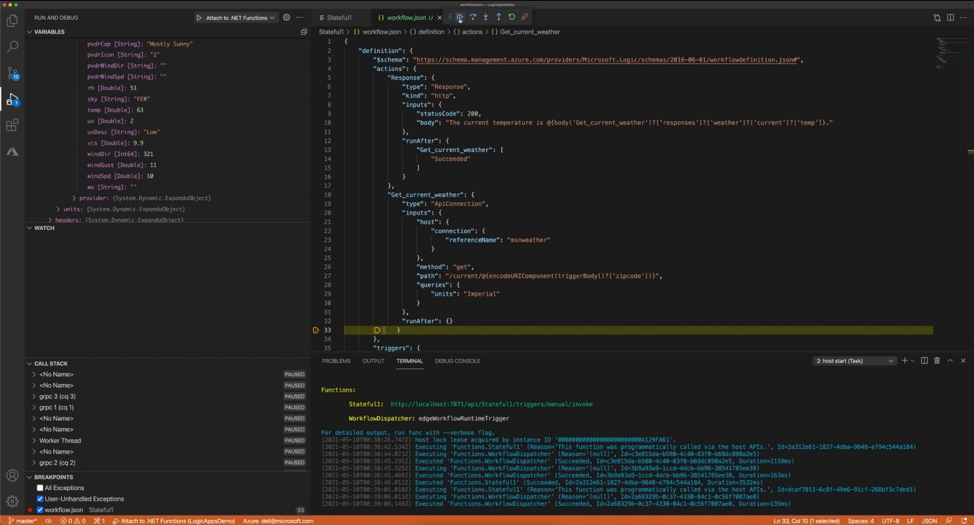

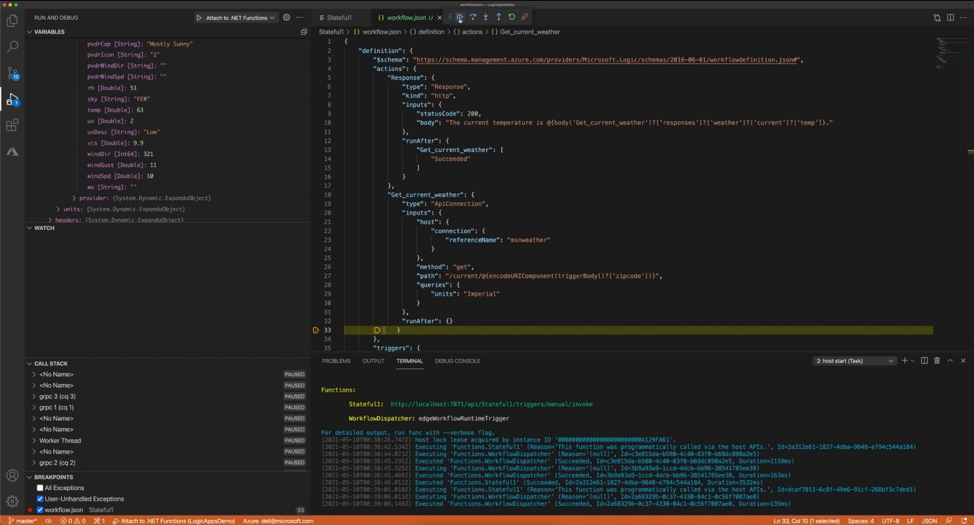

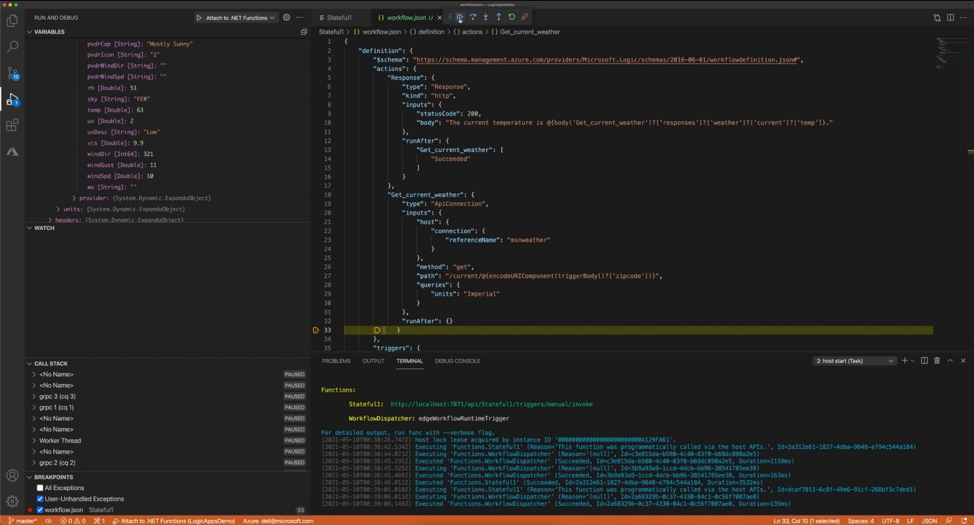

Figure 1. New VS Code extension.

The Speed You Need

We’ve also introduced new Stateless Workflows. As their name implies, this is a new workflow type in Logic Apps that doesn’t need storage to persist state between actions, making your workflows run faster and saving you money. What’s not to like about that? Stateless Workflows open up new high-volume, high-throughput scenarios for real-time processing of events, messages, APIs and data. We’ve achieved performance improvements across both Stateful and Stateless workflows with a new connector model, built into the runtime, that provides high performance for some of our most common connectors – Service Bus, Event Hubs, Blob, SQL and MQ. You can also now write your own connectors in .NET, just like we do, with all the same benefits, using our new extensibility model for custom connectors.
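As a rough sketch of how the workflow type shows up in a project, the Python below generates a minimal `workflow.json` skeleton. The layout (a `definition` object plus a top-level `kind` of `Stateful` or `Stateless`) and the schema URL reflect my understanding of the single-tenant project format and should be treated as assumptions to check against the Logic Apps documentation; the helper name `make_workflow` is purely illustrative.

```python
import json

# Assumed schema URL for workflow definitions; verify against the official docs.
WORKFLOW_SCHEMA = (
    "https://schema.management.azure.com/providers/Microsoft.Logic/"
    "schemas/2016-06-01/workflowdefinition.json#"
)


def make_workflow(kind: str = "Stateless") -> dict:
    """Build an empty workflow skeleton of the requested kind."""
    if kind not in ("Stateful", "Stateless"):
        raise ValueError(f"unknown workflow kind: {kind!r}")
    return {
        "definition": {
            "$schema": WORKFLOW_SCHEMA,
            "contentVersion": "1.0.0.0",
            "triggers": {},  # e.g. an HTTP request trigger would go here
            "actions": {},   # the steps of the workflow
            "outputs": {},
        },
        "kind": kind,
    }


if __name__ == "__main__":
    # Print a skeleton that could be saved as workflow.json inside a workflow folder.
    print(json.dumps(make_workflow("Stateless"), indent=2))
```

Keeping the kind as a single top-level field means the same definition can be tried in either mode by changing one value, which is handy when comparing run history needs against throughput.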

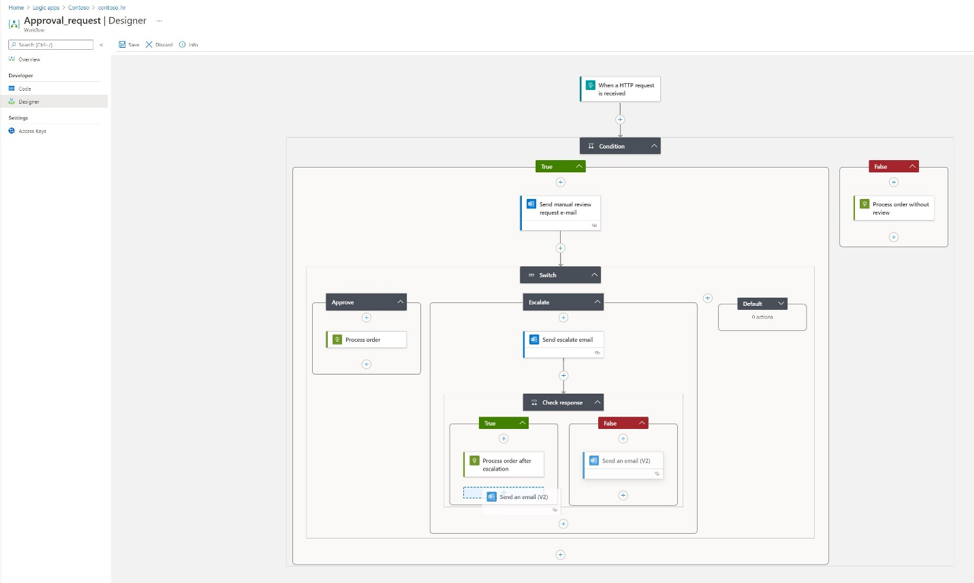

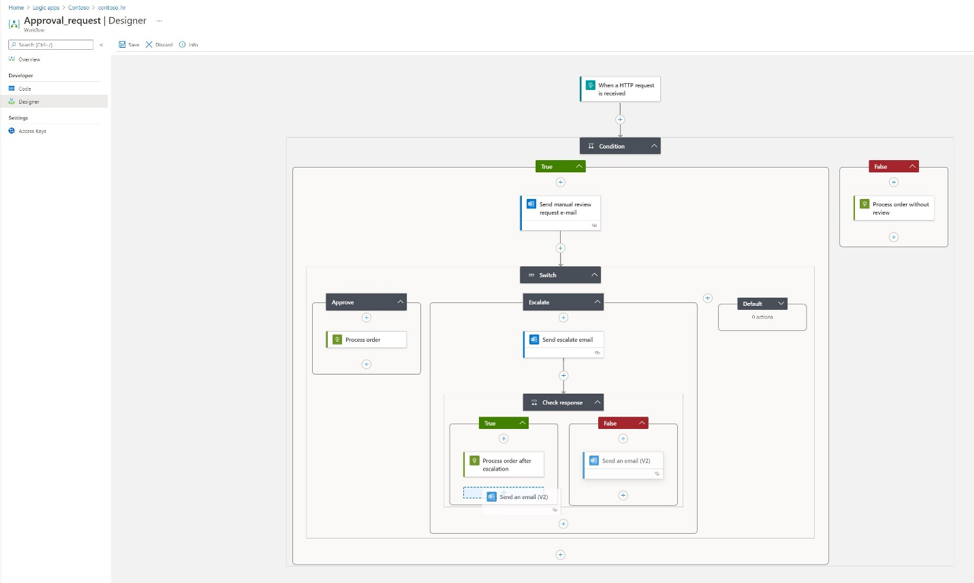

A New Designer Designed For You

We didn’t want to reimagine our new runtime without reimagining the designer too: the no-code magic that brings integration to everyone, not just those who can code – or have time to. The canvas now allows you to bring your most complex business workflow, orchestration and automation problems. It has been recreated with a more modern look and a new layout engine that makes complex workflows render faster than ever, with full drag/drop, a new dedicated editing pane to de-clutter the whole experience, and new accessibility support and other gestures to make authoring easier than ever. For everyone.

But that’s not all. We’ve also created a new VS Code extension for authoring, allowing you – for the first time – to easily debug and test on your local machine, set breakpoints, examine variable values in flight and, generally, just do what you do faster – in the world’s most popular IDE.

Figure 2. New Workflow Designer.

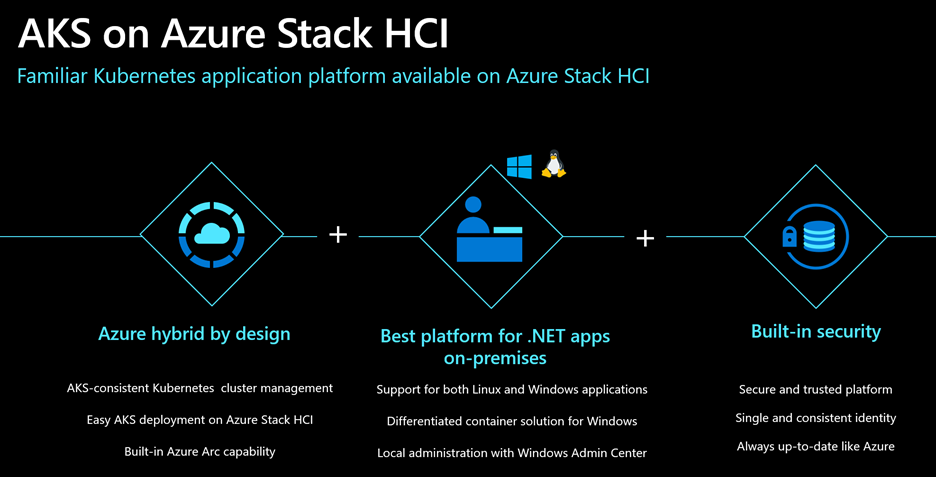

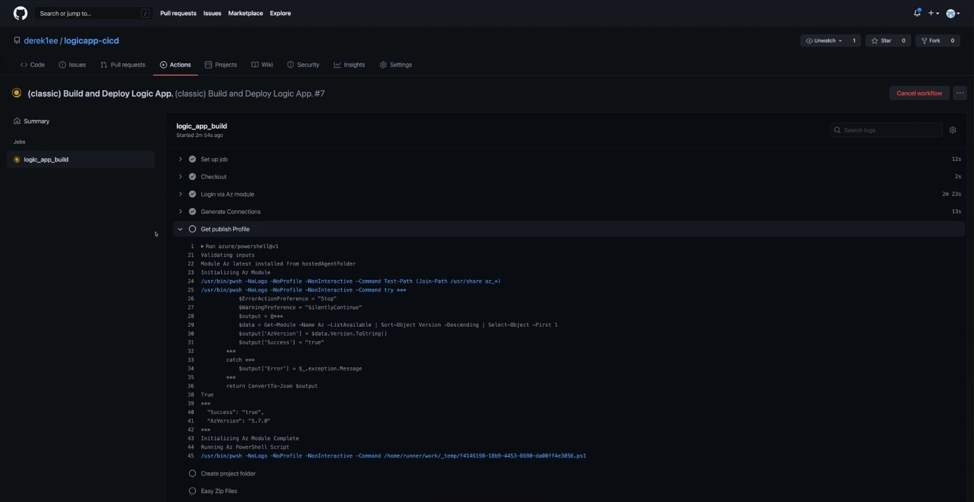

Easier To Live With

As well as leaps forward in our runtime, our designer and general ‘developer flow’ we know that getting your great work to production with as little manual effort and intervention as possible is also what you need. We’ve worked on making it possible to parameterize your workflows in Logic Apps Standard so that you can automate deployments and set environment-specific values in your pipelines to make DevOps a snap. You can choose what you’re familiar with to stay in your groove with support for both Azure DevOps pipelines and GitHub Actions – with provided templates to help you get productive as quickly as possible. You’re able to take an infrastructure as code approach to deploy your solutions and use CI/CD practices to enable you and your team to iterate and deploy without friction as fast as your business demands.

Logic Apps Standard also now provides App Insights support, allowing you to ‘see’ your running processes as data flows between endpoints and to monitor them using Azure Monitor as well as a host of other Azure built-in management capabilities.

Figure 3. DevOps with Logic Apps.

You’re Always In Control

Because Logic Apps Standard runs on App Service – powering over 2 million web apps serving 40 billion requests per day – you get all the same great benefits that make App Service great too. Auto scale, virtual network (VNet) support and Private Endpoints – right there at your fingertips – to build amazing solutions that span Web, Workflow and Functions. And of course, because Logic Apps is part of Azure Integration Services too, you can easily connect your applications using over 450 connectors, publish and consume APIs with API Management with just a few clicks and process events with Event Grid at planet-scale.

So What’s Next?

In a word, lots! Today we’ve also released the public preview of Logic Apps (and our other PaaS application services) on Azure Arc. Arc brings a new level of distributed deployment and centralized management to your application and integration environments. We’re also readying SQL support (Azure SQL, SQL Server, SQL Data Services), enabling you to run workloads fully locally with no Azure dependency on storage. This is now in private preview; you can sign up here to express interest and get early access before the rest.

See For Yourself

Don’t just take our word for it: watch our Build session on-demand here, where Derek Li will take you through everything that’s new to get you up to speed. You’ll see how ASOS, a global leader in fashion and tech, is using Logic Apps Standard to help them realize their business goals faster than ever before.

You can start right now, for free, and take us for a spin. Read more on Logic Apps Standard here. If you’re already familiar with Logic Apps and want to understand the differences you can review this article. And as always, let us know what you think and what we can do to help you in your efforts.

– Jon & the Logic Apps team

by Contributed | May 25, 2021 | Technology

We are thrilled to announce that Azure Kubernetes Service on Azure Stack HCI is now generally available. Over the last 8 months we have made 5 public preview releases as we worked closely with customers, and responded to the early feedback that they provided.

You can evaluate AKS-HCI by registering here: https://aka.ms/AKS-HCI-Evaluate.

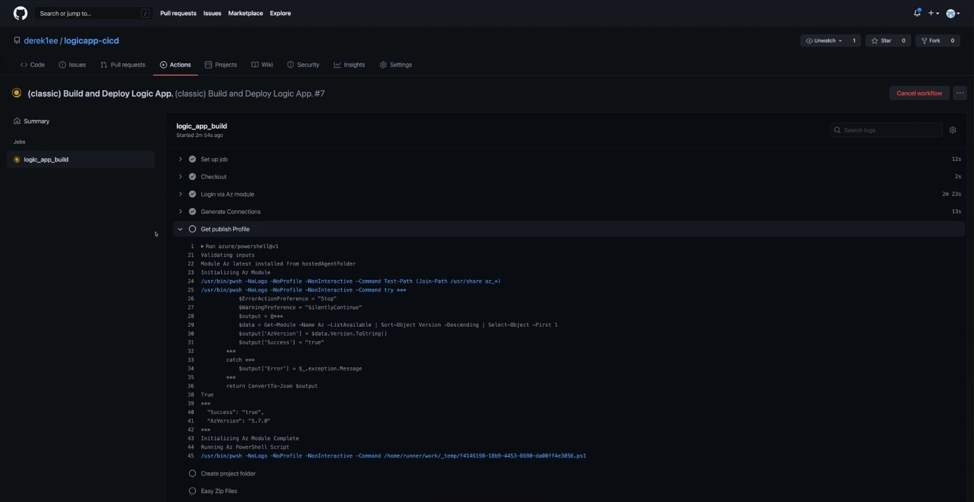

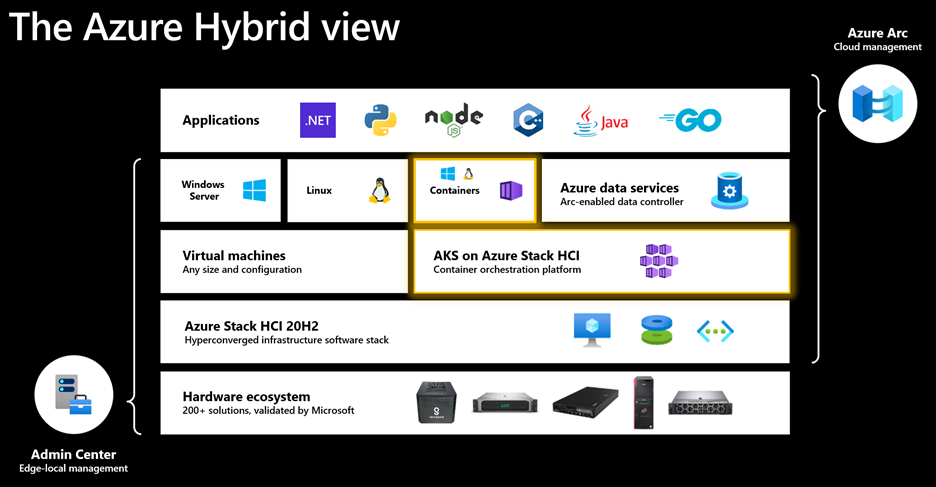

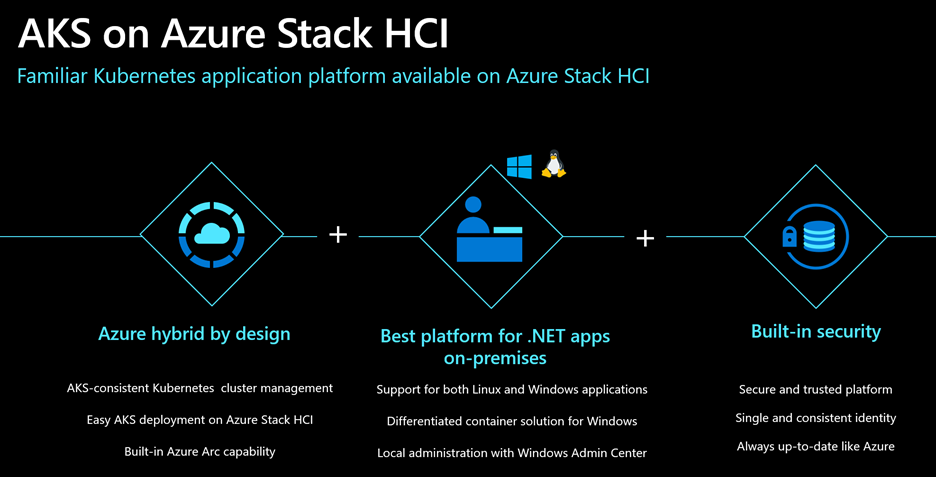

AKS-HCI makes our popular Azure Kubernetes Service (AKS) available on-premises. It fulfills the key need for an on-premises App Platform in the Azure hybrid cloud stack that goes from bare metal all the way into Azure-connected experiences in the cloud.

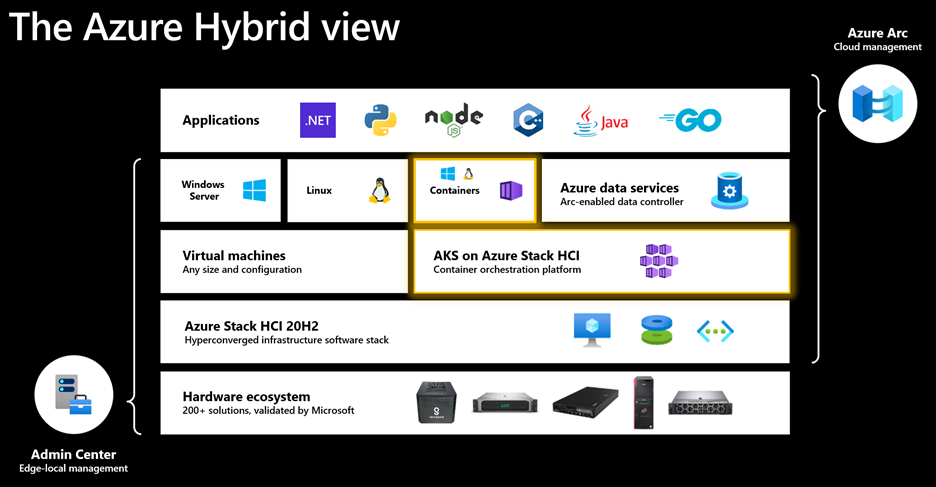

AKS-HCI is a turn-key solution for administrators to easily deploy, manage the lifecycle of, and secure Kubernetes clusters in datacenters and edge locations, and for developers to run and manage modern applications – all in an Azure-consistent manner. Complete end-to-end support and servicing from Microsoft – as a single vendor – makes this a robust Kubernetes application platform that customers can trust with their production workloads.

AKS-HCI is an Azure service that is hybrid by design. It leverages our experience with AKS, follows the AKS design patterns and best-practices, and uses code directly from AKS. This means that you can use AKS-HCI to develop applications on AKS and deploy them unchanged on-premises. It also means that any skills that you learn with AKS on Azure Stack HCI are transferable to AKS as well. With Azure Arc capability built-in, you can manage your fleet of clusters centrally from Azure, deploy applications and apply configuration using GitOps-based configuration management, view and monitor your clusters using Azure Monitor for containers, enforce threat protection using Azure Defender for Kubernetes, apply policies using Azure Policy for Kubernetes, and run Azure services like Arc-enabled Data Services on premises.

No matter how you choose to deploy AKS-HCI – wizard-driven workflow in Windows Admin Center (WAC) or PowerShell – your cluster is ready to host workloads in less than an hour. Under the hood, the deployment takes care of everything that’s required to bring up Kubernetes and run applications. This includes core Kubernetes, container runtime, networking, storage, and security, and operators to manage underlying infrastructure. Scaling the cluster up or down by adding/removing nodes and cluster-updates/upgrades are equally quick and easy. So is ongoing local management through WAC or PowerShell.

AKS-HCI is the best platform for running .NET Core and .NET Framework applications – whether your applications are based on Linux or Windows. The infrastructure required to run containers is included and fully supported. For Windows, AKS-HCI offers an industry-leading solution with advanced features like gMSA support for non-domain-joined hosts, Active Directory integration, and WAC-based application deployment, migration, and management. We want to ensure that AKS-HCI remains the best destination for Windows containers.

With Microsoft, security is not an afterthought. AKS-HCI is kept up to date just like any Azure service. Security updates for the entire solution, including the core Kubernetes platform, network and storage drivers, Linux and Windows container VM images, and other binaries, are delivered by Microsoft. Hardened VM images, single sign-on with Active Directory integration, encryption, certificate management, and integration with Azure Security Center are just a few features – from a long list – that demonstrate leadership in this space.

Customers are using AKS-HCI to run cloud-native workloads, modernize legacy Windows workloads, and run Arc-enabled Data Services on-premises. As more and more Azure services become available to run on-premises, AKS-HCI will continue to be the industry-leading and preferred destination.

Here’s how AKS-HCI is enabling digital transformation at one of our customers – SKF.

“At SKF we continue to execute our vision of digitally transforming the company’s backbone through harnessing the power of technology, interconnecting processes, streamlining operations, and delivering industry-leading digital products and services for our customers. Our focus is on digitalizing all segments of the chain and interconnecting them to unlock the full potential of digital ways of working for our business and customers. Our challenge in manufacturing area is that we have more than 100 factories, and we need to be able to provide them with an IT/OT platform that comes with speed, reliability, and low cost, while providing for critical production systems. SKF plan to standardize on Kubernetes as the primary hosting platform for modern workloads. AKS running both on Azure Cloud and Azure Stack HCI, and the fact that Microsoft has also chosen Kubernetes strategy running their other products, allow us to deploy for example Azure Arc enabled Data Services virtually on any of our new or existing environments. This gives us a tremendous jump start into Lean Digital Transformation of our factories worldwide.” — Sven Vollbehr, Head of Digital Manufacturing at SKF

We are honored and thrilled by the trust you have placed in us and are excited to deliver innovation that empowers you – starting with this GA milestone.

Finally, we would also like to take a moment to thank the entire AKS on Azure Stack HCI team, who have worked tirelessly on this project amid the very trying and tough circumstances of the last year. It has been great to partner with all of you and to get to know you all better.

Thanks!

Ben Armstrong, AKS on Azure Stack HCI Group Program Manager

Dinesh Kumar Govindasamy, AKS on Azure Stack HCI Engineering GC

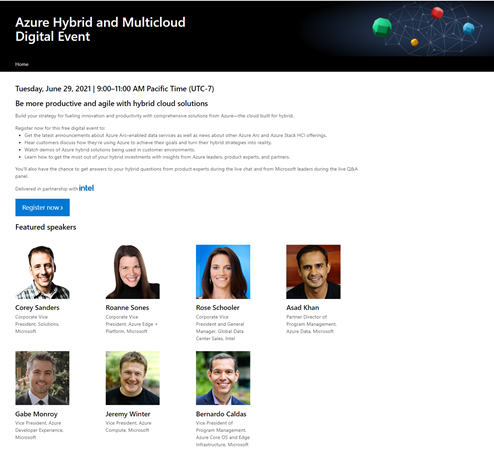

Register for our upcoming Hybrid and Multicloud Digital Event on June 29th!

Learn more about Azure Kubernetes Service on Azure Stack HCI

- Watch our on-demand Build session: Azure Kubernetes Service on Azure Stack HCI (AKS-HCI): A turnkey App Platform for modern .NET apps – with demos featuring e2e deployment, Azure Arc, Azure Monitor, Azure Policy, App modernization using WAC, gMSA for Windows Containers, Vision on Edge IoT app on AKS-HCI, and a customer story by SKF.

- Watch Skilling videos

by Contributed | May 25, 2021 | Technology

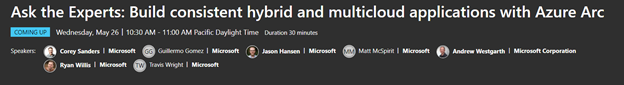

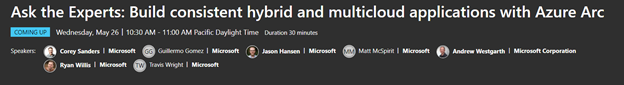

Corey Sanders, CVP of Microsoft Solutions, will be on camera in the session, “ATEBRK233 – Ask the Experts: Build consistent hybrid and multicloud applications with Azure Arc,” at Build 2021 on May 26th, at 10:30AM – 11:00AM PST. Don’t forget to sign up for Build and add this session to your schedule!

He will also be the featured speaker alongside several other leaders in the upcoming Hybrid Digital Event, held on June 29th, at 9:00AM – 11:00AM PST. Register for our upcoming Hybrid and Multicloud Digital Event on June 29th!

by Contributed | May 25, 2021 | Technology

Red Hat and Microsoft have collaborated to bring enterprise solutions to Java Enterprise Edition (EE) / Jakarta EE developers with solution templates on Azure Marketplace. Deploy Red Hat JBoss Enterprise Application Platform (EAP) on Azure Red Hat Enterprise Linux (RHEL) Virtual Machines (VM) and Virtual Machine Scale Sets (VMSS) if you are migrating away from proprietary application servers to a production supported open source application server or from on-premises to the cloud.

Red Hat and Microsoft

The Azure Marketplace offerings for JBoss EAP on RHEL are a joint solution from Red Hat and Microsoft. Red Hat is the world’s leading provider of enterprise open source solutions and a contributor to the Java standards, OpenJDK, MicroProfile, Jakarta EE, and Quarkus. JBoss EAP is a leading open source Java application server platform that is Java EE Certified and Jakarta EE Compliant in both Web Profile and Full Platform. Every JBoss EAP release is tested and supported on a variety of market-leading operating systems, Java Virtual Machines (JVMs), and database combinations. Microsoft Azure is a globally trusted cloud platform with a range of services from VMs on infrastructure as a service (IaaS) to platform as a service (PaaS). This joint solution by Red Hat and Microsoft includes integrated support and software licensing flexibility. Read the press release from Red Hat to learn more about the collaboration and JBoss EAP on Azure.

Why JBoss EAP and RHEL?

Customers heavily invested in Java EE / Jakarta EE who want to migrate to the cloud while preserving their investments with open-source solutions can utilize JBoss EAP on Azure RHEL VM/VMSS solutions. This reduces the time, complexity, and cost of migrating Java applications to Azure as it is fully supported and offers flexible subscription choices with Pay-As-You-Go (PAYG) and Bring-Your-Own-Subscription (BYOS) options. With the Red Hat Enterprise Linux (RHEL) PAYG option, your operating system can be more secure and up to date with Red Hat Update Infrastructure (RHUI) on Azure and can benefit from running older versions with the Extended Lifecycle Support (ELS) option.

Azure Marketplace Offerings

The Azure Marketplace solutions use the latest versions of RHEL, JBoss EAP, and OpenJDK for production deployments. JBoss EAP is offered only as BYOS, and you can select either BYOS or PAYG for RHEL. Once deployed, you can perform an upgrade by running the *yum update* command. These Marketplace solutions create the Azure compute resources to run JBoss EAP on RHEL. Solution configurations include stand-alone and clustered modes on Azure VM and VMSS.

Support and subscriptions

Red Hat Enterprise Linux is available as on-demand PAYG or BYOS via the Red Hat Gold Image model using Red Hat Cloud Access. To use RHEL in the PAYG model, you will need an Azure Subscription. Red Hat JBoss EAP is available through BYOS only for now. Customers will need to supply their Red Hat Subscription Manager (RHSM) credentials along with RHSM Pool ID showing valid JBoss EAP entitlements when deploying this solution.

If you are a new JBoss EAP customer and don’t have a Red Hat subscription, create an account on the Red Hat Customer Portal and you can work directly with Red Hat to get set up. Red Hat provides a variety of flexible billing options.

Benefits of using Azure VMs and VMSS

With Azure VMs and VMSS, you get built-in identity with AAD, Role-Based Access Controls (RBAC), networking, data, storage, and security management. You can troubleshoot with Serial Console or enterprise support and have cloud spend transparency with Azure Cost Management.

In addition, JBoss EAP on VMSS allows automatic scaling of resources, up to 600 VMs. VMSS supports integration with a load balancer or Application Gateway. High availability and resiliency are available across single or multiple Data Centers. VM instance scaling can automatically increase or decrease in response to demand or a defined schedule that you can set after template deployment.

Customers will receive integrated support from Microsoft and Red Hat for any production issues with JBoss EAP on RHEL VM and VMSS solutions.

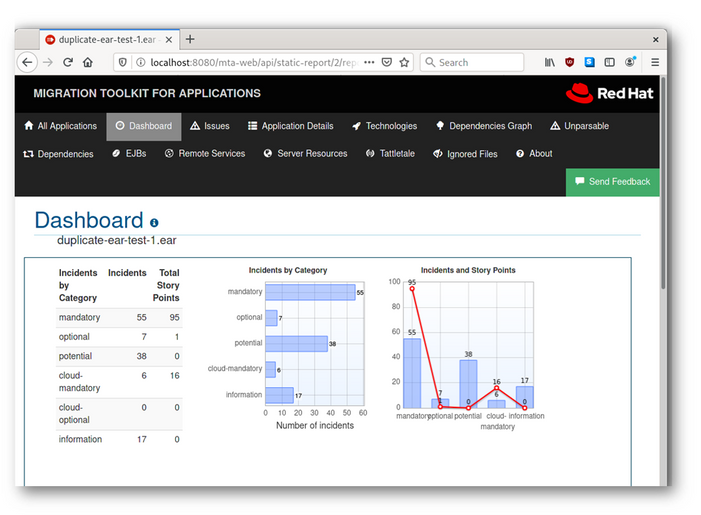

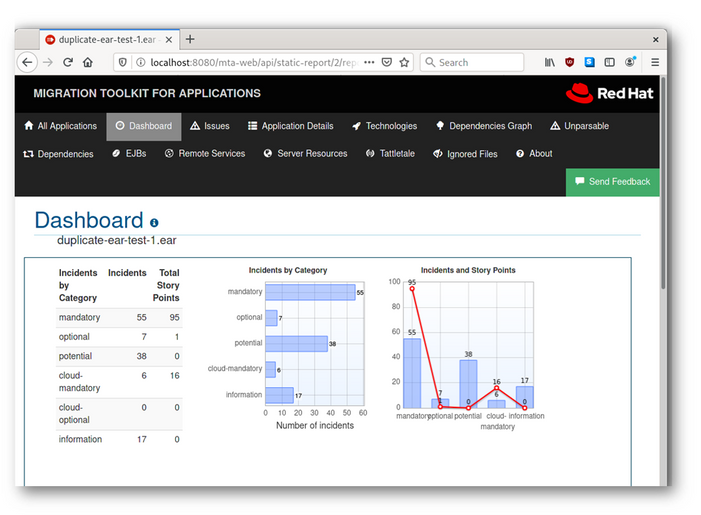

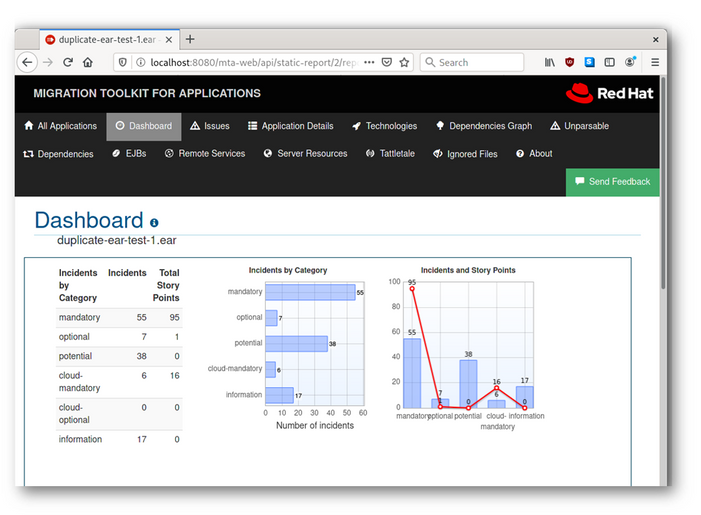

Migrating to JBoss EAP on Azure

The Red Hat Migration Toolkit for Applications (MTA) is a collection of tools that support large-scale Java application modernization and migration projects across a broad range of transformations and use cases. It is recommended to use MTA for planning and executing any JBoss EAP-related migration projects. It accelerates application code analysis, supports effort estimation, accelerates code migration, and helps you move applications to the cloud and containers. MTA allows you to migrate applications from other application servers to Red Hat JBoss EAP.

Image 1 – Red Hat Migration Toolkit for Applications Dashboard

Interested in Other Azure Hosting Options for Red Hat JBoss EAP?

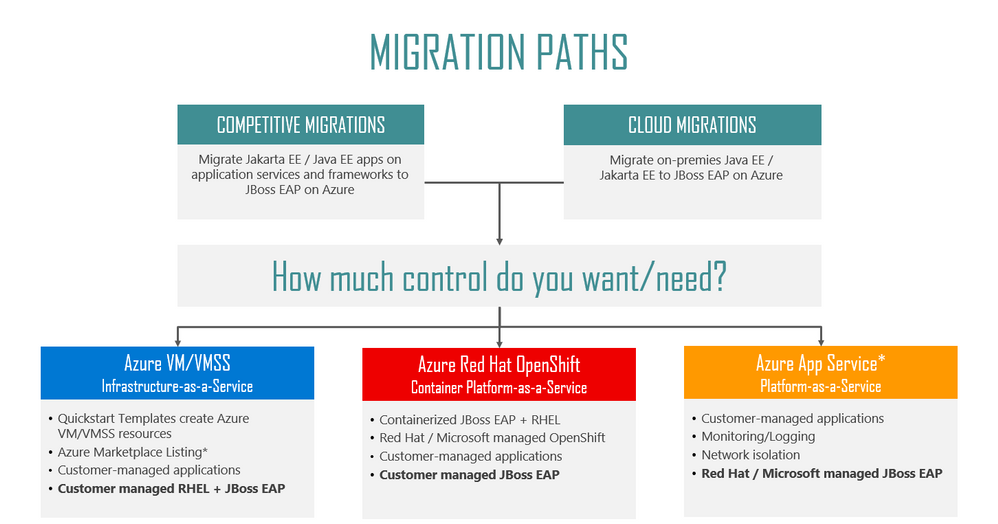

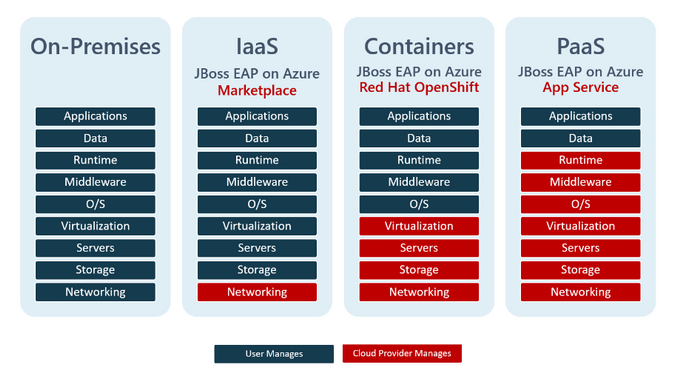

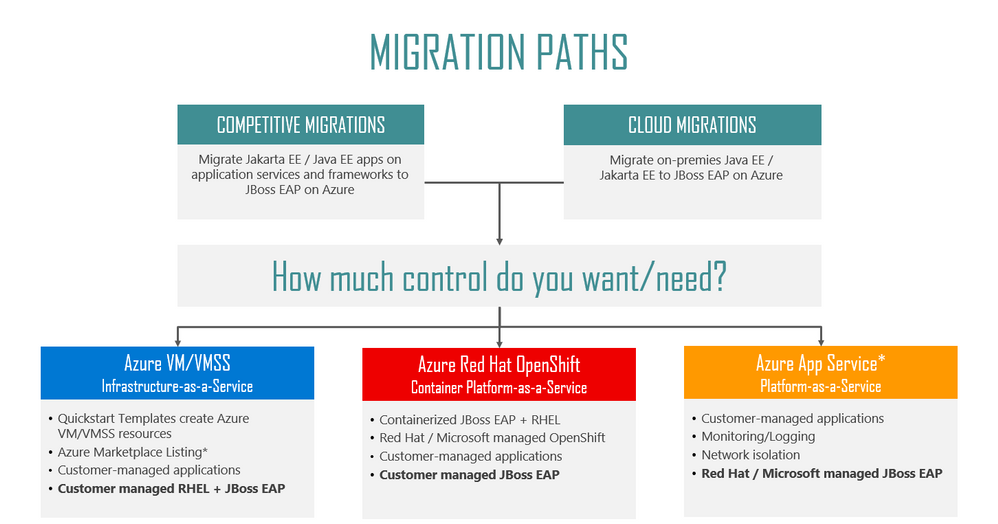

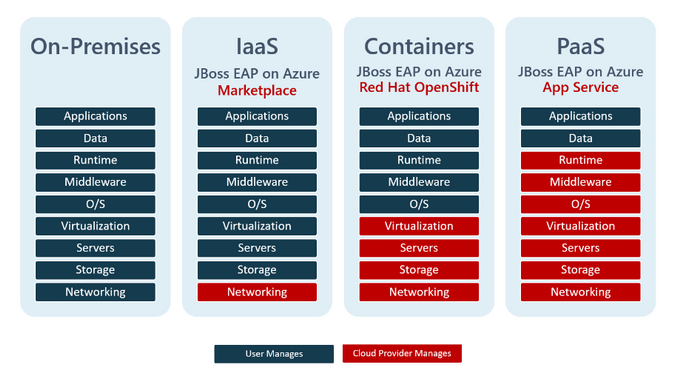

JBoss EAP is also available on Azure Red Hat OpenShift (ARO) and Red Hat OpenShift Container Platform (for multi-cloud strategy) if you are looking for a container-based solution. For a managed hosting option, try JBoss EAP on Azure App Service (in preview). These services include integrated support where you can start your ticket with either Microsoft or Red Hat. So, the real question should be “How much control do you want or need?” Check out the flow chart and technology stack images below to help you identify the best-suited service for your JBoss EAP apps on Azure.

Image 2 – Migration Paths to Red Hat JBoss EAP on Azure

Image 3 – Comparison of Customer vs. Cloud Provider Responsibilities for JBoss EAP Hosting Options on Azure

Try it!

Here are great resources to help you get started.

by Contributed | May 25, 2021 | Business, Microsoft 365, Microsoft Teams, Technology

This article is contributed. See the original author and article here.

The world around us has dramatically changed since the last Microsoft Build. Every customer and partner is now focused on the new realities of hybrid work—enabling people to work from anywhere, at any time, and on any device. Developers are at the heart of this transformation, and at Microsoft, we’ve seen evidence of this in…

The post Build the next generation of collaborative apps for hybrid work appeared first on Microsoft 365 Blog.


by Contributed | May 25, 2021 | Technology

This article is contributed. See the original author and article here.

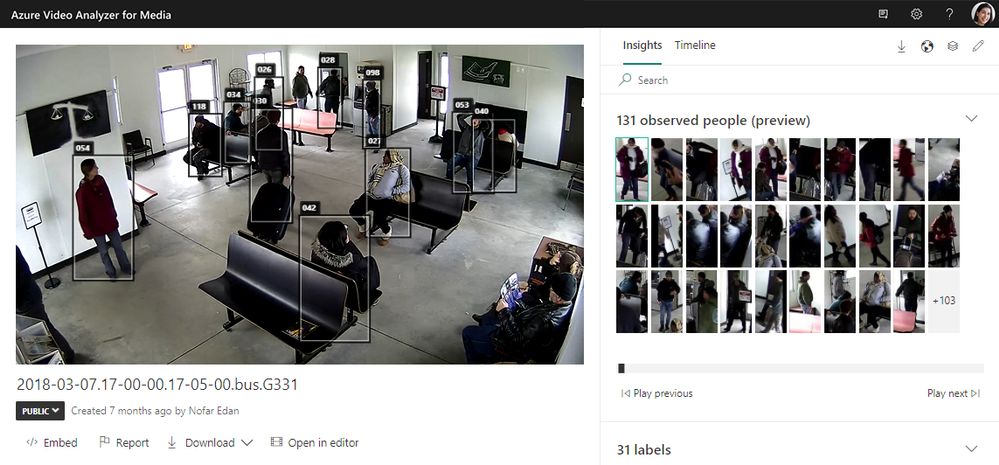

At Microsoft Build 2021, the Azure Video Indexer service is becoming part of the new set of Applied AI services, which aim to help developers accelerate time to value for AI workloads compared with building solutions from scratch. Azure Video Analyzer and Azure Video Analyzer for Media are designed to do that specifically for video AI workloads: they enable developers to build video AI solutions easily, without deep knowledge of media or of AI and machine learning, from edge to cloud and from live to batch.

As part of this change, Video Indexer will be renamed Azure Video Analyzer for Media (a.k.a. AVAM). Under the new name, we continue to work hard to bring you the insights and capabilities needed to get more out of your cloud media archives: improve searchability, enable new user scenarios and accessibility, and open new monetization opportunities.

So, what else is new in AVAM (other than the name :) )?

– New insight types added to provide greater support to analysis, discoverability, and accessibility needs

- Audio effects detection, with closed caption files enrichment (public preview in trial and paid accounts): ability to detect non-speech audio effects, such as gunshots, explosions, dogs barking, and crowd reactions.

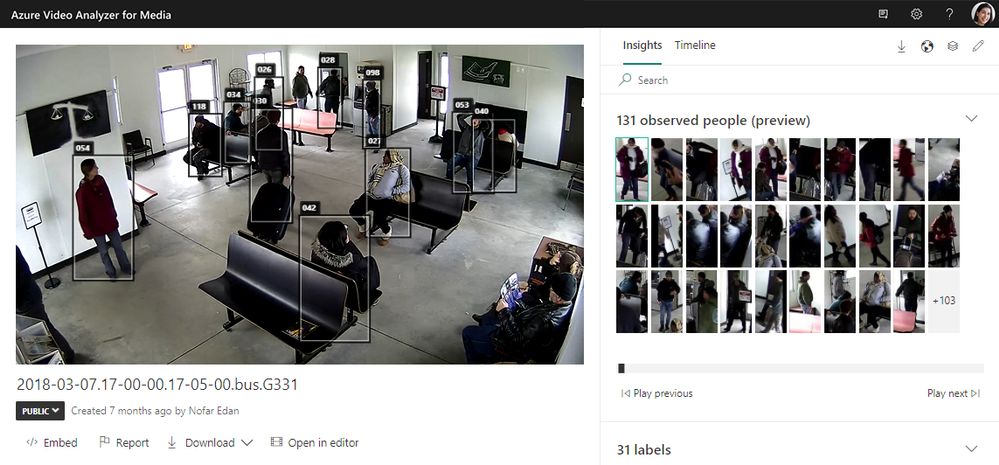

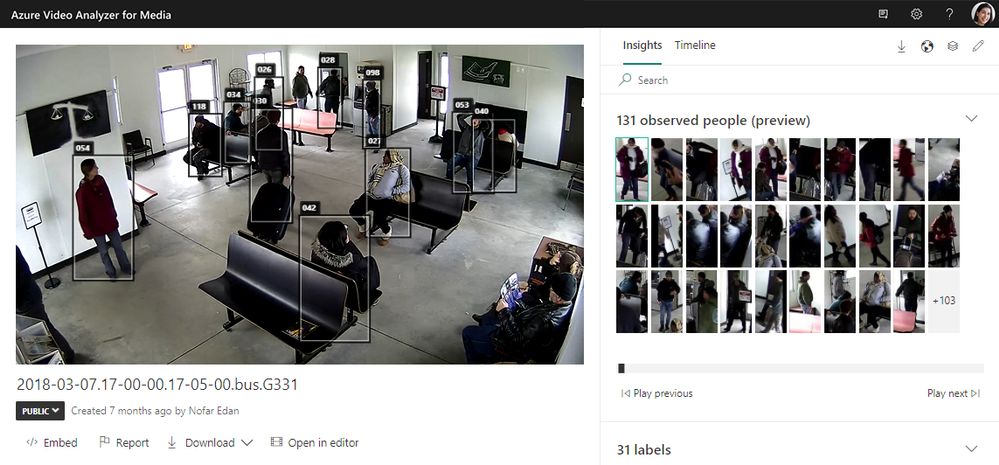

- Observed people tracing (public preview in trial and paid accounts): ability to detect standing people spotted in the video and trace their path with bounding boxes.

– Improvements to existing insights

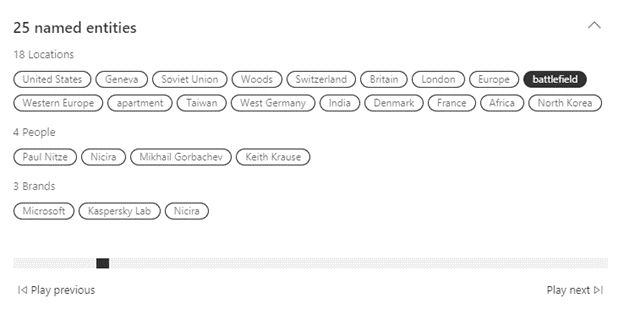

- Improvements to named entities (locations, people, and brands).

- Improvements to face recognition pipeline.

– Extending global support

- Expanded regional availability.

- Multiple new languages supported for transcription.

– Learn from others

- We are proud to share how our partners WPP and MediaValet use the service to provide better media experiences to their customers.

– New in our developers’ community

- New developer portal enabling anyone to get started with the API easily and get answers fast.

- Open-source code to help you leverage the newly added widget customization.

- Open-source solution to help you add custom search to your video libraries.

- Open-source solution to help you add de-duplication to your video libraries.

– New “Azure blue” visual theme

More about all those great additions and announcements in this blog!

Discoverability, accessibility, and event analysis support through new insights

In our journey to allow for a wider and richer analysis of your video archives, we are happy to introduce two new insight types into the AVAM pipelines that can be leveraged in multiple scenarios: Observed people tracing and Audio effects detection. Both new insights are now available in public preview on trial and paid accounts.

Observed People Tracing detects people who appear in the video, including the times at which they appear and the location of each person in the different video frames (the person’s bounding box). The bounding boxes of the detected people are also displayed in the video while it plays, which makes them easy to follow. Observed People information enables video investigators to perform post-event analysis of events such as a bank robbery or a workplace accident, as well as trend analysis over time, for example learning how customers move across aisles in a shopping mall or how much time they spend in checkout lines.

People observed in the player page


Audio effects detection detects and classifies the audio effects in the non-speech segments of the content. Audio effects can be used for discoverability scenarios, for example finding the set of videos in the archive, and the specific times within those videos, in which a gunshot was detected. They can also be used for accessibility scenarios, enriching the video transcription with non-speech effects to provide more context for people who are hard of hearing and make content much more accessible to them. This is relevant both for organizational scenarios (e.g., watching a training or keynote session) and in the media and entertainment industry (e.g., watching a movie). The set of audio effects extracted is: Gunshot, Glass shatter, Alarm, Siren, Explosion, Dog Bark, Screaming, Laughter, Crowd reactions (cheering, clapping, and booing), and Silence. The detected audio effects are returned as part of the insights JSON and, optionally, in the closed caption files extracted from the video.

Audio effects found in the player page
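To make the discoverability scenario above a little more concrete, here is a minimal Python sketch that scans an insights document for every occurrence of a given audio effect. Please note that the JSON layout used here (the `videos`, `audioEffects`, `type`, `instances`, `start`, and `end` fields) is a simplified assumption made up for illustration, not the documented AVAM schema, and `find_effect` is a hypothetical helper rather than part of any SDK.

```python
import json

# Hypothetical, simplified insights document. Every field name below is an
# assumption for illustration only; check the real insights JSON before reuse.
SAMPLE_INSIGHTS = json.loads("""
{
  "videos": [
    {"name": "clip-a",
     "audioEffects": [
       {"type": "Gunshot",  "instances": [{"start": "0:00:12", "end": "0:00:13"}]},
       {"type": "Laughter", "instances": [{"start": "0:01:05", "end": "0:01:09"}]}
     ]},
    {"name": "clip-b",
     "audioEffects": [
       {"type": "Siren", "instances": [{"start": "0:00:30", "end": "0:00:41"}]}
     ]}
  ]
}
""")


def find_effect(insights, effect_type):
    """Return (video name, start, end) for each instance of effect_type.

    The comparison is case-insensitive so "gunshot" matches "Gunshot".
    """
    wanted = effect_type.lower()
    hits = []
    for video in insights.get("videos", []):
        for effect in video.get("audioEffects", []):
            if effect.get("type", "").lower() != wanted:
                continue
            for instance in effect.get("instances", []):
                hits.append((video["name"], instance["start"], instance["end"]))
    return hits


if __name__ == "__main__":
    for name, start, end in find_effect(SAMPLE_INSIGHTS, "gunshot"):
        print(f"{name}: gunshot from {start} to {end}")
```

The same loop could feed a search index or a caption enrichment step; only the field names would need to be adapted to the actual insights output.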


The two newly added insights are currently available when indexing a video with “advanced” preset selected, in audio and video analysis respectively. During the preview period there is no additional fee for choosing the advanced preset over the standard one, so it’s a great opportunity to go ahead and try it on your content!

We keep improving, constantly.

AVAM at its core provides an out-of-the-box pipeline of a rich set of insights, already fully integrated together. In addition to enriching this pipeline with new insights, we keep looking at the ones that are already there and at how to improve and refine them, to make sure you get the most insight into your media content.

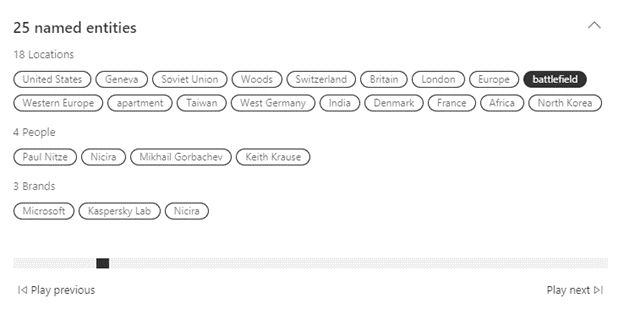

Just recently we released a major improvement to the named entities insight of AVAM. Named entities are locations, people, and brands identified in both the transcription and the on-screen text extracted by AVAM, based on natural language processing algorithms. The latest improvement identifies a much larger range of people and locations, and identifies people and locations in context even when they are not well known. For example, from the transcript text “Dan went home”, AVAM will extract ‘Dan’ as a person and ‘home’ as a location.

Panel of named entities extracted


We also just released several improvements to the AVAM face detection and recognition pipeline resulting in better accuracy of face recognition, especially when the thumbnail quality isn’t so good.

Expanding global support

To enable organizations across the globe to leverage AVAM for their business needs, we are constantly working on expanding the service’s regional availability as well as the languages supported for transcription.

The latest regions deployed and now available to customers for creating an AVAM paid account are North Central US, West US, and Canada Central. Additionally, in the next two months we plan to deploy to Central US, France Central, Brazil South, West Central US, and Korea Central.

AVAM’s set of supported languages has also expanded and now includes Norwegian, Swedish, Finnish, Canadian French, Thai, multiple Arabic dialects, Turkish, Dutch, Chinese (Cantonese), Czech, and Polish. As with everything else in AVAM, the new languages are available to customers through both the API and the portal.

Learn from others

We are proud to share recent partnership announcements, where Azure Video Analyzer for Media empowers companies to get more out of media content and provide new and exciting capabilities.

WPP recently announced a partnership with Microsoft that leverages Azure Video Analyzer for Media to index metadata extracted from their content from a central location accessible from anywhere. The partnership aims to create an innovative cloud platform that allows creative teams from across WPP’s global network to produce campaigns for clients from any location around the world.

Additionally, MediaValet, a leading digital asset management company, uses Azure Video Analyzer for Media in its Audio/Video Intelligence (AVI) tool to help its customers significantly improve asset discoverability and gain greater insight into their audio and video assets through automated metadata tagging. “With Azure Video Analyzer for Media, we deliver more ways for our customers to analyze their assets,” says Lozano. “They can isolate standard and cognitive metadata, find assets quickly (even within a library of 6 million assets, for example) and then home in on specific insights within those assets.”

New in the AVAM developer community

To use AVAM at scale, automate processes, and integrate with organizational applications and infrastructure, organizations use AVAM’s REST API. To help you get started with the API easily, we recently revamped the AVAM API portal, which now provides one central location with intuitive access to all our development resources, such as descriptions of the API calls and the ability to try them out, Stack Overflow, GitHub, and the support requests forum. You can read all about getting started with our API here.

New AVAM developer portal home page


Speaking of development resources, AVAM has its own GitHub repository where you can find code samples and solutions on top of AVAM, to help you integrate with it or just inspire you about the different solutions that can be built with the service. Two of the latest additions to our GitHub are code samples:

First, we added example code for the new widget customization capabilities of AVAM. Widget customization enables developers to embed AVAM widgets in their own applications in advanced ways, including loading the JSON from an external location, styling the widgets to fit your application, and adding your own custom insights calculated elsewhere.

Second, the Commercial Software Engineering (CSE) team created two end-to-end solutions demonstrating how to leverage AVAM for different scenarios:

One solution demonstrates using AVAM’s stable frames and Azure Machine Learning to build custom search video solutions that complement AVAM’s out-of-the-box insights with additional information, tailored to the specific organization’s custom data and search terms using Azure Cognitive Search.

Enriching AVAM results with “dog types” model


The other solution demonstrates how to perform de-duplication on media files. It includes a workflow built with Logic Apps and Durable Functions that takes a video (among other content types) as input and sends it to AVAM. It de-duplicates files by comparing file hashes and, to save cost, re-uses the output of previously analyzed files for any duplicates. The output of the analysis is put onto a service bus for downstream services to consume.
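The hash-based de-duplication idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the code from the open-source solution: `DedupAnalyzer` and `fake_analyze` are hypothetical names, SHA-256 is our choice of hash (the post does not say which one the solution uses), and an in-memory dictionary stands in for whatever persistent store a real workflow would need.

```python
import hashlib


def content_hash(data: bytes) -> str:
    """Hash the file content; identical bytes always give the same digest."""
    return hashlib.sha256(data).hexdigest()


class DedupAnalyzer:
    """Runs an expensive analysis once per distinct file content."""

    def __init__(self, analyze):
        self._analyze = analyze  # callable that performs the real analysis
        self._results = {}       # digest -> previously computed output
        self.calls = 0           # how many times the real analysis ran

    def process(self, data: bytes):
        key = content_hash(data)
        if key not in self._results:
            # First time we see this content: pay for the analysis.
            self.calls += 1
            self._results[key] = self._analyze(data)
        # Duplicate content: re-use the stored output.
        return self._results[key]


def fake_analyze(data: bytes) -> dict:
    """Hypothetical stand-in for sending the file to a video analysis service."""
    return {"size": len(data)}


if __name__ == "__main__":
    analyzer = DedupAnalyzer(fake_analyze)
    for blob in (b"video-1", b"video-2", b"video-1"):
        analyzer.process(blob)
    print(f"analysis ran {analyzer.calls} times for 3 uploads")
```

Hashing the content rather than comparing file names means a re-uploaded or renamed copy is still recognized as a duplicate, which is where the cost saving comes from.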

And one more fun addition to close with

Lastly, to celebrate the new name, we are expanding the set of visual themes available in the AVAM experience with a new “Azure blue” theme! Now you can choose to use the “Classic” Video Indexer green, pivot to “Dark” theme or choose to go with “Azure blue” which feels right at home as part of the Azure family of services.

New Theme selector setting


Looking to get your feedback!

In closing, we’d like to invite you to provide feedback on all recent enhancements, especially those released in public preview. We collect your feedback and adjust the design where needed before releasing them as generally available capabilities. For those of you who are new to our technology, we’d encourage you to get started today with these helpful resources:

by Contributed | May 25, 2021 | Technology

This article is contributed. See the original author and article here.

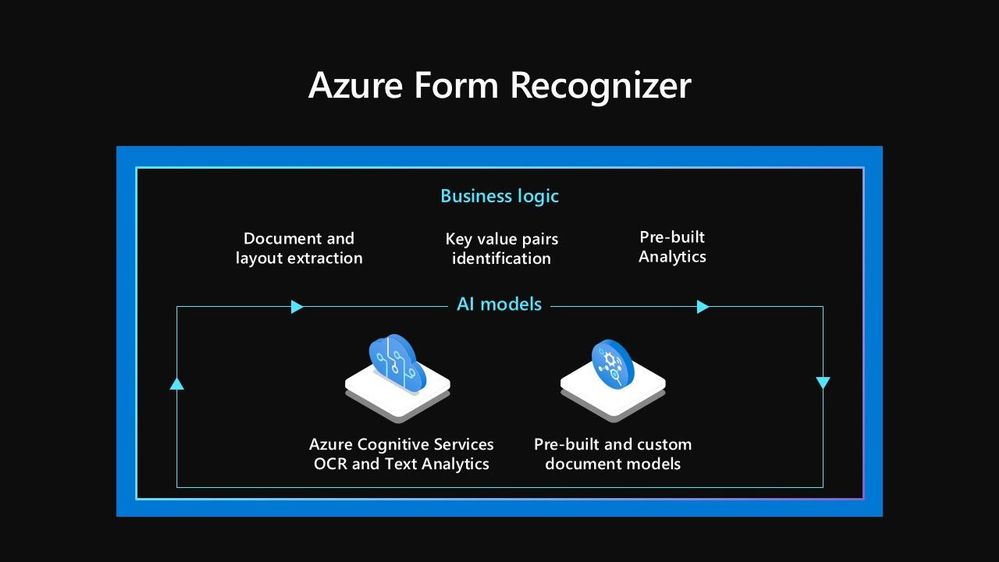

As more organizations adopt AI to accelerate their digital transformation, customers have increasingly told us about the need for services that enable faster application of AI to common scenarios, without requiring any machine learning expertise. An example of such a service is Azure Form Recognizer, which automates paperwork processing by bringing together vision and language AI capabilities with business logic to isolate and extract key information. Another is Azure Metrics Advisor, which helps organizations quickly detect and diagnose issues, as well as trigger alert notifications. Customers like Samsung and Chevron are already using these services in their mission-critical workloads.

Today we are bringing such services together into a new product category, Azure Applied AI Services. Applied AI Services solve the most common challenges we’re seeing businesses face today, such as processing documents, scaling customer service, searching proprietary archives for pertinent information, analyzing content of all types, and creating accessible experiences. Development teams can build AI solutions that meet these needs faster than ever before with Applied AI Services. The category includes Azure Form Recognizer, Azure Metrics Advisor, Azure Cognitive Search, Azure Bot Service, and Azure Immersive Reader. We are also introducing Azure Video Analyzer, which brings Live Video Analytics and Video Indexer closer together.

How do Azure Applied AI Services make faster development possible?

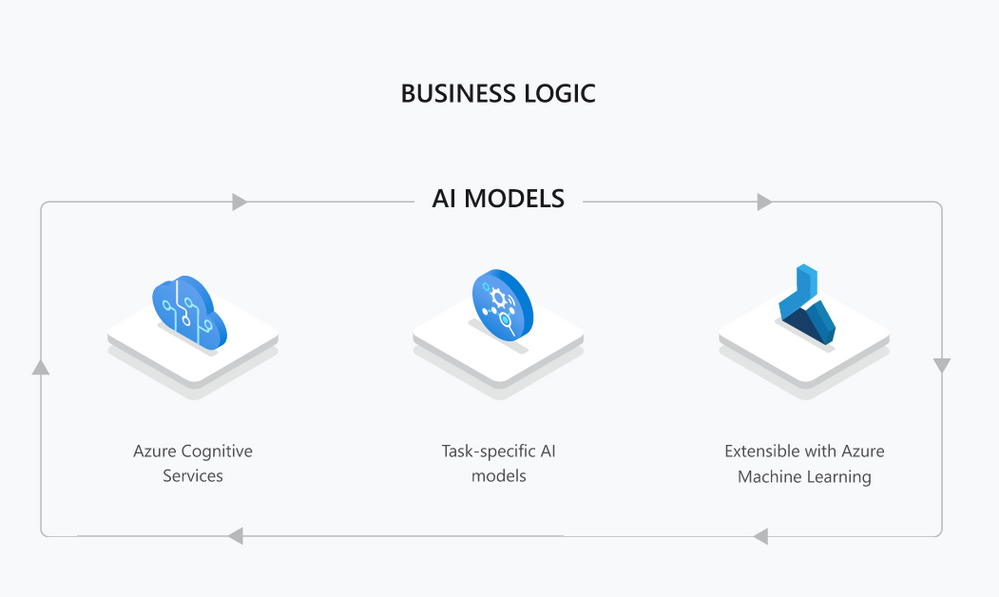

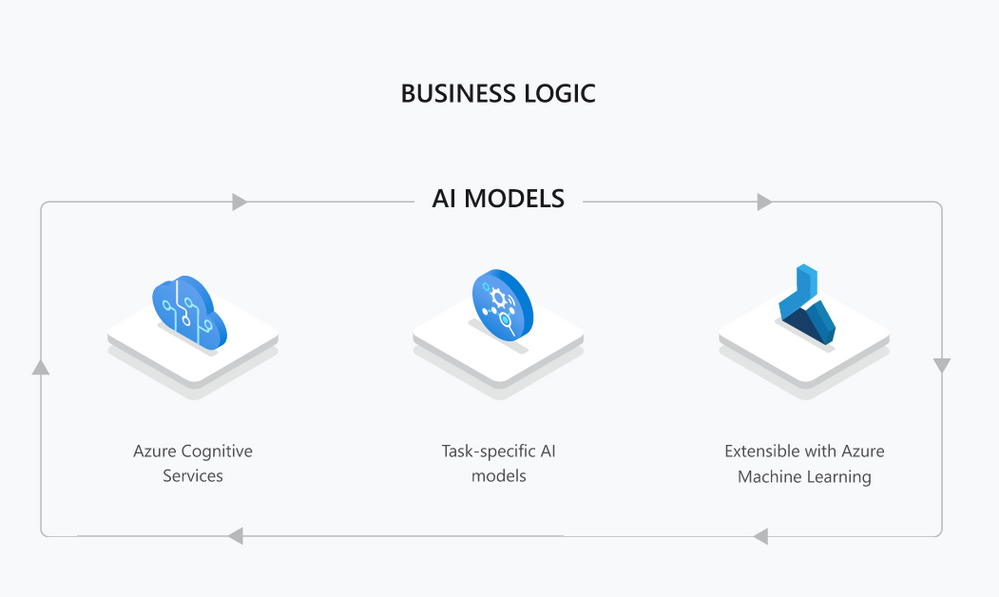

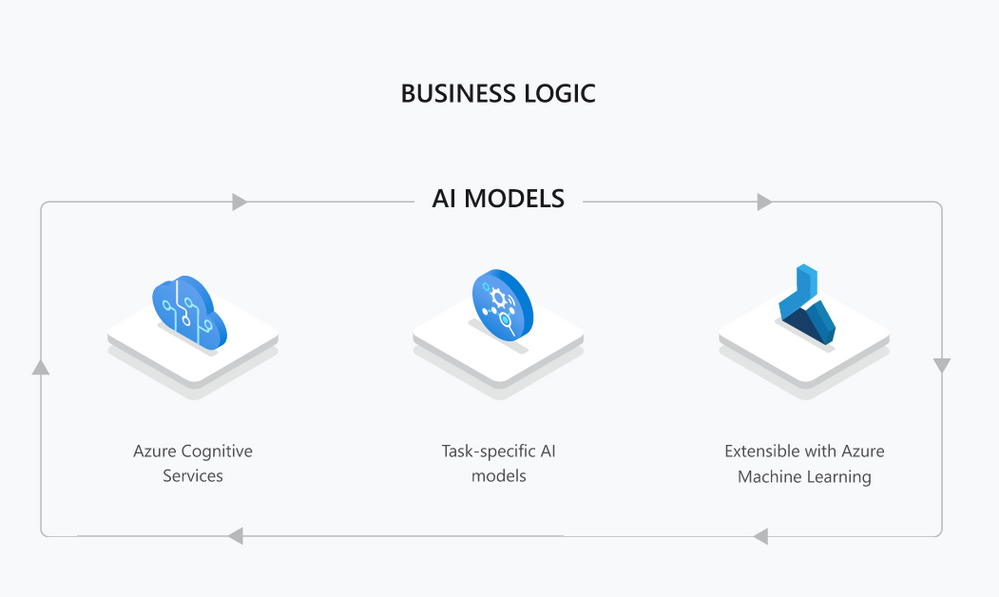

Azure Applied AI Models

Under the hood of Azure Applied AI Services you’ll find the same world-class Azure Cognitive Services: flexible, reliable tools for all language, vision, decision-making, and speech related AI needs. Cognitive Services are the general-purpose building blocks that allow developers to build any AI-powered solution. Cognitive Services offer SDKs (Software Development Kits), REST APIs, connectors for easy integration with Azure serverless offerings or Power Platform, and even user interface (UI) based tools like Speech Studio, as well as Continuous Integration and Deployment (CI/CD) options.

Azure Applied AI Services builds on top of Cognitive Services, combining various technologies to solve specific problems.

Applied AI Services build on top of Cognitive Services with additional task-specific AI models and business logic to solve common problems organizations encounter, regardless of industry. Digital asset management, information extraction from documents, and the need to analyze and react to real-time data are common to most organizations. In addition, Applied AI Services accelerate development by providing reliable services that are compliant with our Responsible AI Principles. You can keep control of your own data and minimize latency for mission-critical use cases by running Applied AI Services in containers on your edge devices.

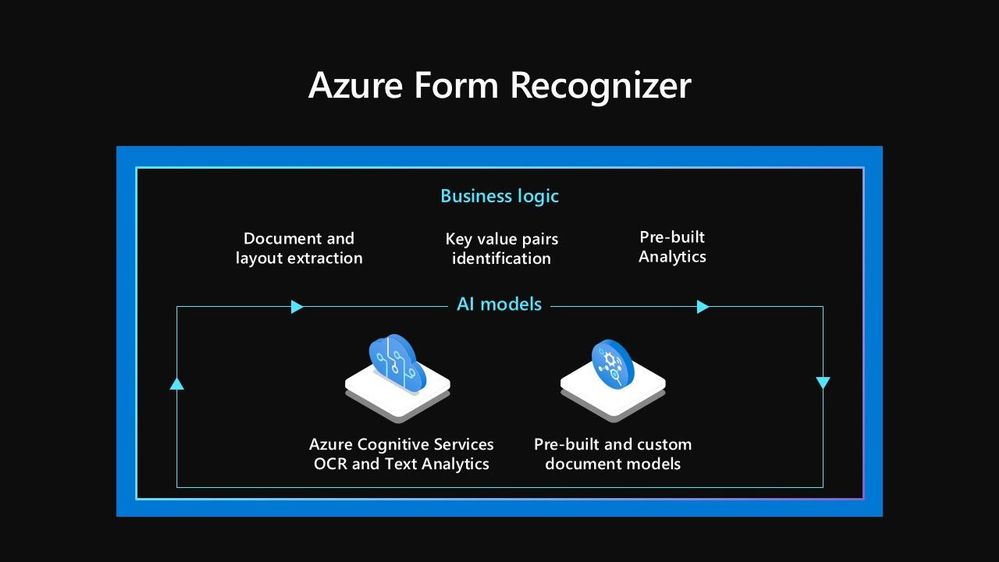

Let’s dive into an example of a common scenario for AI. Businesses of all kinds, from mom-and-pop shops to mass manufacturing companies, have to process various documents. Azure Form Recognizer targets this scenario by extracting information from forms and images into structured data to automate data entry. Form Recognizer builds on top of Cognitive Services’ Optical Character Recognition (OCR) capability to recognize text, and on Text Analytics and Custom Text to relate key-value pairs, such as matching a name field’s label to the name value on an ID. Form Recognizer also includes additional task-specific models to reliably identify documents such as worldwide passports and U.S. driver’s licenses, and it complies with Microsoft’s Responsible AI Principles.

Azure Form Recognizer Business Logic & AI Models


While the service is highly specialized, stakeholders ranging from developers new to AI to data scientists can use Azure Form Recognizer. A developer can build complex document processing functionality with minimal effort using SDKs and REST APIs. A domain expert can then use the very same service to further train a custom model for industry-specific forms with complex structures. A machine learning expert can bring their own custom machine learning models, optimized for their use case, to extend Form Recognizer. The same development options are available across Applied AI Services.

What are the latest updates for Applied AI Services?

Today we are introducing Azure Video Analyzer, bringing Live Video Analytics and Video Indexer closer together. Azure Video Analyzer (formerly Live Video Analytics, in preview) delivers the developer platform for video analytics, and Azure Video Analyzer for Media (formerly Video Indexer, generally available) delivers AI solutions targeted at Media & Entertainment scenarios. With Azure Video Analyzer, you can process live video at the edge for low latency, record video in the cloud, or record only relevant video clips on the edge for limited-bandwidth deployments. You can analyze video with the AI of your choice by leveraging services such as Cognitive Services Custom Vision and Spatial Analysis, open source models, partner models, or your own custom-built models. With Azure Video Analyzer for Media, your video and audio files are processed through a rich set of out-of-the-box machine learning models, all pre-integrated and covering the different channels of the content, including Vision models to detect people and scenes and Language models, so you can quickly extract insights from your libraries.

Azure Video AI Solutions


Organizations across the globe are already using Azure Video Analyzer to optimize various processes: Lufthansa uses it to improve flight turnaround times, and Dow uses it to enhance workplace safety with leak detection. Media and Entertainment organizations such as MediaValet use Azure Video Analyzer to extract more value out of their content by finding what they need quickly, scaling across millions of assets.

Azure Metrics Advisor, generally available today, builds on top of Anomaly Detector and makes integration of data, root cause diagnosis, and customizing alerts fast and easy through a readymade visualization and customization UI. Samsung uses Azure Metrics Advisor. In the past, Samsung had relied on a rule-based system in monitoring the health of their Smart TV service. But rules can’t cover all the scenarios and the previous approach generated lots of noise. Using Azure Metrics Advisor, Samsung created a new monitoring solution in China to enhance their previous system and to provide automated, granular root cause analysis that helps engineers locate issues and shorten the time to resolution.

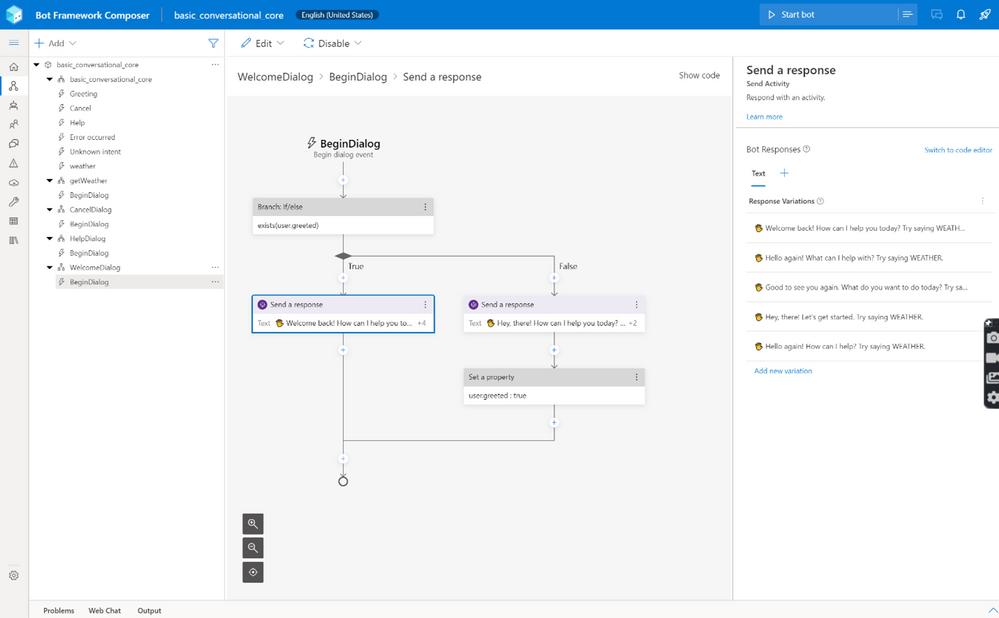

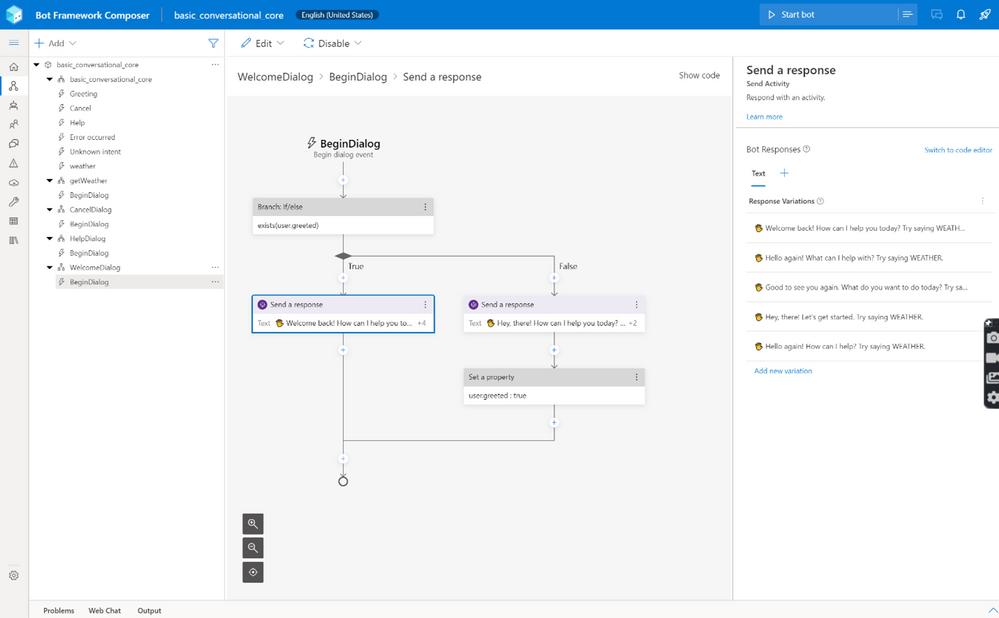

With the latest enhancements to Azure Bot Service, it is easier to build, test, and publish text, speech, or telephony-based bots through an integrated development experience. Varun Nagalia, Director of Unilever HR Systems and Employee Experience Apps, says: “Una was built on the Microsoft Bot framework foundation. We used the Virtual Assistant templates to streamline the bot’s business logic and handling of user intent in an efficient way. We were able to leverage these templates, adapting them to our business needs and turn around nearly 40 global features in less than 12 months.”

Azure Bot Service


Solving automation, accessibility, and scalability problems with Applied AI Services is faster than ever. When Applied AI Services do not cover a task, Cognitive Services remain available as all-purpose APIs that developers can use to build their own solutions. Check out the Azure AI Build session to learn more and see the demos in action.

Read these blog posts for a deeper dive into the latest from these Azure Applied AI Services:
