by Contributed | Jun 30, 2021 | Technology

This article is contributed. See the original author and article here.

We are pleased to announce two new Learning Paths available right now focused on Researchers. We have curated over 7 hours of content that will get you up and ready on Azure.

We want to hear from you. Make sure that you fill out a quick survey so that the future learning paths we build are tailored to you.

Whether you need to get started from scratch or are only interested in more advanced topics, we’ve got everything you need to be productive as soon as possible. If you need to start from scratch, let me introduce you to the first learning path.

The Introduction to Cloud Computing for Researchers introduces you to the fundamentals of Azure and cloud computing. This learning path covers how resources are grouped, how to store data in Azure, and how to allocate computing resources to run workloads on.

Then, if security and cost are more important to you, Cloud Security and Cost Management for Researchers is your next stop. You will learn how to secure your resources and your data as well as ensure that you are not exhausting all your Azure credits within the same day.

Don’t forget to fill out this quick survey. Your feedback matters, and future learning paths will be built based on it. What can we do to empower you as a researcher?

Let us know!

by Contributed | Jun 29, 2021 | Technology


Hello everyone, my name is Zoheb Shaikh and I’m a Solution Engineer working with the Microsoft Mission Critical team (SfMC). Today I’ll share an interesting issue related to an Azure AD quota limitation that we came across recently.

I had a customer who exhausted their AAD quota, which put them at significant health risk.

Before I share more details, let’s look at what your organization’s AAD quota is and why it matters.

Simply put, Azure AD has a defined quota for the number of directory objects (resources) that can be created and stored in AAD.

By default, users of the Free edition of Azure Active Directory can create a maximum of 50,000 Azure AD resources in a single tenant. If you have at least one verified domain, the default Azure AD service quota for your organization is extended to 300,000 Azure AD resources. The Azure AD service quota for organizations created by self-service sign-up remains 50,000 Azure AD resources, even after you perform an internal admin takeover and the organization is converted to a managed tenant with at least one verified domain. This service limit is unrelated to the pricing tier limit of 500,000 resources on the Azure AD pricing page. To go beyond the default quota, you must contact Microsoft Support.

For information about AAD quotas, see Service limits and restrictions – Azure Active Directory.

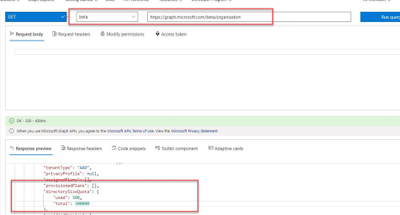

How to check your AAD Quota limit

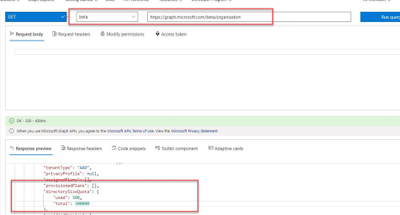

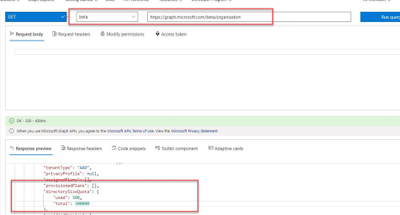

Test this in Graph Explorer: https://developer.microsoft.com/en-us/graph/graph-explorer

Sign into Graph Explorer with your account that has access to the directory.

Run beta query (GET) https://graph.microsoft.com/beta/organization
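The scripts later in this post read two counters from this response, `used` and `total`, under the directory size quota. The following is a minimal Python sketch of how a usage percentage could be derived from such a response. The sample payload, its numbers, the exact property casing (`directorySizeQuota`), and the helper name `quota_usage` are assumptions made for illustration, not output from a real tenant.

```python
import json

# Hypothetical sample shaped like the /beta/organization response.
# The numbers are illustrative and the property casing is an assumption.
SAMPLE_RESPONSE = """
{
  "value": [
    {"directorySizeQuota": {"used": 45000, "total": 300000}}
  ]
}
"""


def quota_usage(response_text):
    """Return (used, total, percent_used) from an organization response."""
    body = json.loads(response_text)
    quota = body["value"][0]["directorySizeQuota"]
    used, total = quota["used"], quota["total"]
    return used, total, used / total * 100


if __name__ == "__main__":
    used, total, percent = quota_usage(SAMPLE_RESPONSE)
    print(f"{used} of {total} directory objects used ({percent:.1f}%)")
```

Tracking the percentage rather than the raw count keeps the check meaningful if the quota is later raised.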

Now that you understand what the AAD quota is and how to view it, let’s get back to the customer scenario: how the AAD quota affected them and what you can learn from it.

The customer was an organization of approximately 100k users, using multiple Microsoft cloud services such as Teams, EXO, Azure IaaS, and PaaS.

As part of their cloud modernization journey, they were doing a massive rollout of Intune across the organization after completing all the testing and a PoC.

Our proactive monitoring and CXP teams did inform the customer that their Azure AD object count was increasing at an unusual speed, but the customer never expected it to exceed their AAD quota.

One fine morning I woke up to a call from our SfMC Critsit manager: my customer’s AAD quota was exhausted and their AAD Connect was unable to synchronize any new objects. As part of the reactive arm of SfMC, we got into a meeting with the customer along with our Azure Rapid Response team to find the cause of the problem.

We decided on the following approach to the issue:

- Confirm AAD Quota exhaustion and what objects are consuming AAD Resources

- Remove stale objects from AAD.

- Reach out to Product Group asking for a Quota increase for this specific customer.

How to check what objects are consuming AAD Quota limit

While I was engaged in this case, we did it the hard way (by exporting all registered objects to Excel and then using pivot tables to analyze them), but there is now an easier way, described below:

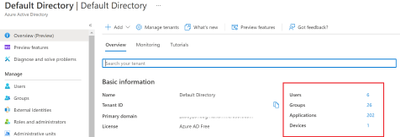

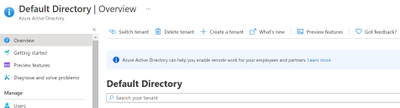

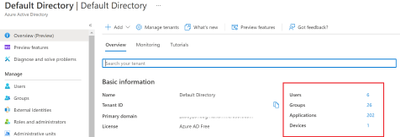

- Login to Azure AD Admin Center (https://aad.portal.azure.com)

- In Azure AD, click Preview features (where this view is found at present)

- This gives you an overview of the object status of your AAD

We created the table below to find out exactly what was going on in the environment. In it we measured how many objects existed in total and how many had been created in the last few days.

| Object | Count | New count in last 24 hours | New count in last 1 week |
| --- | --- | --- | --- |
| Users | # | # | # |
| Groups | # | # | # |
| Devices | # | # | # |
| Contacts | # | # | # |
| Applications | # | # | # |
| Deleted Applications | # | # | # |
| Service Principals | # | # | # |
| Roles | # | # | # |
| Extension properties | # | # | # |
| TOTAL | ## | ## | ## |
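A table like the one above can be filled in from an export of directory objects. The sketch below is one hedged way to do the counting in Python; the record layout (`type` and `created` keys), the sample rows, and the function name `summarize` are hypothetical and stand in for whatever your export actually contains.

```python
from collections import Counter
from datetime import datetime, timedelta, timezone


def summarize(objects, now):
    """Per object type: (total, created in last 24 hours, created in last 7 days)."""
    total, last_day, last_week = Counter(), Counter(), Counter()
    for obj in objects:
        kind = obj["type"]
        age = now - obj["created"]
        total[kind] += 1
        if age <= timedelta(days=1):
            last_day[kind] += 1
        if age <= timedelta(days=7):
            last_week[kind] += 1
    return {kind: (total[kind], last_day[kind], last_week[kind]) for kind in total}


# Hypothetical export rows; a real export would hold one row per directory object.
NOW = datetime(2021, 6, 29, tzinfo=timezone.utc)
SAMPLE = [
    {"type": "Devices", "created": NOW - timedelta(hours=3)},
    {"type": "Devices", "created": NOW - timedelta(days=4)},
    {"type": "Devices", "created": NOW - timedelta(days=400)},
    {"type": "Users", "created": NOW - timedelta(days=30)},
]

if __name__ == "__main__":
    for kind, (count, day, week) in summarize(SAMPLE, NOW).items():
        print(f"{kind}: total={count}, last 24 hours={day}, last week={week}")
```

Comparing the 24-hour and 1-week columns per type is what makes an unusual growth rate in a single object type stand out.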

We are not sharing numbers here, but devices were consuming about 50% of the AAD object quota, and their count had grown by a few thousand in the last 24 hours.

This told us that thousands of devices were being registered every day, which had resulted in the AAD quota exhaustion.

Based on our analysis, to get out of this situation we recommended that the customer delete stale devices that had not been used for more than 1 year. This alone enabled us to delete approximately 50k objects.
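The "not used for more than 1 year" rule can be expressed as a simple date filter. The following Python sketch shows the idea on made-up records; the keys `name` and `last_sign_in`, the sample devices, and the function name `find_stale_devices` are hypothetical, and a real cleanup should be reviewed before anything is deleted.

```python
from datetime import datetime, timedelta, timezone


def find_stale_devices(devices, now, max_age_days=365):
    """Return names of devices whose last sign-in is older than max_age_days.

    Devices without a recorded sign-in are skipped rather than flagged,
    so they can be reviewed by hand.
    """
    cutoff = now - timedelta(days=max_age_days)
    return [
        device["name"]
        for device in devices
        if device["last_sign_in"] is not None and device["last_sign_in"] < cutoff
    ]


# Hypothetical device records for illustration only.
NOW = datetime(2021, 6, 29, tzinfo=timezone.utc)
DEVICES = [
    {"name": "laptop-01", "last_sign_in": NOW - timedelta(days=10)},
    {"name": "phone-17", "last_sign_in": NOW - timedelta(days=500)},
    {"name": "kiosk-03", "last_sign_in": None},
]

if __name__ == "__main__":
    print(find_stale_devices(DEVICES, NOW))
```

Skipping devices with no sign-in timestamp is a deliberately cautious choice in this sketch; whether such devices count as stale is a decision for the organization.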

Deleting these 50k objects took the customer out of the critical situation and gave them some breathing space, at least for the next couple of weeks, while we figured out what exactly was going wrong and how to keep the problem from recurring.

Being part of the Microsoft Solution for Mission Critical team, we always go above and beyond to support our customers. The first step is always to quickly resolve the reactive issue, then to identify the root cause, and finally, through our Proactive Delivery Methodology, to make sure it does not happen again.

We followed the approach below to identify the root cause and ensure it would not happen again:

1. Configuring alerts for validation and quota exhaustion
   - Daily alerts for the Azure AD object count
   - Alerts in case the AAD object quota limit is exhausted
2. More detailed review of the root cause of the issue
3. Creating a baseline for the AAD objects needed in the organization
   - Baseline to be created based on the number of objects in the organization (users, computers, etc.)
   - What is the expected count?
4. Increasing the object quota based on the baseline created, if needed

In the next sections we will go through each of the above actions in more detail.

1. Configuring alerts for validation and quota exhaustion

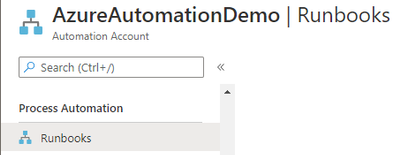

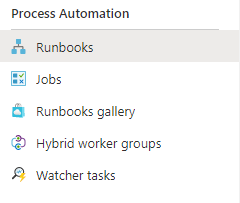

Option #1: Using Azure Automation

We wanted to add alerts so that the customer is notified when they are nearing the limit. We achieved this with Azure Automation, as described below, with the help of my colleague Eddy Ng from Malaysia.

Below is the step-by-step process for setting up the alerts once you have created an Azure Automation account:

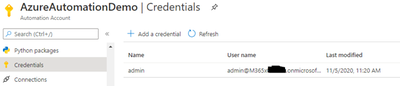

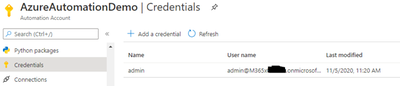

- Create the credential in the credential vault. Click the + sign, add a credential, and enter its details, including a name. The credential must have sufficient rights to connect to Azure AD and must not trigger an MFA prompt. The name is important: it is referenced from the script.

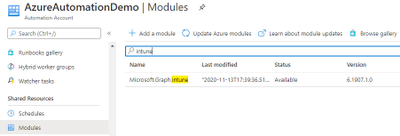

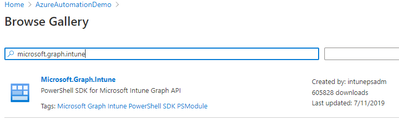

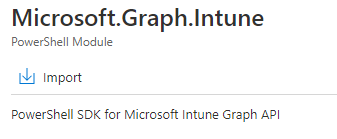

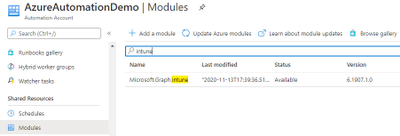

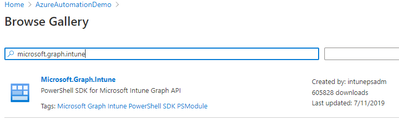

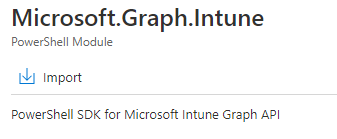

- Next, install the Microsoft Graph Intune module under Modules. Click Browse Gallery, search for Microsoft.Graph.Intune, click the result, and select Import.

Going forward, we recommend the Microsoft Graph PowerShell SDK.

Create a new Runbook and give it a name.

Runbook type: PowerShell

Paste the code below:

    # Get the admin credential from the credential vault.
    # Change "admin" to the name of your credential.
    $credObject = Get-AutomationPSCredential -Name 'admin'

    # Initiate the connection to Microsoft Graph
    $connection = Connect-MSGraph -PSCredential $credObject

    # Set up the Graph API URL
    $graphApiVersion = "beta"
    $Resource = 'organization?$select=directorysizequota'
    $uri = "https://graph.microsoft.com/$graphApiVersion/$($Resource)"

    # Run the query via the Graph API
    $data = Invoke-MSGraphRequest -Url $uri

    # Get the data and validate it.
    # Change the number 50000 to the object count you want to alert on.
    $maxsize = 50000
    if ([int]($data.value.directorysizequota.used) -gt $maxsize)
    {
        Write-Output "Directory Size : $($data.value.directorysizequota.used) is greater than $maxsize limit"
        Write-Error "Directory Size : $($data.value.directorysizequota.used) is greater than $maxsize limit"
        # Terminating error, so that the job status is reported as Failed
        Write-Error " " -ErrorAction Stop
    }
    else
    {
        Write-Output "Directory Size : $($data.value.directorysizequota.used)"
    }

- Click Save and Publish

- Click Link to Schedule

- Populate the schedule accordingly. For example, run daily at 12pm UTC.

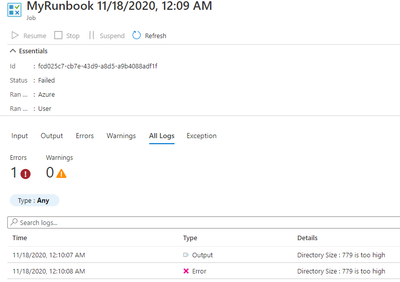

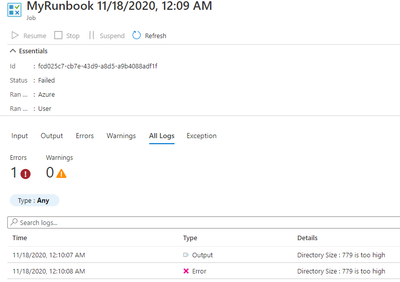

- Results from each run job can be found under Jobs.

- If the count is above the threshold, the job status will be Failed, as a result of the -ErrorAction Stop in the script.

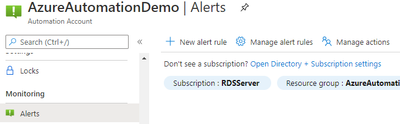

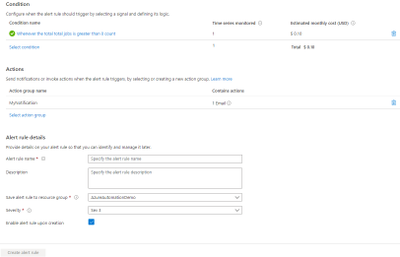

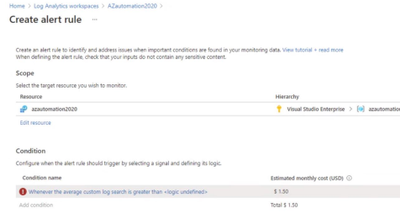

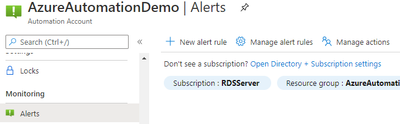

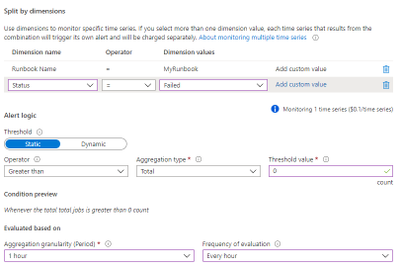

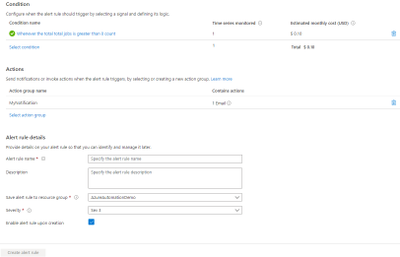

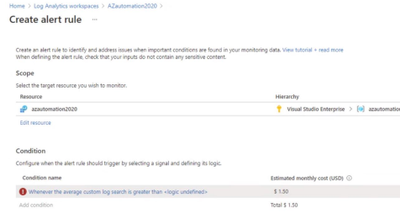

- Setup Alerts to take advantage of this by creating New Alert Rule

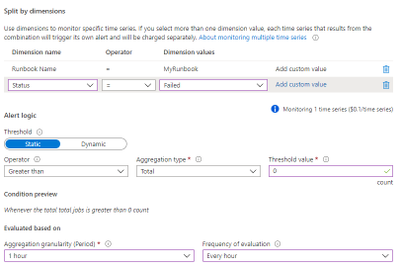

- Click select condition. Signal name “Total Job”. Follow the below. Amend “MyRunbook” accordingly. When finished, click done

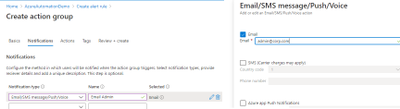

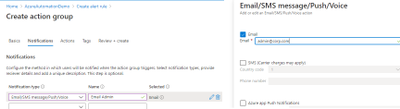

- Select Action Group. Create Action Group.

- Populate info accordingly for Basics.

- Populate info similar to below for Notifications.

- Click Review + Create

- Once done, scroll down to Alert Rule Details and fill in the details, such as Name, Description, and Severity.

Results: When the directory size exceeds the limit, the admins will get an alert via email.

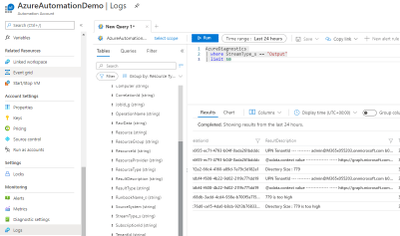

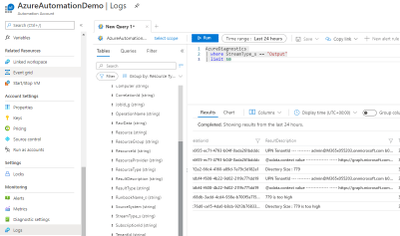

You can then proceed to click on the Runbook and select Jobs. Click on All Logs to see the error output for each individual job run.

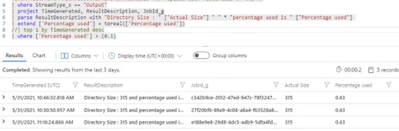

If you wish to review the previous results in bulk, go to Logs and run the Kusto query below. Scroll to the right for ResultDescription. Assuming the schedule is set to run daily, a limit of 50 covers the past 50 days.

    AzureDiagnostics
    | where StreamType_s == "Output"
    | limit 50

Option #2: An alternative way of configuring alerts for validation and quota exhaustion

While I was writing this blog, my colleague Alin Stanciu from Romania suggested what is probably a better way to configure alerts for quota exhaustion.

Replace the script in the Azure Automation account with the one below:

    # Get the admin credential from the credential vault.
    # Change "azalerts" to the name of your credential.
    $credObject = Get-AutomationPSCredential -Name 'azalerts'

    # Initiate the connection to Microsoft Graph
    $connection = Connect-MSGraph -PSCredential $credObject

    # Set up the Graph API URL
    $graphApiVersion = "beta"
    $Resource = 'organization?$select=directorysizequota'
    $uri = "https://graph.microsoft.com/$graphApiVersion/$($Resource)"

    # Run the query via the Graph API
    $data = Invoke-MSGraphRequest -Url $uri

    # Calculate the percentage of the quota that is in use
    $usedpercentage = (($data.value.directorysizequota.used / $data.value.directorysizequota.total) * 100)

    # Optional in-script check, left disabled. Define $maxsize as a percentage before enabling it.
    #if ($usedpercentage -gt $maxsize)
    #{write-output "Directory Size : $($data.value.directorysizequota.used) is greater than 90 percent"}
    #else
    #{
    Write-Output "Directory Size : $($data.value.directorysizequota.used) and percentage used is $($usedpercentage)"
    #}

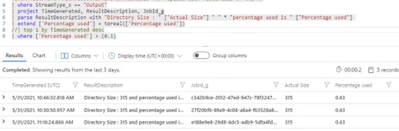

You can then use Azure Log Analytics to alert on the output, as below:

    AzureDiagnostics
    | where Category == "JobStreams"
    | where ResourceId == "" // replace with the resource ID of the Automation account
    | where StreamType_s == "Output"
    | project TimeGenerated, ResultDescription, JobId_g
    | parse ResultDescription with "Directory Size : " ["Actual Size"] " " * "percentage used is " ["Percentage used"]
    | extend ['Percentage used'] = toreal(['Percentage used'])
    | top 1 by TimeGenerated desc
    | where ['Percentage used'] > (0.1) // alert threshold in percent; adjust to your needs

This can help you get an overview of percentage used
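To make the parsing step in the query above easier to follow, here is a hedged Python sketch that extracts the same two values from the runbook's output line and applies a threshold. The regular expression, the function names, and the sample line are assumptions for illustration; the sketch also assumes the percentage is printed with a dot as the decimal separator.

```python
import re

# Matches the line written by the runbook, for example:
# "Directory Size : 45000 and percentage used is 15"
PATTERN = re.compile(
    r"Directory Size : (?P<used>\d+) and percentage used is (?P<percent>\d+(?:\.\d+)?)"
)


def parse_output(line):
    """Return (used, percent_used), or None if the line does not match."""
    match = PATTERN.search(line)
    if match is None:
        return None
    return int(match.group("used")), float(match.group("percent"))


def should_alert(line, threshold_percent):
    """True when the parsed percentage is above the threshold."""
    parsed = parse_output(line)
    return parsed is not None and parsed[1] > threshold_percent


if __name__ == "__main__":
    sample = "Directory Size : 45000 and percentage used is 15"
    print(parse_output(sample))
    print(should_alert(sample, threshold_percent=90))
```

As in the query, lines that do not match the expected format produce no alert, so a changed output format in the runbook would need a matching change here.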

Alert configuration using Log Analytics can be done as shown in the screenshots below.

You can define the threshold at which you want to be alerted.

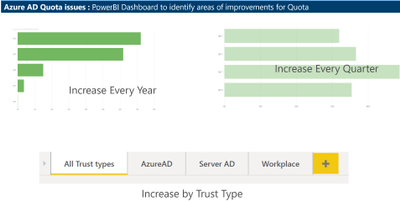

2. More detailed review of the root cause of the issue

In this step we needed to identify why so many devices were being registered every day.

We exported the list of all registered devices in AAD to Excel and filtered it by registration type.

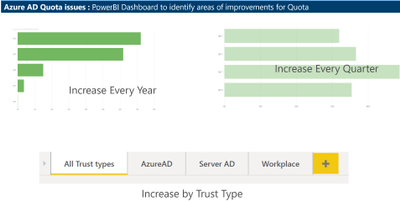

Thanks to Claudiu Dinisoara & Turgay Sahtiyan for helping create a nice dashboard in Power BI based on these logs, which helped us understand the root cause much better.

This dashboard showed us the types of device registrations and the overall count across the years. We found that there had been a significant increase in AAD device registrations due to the Intune rollout across the organization.

We checked with customer’s Intune support team, and they confirmed that this increase was expected.

3. Creating a baseline for the number of AAD objects we can have

The trickiest part of this issue was coming up with a baseline number for AAD objects.

The customer had approximately 100k users, and we came up with the table below for the baseline.

Please note that these numbers were specific to this customer’s scenario and discussions, and they may differ for your organization.

| Object | Count | Why this number |
| --- | --- | --- |
| Users | 105000 | The total number of production users is 100k; the other 5,000 could be administration accounts, users pending deletion, or guest users. |
| Groups | 60000 | We felt 60k was a high number, but they were using groups extensively for Intune and other policy management tasks. We recommended that they work on reducing this number in the future. |
| Devices | 200000 | We assumed 2 registered devices per user (mobile and laptop), plus a few stale devices. |
| Contacts | 16000 | These objects were already low, so we took the present values as the baseline. |
| Applications | 1500 | These objects were already low, so we took the present values as the baseline. |
| Service Principals | 3000 | These objects were already low, so we took the present values as the baseline. |
| Roles | 100 | These objects were already low, so we took the present values as the baseline. |
| TOTAL | 385600 | Sum of the rows above. |

We compared the expected baseline with their quota limit of 500,000 and came up with a strategy for cleanups and for keeping the object counts in line with the baseline.
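The comparison between the baseline and the quota is simple arithmetic, and a short Python sketch makes it easy to rerun with your own numbers. The counts below are copied from the baseline table and the 500,000 quota is the figure quoted for this customer; the variable and function names are only illustrative.

```python
# Baseline object counts copied from the table in this post.
BASELINE = {
    "Users": 105000,
    "Groups": 60000,
    "Devices": 200000,
    "Contacts": 16000,
    "Applications": 1500,
    "Service Principals": 3000,
    "Roles": 100,
}

# Quota limit quoted for this customer.
QUOTA = 500000


def baseline_summary(baseline, quota):
    """Return (total objects, remaining headroom, percent of quota used)."""
    total = sum(baseline.values())
    return total, quota - total, total / quota * 100


if __name__ == "__main__":
    total, headroom, percent = baseline_summary(BASELINE, QUOTA)
    print(f"Baseline total: {total}")
    print(f"Headroom under quota: {headroom}")
    print(f"Share of quota used: {percent:.2f}%")
```

With these figures the baseline totals 385,600 objects, which leaves 114,400 objects of headroom under the quota.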

4. Increasing the Object Quota based on the baseline created if needed.

Their AAD quota limit was 500,000 objects; however, our baseline indicated that they needed around 400,000 objects.

Hope this helps,

Zoheb

by Contributed | Jun 29, 2021 | Technology


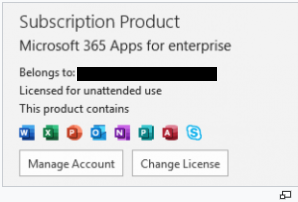

Robotic Process Automation (RPA), also known as unattended use, allows an organization to license certain account types that are designed to be used in process automation.

There are two types of RPA:

- Attended RPA – The collaboration between the user and the bot, which is also known as the Virtual Assistant.

- Unattended RPA – The execution of tasks and interactions independent of the user. With unattended RPA, the bot can run automation on its own.

Over the years organizations have used automation within Office apps, but the process always had to be done with volume license versions of Office due to licensing limitations and feasibility of an organization licensing RPA accounts. Microsoft 365 E3: Unattended License now enables IT admins to automate and use the same version/build of Office that their end users are running. Keep in mind that Office was developed with the end user in mind and not automation. Although this new license allows for amazing automation, we suggest you follow the considerations for RPA outlined here: https://aka.ms/unattendedofficeconsiderations.

Requirements for using unattended RPA

The following are the requirements for using unattended RPA with Microsoft 365 Apps for enterprise:

- Microsoft 365 E3: Unattended License – Required on any account running an RPA unattended task. The account can be a user account, a system/service account, or a robot account.

- Microsoft 365 Apps for enterprise, version 2009 or later.

- RPA is currently only available for Office apps for Windows.

Enabling RPA scenarios

If you want to enable RPA in Microsoft 365 Apps for enterprise, click here for step-by-step instructions.

What does it look like?

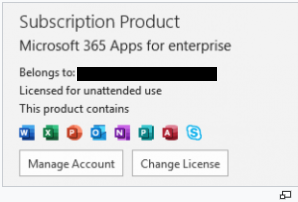

To validate if the Microsoft 365 E3: Unattended License is active from a Microsoft 365 App, navigate to File > Account. Here’s an example in the screen shot below:

Another way to validate if the license is active is to check the folder “%LocalAppData%\Microsoft\Office\Licenses\5\Unattended”.

If a user is signed in, but there is no RPA entitlement (Unattended License) assigned or activated on the account, the user may see the following message within their Microsoft 365 Apps:

Assigning licenses to devices in Microsoft 365 admin center

To deploy an Unattended License, you simply purchase the required number of Microsoft 365 licenses and assign the license to a user in the Microsoft 365 admin center. To enable this functionality on a device, use the group policy for currently installed devices and/or the command line.

For more information on unattended RPA with Microsoft 365 Apps for enterprise, check out this Microsoft Docs article.

Continue the conversation by joining us in the Microsoft 365 Tech Community! Whether you have product questions or just want to stay informed with the latest updates on new releases, tools, and blogs, Microsoft 365 Tech Community is your go-to resource to stay connected!

by Contributed | Jun 29, 2021 | Technology


Following the announcement of Windows 11 on June 24, we wanted to update you on what is coming to Universal Print with Windows 11. If you want to get a head start on these new Universal Print capabilities, head over to the Windows Insider blog to read how you can access the first Windows 11 Insider Preview build. Subscribe to the Windows Insider blog if you want to be updated on our progress to general availability of Windows 11 later this year.

Universal Print is a modern print solution that organizations can use to manage their print infrastructure through serverless cloud services from Microsoft. Since the release of Windows 10, version 1903, we have supported this cloud service with a Universal Print driver. The advantage of that driver is you no longer have to maintain printer drivers on your desktops. There is one driver to rule them all.

Using Universal Print with Universal Print ready printers, which we blogged about a few weeks ago, gives admins and employees the best experience. Those printers are easy to manage in the cloud and expose their functionality to the user.

With Windows 11, we are upgrading the user experience with printers in several ways. First is the general print experience to go along with the updated user experience with Windows 11.

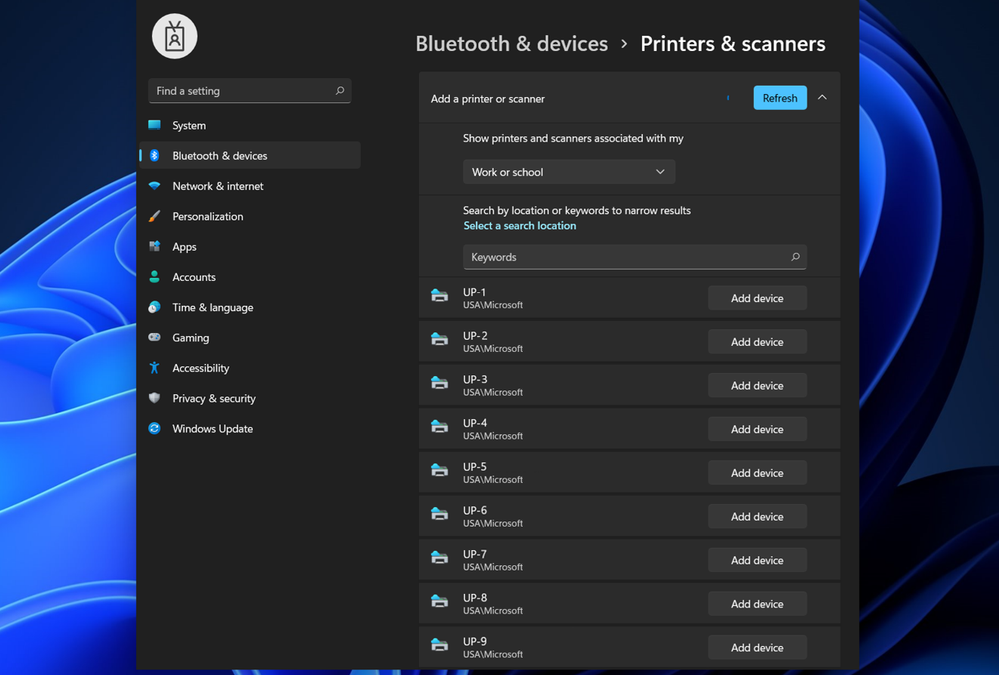

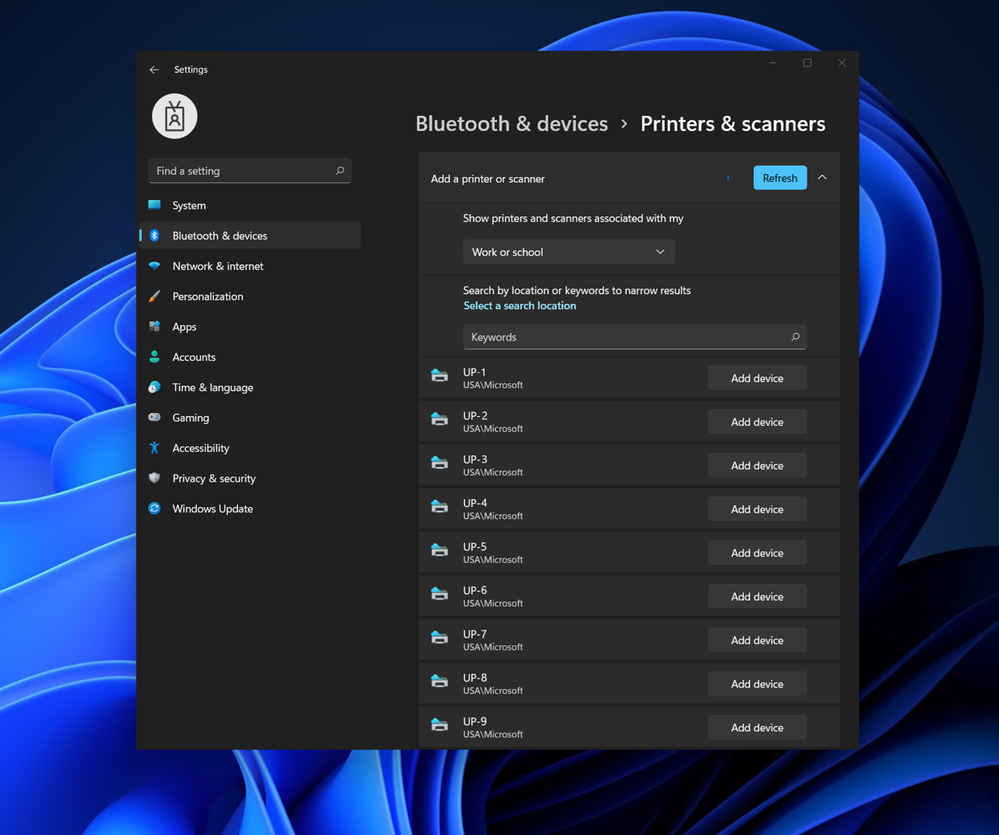

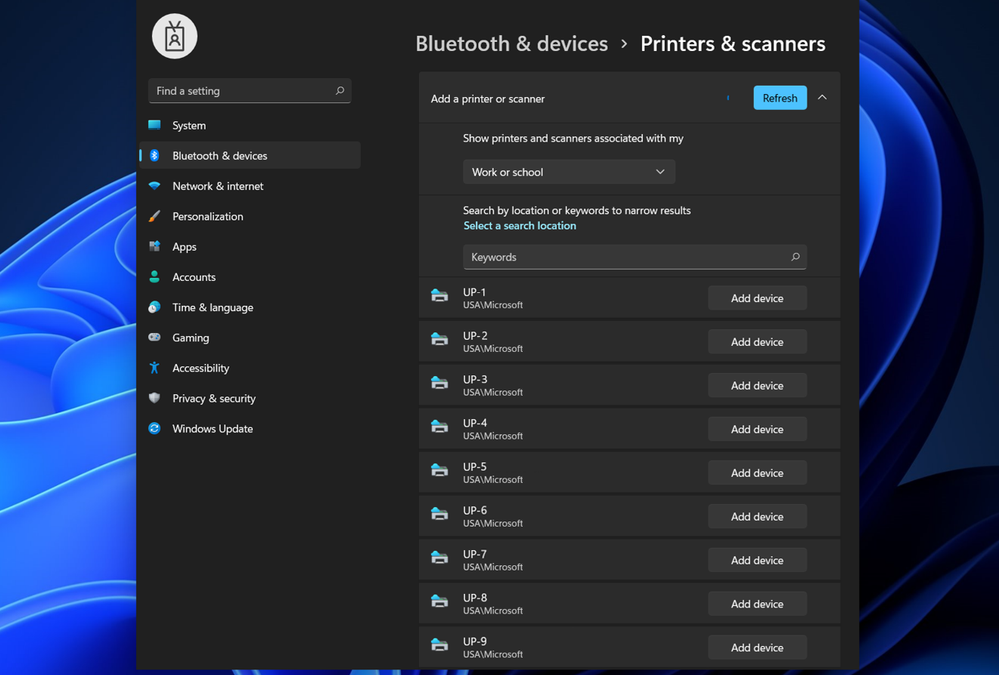

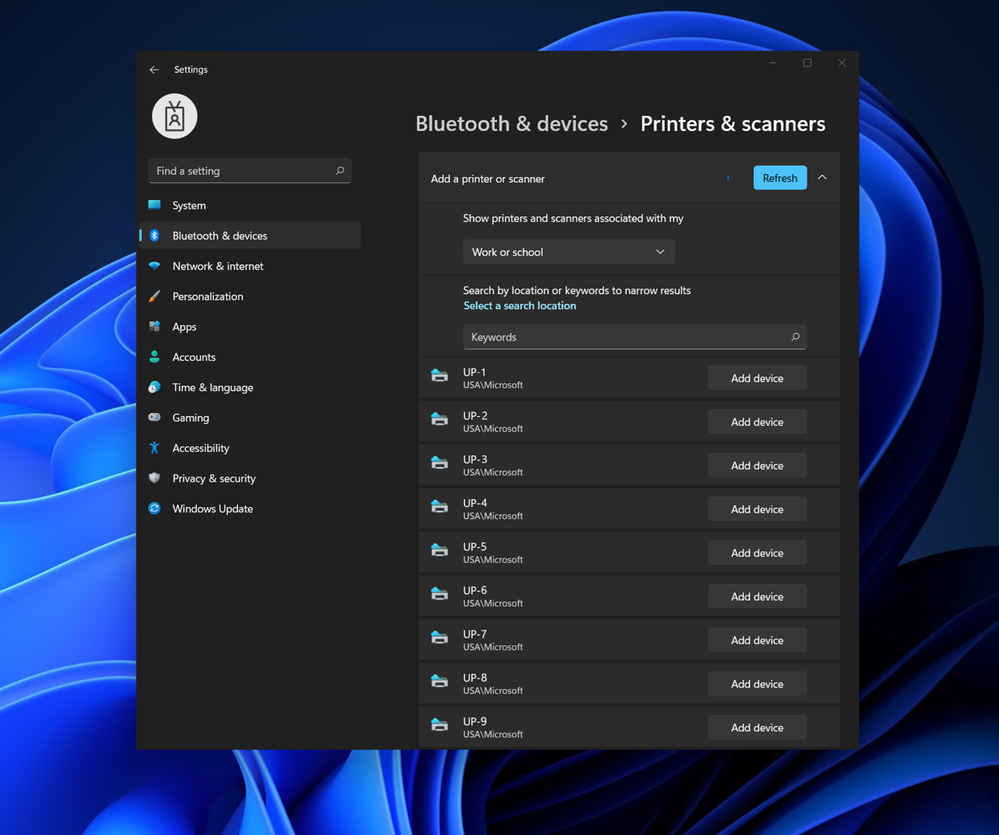

Adding a printer in the Windows 11 Settings app


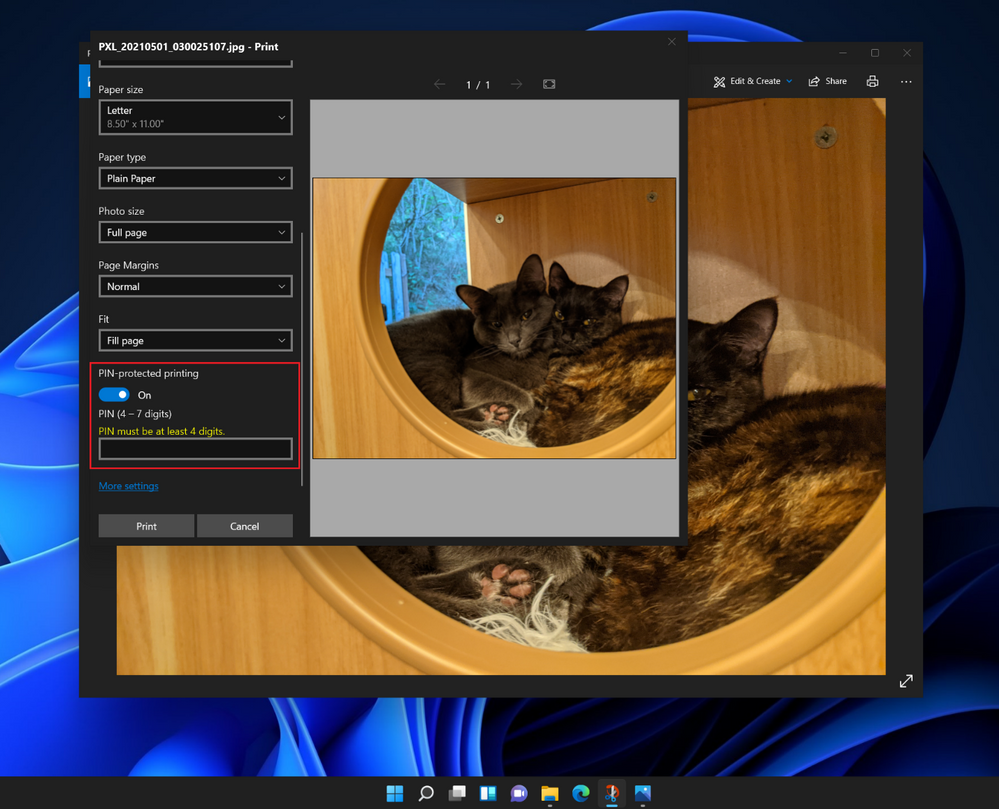

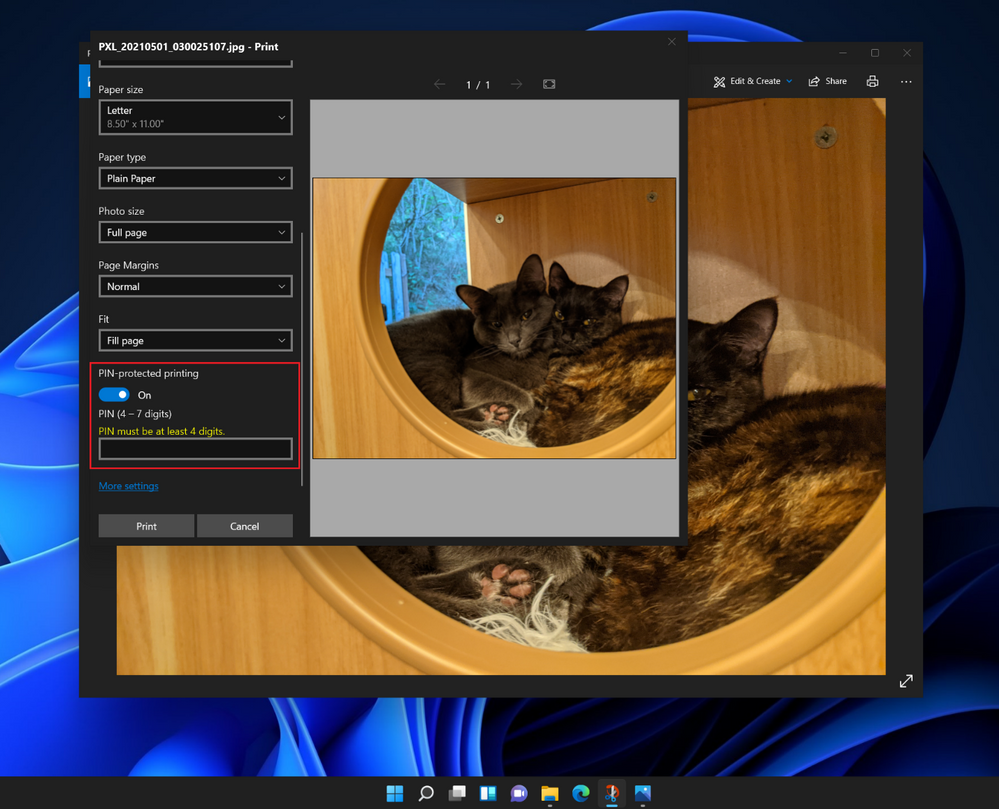

In addition, we are adding some eco-friendly functionality to the updated Universal Print driver that many users will appreciate: the ability to add a PIN to a print job, so the job will not be printed until the user enters the same PIN on the printer. This is one more reason to upgrade your existing printer to Universal Print ready firmware, if available.

Entering a PIN to release a print job in Windows 11


Some will see this as increased security, but we mostly think of this as functionality that will reduce paper and toner waste and offer users some privacy when printing. Print jobs will not go to waste on the printer. This capability will also come to Windows 10, version 21H2, which will be released later this year.

With Windows 11, we are releasing support for Print Support Applications (PSA) in Windows. Windows ships an inbox printer class driver based on the standards-based Mopria printing protocol. This enables a simple and seamless printing experience and eliminates the need for users to install additional software or custom drivers to connect and print to their Mopria-certified printers. The Universal Print driver on Windows 11 can be extended by printer manufacturers and/or Managed Print Software (MPS) solutions with additional custom features and workflows by publishing a PSA to Microsoft Store. These UWP apps are not drivers and run in the user’s context. When installed on the user’s Windows 11 device, the PSA may run in the background to process print jobs for the corresponding printer or can offer an advanced print user interface to address scenarios such as:

- Printer specific custom advanced finishing options.

- Add accounting information and workflows.

- Add a watermark (optional or enforced)

Admins can install the default PSA or another vendor’s PSA if required for a Universal Print ready printer on the user’s Windows 11 devices using Microsoft Endpoint Manager. This capability will also come to Windows 10, version 21H2, which will be released later this year.

PSA will also be available outside the commercial Universal Print service configuration on a consumer Windows 11 device. Apps will install automatically from the store when the home user connects a printer to the home network. The advanced settings in the traditional print UI on Windows 11 will no longer show, and the PSA takes over that UI to offer the user the most appropriate functionality for the printer model they have. The significant advantage is that, instead of a printer driver, an app is installed that interacts with the driver to offer the user more functionality, which increases the stability and security of the user’s device.

In addition, we are bringing some enhanced support for the Internet Printing Protocol (IPP) to the Windows ecosystem. IPP has been implemented in Windows 10 for network printers since 2018. With Windows 11, we are adding IPP over USB to widen hardware support using IPP. To support IPP and PSA in an enterprise environment, Windows also supports directed discovery of IPP printers. This also enables IPP and PSA for Windows Server 2022 for Point and Print customers who want to continue using Windows Server for print enablement. This helps customers use PSA independent of the solution they use: Universal Print or Windows Server or a combination. This capability will also come to Windows 10, version 21H2, which will be released later this year.

Learn more: Universal Print and Windows 11

by Contributed | Jun 29, 2021 | Technology


Working as a PKI engineer, I am frequently asked about “the best” method to enroll certificates to mobile devices via Intune. What exactly is the best method from a PKI engineer’s perspective? The most secure one, of course. The following article tries to explain the different methods in terms of security.

Overview

Intune supports three different methods to provision certificates to devices or users, which can be easily confused: Simple Certificate Enrollment Protocol (SCEP), Public Key Cryptography Standards (PKCS), and imported PKCS#12 certificates.

While SCEP and PKCS provision each device with a unique certificate, with Imported PKCS#12 certificates you can deploy the same certificate (and private key) that you’ve exported from a source, like Certification Authority database, to multiple recipients. This shared certificate might be useful in scenarios when you need to decrypt data on different devices using exactly the same key pair, e.g. with S/MIME e-mail encryption.

The following article tries to explain the differences – mainly, but not only – from the security perspective. As understanding a technology’s security impact without understanding the technology itself is impossible, we start each section with a brief overview of the certificate enrollment process.

Foreword: Tiered Administration & your PKI

Microsoft’s AD Tier model has evolved into the “Enterprise Privileged Access Model”. What remains the same is the fact that a PKI which is fully trustworthy for Active Directory must be assigned to Tier 0 and secured and treated accordingly. This is true not only for computers hosting Certification Authorities, but also for computers acting as Registration Authorities (like NDES) or computers which have enroll permissions for user certificates allowing for custom subjects (like the “Microsoft Intune Certificate Connector”).

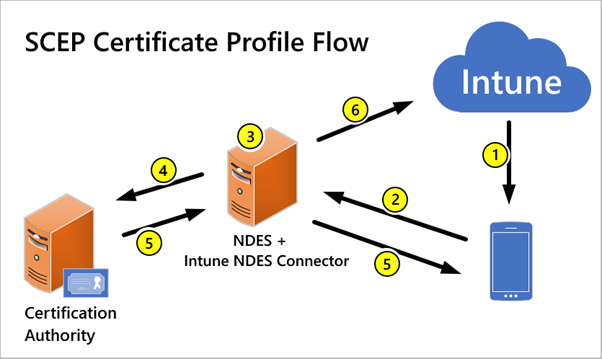

SCEP (Simple Certificate Enrollment Protocol)

The headline already says that this is about a specific protocol: Simple Certificate Enrollment Protocol. Network Device Enrollment Server (NDES) – a Windows Server Role Service – implements this protocol, thereby providing certificate enrollment services for devices (and users). To interconnect Intune with the on-prem NDES, you need to download and install the following piece of software: Microsoft Intune Connector (aka “Microsoft Intune Certificate Connector” aka “NDES Certificate Connector” or simply “NDESConnectorSetup.exe”). Please be aware that installing Intune NDES Connector significantly changes the default behavior of NDES.

Certificate Enrollment Process

Prerequisite: To use a SCEP certificate profile, devices must trust your Root Certification Authority (CA). Create a Trusted Certificate profile in Intune to provision the Trusted Root CA certificate to users and devices.

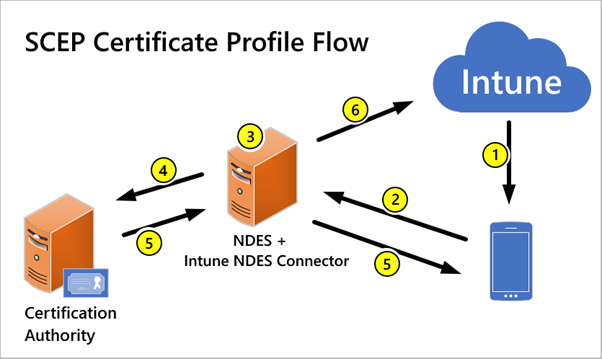

The following steps provide an overview of certificate enrollment using SCEP in Intune:

Source: Troubleshoot use of Simple Certificate Enrollment Protocol (SCEP) certificate profiles to provision certificates with Microsoft Intune – Intune | Microsoft Docs

- Deploy a SCEP certificate profile. Intune generates a challenge string, which includes the specific user (subject), certificate purpose, and certificate type.

- Device to NDES server communication. The device uses the URI for NDES from the profile to contact the NDES server so it can present a challenge. We recommend publishing the NDES service through a reverse proxy, such as the Azure AD application proxy, Web Access Proxy, or a third-party proxy.

- NDES to policy module communication. NDES forwards the challenge to the Intune Certificate Connector policy module on the server, which validates the request.

- NDES to Certification Authority. NDES passes valid requests to issue a certificate to the Certification Authority (CA).

- Certificate delivery to NDES.

- Certificate delivery to the device.
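The validation idea in the steps above (a challenge that names the subject, purpose, and certificate type, checked against the incoming request) can be illustrated with a purely conceptual Python sketch. It does not reproduce Intune's actual challenge format or any connector API; every class, field, and function name here is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Challenge:
    """What the challenge says the certificate may contain (conceptual only)."""
    subject: str
    purpose: str
    cert_type: str


@dataclass(frozen=True)
class CertificateRequest:
    """What the device actually asks for (conceptual only)."""
    subject: str
    purpose: str
    cert_type: str


def validate_request(challenge, request):
    """Return the names of fields that differ; an empty list means a match."""
    fields = ("subject", "purpose", "cert_type")
    return [
        name
        for name in fields
        if getattr(challenge, name) != getattr(request, name)
    ]


if __name__ == "__main__":
    challenge = Challenge("CN=alice", "client authentication", "user")
    honest = CertificateRequest("CN=alice", "client authentication", "user")
    forged = CertificateRequest("CN=ceo", "client authentication", "user")
    print(validate_request(challenge, honest))
    print(validate_request(challenge, forged))
```

The point of the sketch is only that a request asking for a different subject than the one the challenge was issued for is rejected before it reaches the CA.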

Various Aspects of Security

- The key pair is created by the device (or its TPM) and the private key never leaves the device (or the TPM).

- Security of the enrollment process depends on the security of the server hosting the Connector and the NDES Role Service.

- Intune Connector is installed on the same server that hosts NDES. This server should be properly hardened, with a minimum number of local Admins.

- Verification of the certificate subject is performed through Intune together with an NDES Policy Module installed in C:\Program Files\Microsoft Intune\NDESPolicyModule.

- NDES works as a Registration Authority, meaning that it uses its NDES Enrollment Agent certificate to countersign each certificate request after verification, before forwarding it to the CA. Consequently, the NDES Enrollment Agent certificate is extremely sensitive and should be enrolled and stored in an HSM: An adversary getting hold of the NDES Enrollment Agent certificate (and private key) could counter-sign a certificate request of his choice with arbitrary subjects, thereby enrolling valid user authentication certificates (think of the CEO or the Enterprise Administrator). Keep in mind that members of the Local Admins group have access to keys stored in the Local Computer certificate store!

- NDES can run either in the context of Network Service or of a domain service account. Group Managed Service accounts (gMSA) are not supported with the Intune Connector installed. Hardening the NDES service account is essential, not only for your certificate enrollment process, but also for your Active Directory security (weak service accounts make your AD vulnerable to “Kerberoasting” attacks).

- NDES requires Enterprise Admin group membership for installation.

- The Intune Connector/NDES server must be accessible from the Internet and protected by a reverse proxy, like WAP or Azure Application Proxy with Azure Conditional Access. Find more details at: Support for NDES on the Internet.

- Although this is not a feature of SCEP, the Intune NDES Connector provides a feature to revoke certificates at the Certification Authority.

- Non-security related side note: When several profiles (e.g. SCEP and VPN or Wi-Fi) are applied to iOS devices at the same time, a separate certificate is enrolled for each profile.

PKCS (Public Key Cryptography Standard)

The term “PKCS” may be confusing here, because Wikipedia explains PKCS as follows: “PKCS are a group of public-key cryptography standards devised and published by RSA Security LLC, starting in the early 1990s. The company published the standards to promote the use of the cryptography techniques to which they had patents, such as the RSA algorithm, the Schnorr signature algorithm and several others. Though not industry standards (because the company retained control over them), some of the standards in recent years have begun to move into the “standards-track” processes of relevant standards organizations such as the IETF and the PKIX working-group[1].”

However, in this context “PKCS” simply means that two different PKCS standards are used to request a certificate (PKCS#10) and/or push the certificate including the private key (PKCS#12) to the device.

Some key facts about PKCS#12:

- PKCS#12 is the successor to Microsoft’s “PFX”, however, the terms “PKCS #12 file” and “PFX file” are sometimes used interchangeably. Find more details at Wikipedia explaining PKCS#12 .

- PKCS#12 defines an archive file format for storing many crypto objects within a single file (e.g. private key + certificate + CA certificates).

- PKCS#12 is protected by a password because it contains sensitive data (the private key). This password is named “PFX password” further on.

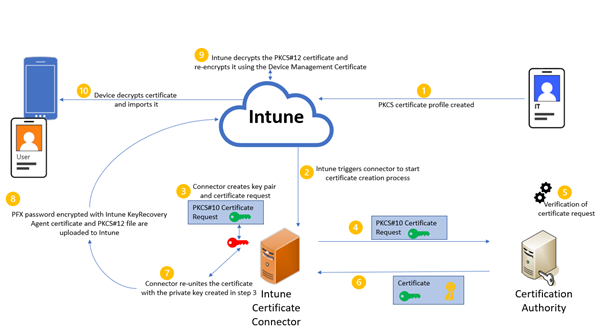

a) PKCS

In this scenario you install the same piece of software as for SCEP enrollment (“Microsoft Intune Certificate Connector” aka “NDES Certificate Connector” or simply “NDESConnectorSetup.exe”); you just select different configuration options. More details are provided here: Configure and use PKCS certificates with Intune.

Certificate Enrollment Process

A detailed description of the enrollment process is provided in the article Troubleshoot use of PKCS certificate profiles to provision certificates with Microsoft Intune.

Prerequisite: To use a PKCS certificate profile, devices must trust your Root Certification Authority (CA). Create a Trusted Certificate profile in Intune to provision the Trusted Root CA certificate to users and devices.

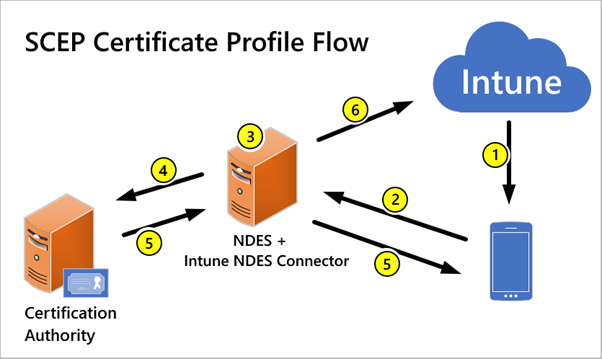

The following steps provide an overview of using PKCS for certificate enrollment in Intune:

- In Intune, an administrator creates a PKCS certificate profile, and then targets the profile to users or devices.

- The Intune service requests that the on-premises Intune Certificate Connector creates a new certificate for the user.

- The Intune Certificate Connector creates a key pair and a Base64 encoded PKCS#10 certificate request.

- The certificate request is sent to an Active Directory Certification Authority.

- The CA verifies the certificate request.

- If verification succeeds, the CA issues the certificate and returns it to the Intune Connector.

- The Connector re-unites the certificate with the private key by creating a PKCS#12 envelope and encrypts it with a randomly generated password (PFX Password).

- The PFX Password is then encrypted with the Intune KeyRecoveryAgent certificate before it is uploaded to Intune together with the PKCS#12 certificate.

- Intune decrypts the PKCS#12 certificate and re-encrypts it for the device using the Device Management certificate. Intune then sends the PKCS#12 to the device.

- The device decrypts the PKCS#12 and imports it.
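The Connector-side steps above (key pair, PKCS#10 request, issuance, PKCS#12 envelope) can be sketched in a few lines. This is only an illustration under stated assumptions, not how the Intune Certificate Connector is actually implemented: it uses the third-party Python `cryptography` package, the function names `build_csr`, `issue_certificate` and `wrap_pkcs12` are hypothetical, and a locally generated key stands in for the Active Directory Certification Authority.

```python
import datetime
import secrets

from cryptography import x509
from cryptography.hazmat.primitives import hashes, serialization
from cryptography.hazmat.primitives.asymmetric import rsa
from cryptography.hazmat.primitives.serialization import pkcs12
from cryptography.x509.oid import NameOID


def build_csr(subject_cn: str):
    """Create a key pair and a signed PKCS#10 request for the given subject."""
    key = rsa.generate_private_key(public_exponent=65537, key_size=2048)
    subject = x509.Name([x509.NameAttribute(NameOID.COMMON_NAME, subject_cn)])
    csr = (
        x509.CertificateSigningRequestBuilder()
        .subject_name(subject)
        .sign(key, hashes.SHA256())
    )
    return key, csr


def issue_certificate(csr, ca_key, ca_name):
    """Stand-in for the CA (assumption): verify the request, then issue."""
    if not csr.is_signature_valid:
        raise ValueError("certificate request has an invalid signature")
    now = datetime.datetime.now(datetime.timezone.utc)
    return (
        x509.CertificateBuilder()
        .subject_name(csr.subject)
        .issuer_name(ca_name)
        .public_key(csr.public_key())
        .serial_number(x509.random_serial_number())
        .not_valid_before(now)
        .not_valid_after(now + datetime.timedelta(days=365))
        .sign(ca_key, hashes.SHA256())
    )


def wrap_pkcs12(key, cert):
    """Re-unite key and certificate in a PKCS#12 protected by a random password."""
    pfx_password = secrets.token_urlsafe(24).encode()
    blob = pkcs12.serialize_key_and_certificates(
        name=b"user-cert",
        key=key,
        cert=cert,
        cas=None,
        encryption_algorithm=serialization.BestAvailableEncryption(pfx_password),
    )
    return blob, pfx_password


# Hypothetical demo values: a throwaway CA key and an example subject.
ca_key = rsa.generate_private_key(public_exponent=65537, key_size=2048)
ca_name = x509.Name([x509.NameAttribute(NameOID.COMMON_NAME, "Demo Issuing CA")])

key, csr = build_csr("alice@contoso.example")
csr_pem = csr.public_bytes(serialization.Encoding.PEM)  # Base64 (PEM) encoded PKCS#10
cert = issue_certificate(csr, ca_key, ca_name)
blob, pfx_password = wrap_pkcs12(key, cert)
```

Note that `build_csr` accepts any subject the caller passes in, which mirrors the security consideration that the account submitting the request can choose the subject freely.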

Various Aspects of Security

- Security of the enrollment process depends on the security of the server hosting the Connector (even more so if the Local System account is used to connect to the CA). This server should be properly hardened, with a minimum number of local administrators.

- The Connector submits certificate requests in the context of the computer account hosting the Connector.

- This computer account can request certificates with a subject of its choice (including the CEO’s or Enterprise Admin’s username).

- Intune derives the subject from the authentication token; there is no additional verification of the subject.

- The server hosting the Intune Connector does not need to be published to the Internet.

- The certificate private key is generated on the server where the Connector is installed and not on the user’s device. It is required that the certificate template allows the private key to be exported, so that the certificate connector can export the PFX certificate and send it to the device. However, please note that the certificates are installed on the device itself with the private key marked as not exportable.

Scalability

In contrast to the SCEP Connector, with the PKCS Connector the issuing CA and the certificate template are configured in the Intune profile. This means that one PKCS Connector can be used to enroll different certificate templates or even enroll certificates from different CAs.

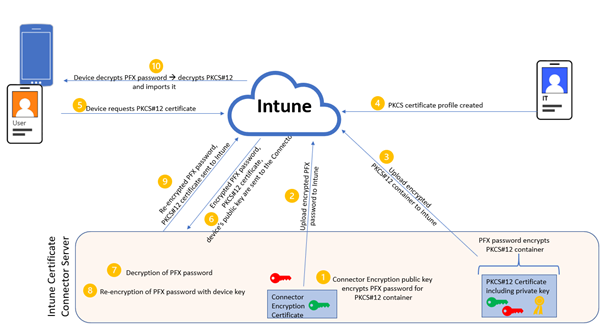

b) Imported PKCS

Microsoft Intune supports use of imported public key pair (PKCS) certificates, commonly used for S/MIME encryption with email profiles. S/MIME decryption is challenging because an email is encrypted with a public key:

- You must have the private key of the certificate that encrypted the email on the device where you’re reading the email so it can be decrypted.

- Before a certificate on a device expires, you should import a new certificate so devices can continue to decrypt new email. Renewal of these certificates isn’t supported.

- Encryption certificates are renewed regularly, which means that you might want to keep past certificates on your devices to ensure that older email can continue to be decrypted.

Because the same certificate needs to be used across devices, it’s not possible to use SCEP or PKCS certificate profiles for this purpose, as those certificate delivery mechanisms deliver unique certificates per device. Here is the main drawback of S/MIME: it is impossible to synchronize keys across devices both securely and efficiently. That is why Microsoft recommends using Azure Information Protection instead, which was designed with today’s security and usability challenges in mind.

However, if you still want to go for this scenario you need to install the “PFX Certificate Connector for Microsoft Intune” or simply “PfxCertificateConnectorBootstrapper.msi”. The article Use imported PFX certificates in Microsoft Intune provides details about the configuration.

Certificate Deployment Process

This method covers neither the creation of key pairs nor the enrollment of certificates. It simply assumes that key pairs and certificates already exist. The focus of this Intune Connector is to offer a temporary solution while migrating from S/MIME to a more up-to-date solution like Office 365 Message Encryption. S/MIME was originally standardized in 1995 when users didn’t have multiple mobile devices and key compromise was something you didn’t have to worry about.

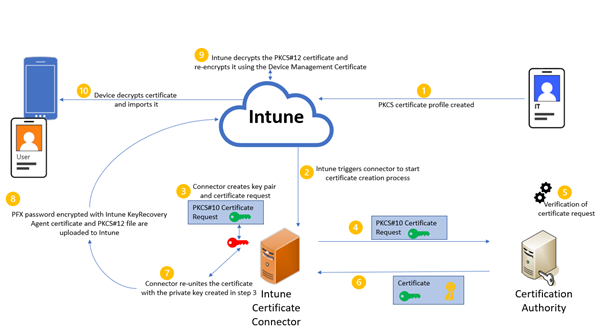

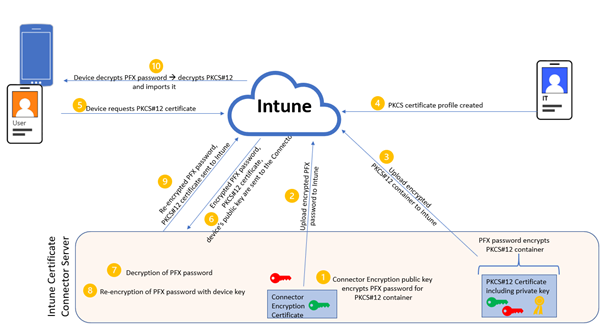

The following steps provide an overview of the PKCS#12 certificate deployment process in Intune:

- The PFX Certificate Connector holds a PFX Certificate Connector certificate, and the public key of its key pair is used to encrypt the PFX password (the matching private key is needed to decrypt it later).

- The encrypted PFX password is uploaded to Intune.

- The user’s S/MIME certificate (in PKCS#12 format) is protected with the PFX password and uploaded to Intune, too.

- In Intune, an administrator creates a PKCS#12 certificate profile, and then targets the profile to users or devices.

- The user’s device requests the PKCS#12 certificate.

- Intune sends the encrypted PFX password, the PKCS#12 certificate and the device’s public key to the PFX Certificate Connector.

- The PFX Certificate Connector decrypts the PFX password.

- The PFX Certificate Connector re-encrypts the PFX password with the device’s public key.

- The re-encrypted PFX password and the PKCS#12 certificate are sent to Intune.

- The re-encrypted PFX password and the PKCS#12 certificate are forwarded to the user’s device, which then decrypts the PFX password, decrypts the PKCS#12 certificate and imports it into the device.
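The password hand-off in these steps can be sketched as follows. The article does not name the algorithm Intune uses, so RSA-OAEP is an assumption here, as are the helper names `encrypt_password` and `reencrypt_for_device` and the use of the third-party Python `cryptography` package. In this sketch the password is encrypted with a public key and decrypted with the matching private key, as asymmetric encryption requires.

```python
from cryptography.hazmat.primitives import hashes
from cryptography.hazmat.primitives.asymmetric import padding, rsa

# Assumed padding scheme for this illustration (not confirmed by the article).
OAEP = padding.OAEP(
    mgf=padding.MGF1(algorithm=hashes.SHA256()),
    algorithm=hashes.SHA256(),
    label=None,
)


def encrypt_password(public_key, password: bytes) -> bytes:
    """Encrypt the PFX password so only the private-key holder can read it."""
    return public_key.encrypt(password, OAEP)


def reencrypt_for_device(connector_key, device_public_key, encrypted: bytes) -> bytes:
    """Connector step: decrypt with its own private key, re-encrypt for the device."""
    password = connector_key.decrypt(encrypted, OAEP)
    return device_public_key.encrypt(password, OAEP)


# Hypothetical key pairs standing in for the connector and a managed device.
connector_key = rsa.generate_private_key(public_exponent=65537, key_size=2048)
device_key = rsa.generate_private_key(public_exponent=65537, key_size=2048)

pfx_password = b"example-pfx-password"
uploaded = encrypt_password(connector_key.public_key(), pfx_password)
for_device = reencrypt_for_device(connector_key, device_key.public_key(), uploaded)
recovered = device_key.decrypt(for_device, OAEP)  # performed on the device
```

The point of the design is that the service in the middle only ever handles the encrypted password; the clear-text value exists only on the connector and on the target device.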

Various Aspects of Security

- Security of the deployment process depends on the security of the server hosting the Connector. This server should be properly hardened, with a minimum number of local administrators.

- The PFX Connector certificate’s private key should be protected using an HSM.

- As already mentioned, S/MIME was originally standardized in 1995 when users didn’t have multiple mobile devices and key compromise was something you didn’t have to worry about. Today, distributing and using the same private key across devices with differing levels of security is a contradiction in itself.

- The PFX Connector only covers the distribution of certificates and private keys to the user’s devices. It does not cover:

  - Creation of key pairs, requesting and enrolling certificates

  - Secure on-prem storage of private keys

  - Certificate revocation in case a device is lost or stolen

  - Renewal of expiring certificates

I hope you enjoyed this article and that it sheds some light on the various options for enrolling certificates via Intune.

by Contributed | Jun 29, 2021 | Technology

This article is contributed. See the original author and article here.

We continue to expand the Azure Marketplace ecosystem. For this volume, 102 new offers successfully met the onboarding criteria and went live. See details of the new offers below:

Applications

Advanced DevOps, ETL, and Analytics Workplace: This image offered by My Arch provides a high-performance workplace for ETL (extract, transform, load) and analytics involving healthcare data. The image includes toolchains augmented with MyArch software for in-situ reporting and analytics on raw data in EDI 837 and EDI 835 formats.

AGRIVI 360 Farm Enterprise: AGRIVI 360 Farm Enterprise gathers all agricultural data, IoT data, and real-time field information so farmers can make data-driven decisions. Features include support for more than 15 languages and integration with customers’ enterprise resource planning solutions.

Analytics for Semiconductor Manufacturing: This analytics platform from NI, formerly National Instruments, integrates with simulation software, manufacturing infrastructure, fleet data, and more, which enables companies to use their various types of data to transform their businesses.

Automated Orchestration for Azure IoT at Scale: Infiot’s end-to-end workflow deploys Microsoft Azure IoT Edge runtimes to Infiot Extensible Edge devices and enables automatic Azure IoT Hub connectivity via Azure APIs.

CA Injector Container Image: This image from Bitnami provides CA Injector, a command-line tool that configures the certificate authority (CA) certificates for cert-manager webhooks.

Cert Manager Webhook Container Image: This image from Bitnami provides Cert Manager Webhook, which provides dynamic admission control over cert-manager resources using a webhook server.

Channels Internal Communication Software: Transform employee workstations into internal communication channels with this software from Telelogos. Desktop backgrounds can be branded, lock screens can be customized, and alerts and notifications can be sent to specific workstations.

Cloud-Native Database for DocumentDB: This preconfigured image offered by VMLab, an authorized reseller for Websoft9, provides MongoDB Community Server on a Microsoft Azure virtual machine. Choose from MongoDB Community Server versions 3.0, 3.2, 3.6, 4.0, 4.2, or 4.4 on CentOS or Ubuntu.

Cloud-Native Database for Graph: This preconfigured image offered by VMLab, an authorized reseller for Websoft9, provides the Community Edition of the Neo4j graph database. Choose from version 3.5, 4.0, or 4.2 on CentOS or Ubuntu. Also included are NGINX 1.20.0 and OpenJDK 11.0.11.

Cloud-Native Database for MariaDB: This preconfigured image offered by VMLab, an authorized reseller for Websoft9, provides MariaDB on CentOS or Ubuntu. Choose from MariaDB 10.1, 10.2, 10.3, or 10.4. Also included are Docker 20.10.6 and phpMyAdmin 5.1.0.

Cloud-Native Database for MySQL: This preconfigured image offered by VMLab, an authorized reseller for Websoft9, provides MySQL Community Server on CentOS or Ubuntu. Choose from MySQL Community Server version 5.5, 5.6, 5.7, or 8.0. Also included are Docker 20.10 and phpMyAdmin 5.1.

Cloud-Native MQ for Apache Kafka Powered by VMLab: This image offered by VMLab provides Apache Kafka on Ubuntu. Apache Kafka is a tool for building data pipelines and streaming apps. Numerous versions are available. Also included are OpenJDK 1.8.0, CMAK 3.0.0.5, Zookeeper 3.4.9, and Docker 20.10.6.

Cloud-Native MQ for Apache RabbitMQ: This preconfigured image offered by VMLab, an authorized reseller for Websoft9, provides RabbitMQ Server 3.8.16 on CentOS or Ubuntu. RabbitMQ is a widely deployed open-source message broker. Also included is version 23.3.3 of the Erlang programming language.

Content Collaboration Platform: This image offered by VMLab, an authorized reseller for Websoft9, provides version 20.0.5 of Nextcloud, a file sync-and-share tool, on CentOS 7.9. Also included are Apache 2.4.46, PHP 7.4.19, MySQL 5.7.34, ONLYOFFICE Docs Community 6.2, phpMyAdmin 5.1.0, Docker 20.10.6, and Redis 5.0.9.

CPFDS: Digital Age Nepal’s anti-money-laundering solution flags suspicious transactions to report to bank authorities. Alerts can be triggered based on in-person transactions, digital transactions, customer details, cards, ATM activity, and cross-border transactions.

DevOps All-in-One Platform: This preconfigured image offered by VMLab, an authorized reseller for Websoft9, provides GitLab Community Edition 13.11.3 on CentOS or Ubuntu. GitLab is a DevOps lifecycle tool. Also included are Docker 20.10.6 and Portainer 2.1.1.

Enterprise E-Commerce Platform: This image offered by VMLab provides version 2.4.2 of Magento Open Source, an e-commerce platform, on CentOS 7.9. Also included are Apache 2.4, MySQL 8.0, PHP 7.4, Redis 6.0, OpenJDK 1.8.0, Elasticsearch 7.8, RabbitMQ 3.8, Erlang 23.3, phpMyAdmin 6.1, and Docker 20.10.

Enterprise Web Content Management: This image offered by VMLab, an authorized reseller for Websoft9, provides version 5.7 of the WordPress content management system on CentOS 7.9. Also included are Apache 2.4.46, NGINX 1.20.0, PHP 7.4.19, MySQL 5.7.34, Docker 20.10.6, phpMyAdmin 5.1.0, and Redis 5.0.9.

Imagine: Idea Engagement Platform: Similar to a social network, the Imagine platform is based on a collaborative model. Employees can submit insights or ideas for solving a problem, and qualified ideas will be shown in a feed so others can join in. The process is gamified to reward participants. This app is available in Portuguese.

Informatica Enterprise Data Catalog 10.5.0: This is a bring-your-own-license offer for Informatica Enterprise Data Catalog 10.5.0, an AI-powered tool for scanning and cataloging data assets on-premises and across the cloud. Features include powerful semantic search, holistic relationship views, and an integrated business glossary.

Jonathan FlightBase: Jonathan FlightBase is a machine learning platform that manages multiple GPU resources and provides a distributed learning function for model training. This app is available only in Korean.

JupyterHub Container Image: This image from Bitnami provides a container image of JupyterHub, a server for managing and proxying multiple instances of the single-user Jupyter notebook server. This gives users access to computational environments and resources without burdening them with installation and maintenance tasks.

JupyterHub Helm Chart: This offer from Bitnami provides a JupyterHub Helm chart. Helm is a Kubernetes package and operations manager. Helm charts are an easy way to get started with an app on Kubernetes. JupyterHub gives users quick access to computational environments and resources.

KitKAD: KitKAD’s codes and scripts make it easy to get started with Microsoft Azure Data Factory and accelerate, structure, and strengthen your data platform. This app is available only in French.

KlariVis for Data Analytics and Financial Institutions: The KlariVis analytics platform for bankers aggregates high-value, actionable data into powerful insights pertaining to components that affect loans, deposits, and revenue growth.

LTI FinTrust: Powered by blockchain, LTI FinTrust brings real-time visibility and transparency into the floor-plan financing process. Original equipment manufacturers, dealers, and financiers can use one network to facilitate inventory tracking, reduce fraud, and ensure timely payments.

Mother and child notebook application: Adopted by 381 local governments in Japan, MTI Co. Ltd.’s child-rearing app offers mothers support during pregnancy, childbirth, and afterward. This app is available only in Japanese.

Nasuni Management Console: Nasuni’s file storage platform leverages object storage to deliver a simple, efficient, low-cost solution that scales to handle rapid unstructured file data growth.

Open Observability platform: This preconfigured image offered by VMLab, an authorized reseller for Websoft9, provides Open Source Edition 7.5.7 of Grafana, a feature-rich metrics dashboard and graph editor, on CentOS or Ubuntu. Also included is NGINX 1.20.

Open-Source Business Analytics: This preconfigured image offered by VMLab, an authorized reseller for Websoft9, provides Community Edition 8.0.0 of Knowage, a business intelligence tool, on Ubuntu 20.04. Also included are NGINX 1.20.0, MariaDB 10.3, Docker 20.10.6, and phpMyAdmin 5.1.0.

Open-Source Cloud-Native ERP for ERPNext: This preconfigured image offered by VMLab, an authorized reseller for Websoft9, provides version 11.1 of project management tool ERPNext on Ubuntu 20.04. Also included are NGINX 1.20.0, MariaDB 10.3, Redis 6.2.3, phpMyAdmin 5.1.0, and Docker 20.10.6.

Open-Source E-Commerce Platform: This image offered by VMLab, an authorized reseller for Websoft9, provides a one-click deployment of version 1.7.7.3 of the PrestaShop e-commerce platform. Apache 2.4.46, PHP 7.4.19, MySQL 5.7.34, phpMyAdmin 5.1.0, Redis 5.0.9, and Docker 20.10.6 are included on CentOS 7.9.

Open-Source Learning Platform: This image offered by VMLab, an authorized reseller for Websoft9, provides version 3.10.1 of the Moodle learning management system along with Apache 2.4.46, MySQL 5.7.34, PHP 7.4.19, Redis 5.0.9, Docker 20.10.6, and phpMyAdmin 5.1.0 on CentOS 7.9.

Open-Source Log Management: This preconfigured image offered by VMLab, an authorized reseller for Websoft9, provides version 4.2 of the Graylog log management solution along with NGINX 1.20.0, MongoDB 4.2, adminMongo 0.0.23, Docker 20.10.6, and Elasticsearch 7.10.2 on Ubuntu 20.04.

Parloa: Parloa is a conversational AI platform for automating phone calls or chats in WhatsApp or Microsoft Teams. Using Azure Cognitive Services, Parloa’s low-code front end allows companies to automate customer service without the need for data scientists, machine learning engineers, or developer resources.

Percona Server for MySQL 5.7: Percona Server is a free, fully compatible drop-in replacement for MySQL 5.7. Its self-tuning algorithms and support for extremely high-performance hardware deliver excellent performance and reliability.

Percona Server for MySQL 8.0: Percona Server is a free, fully compatible drop-in replacement for MySQL 8.0. Its self-tuning algorithms and support for extremely high-performance hardware deliver excellent performance and reliability.

Profitbase Planner 5: Profitbase Planner is a flexible and powerful budgeting and forecasting tool that reduces complexity and time spent planning. Through its business intelligence functionalities, you can discover patterns and correlations in business data.

Profitbase Risk Management: Businesses can safeguard their assets and reputation with Profitbase Risk Management, which identifies and mitigates risk. Its consolidation feature enables users to understand the interaction between individual risks and see the bigger picture.

SATAVIA DECISIONX: DECISIONX is a digital twin of the Earth’s atmosphere from ground level to low Earth orbit (LEO). It provides atmospheric data and enables a suite of high-impact applications in sectors ranging from commercial aviation to defense and intelligence.

SCM Pulse: This blockchain-based supply chain management solution from Larsen & Toubro Infotech proactively mitigates invoice disputes by providing end-to-end visibility, transparency, and traceability for transactions.

SLB: Servers Lock Box (SLB) is a tool that system administrators, DevOps engineers, developers, and managers can use to safely store data from servers, accounts, or applications. Users can edit the data and manage its distribution.

Software Load Balancer: This preconfigured image offered by VMLab, an authorized reseller for Websoft9, provides HAProxy Community Edition on Ubuntu. Numerous versions are available. HAProxy is a high-availability load balancer and proxy server for TCP and HTTP-based applications.

Strategy-AI for Jira: The Strategy-AI Extension for Jira offered by Chinchilla Software extends the capabilities of Jira projects and boards. Easily tag critical work items, measure how strategic your backlog is, and visualize and monitor the historical strategic value of your sprints and releases.

Strategy-AI Trello Power-Up: The Strategy-AI Power-Up offered by Chinchilla Software extends the capabilities of Trello boards. Control which plans are brought into Trello and which are not. Easily tag cards and quickly visualize how cards cross business initiatives.

StudentCare: StudentCare supports student retention at higher education institutions through relationship technologies guided by analytics and artificial intelligence. This app is available in Portuguese.

Ubuntu 20.04 LTS with Pure-FTPd Server: This offer from Virtual Pulse provides Pure-FTPd Server on Ubuntu 20.04 LTS. Pure-FTPd features robust implementations of FTP protocol specifications, including modern extensions like MLST/MLSD (extensible and mirror-safe directory listings).

Unemployment Insurance Data Station: Data Station from Solid State Operations enables state workforce agencies to save money through increased accuracy for unemployment insurance payments and reduced IT and training costs.

Vormetric Data Security Manager v6.4.5: The Vormetric Data Security Manager (DSM) from Thales eSecurity provisions and manages keys for Vormetric Data Security Platform solutions, including Vormetric Transparent Encryption, Vormetric Tokenization with Dynamic Data Masking, Vormetric Application Encryption, and Vormetric Key Management.

Web-based Ansible Automation Platform: This preconfigured image offered by VMLab, an authorized reseller for Websoft9, provides Ansible AWX 17.1.0, NGINX 1.20.0, PostgreSQL 10.16.0, phpPgAdmin 5.6, and Docker 20.10.6 on CentOS 7.9 or Ubuntu 20.04.

Web-based GUI Docker Runtime: This preconfigured image offered by VMLab, an authorized reseller for Websoft9, provides Docker 20.10.6 and Portainer Community Edition 2.1.1 on Ubuntu 18.04. This open-source management toolset allows you to easily build and maintain Docker environments.

WISE-PaaS-Dashboard: WISE-PaaS/Dashboard from Advantech, a data analysis and visualization tool, connects to commonly used databases and seamlessly integrates with WISE-PaaS platform data.

Consulting services

1-Day Workshop: On-premises Spark to Databricks: Neal Analytics’ workshop will review on-premises Spark workloads running on Hadoop, assess business needs, and develop a roadmap for your migration to Azure Databricks.

Advanced Analytics: 4-Week Implementation: KUMULUS’ Advanced Analytics solution offers you the powerful tool of predictive marketing by using Azure Machine Learning, Azure AI, Azure IoT Hub, and more. This offer is available only in Portuguese.

Agile Transformation: 4-Week Implementation: Transform your organization by aligning Agile principles with tools like Azure Boards and Azure Artifacts in this implementation by KUMULUS. This offer is available only in Portuguese.

AI Business School: Healthcare (1-Day Workshop): Designed for healthcare organizations, NTT’s workshop aims to equip decision makers with AI tools like Microsoft Azure Cognitive Services and Azure Machine Learning Studio. The goal is to optimize patient treatment and care based on real-time data. This offer is available only in German.

AI Business School: Higher Education (1-Day Workshop): NTT’s workshop aims to introduce tools like Microsoft Azure Cognitive Services and Azure Machine Learning Studio to organizations in the higher education public sector so they can leverage the power of AI in the field of research and digital education. This offer is available only in German.

AI Business School: Manufacturing (1-Day Workshop): Aimed at the manufacturing industry, NTT’s workshop will equip decision makers with AI tools like Azure Cognitive Services and Azure Machine Learning Studio so they can make informed decisions based on real-time data and optimize processes. This offer is available only in German.

AI Business School: Public Sector (1-Day Workshop): Designed for public sector leadership, NTT’s workshop aims to equip administrators with AI tools like Azure Cognitive Services, Azure Machine Learning Studio, and Microsoft Power Platform. This offer is available only in German.

AI Business School: Retail (1-Day Workshop): Designed for the retail sector, NTT’s workshop can help businesses use AI tools like Azure Cognitive Services, Azure Machine Learning Studio, and Microsoft Power Platform to learn consumer shopping patterns based on real-time data. This offer is available only in German.

Application Modernization: 4-Week Implementation: KUMULUS’ implementation can help you modernize your applications with Microsoft Azure DevOps, or you can fully restructure them using cloud models or by leveraging containers and Kubernetes. This offer is available only in Portuguese.

Application Modernization: 5-Week Assessment: This framework from Cosmote, a subsidiary of the Hellenic Telecommunications Organization (OTE), will help you evaluate which of its 6Rs (retire, refactor, rehost, retain, repurchase, or replatform) is best suited to your Microsoft Azure cloud transformation journey.

Azure Active Directory Connect: 5-Day Implementation: Mismo Systems will help integrate your on-premises Active Directory with cloud-based Azure Active Directory. Use single sign-on for quick access to both on-premises and cloud-based applications from anywhere at any time.

Azure Datacenter Roadmap: 1-Day Workshop: In this workshop, timengo will define the target architecture on Microsoft Azure and design a roadmap so you can satisfy your business needs and meet dynamic data migration requirements. This offer is available only in Danish.

Azure Governance and Fundamentals: 2-Hour Briefing: VNEXT’s briefing will provide a high-level overview of fundamentals, concepts, and implementation insights into what governance in Microsoft Azure looks like and how to architect and implement it.

Azure IoT Hub: 1-Week Proof of Concept: This proof of concept from IFI Techsolutions will help design an Azure IoT hub so your business can harness the benefits of predictive monitoring and maintenance with secure, durable, and open edge-to-cloud solutions.

Azure Migration: 1-Hour Briefing: Chunghwa Telecom’s briefing will show how you can use any language, tool, or architecture to build applications and integrate public cloud apps with your existing IT environment.

Azure Migration Services: 6-Week Implementation: Cosmote, a subsidiary of the Hellenic Telecommunications Organization (OTE), will review your infrastructure and help migrate your on-premises datacenter workloads to Microsoft Azure. Deliverables include testing, validation, and support.

Azure Networking and Security: 1-Day Workshop: In this workshop, T-Systems will showcase the complex and varied services offered by Microsoft Azure and help you design an optimized network layout with a focus on secure access and a customized data and protection plan.

Azure Virtual Desktop – 1-Day Workshop: timengo will hear your company’s requirements for a platform using Microsoft Azure Virtual Desktop (formerly Windows Virtual Desktop), then devise a roadmap and recommendations for configuration, implementation, and commissioning. This offer is available only in Danish.

Azure Virtual Desktop: 1-Hour Briefing: This briefing from Chunghwa Telecom offers personalized customer support along with expertise to help you design and implement a Microsoft Azure Virtual Desktop (formerly Windows Virtual Desktop) infrastructure for your IT environment.

Azure Virtual Desktop: 1-Week Proof of Concept: SenIT Consulting will assess your environment and business needs, then set up remote desktops and applications with the help of Microsoft Azure Virtual Desktop (formerly Windows Virtual Desktop). This offer is available in Spanish and English.

Azure Virtual Desktop: 5-Day Proof of Concept: Devoteam Alegri GmbH will help you design and deploy Microsoft Azure Virtual Desktop (formerly Windows Virtual Desktop) with new multisession functionality that enables secure, high-performance, and fast provisioning of Windows 10 desktops anywhere and on any device.

Azure Virtual Desktop: 5-Day Proof of Concept: In this proof of concept, NTT will outline best practices, steps, benefits, and costs involved in installing Microsoft Azure Virtual Desktop (formerly Windows Virtual Desktop) across your organization. This offer is available only in German.

Azure Virtual Desktop: Custom Implementation: vNext IQ’s implementation of Microsoft Azure Virtual Desktop (formerly Windows Virtual Desktop) is aimed at clients who prioritize a modern, connected, secure, and remote work environment for employees.

Azure Virtual Desktop Deployment: 2 Weeks: Cambay Consulting’s experts will deploy Microsoft Azure Virtual Desktop so your organization can benefit from a productive, scalable, and flexible virtualized environment.

Azure Virtual Desktop FastStart: 1-Week Implementation: CANCOM experts will utilize Microsoft’s Cloud Adoption Framework to implement an Azure Virtual Desktop (formerly Windows Virtual Desktop) environment while their FastStart service will ensure the solution is scalable, secure, cost-effective, and easy to manage.

Better Together Governance: 3-Week Implementation: Embark on a complete data governance journey with Adastra Corporation in this three-week implementation. Create a holistic, up-to-date map of your data landscape with Microsoft Azure Purview and empower customers to find valuable, trustworthy data.

Campus Edge Ideation: 1-Day Workshop: Get an overview of the promising possibilities of 5G mobile technology and campus networks in this workshop by T-Systems. Identify and explore edge computing services based on Microsoft Azure Stack and ideate upon relevant use cases for Campus Edge.

Cloud Health Check: 4-Week Assessment: Collective Insights will review on-premises and Microsoft Azure environments to determine migration priorities for each application while analyzing platform configuration, resource grouping, networking, and connectivity. A review of Azure costs and governance strategies will be provided.

Cloud Managed Services for Azure Implementation: Leveraging four key areas of effective cloud management — security, finance, technology, and operational expertise — Lightstream’s managed services will alleviate administrative issues, improve security, and cut costs.

Cloud Migration: 4-Week Implementation: Abersoft will evaluate your infrastructure and migrate any mission-critical workloads to Microsoft Azure. Stay secure and resilient across hybrid environments while optimizing cost and increasing productivity.

Cloud-Native: 4-Week Implementation: KUMULUS’ offer focuses on two services: Cloud-native application development and implementation of new tech. Optimize your applications by integrating Azure Stack hybrid cloud and new Azure technologies, such as AI, machine learning, and IoT. This offer is available only in Portuguese.

Data Archival on Cloud: 1-Week Proof of Concept: This proof of concept from IFI Techsolutions will extend your on-premises backup archive solutions to Microsoft Azure. The solution also includes capabilities like auto-tiering, auto-purge, choice of local or global redundancy, and secure access.

Database and Migration: 4-Week Assessment: Datasolution’s assessment will help customers cost-effectively migrate their data and services from an on-premises environment to a cloud environment while maximizing performance and security. This offer is available only in Korean.

DataOps: 4-Week Implementation: KUMULUS will use the DataOps methodology to help your organization implement a Microsoft Azure-based data platform in four weeks. This ensures security, flexibility, and cost savings for your business.

Disaster Recovery (DraaS): 10-Day Implementation: Mismo’s Disaster Recovery (DraaS) will help you achieve your recovery point objective (RPO) and recovery time objective (RTO) for your business applications and implement an optimal cloud backup and disaster recovery plan using Microsoft Azure Site Recovery.

Enterprise Integration: 4-Week Implementation: KUMULUS will use solutions like Microsoft Azure Data Factory to create enterprise-scale hybrid data integrations. Optimize business decisions by having access to real-time data analytics and greater data governance for your team. This offer is available only in Portuguese.

Fully Optimized Cloud Infrastructure: 1-Week Assessment: Mismo’s assessment offers complete optimization and governance of public cloud infrastructure, helping you cut investment costs by rightsizing virtual machines and conducting daily audits across your core Microsoft Azure services.

KS Shadow IT: 4-Week Implementation: This implementation from Knowledge System Consulting México will strengthen your organization’s security by using Microsoft Azure tools. A roadmap to remediate any security shortcomings will be provided. This offer is available only in Spanish.

KS Threat Protection: 3-Week Implementation: Knowledge System Consulting México will elevate your organization’s security posture by using advanced managed detection and response (MDR) solutions for Microsoft Defender, Azure Defender, Microsoft Cloud App Security, and Azure Sentinel. This offer is available only in Spanish.

Link Cloud to Azure – ExpressRoute Implementation: Zertia LinkCloud’s service simplifies and expedites your adoption of Microsoft solutions by implementing their ExpressRoute port configuration in Microsoft Azure. This service is available only in Spanish.

Machine Learning & AI Workshops: SFL Scientific’s workshops will accelerate your AI adoption by introducing you to the capabilities of the Microsoft Azure Machine Learning suite. Use data to predict, measure, and verify specific outcomes to enable workforce efficiency and reduce operating costs.

Manage Virtual WAN – SD-WAN Citrix: This service available from Zertia automates network deployments, eliminating the complexity of connecting remote branches to the closest Azure point of presence (PoP). This offer is available only in Spanish.

MongoDB to Cosmos DB: 1-Day Migration Workshop: Microsoft Azure Cosmos DB is an ideal destination for organizations looking to migrate MongoDB to the cloud. This workshop by Neal Analytics will walk you through the steps, helping you modernize your data to improve latency and ensure scalability of your business resources.

Quick Azure Virtual Desktop: 4-Week Implementation: Using proven processes and tools, 4MSTech offers a quick yet comprehensive implementation of Microsoft Azure Virtual Desktop (formerly Windows Virtual Desktop). Provide employees a virtualized experience that boosts productivity and collaboration.

SAP on Azure: 2-Hour Briefing: This briefing from Dimension Data, part of NTT, will help your organization understand the process, planning, and execution required to migrate SAP systems to Microsoft Azure. Learn how you can minimize downtime and business disruption by using services from Dimension Data.

SAP on Azure: 10-Day Assessment: This engagement from Cosmote, a subsidiary of the Hellenic Telecommunications Organization (OTE), will determine if your SAP environment is ready to be moved to Microsoft Azure. The offer includes a cost-benefit analysis along with a feasibility study and a high-level migration plan.

SAP on Azure: Assessment: This assessment from Telekom Romania Communications will help you understand the migration, infrastructure, and service costs associated with the transition of SAP to Microsoft Azure. Personalized workshops will tailor the process to your business needs and budget.

Start Your AKS Journey with a 5-Week Proof of Concept: This consulting offer from MaibornWolff will lay the groundwork for your adoption of Azure Kubernetes Service (AKS). Experts will ensure your container platform is set up for automated deployments using CI/CD (continuous integration/continuous deployment) pipelines.

Virtual Assistant with Voice.ai (1-Day Workshop): Say goodbye to classic chatbots and learn how to create virtual assistants using Bot Framework Composer and Microsoft Azure Cognitive Services. NTT’s workshop, available in German and English, will show you how to integrate telephony with voice AI in your virtual agent.

Zero Trust: 6-Week Assessment: Collective Insights will assess your environment and improve the security of your Microsoft ecosystem (Active Directory, Azure Active Directory, Microsoft Endpoint Manager) by establishing a zero-trust architecture model in which every access request is fully authenticated, authorized, and encrypted.

by Contributed | Jun 29, 2021 | Technology

We’ve reviewed the new settings released for Office since the last security baseline (v2104) and determined there are no additional security settings that require enforcement. Please continue to use the Security baseline for Microsoft 365 Apps for enterprise v2104 -FINAL which can be downloaded from the Microsoft Security Compliance Toolkit.

New Office policies are contained in the Administrative Template files (ADMX/ADML) version 5179 published on 6/7/2021 which introduced 7 new user settings. We have attached a spreadsheet listing the new settings to make it easier for you to find them.

Only trust VBA macros that use V3 signatures (Worth considering)

Microsoft discovered a vulnerability in Office Visual Basic for Applications (VBA) macro project signing that might enable a malicious user to tamper with a signed VBA project without invalidating its digital signature. This blog post explains how VBA macros signed with legacy signatures do not offer strong enough protection against a malicious actor looking to compromise a file’s integrity.

Admins should consider upgrading existing VBA signatures to the V3 signature as soon as possible after they upgrade Office to a supported product version; see the instructions in the links below. Once this is complete, you can disable the old VBA signatures by enabling the “Only trust VBA macros that use V3 signatures” policy setting.

- Instructions on how to upgrade Office VBA macro signatures:

If you have questions or issues, please let us know via the Security Baseline Community or this post.

by Contributed | Jun 29, 2021 | Technology

Whether it’s for reporting or for offloading queries from production, there are things you need to keep in mind when using a geo-replicated Azure SQL Database readable secondary. MVP Monica Rathbun discusses the challenges of performance tuning, what to keep in mind, and what to expect.

Watch on Data Exposed

by Contributed | Jun 29, 2021 | Technology

React class type is available

React is a popular JavaScript library for building user interfaces (UI). It offers a declarative way to create reusable components for your website. Learn how to create a lab that uses React together with Redux, a popular state-management library, and JSX, a syntax extension for JavaScript. We also describe how to install Node.js, which is a convenient way to run a web server for your React application.

We created two example classes so you can use the operating system of your choice.

The React Development on Windows class type shows how to install Visual Studio 2019 for your development environment. The article includes:

- Lab account settings needed to use the Visual Studio 2019 marketplace image.

- Recommended lab settings.

- How to install debugger extensions for your browser.

- How to allow the teacher to view a student’s website.

- Example costing for the class.

The React Development on Linux class type shows how to install Visual Studio Code for your development environment. The article includes:

- Recommended lab settings.

- How to install Visual Studio Code and needed extensions.

- How to install debugger extensions for your browser.

- How to allow the teacher to view a student’s website.

- Example costing for the class.

Thanks,

Azure Lab Services Team

by Contributed | Jun 29, 2021 | Technology

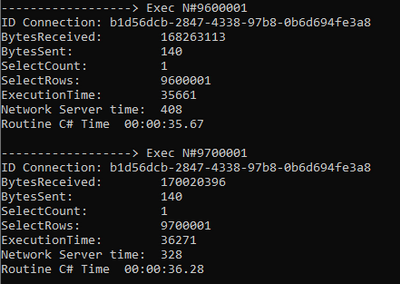

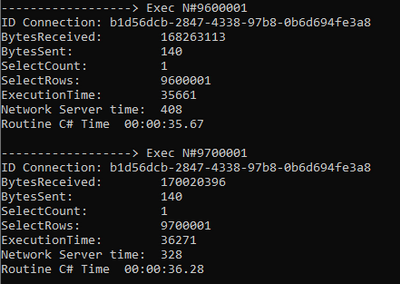

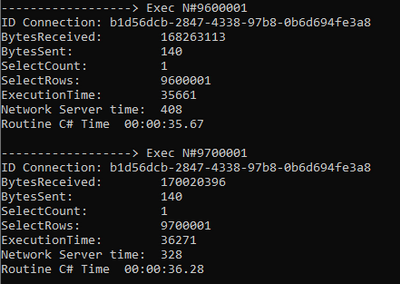

Today, I received a very good question from a customer: which command timeout do external tables use? Below, I would like to share my experience testing this.

The command timeout is configured on the application side. For this reason, I developed a small C# application with the following characteristics:

- On every iteration, the SELECT TOP value grows by 100,000 rows.

- PerformanceVarcharNVarchar3 is an external table that contains around 1,000,000,000 rows.

- I used the connection statistics to obtain the time spent on every operation.

- I tested several command timeout values. With 0 (as in the code below), the operation waits indefinitely; with any other value, it waits until the command timeout is reached.

    // Assumes oConn is an open connection with statistics enabled, and that
    // nRows, bMetric and stopWatch are defined earlier in the application.
    for (int tries = 1; tries <= nRows; tries += 100000)
    {
        stopWatch.Start();

        // Request a larger TOP on every iteration.
        C.SqlCommand command = new C.SqlCommand("SELECT TOP " + tries.ToString() + " * FROM [PerformanceVarcharNVarchar3]", oConn);
        command.CommandTimeout = 0; // 0 = no limit: wait until the query completes

        Console.WriteLine("------------------> Exec N#" + tries.ToString());
        command.ExecuteNonQuery();

        // Read the cumulative statistics gathered on this connection.
        IDictionary currentStatistics = oConn.RetrieveStatistics();
        if (bMetric)
        {
            Console.WriteLine("ID Connection: " + oConn.ClientConnectionId.ToString());
            Console.WriteLine("BytesReceived: " + currentStatistics["BytesReceived"]);
            Console.WriteLine("BytesSent: " + currentStatistics["BytesSent"]);
            Console.WriteLine("SelectCount: " + currentStatistics["SelectCount"]);
            Console.WriteLine("SelectRows: " + currentStatistics["SelectRows"]);
            Console.WriteLine("ExecutionTime: " + currentStatistics["ExecutionTime"]);
            Console.WriteLine("Network Server time: " + currentStatistics["NetworkServerTime"]);
        }
    }

As this test shows, a query that runs against an external table uses the same command timeout that the application sets.
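To see a non-zero timeout in action, here is a minimal sketch; it is not the application used in this test. It assumes the Microsoft.Data.SqlClient package (the original code may use System.Data.SqlClient instead), a hypothetical SQL_CONNECTION_STRING environment variable, and an illustrative 30-second timeout. The class and helper names are made up for the example. SqlClient reports a client-side command timeout as a SqlException with error number -2, so the sketch handles that case explicitly.

```csharp
using System;
using System.Collections;
using Microsoft.Data.SqlClient; // assumption: the original may use System.Data.SqlClient

public static class ExternalTableTimeoutDemo
{
    // Hypothetical helper: builds a TOP query against the external table from the test.
    public static string BuildTopQuery(int rows) =>
        "SELECT TOP (" + rows + ") * FROM [PerformanceVarcharNVarchar3]";

    // SqlClient surfaces a client-side command timeout as error number -2.
    public static bool IsCommandTimeout(SqlException ex) => ex.Number == -2;

    public static void Main()
    {
        // Hypothetical: the connection string is supplied through an environment variable.
        string connectionString = Environment.GetEnvironmentVariable("SQL_CONNECTION_STRING");
        if (string.IsNullOrEmpty(connectionString))
        {
            Console.WriteLine("Set SQL_CONNECTION_STRING to run the demo.");
            return;
        }

        using (var conn = new SqlConnection(connectionString))
        {
            // Statistics gathering must be enabled for RetrieveStatistics() to report values.
            conn.StatisticsEnabled = true;
            conn.Open();

            using (var command = new SqlCommand(BuildTopQuery(100000), conn))
            {
                command.CommandTimeout = 30; // seconds (illustrative); 0 would mean no limit
                try
                {
                    command.ExecuteNonQuery();
                    IDictionary stats = conn.RetrieveStatistics();
                    Console.WriteLine("ExecutionTime: " + stats["ExecutionTime"]);
                }
                catch (SqlException ex) when (IsCommandTimeout(ex))
                {
                    Console.WriteLine("Command timed out after " + command.CommandTimeout + " seconds.");
                }
            }
        }
    }
}
```

If the external table query takes longer than the configured value, the catch block runs, which matches the observation that the application, not the external table, decides the timeout.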

Enjoy!
