MGDC for SharePoint FAQ: How can I estimate my Azure bill? Updated!

This article is contributed. See the original author and article here.

Introduction

When gathering SharePoint data through Microsoft Graph Data Connect, you are billed through Azure. As I write this blog, the price to pull 1,000 objects from Microsoft Graph Data Connect in the US is $0.75, plus the cost for Azure infrastructure like Azure Storage and Azure Synapse.

That is true for all datasets except the SharePoint Files dataset, which has a different billing rate. Because of its typically high volume, the SharePoint Files dataset is billed at $0.75 per 50,000 objects.
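To make the two billing rates concrete, here is a minimal Python sketch of the pull-cost arithmetic. It only encodes the US list prices quoted above. The dataset labels and the `pull_cost` helper are hypothetical names for illustration, not part of any Microsoft Graph Data Connect API.

```python
# Minimal sketch of the MGDC pull-cost arithmetic, using the US list prices
# quoted above (October 2024). Dataset labels are illustrative, not API names.

PRICE_PER_UNIT = 0.75  # USD per billing unit


def objects_per_unit(dataset: str) -> int:
    """SharePoint Files is billed per 50,000 objects; other datasets per 1,000."""
    return 50_000 if dataset == "Files" else 1_000


def pull_cost(dataset: str, objects: int) -> float:
    """Estimated USD cost of pulling `objects` objects from `dataset`."""
    return objects / objects_per_unit(dataset) * PRICE_PER_UNIT


print(pull_cost("Sites", 10_000))      # 7.5
print(pull_cost("Files", 50_000_000))  # 750.0
```

With these rates, the same 1,000 objects cost 50 times less in the Files dataset than in any other dataset.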

I wrote a blog about what counts as an object, but I frequently get questions about how to estimate the overall Azure bill for a specific Microsoft Graph Data Connect for SharePoint project. Let me try to clarify things…

Before we start, here are a few notes and disclaimers:

- These are estimates and your specific Azure bill will vary.

- Check the official Azure links provided. Rates may vary by country and over time.

- These are Azure pay-as-you-go list prices in the US as of October 2024.

- You may benefit from Azure discounts, like savings using a pre-paid plan.

How many objects?

To estimate the number of objects, you start by finding out the number of sites in the tenant. This should include all sites (not just active sites) in your tenant. You can find this number easily in the SharePoint Admin Center. That will be the number of objects in your SharePoint Sites dataset.

Finding the number of SharePoint Groups and SharePoint Permissions will require some estimation. I recently collected some telemetry and saw that, for a sample of large tenants, the average number of SharePoint Groups per site was around 31. The average number of SharePoint Permissions per site was around 61, and the average number of files per site was 2,874.
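Those averages can be turned into a first-cut object count. The sketch below assumes your tenant looks like the sampled large tenants (31 groups, 61 permissions and 2,874 files per site). `estimate_objects` is a hypothetical helper and the 10,000-site tenant is a made-up example; your real per-site numbers may be quite different.

```python
# Per-site averages from the telemetry quoted above (sample of large tenants).
# Your tenant's real averages may differ; treat these as starting assumptions.
AVG_PER_SITE = {"Groups": 31, "Permissions": 61, "Files": 2_874}


def estimate_objects(sites: int) -> dict:
    """Estimate full-pull object counts per dataset from the total site count."""
    counts = {"Sites": sites}  # one Sites object per site
    for dataset, average in AVG_PER_SITE.items():
        counts[dataset] = sites * average
    return counts


print(estimate_objects(10_000))
# {'Sites': 10000, 'Groups': 310000, 'Permissions': 610000, 'Files': 28740000}
```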

Delta pulls (gathering just what changed) will be smaller, but that also varies depending on how much collaboration happens in your tenant (in the Delta numbers below, I am estimating a 5% change for an average collaboration level).
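A delta pull can be approximated as a fraction of the full pull. This sketch uses the 5% change rate assumed above for average collaboration; the 610,000 permissions figure is a hypothetical 10,000-site tenant at 61 permissions per site.

```python
def delta_objects(full_objects: int, change_rate: float = 0.05) -> int:
    """Approximate a delta pull as a fraction of the full pull (5% by default)."""
    return round(full_objects * change_rate)


full_permissions = 610_000   # hypothetical: 10,000 sites x 61 permissions
delta = delta_objects(full_permissions)
print(delta)                 # 30500
print(delta / 1_000 * 0.75)  # 22.875 (USD at $0.75 per 1,000 objects)
```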

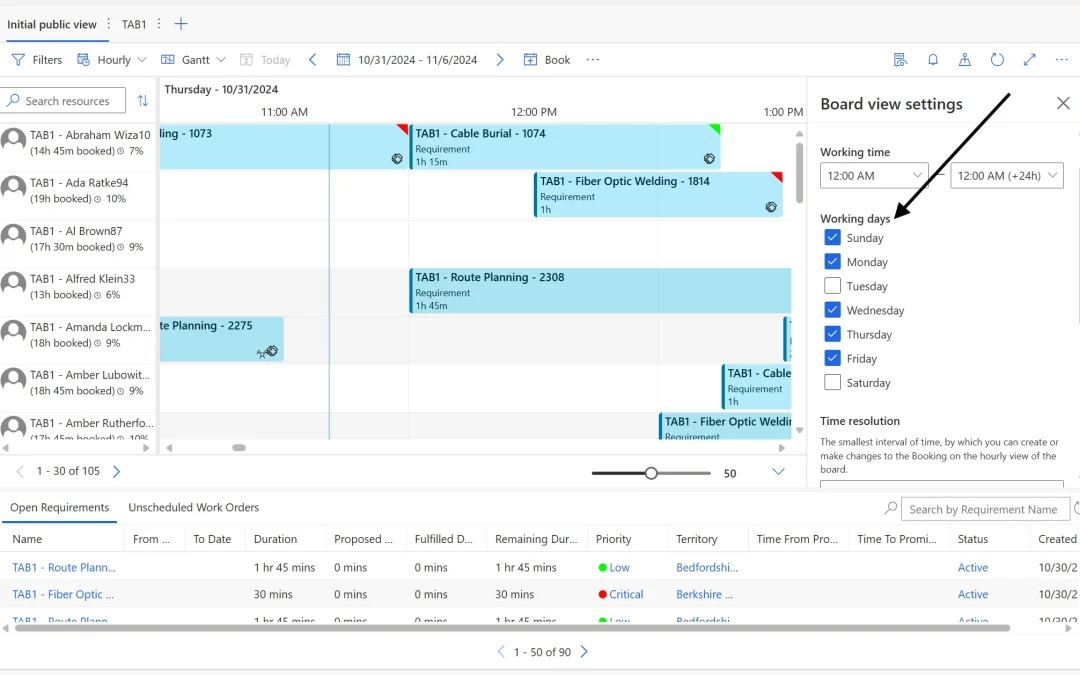

Here’s a table to help you estimate your Microsoft Graph Data Connect for SharePoint costs:

Notes for the table above:

* Higher collaboration level assumes twice the average in terms of groups, permissions and files.

** The Security scenario includes Sites, Groups and Permissions. The Capacity scenario includes Sites and Files.

*** Delta assumes 5% change for average collaboration and 10% change for high collaboration. These are on the high side for one week’s worth of changes. Your numbers will likely be smaller.
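To produce estimates like these for your own tenant, here is a rough Python sketch that follows the notes above: high collaboration doubles groups, permissions and files; Security covers Sites, Groups and Permissions; Capacity covers Sites and Files; and a weekly delta is 5% (average) or 10% (high) of the full pull. Applying the same change rate to every dataset and the 10,000-site tenant size are my own assumptions for illustration.

```python
# Rough MGDC pull-cost estimate per scenario, following the table notes above.
# Assumptions: per-site averages from the telemetry above, the same change rate
# for every dataset in a delta pull, and US list prices as of October 2024.

AVG_PER_SITE = {"Groups": 31, "Permissions": 61, "Files": 2_874}
SCENARIOS = {
    "Security": ["Sites", "Groups", "Permissions"],
    "Capacity": ["Sites", "Files"],
}
# level -> (multiplier for groups/permissions/files, weekly delta change rate)
COLLABORATION = {"Average": (1, 0.05), "High": (2, 0.10)}


def price(dataset: str, objects: float) -> float:
    """USD at $0.75 per 1,000 objects ($0.75 per 50,000 for SharePoint Files)."""
    per_unit = 50_000 if dataset == "Files" else 1_000
    return objects / per_unit * 0.75


def scenario_cost(scenario: str, level: str, sites: int) -> tuple:
    """Return (full pull cost, weekly delta cost) in USD for one scenario."""
    multiplier, change_rate = COLLABORATION[level]
    full = 0.0
    for dataset in SCENARIOS[scenario]:
        if dataset == "Sites":
            objects = sites  # one Sites object per site, not doubled
        else:
            objects = sites * AVG_PER_SITE[dataset] * multiplier
        full += price(dataset, objects)
    return full, full * change_rate


SITES = 10_000  # hypothetical tenant size
for scenario in SCENARIOS:
    for level in COLLABORATION:
        full, delta = scenario_cost(scenario, level, SITES)
        print(f"{scenario:<9} {level:<8} full ${full:>9,.2f}   delta ${delta:>8,.2f}")
```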

As you can see, smaller tenants with average collaboration will see costs below $10 for the smaller Sites dataset and below $1,000 for larger datasets like Permissions or Files.

If you want to estimate the number of SharePoint objects more precisely, there is an option to sample the dataset and get a total object count without pulling the entire dataset. For more information, see MGDC for SharePoint FAQ: How can I sample or estimate the number of objects in a dataset?

The official information about Microsoft Graph Data Connect pricing is at https://azure.microsoft.com/en-us/pricing/details/graph-data-connect/

How much storage?

The SharePoint information you get from Microsoft Graph Data Connect will be stored in an Azure Storage account. That also incurs some cost, but it’s usually small when compared to the Microsoft Graph Data Connect costs for data pulls. The storage will be proportional to the number of objects and to the size of these objects.

Again, this will vary depending on the amount of collaboration in the tenant. More sharing means more members in groups and more people in the permissions, which will result in more objects and also larger objects.

I also estimated object sizes and arrived at around 2KB per SharePoint Site object, 20KB per SharePoint Group object, 3KB per Permission object and 1KB per file object. There are several Azure Storage options, including Standard vs. Premium, LRS vs. GRS, v1 vs. v2 and Hot vs. Cool. For Microsoft Graph Data Connect, you can go with a Standard + LRS + v2 + Cool blob storage account, which costs $0.01 per GB per month.
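Here is a minimal sketch of the storage arithmetic using those object sizes and the $0.01 per GB per month rate. It assumes 1 GB = 1,000,000 KB and that you keep a single copy of each full dataset; the object counts are for a hypothetical 10,000-site tenant with average collaboration.

```python
# Approximate object sizes quoted above, in KB.
KB_PER_OBJECT = {"Sites": 2, "Groups": 20, "Permissions": 3, "Files": 1}
PRICE_PER_GB_MONTH = 0.01  # Standard + LRS + v2 + Cool, as quoted above


def storage_cost_per_month(objects: dict) -> float:
    """Monthly USD cost of storing one copy of each dataset (1 GB = 10**6 KB)."""
    total_kb = sum(KB_PER_OBJECT[name] * count for name, count in objects.items())
    return total_kb / 1_000_000 * PRICE_PER_GB_MONTH


# Hypothetical tenant: 10,000 sites at the per-site averages quoted earlier.
tenant = {"Sites": 10_000, "Groups": 310_000, "Permissions": 610_000, "Files": 28_740_000}
print(f"${storage_cost_per_month(tenant):.2f} per month")  # $0.37 per month
```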

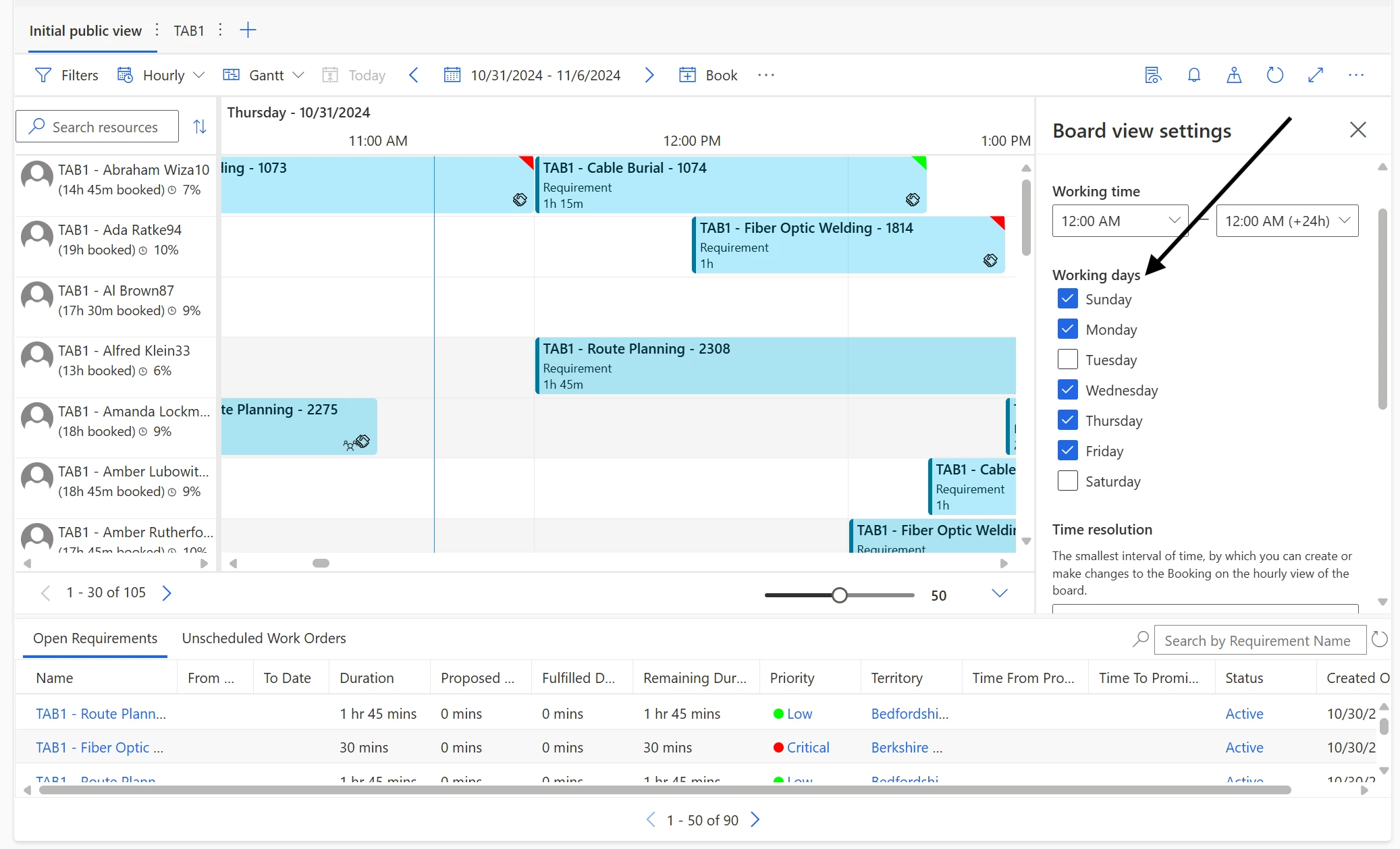

Here’s a table to help you estimate your Azure Storage costs:

The same notes from the previous table apply here.

As you can see, smaller tenants with average collaboration will see storage costs below $1,000/month, most of it going to storing the larger Files dataset. The cost of delta dataset storage is also fairly small, even for the largest tenants. There are additional costs per storage operation, such as reads and writes, but those are negligible at this scale (for instance, $0.065 per 10,000 writes and $0.005 per 10,000 reads).
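To see why the operation charges barely register, here is a quick sketch using the per-10,000 rates above. The operation counts are hypothetical: how many reads and writes a pipeline issues depends on how it writes and reads blobs, not directly on the number of objects.

```python
WRITE_PER_10K = 0.065  # USD per 10,000 write operations (quoted above)
READ_PER_10K = 0.005   # USD per 10,000 read operations (quoted above)


def operations_cost(writes: int, reads: int) -> float:
    """USD cost of storage operations at the per-10,000 rates quoted above."""
    return writes / 10_000 * WRITE_PER_10K + reads / 10_000 * READ_PER_10K


# Hypothetical month: 1,000 writes and 10,000 reads per day for 30 days.
print(f"${operations_cost(30_000, 300_000):.2f}")
```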

The official information about Azure Storage pricing is at https://azure.microsoft.com/en-us/pricing/details/storage/blobs/

What about Synapse?

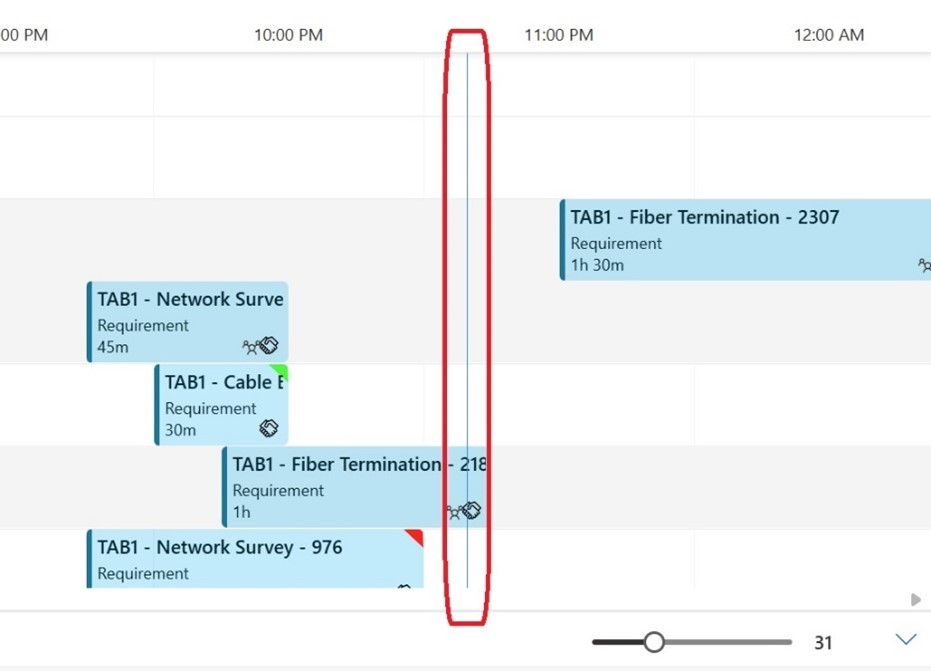

You will also typically use Azure Synapse to move the SharePoint data from Microsoft 365 to your Azure account. You could run a pipeline daily to get the information and do some basic processing, like computing deltas or creating aggregations.

Here are a few of the items that are billed for Azure Synapse when running Microsoft Graph Data Connect pipelines:

- Azure Hosted – Integration Runtime – Data Movement – $0.25/DIU-hour

- Azure Hosted – Integration Runtime – Pipeline Activity (Azure Hosted) – $0.005/hour

- Azure Hosted – Integration Runtime – Orchestration Activity Run – $1 per 1,000 runs

- vCore – $0.15 per vCore-hour

As with Azure Storage, the costs here are small. You will likely need one pipeline run per day and it will typically run in less than one hour for a small tenant. Large tenants might need a few hours per run to gather all their SharePoint datasets. You should expect less than $10/month for smaller tenants and less than $100/month for larger and/or more collaborative tenants.
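If you want to sanity-check the Synapse side, the sketch below adds up the meters listed above for a month of daily pipeline runs. The DIU count, run duration, activity runs per pipeline and vCore usage are all hypothetical inputs. Which meters apply depends on how your pipeline is built, and the sketch ignores any minimum charges or rounding rules in the official pricing.

```python
# Rates quoted above for Azure Synapse (US list prices, October 2024).
DIU_HOUR = 0.25                    # Data Movement, per DIU-hour
ACTIVITY_HOUR = 0.005              # Pipeline Activity (Azure Hosted), per hour
ORCHESTRATION_PER_RUN = 1 / 1_000  # $1 per 1,000 activity runs
VCORE_HOUR = 0.15                  # per vCore-hour


def synapse_monthly_cost(hours_per_run: float, dius: int, activity_runs: int,
                         vcores: int = 0, runs_per_day: int = 1, days: int = 30) -> float:
    """Rough monthly USD estimate for a pipeline that runs every day."""
    runs = runs_per_day * days
    hours = runs * hours_per_run
    movement = hours * dius * DIU_HOUR
    activity = hours * ACTIVITY_HOUR
    orchestration = runs * activity_runs * ORCHESTRATION_PER_RUN
    compute = hours * vcores * VCORE_HOUR  # only if your pipeline uses vCores
    return movement + activity + orchestration + compute


# Hypothetical small tenant: one 15-minute run per day, 4 DIUs, 5 activity runs.
print(f"${synapse_monthly_cost(0.25, 4, 5):.2f} per month")
```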

The official information about Azure Synapse pricing is at https://azure.microsoft.com/en-us/pricing/details/synapse-analytics/

Closing notes

These are the main meters in Azure to get you started with costs related to Microsoft Graph Data Connect for SharePoint. I suggest experimenting with a small test/dev tenant to get familiar with Azure billing.

For more information about Microsoft Graph Data Connect for SharePoint, see the links at https://aka.ms/SharePointData.
