by Contributed | Oct 26, 2021 | Dynamics 365, Microsoft 365, Technology

This article is contributed. See the original author and article here.

Today, we are excited to announce the general availability of tax calculation for Microsoft Dynamics 365.

Over the past three decades, technology has made the world smaller. The steady drive of globalization and digitalization has pushed through significant headwinds (such as market protectionism, global recessions, and, most recently, a global pandemic) to change the way we live and conduct business. Now, despite vast geographic distances, it is relatively simple to connect, communicate, and trade instantly with almost anyone. This has set the stage for businesses of all sizes and industries to expand globally in pursuit of new markets, suppliers, talent, and revenue streams.

As businesses pursue global expansion, they are challenged to keep pace with a myriad of ever-evolving country-specific laws and frequently changing legal requirements from local, state, and national tax authorities. For many years, the only option available for business leaders looking to ensure tax compliance globally was to hire enough full-time finance and tax professionals to monitor policy changes and align company processes accordingly. Indeed, this has been the standard solution for some time now.

Microsoft Dynamics 365 is changing this situation for companies with intelligent and timely globalization solutions, such as tax calculation. Tax calculation not only helps businesses unify their tax-relevant data, but it also leverages automation to make their tax calculation processes more efficient. At the same time, it assists in minimizing compliance risk by reducing opportunities to introduce errors into the process. In these ways, our new tax calculation service is simplifying and improving tax compliance for global businesses.

Learn more in our recent blog: Reduce complexity across global operations with Dynamics 365

Tax calculation service overview

Tax calculation is a low-code, hyper-scalable, multitenant, microservice-based tax engine that enables organizations to automate and simplify the tax determination and calculation process. It is a standalone solution built on Microsoft Azure that enhances the tax determination and calculation capabilities of Dynamics 365 applications through a flexible and fully configurable solution.

As tax calculation becomes generally available, it will integrate with Dynamics 365 Finance and Dynamics 365 Supply Chain Management, and we plan to integrate with more first- and third-party applications in the near future. Additionally, it is fully backward compatible with the existing tax engine in Dynamics 365 Finance and Dynamics 365 Supply Chain Management and can be deployed as a new feature in the Feature Management workspace. Tax calculation can also be enabled at more granular levels, such as by legal entity or business process.

Tax determination

One challenge for organizations today is determining the appropriate tax for their business transactions. This challenge becomes more difficult as organizations add new regions where they trade and as the volume and types of transactions they process grow.

Tax calculation configures and determines tax codes, rates, and deductions in a flexible, low-code way, based on any combination of the fields on a taxable document, such as the ship-from or ship-to address, item category, or nature of the counterparty, which are typically found on purchase orders or invoices.
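To make "determination based on any combination of fields" concrete, here is a minimal Python sketch of a rule matrix in which the most specific matching rule wins. The field names, the `*` wildcard convention, the tax code names, and the "most specific rule wins" policy are all hypothetical; the actual service is configured through its low-code designer, not through code like this.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

WILDCARD = "*"  # hypothetical convention: "*" in a rule matches any value
FIELDS = ("ship_from", "ship_to", "item_category", "counterparty_type")


@dataclass(frozen=True)
class Rule:
    ship_from: str
    ship_to: str
    item_category: str
    counterparty_type: str
    tax_codes: Tuple[str, ...]

    def matches(self, doc: dict) -> bool:
        # Every non-wildcard field must equal the value on the taxable document.
        return all(getattr(self, f) in (WILDCARD, doc.get(f)) for f in FIELDS)

    def specificity(self) -> int:
        # The more concrete (non-wildcard) fields a rule has, the more specific it is.
        return sum(getattr(self, f) != WILDCARD for f in FIELDS)


def determine_tax_codes(rules: list, doc: dict) -> Optional[Tuple[str, ...]]:
    """Return the tax codes of the most specific matching rule, or None if nothing matches."""
    candidates = [r for r in rules if r.matches(doc)]
    if not candidates:
        return None
    return max(candidates, key=lambda r: r.specificity()).tax_codes


# Hypothetical rules for a German legal entity.
RULES = [
    Rule("DE", "DE", WILDCARD, WILDCARD, ("VAT_STANDARD",)),
    Rule("DE", "DE", "BOOKS", WILDCARD, ("VAT_REDUCED",)),
    Rule("DE", "FR", WILDCARD, "BUSINESS", ("EU_EXEMPT",)),
]

print(determine_tax_codes(RULES, {"ship_from": "DE", "ship_to": "DE",
                                  "item_category": "BOOKS", "counterparty_type": "CONSUMER"}))
# prints ('VAT_REDUCED',)
```

Preferring the most specific rule lets a broad default (any item shipped within Germany) coexist with narrower exceptions (books) without the exceptions having to be listed first.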

Tax calculation

Another challenge that companies face, closely related to determination, is calculating the tax due on their transactions. Again, this challenge becomes more difficult as the business scales and operates in more distinct tax regions, each with its own authorities and requirements.

Our tax calculation service helps businesses meet this challenge as well, offering a low-code experience for configuring and executing the complex tax calculation formulas and conditions required by tax regulators, such as tax calculated on a margin or tax calculated on top of other tax codes.
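The two formula types mentioned above can be illustrated with a short worked example. This Python sketch assumes simple percentage rates, a tax-exclusive margin, and half-up rounding to cents; real margin schemes and rounding rules vary by jurisdiction, and the function names are hypothetical rather than part of the service.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")


def round_money(amount: Decimal) -> Decimal:
    # Round to whole cents, ties away from zero (a common but not universal rule).
    return amount.quantize(CENT, rounding=ROUND_HALF_UP)


def tax_on_margin(sale_price: Decimal, purchase_price: Decimal, rate: Decimal) -> Decimal:
    """Apply the rate to the margin (sale minus purchase price) instead of the full sale price."""
    margin = max(sale_price - purchase_price, Decimal("0"))
    return round_money(margin * rate)


def tax_on_tax(net: Decimal, base_rate: Decimal, second_rate: Decimal) -> tuple:
    """Calculate a second tax whose base includes the first tax (tax on top of another tax code)."""
    first = round_money(net * base_rate)
    second = round_money((net + first) * second_rate)
    return first, second


print(tax_on_margin(Decimal("1200"), Decimal("1000"), Decimal("0.20")))  # 40.00
print(tax_on_tax(Decimal("100"), Decimal("0.10"), Decimal("0.05")))
# (Decimal('10.00'), Decimal('5.50'))
```

`Decimal` is used instead of `float` so that amounts like 0.10 are represented exactly and rounding happens only where the tax rule says it should.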

Multiple tax registration numbers

Organizations also face challenges when they have multiple tax registration numbers that must be rolled up or reconciled to one central legal entity or party. When businesses add multiple tax registration numbers, the complexity of tax determination and calculation multiplies in step.

Tax calculation enables organizations to support multiple tax registration numbers under a single party, such as a legal entity, customer, or vendor. It also supports automatic determination of the correct tax registration number on taxable transactions like sales orders and purchase orders. And it works across various workflow scenarios, including transfer pricing, consignment warehouses, and the low-risk distributor model.

In conjunction with the new tax calculation service, enhanced Tax Reporting provides new capabilities to run country-specific tax regulatory reports, such as the VAT Declaration, EU Sales List, and Intrastat, from a single legal entity. In business scenarios with multiple tax registrations, the reports select only the data relevant to transactions under a specific country's tax registration and produce results in the legally required form, either an electronic file or a report layout, for filing taxes in the country of registration.
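As a rough illustration of both ideas, picking a registration number for each transaction and then reporting only the transactions booked under one registration, here is a minimal Python sketch. The selection rule it uses (the legal entity's registration in the country the goods ship from) is a hypothetical simplification, and the registration numbers are placeholders; real determination depends on the scenario and is configured in the service.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Dict, List, Tuple


@dataclass
class Party:
    name: str
    registrations: Dict[str, str]  # country code -> tax registration number


@dataclass
class Transaction:
    ship_from: str
    net_amount: Decimal
    tax_amount: Decimal
    registration: str = ""


def assign_registration(entity: Party, txn: Transaction) -> Transaction:
    # Hypothetical rule: use the entity's registration in the country the goods ship from.
    number = entity.registrations.get(txn.ship_from)
    if number is None:
        raise ValueError(f"{entity.name} has no tax registration in {txn.ship_from}")
    txn.registration = number
    return txn


def totals_for_registration(registration: str, txns: List[Transaction]) -> Tuple[Decimal, Decimal]:
    """Sum net and tax amounts for the transactions booked under one registration only."""
    selected = [t for t in txns if t.registration == registration]
    net = sum((t.net_amount for t in selected), Decimal("0"))
    tax = sum((t.tax_amount for t in selected), Decimal("0"))
    return net, tax


# Hypothetical legal entity registered in two countries.
contoso = Party("Contoso", {"DE": "DE-REG-001", "FR": "FR-REG-001"})
txns = [
    assign_registration(contoso, Transaction("DE", Decimal("100"), Decimal("19"))),
    assign_registration(contoso, Transaction("FR", Decimal("200"), Decimal("40"))),
    assign_registration(contoso, Transaction("DE", Decimal("50"), Decimal("9.50"))),
]
print(totals_for_registration("DE-REG-001", txns))  # (Decimal('150'), Decimal('28.50'))
```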

Learn more about the feature enhancements of Tax Reporting and availability for specific countries and regions in our release notes and TechTalk.

Tax on transfer orders

A final challenge that businesses face when confronting the complexity and risk of tax compliance in foreign markets is determining and calculating the tax on transfer orders. This situation is common, for example when a business has a regional manufacturing facility or central warehouse that supplies storefronts scattered across multiple countries.

With tax calculation, businesses can apply tax on transfer orders to support the EU rule “Exempt intra-EU supply according to article 138 of the Directive 2006/112/EC” for intra-EU supply of goods.
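To show the kind of condition such a transfer-order rule evaluates, here is a deliberately simplified Python sketch. It assumes a transfer qualifies when goods move between two different EU member states and the company holds a VAT registration in the destination country; this is a hypothetical approximation for illustration, not a complete encoding of article 138 or of how the service decides.

```python
from typing import AbstractSet

# ISO 3166-1 alpha-2 codes of the 27 EU member states (as of 2021).
EU_MEMBER_STATES = frozenset({
    "AT", "BE", "BG", "HR", "CY", "CZ", "DK", "EE", "FI", "FR", "DE", "GR", "HU", "IE",
    "IT", "LV", "LT", "LU", "MT", "NL", "PL", "PT", "RO", "SK", "SI", "ES", "SE",
})


def is_exempt_intra_eu_transfer(ship_from: str, ship_to: str,
                                registered_in: AbstractSet[str]) -> bool:
    """Simplified, hypothetical check for an exempt intra-EU transfer of own goods.

    Assumes the transfer qualifies when goods move between two different member
    states and the company holds a VAT registration in the destination country.
    """
    return (
        ship_from in EU_MEMBER_STATES
        and ship_to in EU_MEMBER_STATES
        and ship_from != ship_to
        and ship_to in registered_in
    )


print(is_exempt_intra_eu_transfer("DE", "FR", {"DE", "FR"}))  # True
print(is_exempt_intra_eu_transfer("DE", "DE", {"DE"}))        # False: domestic transfer
```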

Availability

Tax calculation is deployed in the following Azure geographies.

- Asia Pacific

- Australia

- Canada

- Europe

- Japan

- United Kingdom

- United States

Learn more about the features of tax calculation service in our tax calculation overview, and check out our recent TechTalk on tax calculation.

Learn more about what some of our partners are saying about the new tax calculation capabilities of Dynamics 365:

Next steps

If you are an existing Dynamics 365 user and would like to start today with tax calculation and Tax Reporting, check out our Get started with tax calculation documentation. Or, if you would like to see how Dynamics 365 Finance can benefit your company and enable smoother global expansion with microservice solutions such as tax calculation and Tax Reporting, we invite you to get started with a free trial today.

The post Tax calculation enhancements are now available for Dynamics 365 appeared first on Microsoft Dynamics 365 Blog.

Brought to you by Dr. Ware, Microsoft Office 365 Silver Partner, Charleston SC.

by Contributed | Oct 25, 2021 | Technology

This article is contributed. See the original author and article here.

Attackers are constantly evolving their phishing techniques with sophisticated campaigns designed to subvert email protection systems like Microsoft Defender for Office 365 and expose your security perimeter. For this reason, it’s critical that SecOps professionals empower employees to be hypervigilant about such threats and report them as soon as they land in their inboxes.

Microsoft Defender for Office 365 has a fully automated detection and remediation system for emails, URLs, and attachments that are reported by your employees. User and admin submissions are critical positive reinforcement signals that help our machine learning-based detection systems review, triage, rapidly learn from, and mitigate attacks. The submission pipeline is tightly integrated with automated mail-flow filters that protect your employees from similar threats.

You can learn how to report an email to Microsoft here, and how to manage your submissions here.

Once you report an email through the submission process, our system follows a set of actions. If your organization has compliance restrictions that prevent users from reporting sensitive emails outside your infrastructure, we recommend using the custom mailbox reporting option detailed here. This ensures that user-reported emails go to your custom mailbox. Admins who are granted privileges to review those emails can then report them back to Microsoft.

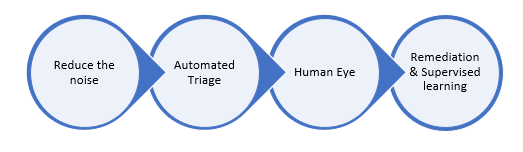

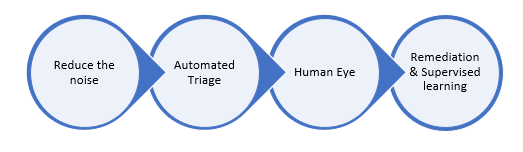

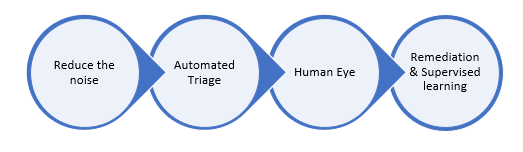

Figure 1: Behind-the-scenes post-submission process flow.

- Reduce the Noise: We want to reduce noise in the submissions so that we send only clean signals to our automated triage system.

  - Simulation: Simulated messages from Attack Simulation Training or from any of your third-party simulation vendors are filtered out.

  - Policy overrides: Messages that were misclassified because of your tenant’s policies, or sometimes because of end users’ policies, are flagged to you so that you can act on your end. In override scenarios, we also honor your policies where they recommend detection improvements.

  - Email Auth Check: We validate whether email authentication passed or failed when the email was delivered.

You can learn about configuring third-party simulations here and learn about our secure by default strategy here.
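The noise-reduction step above can be pictured as a pre-filter that routes each submission before triage. This Python sketch is a hypothetical model of that routing, not Microsoft's implementation; the `Submission` fields and route names are invented for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, Tuple


class Route(Enum):
    DROP_SIMULATION = "drop: simulated message"
    NOTIFY_POLICY_OVERRIDE = "notify tenant: policy override"
    AUTOMATED_TRIAGE = "send to automated triage"


@dataclass
class Submission:
    message_id: str
    is_simulation: bool         # from Attack Simulation Training or a configured third-party vendor
    overridden_by_policy: bool  # delivery decided by a tenant or end-user policy
    auth_passed: bool           # email authentication result recorded at delivery


def pre_filter(sub: Submission) -> Tuple[Route, Dict[str, bool]]:
    """Route one submission and carry the authentication result along as an extra signal."""
    signals = {"auth_passed": sub.auth_passed}
    if sub.is_simulation:
        return Route.DROP_SIMULATION, signals
    if sub.overridden_by_policy:
        return Route.NOTIFY_POLICY_OVERRIDE, signals
    return Route.AUTOMATED_TRIAGE, signals


route, signals = pre_filter(Submission("msg-1", False, False, auth_passed=False))
print(route, signals)  # Route.AUTOMATED_TRIAGE {'auth_passed': False}
```

Checking simulations first means training campaigns never reach the triage models, which is the "clean signals" goal described above.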

- Automated Triage: We rerun our detection filters to determine whether our systems have adapted between the time of delivery and your submission.

  - All of our machine learning-based email filters are run on your submissions to reclassify them if the models have learned something new since delivery.

  - For entities such as URLs and attachments in your submitted emails, we detonate them again in our advanced sandbox environment to uncover whether they have been weaponized, and assign verdicts accordingly.

  - We run a series of machine learning models exclusively on reported submissions to identify and cluster patterns, and to reclassify submissions when anomalies observed in similar submissions across our global customer base change the verdict.

  - We generate automated alerts on user-reported phishing submissions by default and enable AIR (Automated Investigation and Response) so that these submissions carry the verdicts from our automated triage process. Please note that AIR is only available in MDO Plan 2/E5/A5.

You can learn more about our AIR capabilities here.

- Human Eye: Because the weaponization of phishing entities is dynamic, attackers constantly use multiple evasion techniques to bypass filters. That’s why a hybrid approach, pairing human experts with machine learning, is the ideal way to stay on top of advanced threats and weaponization tricks.

  - We prioritize submissions based on factors such as phish severity, malware indicators, false positives, high-volume anomalies, advanced pattern indicators, and whether the submission came from an admin or a user.

  - Expert graders and analysts are well trained in phish detection techniques. They dissect submissions using various indicators of compromise (IOCs) and investigate all email entities, such as headers, URLs, attachments, and sender reputation, in depth to assign the right verdict.

  - Graders’ informed decisions become high-quality verdict signals that are fed to the machine learning models described in the Automated Triage step for continuous reinforcement learning.

  - Human-graded verdicts always take priority and can override the verdict given by the automated triage process.
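The prioritization and override rules in the Human Eye step can be modeled as a priority queue plus a simple precedence rule. In this Python sketch the factor names, weights, and admin bonus are invented for illustration; Microsoft does not publish how these factors are actually weighted.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from typing import List, Optional, Set, Tuple

# Hypothetical weights for the prioritization factors listed above.
FACTOR_WEIGHTS = {
    "phish_severity": 5,
    "malware_indicator": 4,
    "false_positive": 3,
    "volume_anomaly": 2,
    "pattern_indicator": 2,
}
ADMIN_BONUS = 3  # hypothetical: admin submissions get a boost


def priority(factors: Set[str], from_admin: bool) -> int:
    return sum(FACTOR_WEIGHTS.get(f, 0) for f in factors) + (ADMIN_BONUS if from_admin else 0)


@dataclass(order=True)
class _Entry:
    sort_key: Tuple[int, int]               # (negated priority, arrival order)
    submission_id: str = field(compare=False)


class GradingQueue:
    """Max-priority queue for graders; ties are served in arrival order."""

    def __init__(self) -> None:
        self._heap: List[_Entry] = []
        self._counter = itertools.count()

    def push(self, submission_id: str, factors: Set[str], from_admin: bool) -> None:
        # heapq is a min-heap, so the priority is negated to pop the highest score first.
        key = (-priority(factors, from_admin), next(self._counter))
        heapq.heappush(self._heap, _Entry(key, submission_id))

    def pop(self) -> str:
        return heapq.heappop(self._heap).submission_id


def final_verdict(original: str, automated: Optional[str], human: Optional[str]) -> str:
    """A human-graded verdict overrides automated triage, which overrides the delivery verdict."""
    if human is not None:
        return human
    if automated is not None:
        return automated
    return original
```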

Human graders review anonymized email header and body metadata and hashed entities in a highly compliant environment that meets all the privacy guidelines from our Trust Center. You can learn more about Microsoft Trust Center here. You can also learn more about data storage here, and learn more about our data retention policies here.

- Remediation and supervised learning: The new verdict, from either automated triage or human grader review, is used both for rapid remediation and to retrain our ML filters faster.

  - We apply the graded labels from the automated triage system or human graders to the submitted message and to all related clusters that match the submitted pattern.

  - We move the messages from their current delivery location to the new location for all impacted end users. For example, the system moves messages from the Inbox to Quarantine for ‘phish’ verdicts, and from Quarantine or Junk to the Inbox for ‘good’ verdicts, using our Zero-hour Auto Purge (ZAP) platform.

  - We also identify IOCs from the submitted clusters and add them to our reputation data to initiate instant mitigation against phish and malware campaigns.

  - Any new incoming mail matching a similar pattern or IOC is automatically classified with the new verdict, reducing the triage load on your SecOps team.

You can learn more about ZAP here.
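The folder moves described in the remediation step follow a small rule table. This Python sketch models only the two examples given above (‘phish’ to Quarantine, ‘good’ out of Junk or Quarantine); the `Message` type and cluster IDs are hypothetical, and real ZAP behavior depends on your policies.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Message:
    message_id: str
    cluster_id: str
    folder: str  # "Inbox", "Junk", or "Quarantine"


def target_folder(verdict: str, current: str) -> str:
    # 'phish' moves mail to Quarantine; 'good' releases it from Junk or Quarantine to the Inbox.
    if verdict == "phish":
        return "Quarantine"
    if verdict == "good" and current in ("Junk", "Quarantine"):
        return "Inbox"
    return current


def remediate(mailboxes: List[Message], cluster_id: str, verdict: str) -> List[Tuple[str, str, str]]:
    """Apply a verdict to every message in one cluster and return the (id, from, to) moves made."""
    moves = []
    for msg in mailboxes:
        if msg.cluster_id != cluster_id:
            continue
        new_folder = target_folder(verdict, msg.folder)
        if new_folder != msg.folder:
            moves.append((msg.message_id, msg.folder, new_folder))
            msg.folder = new_folder
    return moves
```

Applying the verdict per cluster rather than per message is what lets one submission clean up every similar message across all impacted mailboxes.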

The automated User Submission workflow is designed with state-of-the-art technology to help organizations mitigate false-negative and false-positive risks faster and reduce MTTR (mean time to respond) for their SOC teams. It is also important for organizations to strengthen their security posture by training the employees who actively participate in this phish-submission feedback loop. Attack Simulation Training is included with Microsoft Defender for Office 365 P2 and E5 licenses and provides the simulation and training capabilities needed to reduce phish vulnerabilities at the user level. Learn more and get started using Attack Simulation Training here.

Do you have questions or feedback about Microsoft Defender for Office 365? Engage with the community and Microsoft experts in the Defender for Office 365 forum.

by Contributed | Oct 23, 2021 | Technology

This article is contributed. See the original author and article here.

The Story (Michael)

Just before Southcoast Summit 2021 got started, the organizers hosted the Automate Everything – SS2021 Hackathon, in which every solution revolved around Flic buttons. Wait, you don’t know what a Flic button is? It’s basically a wireless smart button that lets you control devices, apps, and services. Push it once, push it twice, or hold it, and let each variant trigger a different action. There are many use cases, in business as well as in personal life, in which Flic buttons make your life easier. Check out the Flic homepage to learn more.

Meet Petrol Push, a modern-day organization with a clear mission: save kittens. There are hundreds of kittens all over Britain that get stuck in trees, get lost in the urban jungle, or need help in some other way. Luckily, Petrol Push maintains a huge fleet of volunteers who rescue kittens every day.

The challenge

As you may know, there is a petrol shortage right now, and of course you may wonder: how can Petrol Push keep up its noble mission? Flic buttons and Microsoft Power Platform gave them the ability to come up with a solution that helps all their volunteers in their day-to-day work.

The solution

Every Petrol Push car has a Flic button installed, and whenever Petrol Push volunteers pass a gas station, they can indicate with the push of a button whether the gas station has fuel available or not. This information is stored on a map, so every Petrol Push employee knows where fuel is available and where it’s not. This way, the volunteers can keep their focus on their mission. They don’t need to drive around searching for fuel or worry about where to fill up. The community of volunteers takes care of that.

Petrol Push cares deeply about its volunteers and doesn’t want to put them in danger in any way. That’s why this solution comes with a little extra. Petrol Push workers don’t have to check the map over and over again to see whether anything has changed. When one of the volunteers finds a gas station where fuel is available, they push the button and the fleet is notified with a song. That way, the drivers know when to check the map for updates.

In times like these, our drivers may get into trouble themselves: they might run out of gas, get a flat tire, or run into something else. Once again, Petrol Push cares deeply about its volunteers, so the Flic button also lets drivers call other volunteers on the road for help. Again, this is signaled with a song, so no other driver needs to check their phone. The driver’s position is shown on the map, though, so that help can be arranged quickly. Only the supervisor gets an additional text message with further information.

Note: you probably know by now, but this use case shows how geographic location can be combined with notifications that are not text-based. In this way, we want to draw attention to how versatile Power Platform solutions are, and we also want to think about people who can only use devices in a limited way. Please feel free to customize this use case to your needs. And always remember: we are only strong as a community, so let’s be inclusive.

Now, let’s dive into the details and see how this solution actually works.

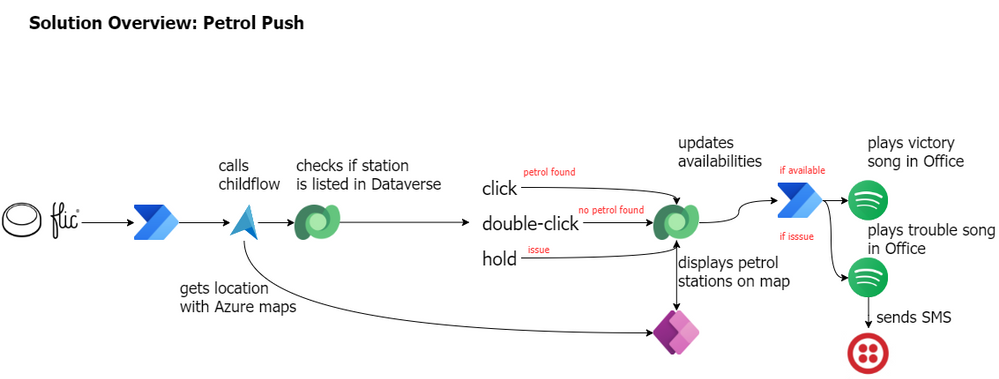

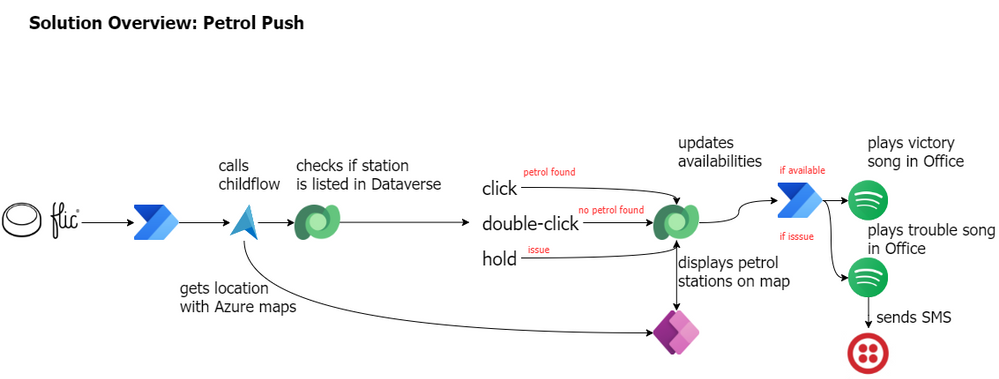

The Flic and the flow (Tomasz)

At a high level, the flow gets the location of the driver who triggered it, then looks up the details of the closest petrol station using the Azure Maps API, and finally saves the station’s data, together with its status, to the database so that it can later be displayed in the app with a pin of the appropriate color. In detail, though, it’s much more interesting.

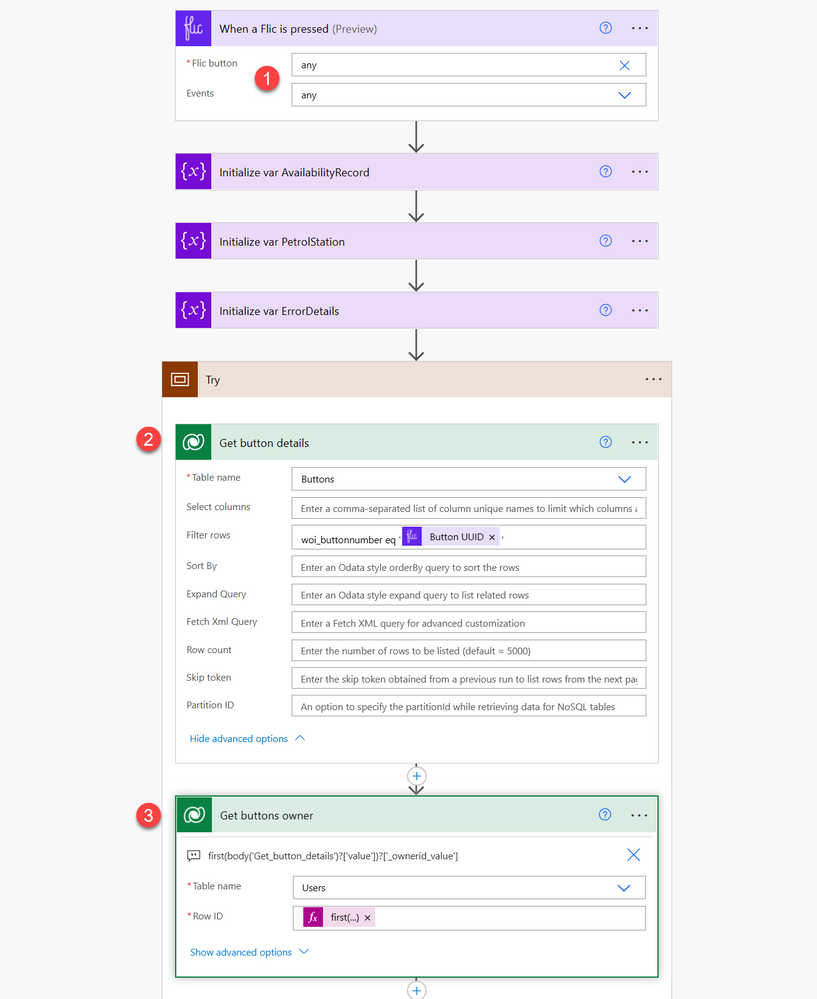

The flow can be triggered by any driver (1), and for any Flic event; the events are described later. Next, the bot looks up the details of the button itself (2) to get its owner (3). This information is later used to record data along with information about the driver.

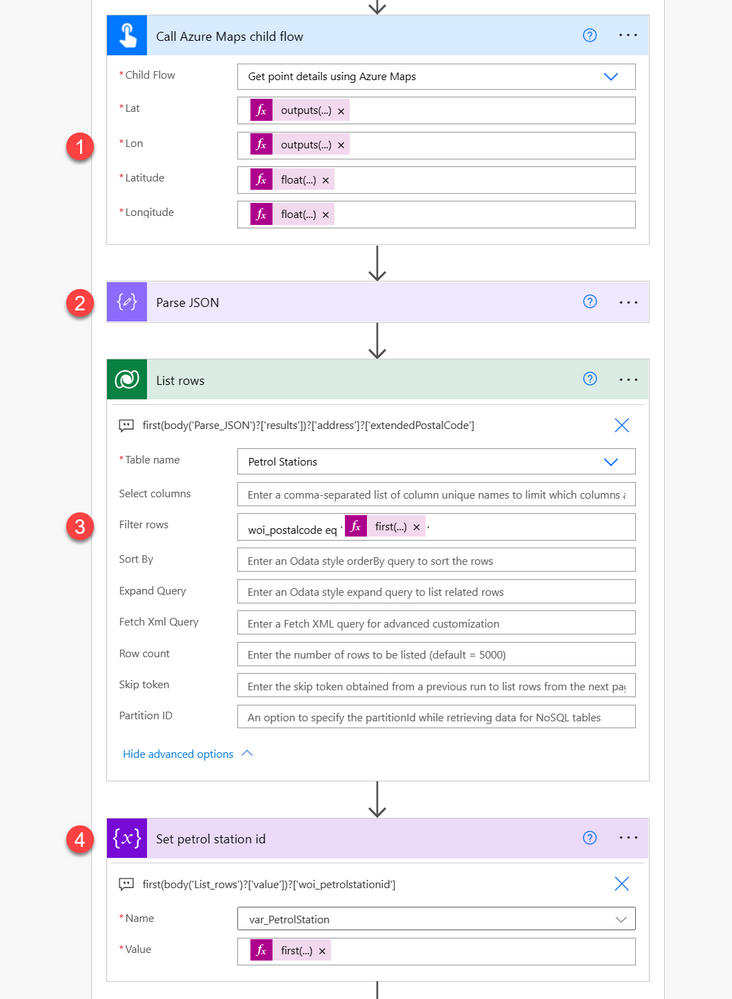

Next, the flow calls the Azure Maps custom connector via a dedicated child flow (1), passing the latitude and longitude of the driver’s location. The coordinates come from the GPS of the driver’s phone, which is paired with the Flic button. Ideally, this would be done with the action directly in the parent flow; however, for some unknown reason we ran into an issue when saving the flow with the action inside, so we decided to move it into a child flow. Don’t judge :)

The data returned by the child flow, which represents details about the nearest petrol station, is then parsed (2).

Finally, the bot filters the existing stations’ data by postal code to find a match (3). This is done using the following OData expression:

`woi_postalcode eq '@{first(body('Parse_JSON')?['results'])?['address']?['extendedPostalCode']}'`

It then saves the matching row ID into a variable (4). Naturally, if there’s no station for the given postal code, the variable will be empty. **We also made an assumption**: that there can be only one station per postal code :)
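For readers who want to see what that expression evaluates to, here is a minimal Python sketch that builds the same filter string from a parsed Azure Maps response. It assumes the response shape used in the flow (`results[0].address.extendedPostalCode`) and treats a missing value as an empty string. It also doubles single quotes, which is how OData escapes them in string literals; the original flow expression does not do this.

```python
def build_station_filter(parse_json_body: dict) -> str:
    """Build the Dataverse filter used to look up an existing station by postal code.

    Mirrors first(body('Parse_JSON')?['results'])?['address']?['extendedPostalCode'],
    treating a missing result or field as an empty string.
    """
    results = parse_json_body.get("results") or []
    first_result = results[0] if results else {}
    postal_code = (first_result.get("address") or {}).get("extendedPostalCode") or ""
    # OData string literals escape a single quote by doubling it (an addition to the original flow).
    escaped = postal_code.replace("'", "''")
    return f"woi_postalcode eq '{escaped}'"


sample = {"results": [{"address": {"extendedPostalCode": "AB1 2CD"}}]}  # hypothetical data
print(build_station_filter(sample))  # woi_postalcode eq 'AB1 2CD'
```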

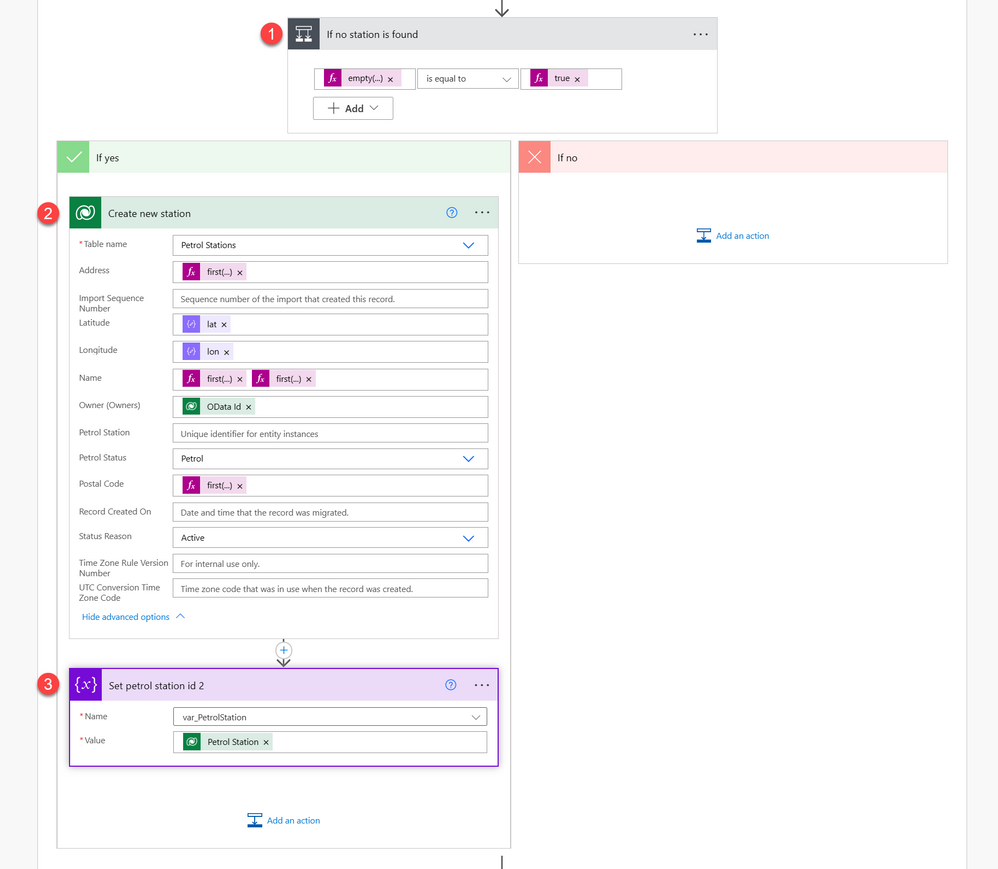

The process now checks whether the station’s row ID is empty (1). If it is, the station has to be created. The new record (2) takes all the details returned from the Azure Maps API, such as the full address, station name, latitude and longitude, information about the driver who reported it, and finally the postal code. The row ID of the created station is then saved into the variable.
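The lookup-then-create logic is a classic get-or-create. This Python sketch uses an in-memory dictionary as a stand-in for the Dataverse table; the column names, row ID format, and Azure Maps result shape (`poi.name`, `address.freeformAddress`, `position.lat`/`lon`) are assumptions for illustration.

```python
import itertools
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple


@dataclass
class StationTable:
    """In-memory stand-in for the Dataverse stations table (hypothetical column names)."""
    rows: Dict[str, dict] = field(default_factory=dict)
    _ids: itertools.count = field(default_factory=itertools.count)

    def find_by_postal_code(self, postal_code: str) -> Optional[str]:
        # Same assumption as the flow: at most one station per postal code.
        for row_id, row in self.rows.items():
            if row["postal_code"] == postal_code:
                return row_id
        return None

    def create(self, record: dict) -> str:
        row_id = f"station-{next(self._ids)}"
        self.rows[row_id] = record
        return row_id


def get_or_create_station(table: StationTable, poi_result: dict, driver: str) -> Tuple[str, bool]:
    """Return (row_id, created) for the station described by one Azure Maps result."""
    address = poi_result.get("address", {})
    postal_code = address.get("extendedPostalCode", "")
    row_id = table.find_by_postal_code(postal_code)
    if row_id is not None:
        return row_id, False
    record = {
        "name": poi_result.get("poi", {}).get("name", ""),
        "address": address.get("freeformAddress", ""),
        "latitude": poi_result.get("position", {}).get("lat"),
        "longitude": poi_result.get("position", {}).get("lon"),
        "reported_by": driver,
        "postal_code": postal_code,
    }
    return table.create(record), True
```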

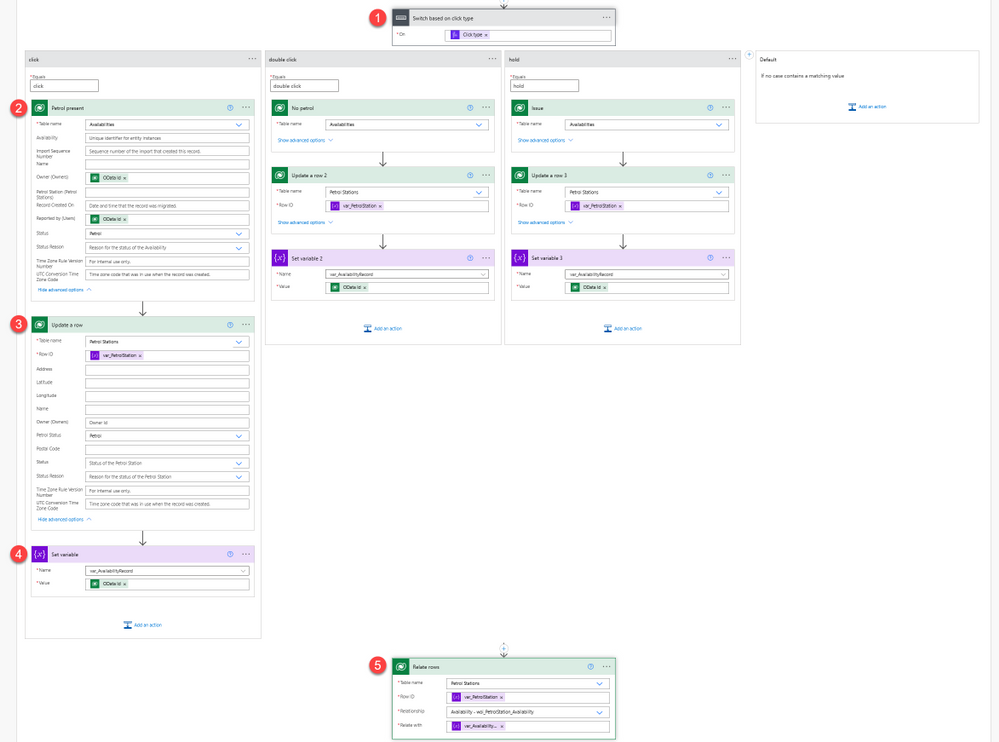

Next, the process checks which kind of action occurred on the Flic button. There are three possible actions:

– Single click: there is petrol at the station,

– Double click: there is no petrol at the station,

– Long press: there is an issue and the driver requires assistance.

To check which action occurred, we use a Switch action (1). Each branch executes the same actions, just with different statuses. First, the bot creates an entry in the Activities table (2) to record the latest status (one of Petrol, No petrol, or Issue) for the station, together with the details of the driver who reported it.

After that, it updates the status (again to one of Petrol, No petrol, or Issue) of the station record itself (3). Then it saves the OData ID of the created activity record into a variable. Finally, it relates the records (4): the petrol station and the created activity record.
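The Switch and its three identical branches can be summarized in a few lines. In this Python sketch the Flic event names, the ID format, and the in-memory `Store` standing in for the Activities and station tables are all hypothetical; the numbered comments match the steps described above.

```python
import itertools
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Tuple


class FlicEvent(Enum):
    # Hypothetical names for the three Flic gestures.
    SINGLE_CLICK = "click"
    DOUBLE_CLICK = "double_click"
    LONG_PRESS = "hold"


# (1) The Switch: each branch does the same work, only the status differs.
STATUS_BY_EVENT = {
    FlicEvent.SINGLE_CLICK: "Petrol",
    FlicEvent.DOUBLE_CLICK: "No petrol",
    FlicEvent.LONG_PRESS: "Issue",
}


@dataclass
class Store:
    stations: Dict[str, dict]
    activities: Dict[str, dict] = field(default_factory=dict)
    relations: List[Tuple[str, str]] = field(default_factory=list)
    _ids: itertools.count = field(default_factory=itertools.count)


def handle_flic_event(store: Store, station_id: str, event: FlicEvent, driver: str) -> str:
    status = STATUS_BY_EVENT[event]
    # (2) Record the latest status and who reported it in the Activities table.
    activity_id = f"activity-{next(store._ids)}"
    store.activities[activity_id] = {"status": status, "reported_by": driver}
    # (3) Update the station's own status.
    store.stations[station_id]["status"] = status
    # (4) Relate the station and the new activity record.
    store.relations.append((station_id, activity_id))
    return activity_id
```

Keeping the status in both places gives the app a cheap "current status" lookup on the station, while the Activities table preserves the history of who reported what.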

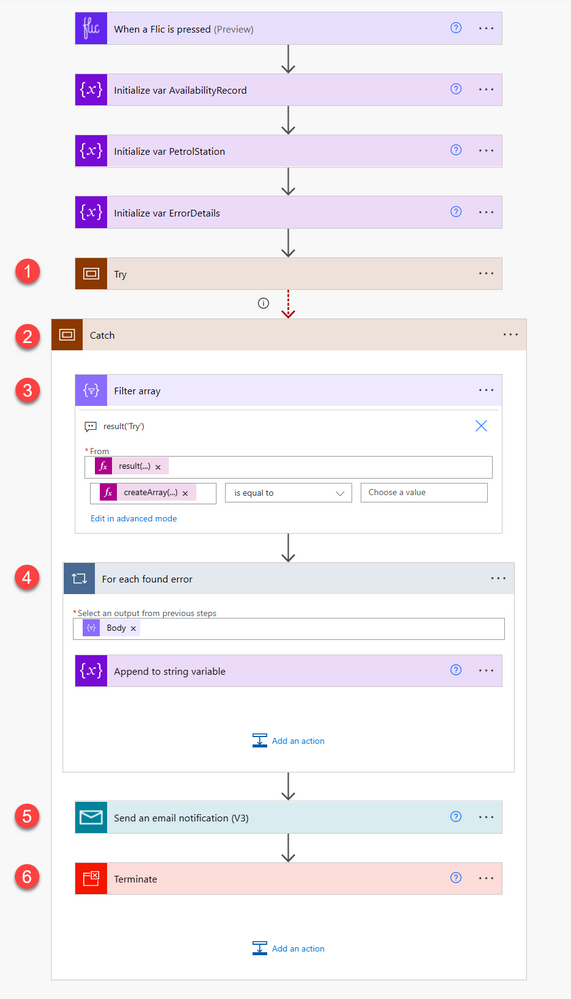

It is also worth mentioning that the whole process is built using the try-catch pattern. All actions that implement the business logic are placed in the “Try” scope (1). If anything fails within that scope, it is caught by the “Catch” scope (2), whose “Run after” settings are configured so that it only runs if the previous scope has failed, timed out, or been skipped.

The “Catch” scope first filters (3) the results of the “Try” scope, using the expression `result('Try')` as input and keeping only the entries that contain information about errors, i.e. those whose status is one of `createArray('Failed', 'TimedOut')`. Next, for each such entry (4), it appends the error details to a string variable. Finally, the variable’s contents are sent to the admin as a notification (5), and the whole process ends with a “Failed” outcome.
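The filter-and-collect part of the “Catch” scope can be sketched in Python as follows. It assumes each entry returned by `result('Try')` is a dictionary with `name`, `status`, and an optional `error.message`; the report format is hypothetical.

```python
from typing import Dict, List

FAILED_STATUSES = ("Failed", "TimedOut")


def failed_actions(try_results: List[Dict]) -> List[Dict]:
    """Keep only the entries whose status is Failed or TimedOut (the Filter array step)."""
    return [r for r in try_results if r.get("status") in FAILED_STATUSES]


def build_error_report(try_results: List[Dict]) -> str:
    """Build one line per failed action, like the loop that appends to a string variable."""
    lines = []
    for entry in failed_actions(try_results):
        message = (entry.get("error") or {}).get("message") or "no error details"
        lines.append(f"{entry.get('name')} ({entry.get('status')}): {message}")
    return "\n".join(lines)


sample = [
    {"name": "Create_station", "status": "Failed", "error": {"message": "Bad request"}},
    {"name": "Get_button", "status": "Succeeded"},
    {"name": "Call_child_flow", "status": "TimedOut"},
]
print(build_error_report(sample))
```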

Show me something beautiful – The canvas app (Carmen)

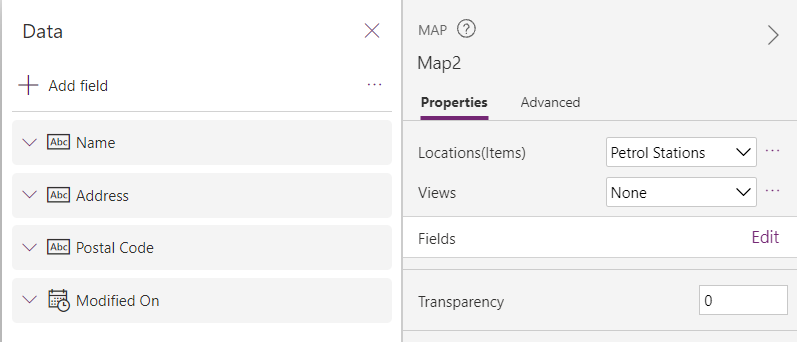

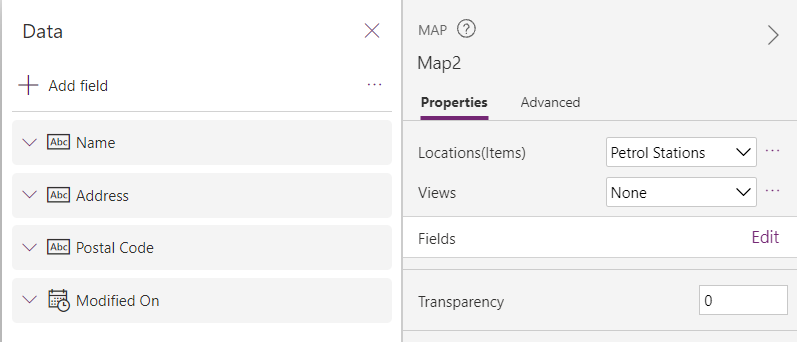

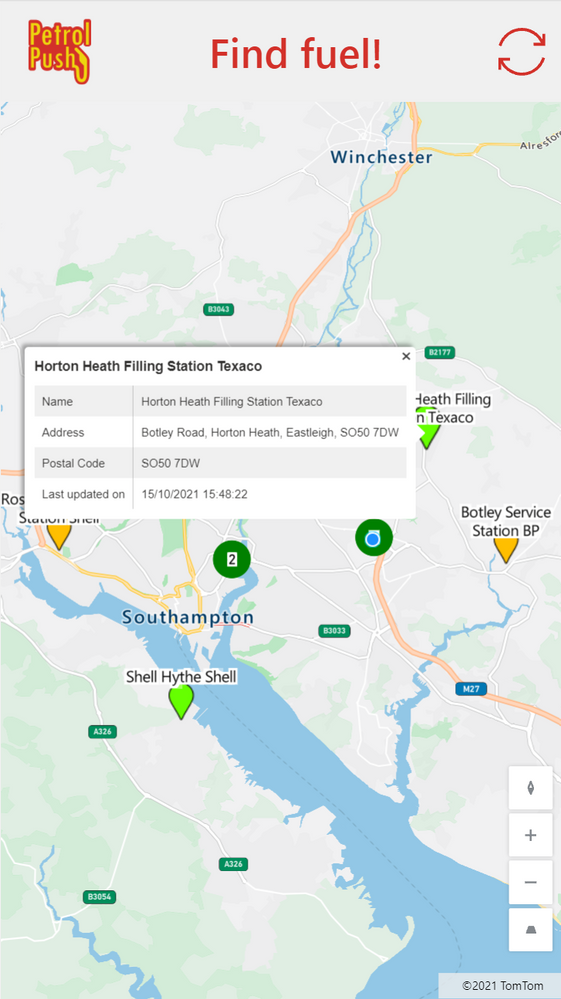

With the data stored in Dataverse, the canvas app can be created to display the available information and tell people where they can find fuel. The canvas app consists of a header (with the company logo, name, and a refresh icon) and a map control.

We are using the built-in map control, which allows us to display the gas stations with their appropriate color, automatically center on the user’s current location and display additional information about each gas station when selecting the location pin.

To get the location pins on the map, we added the Dataverse table as the source in the Items property of the map control. We are currently not doing any filtering, but this could be added if needed. The latitude, longitude, label, and color are each stored in a specific column of the data source. These are provided as values for the following properties (where the text between quotes is the name of the column in the Dataverse table):

– ItemsLabels = "woi_name"

– ItemsLatitudes = "woi_latitude"

– ItemsLongitudes = "woi_longitude"

– ItemsColors = "woi_color"

The woi_color column is defined as a calculated column whose value depends on the Petrol Status column in the same table. Petrol Status contains the last known fuel status of the respective station. The colors are defined as hex values with the following mapping:

| Last Known Status | Color | Color name |
| --- | --- | --- |
| Petrol | "#66FF00" | Light Green |
| No petrol | "#FF0000" | Red |
| Issue | "#FFBF00" | Amber |
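The calculated column boils down to a lookup from status to hex color. This Python sketch restates that mapping; returning `None` for an empty or unknown status is an assumption, since the behavior for a station without a status isn't described.

```python
from typing import Optional

# Status-to-color mapping used by the woi_color calculated column.
PIN_COLORS = {
    "Petrol": "#66FF00",     # light green
    "No petrol": "#FF0000",  # red
    "Issue": "#FFBF00",      # amber
}


def pin_color(petrol_status: Optional[str]) -> Optional[str]:
    """Return the hex color for a status; None when the status is empty or unknown (an assumption)."""
    return PIN_COLORS.get(petrol_status) if petrol_status else None


def hex_to_rgb(color: str) -> tuple:
    """Convert "#RRGGBB" into an (r, g, b) tuple, e.g. to sanity-check a configured color."""
    value = color.lstrip("#")
    return int(value[0:2], 16), int(value[2:4], 16), int(value[4:6], 16)


print(pin_color("Issue"), hex_to_rgb(pin_color("Issue")))  # #FFBF00 (255, 191, 0)
```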

The color of the grouped pins is defined by the PinColor property of the map control. It is set to Green, which is a darker color than the green used for the stations with fuel.

When a pin is selected, its info card is shown. This is enabled by setting the InfoCards property of the map to `'Microsoft.Map.InfoCards'.OnClick`. The fields shown on the info card are defined by editing **Fields** in the properties pane of the map. Four fields are shown on the info card:

– Name

– Address

– Postal code

– Modified on (to know when the station’s status was last updated)

This can be seen in the screenshot below.

The resulting app shows a map with all identified gas stations and their last known status, indicated with the color of the pin. Selecting a specific gas station provides the user with more information on that station.

We need a real map – The custom connector to Azure Maps (Lee)

A key part of the solution is populating a list of petrol stations and their status based on presses of the Flic button. We initially looked at using the built-in Bing Maps Power Automate connector and its actions to find the current address when a Flic button was pressed. However, this would return the nearest address, which is not necessarily a petrol station (e.g. it could be a house on the opposite side of the street that is deemed nearer).

To work around this, we created an Azure Maps resource in Azure. Azure Maps can return a list of places within a certain radius that belong to a particular POI (point of interest) category, in this case petrol stations. Using the subscription key (API key) from the Azure Maps resource, we created a custom connector in Power Automate that queries for the petrol stations nearest to the latitude and longitude recorded when the Flic button was pressed.
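Outside Power Automate, the same query can be sketched in Python against the Azure Maps Search POI Category endpoint. This sketch only builds the request URL and picks the closest result from a response; it makes no network call. The category name `PETROL_STATION`, the default radius and limit, and the response fields it reads (`dist`, `poi.name`, `address.freeformAddress`, `address.extendedPostalCode`, `position`) are assumptions to verify against the Azure Maps documentation.

```python
from typing import Dict, Optional
from urllib.parse import urlencode

# Azure Maps "Search POI Category" endpoint (Search API version 1.0).
SEARCH_POI_CATEGORY_URL = "https://atlas.microsoft.com/search/poi/category/json"


def build_petrol_station_query(lat: float, lon: float, subscription_key: str,
                               radius_m: int = 1000, limit: int = 5) -> str:
    """Build the GET URL for petrol stations within radius_m meters of (lat, lon)."""
    params = {
        "api-version": "1.0",
        "query": "PETROL_STATION",  # POI category name (assumption: check the category tree)
        "lat": lat,
        "lon": lon,
        "radius": radius_m,
        "limit": limit,
        "subscription-key": subscription_key,
    }
    return f"{SEARCH_POI_CATEGORY_URL}?{urlencode(params)}"


def nearest_station(response: Dict) -> Optional[Dict]:
    """Pick the result with the smallest distance and keep the fields the flow stores."""
    results = response.get("results") or []
    if not results:
        return None
    best = min(results, key=lambda r: r.get("dist", float("inf")))
    address = best.get("address", {})
    position = best.get("position", {})
    return {
        "name": best.get("poi", {}).get("name"),
        "address": address.get("freeformAddress"),
        "postal_code": address.get("extendedPostalCode"),
        "lat": position.get("lat"),
        "lon": position.get("lon"),
    }
```

Constraining the search to a POI category is what avoids the Bing Maps problem above: the nearest result is always some petrol station, never the house across the street.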

Bring me the vibes – The Spotify connector (Yannick)

We like to celebrate victories and help each other in times of need, and what better way to do that than with music? We have a sound system in the office connected to Spotify, so let’s use it to keep everyone updated on what happens on the road!

A separate Power Automate flow is triggered every time a new petrol station status is logged, except when no petrol is available. When someone finds petrol at a gas station, we get super excited for our colleague and play Fuel by Metallica in the office to have a small party. When someone gets into trouble, for whatever reason, we play Trouble by Coldplay (so we know we need to rush to the rescue), and a text message is sent to the manager.
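The rules of this second flow fit in a small lookup table. This Python sketch captures which status triggers which song and whether the manager is texted; the `Reaction` type is hypothetical, and the real flow performs these actions through the Spotify custom connector and the Twilio connector.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Reaction:
    song: str           # what the office sound system plays
    text_manager: bool  # whether the manager also gets a text message


# "No petrol" is deliberately absent: that status triggers nothing.
REACTIONS = {
    "Petrol": Reaction(song="Fuel (Metallica)", text_manager=False),
    "Issue": Reaction(song="Trouble (Coldplay)", text_manager=True),
}


def react_to_status(status: str) -> Optional[Reaction]:
    """Return the reaction for a newly logged station status, or None if nothing should happen."""
    return REACTIONS.get(status)
```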

Integrating with Spotify isn’t too difficult (the API is well documented), but it requires creating a custom connector with two API actions: one that lists the available devices and one that starts playback.

Combining the two, we first fetch all connected devices and filter them by the device ID of our office sound system. If the device is connected, we play the appropriate song for the occasion with the second API call.
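Here is a minimal Python sketch of those two calls against the Spotify Web API: finding the office device in a “Get Available Devices” response and building a “Start/Resume Playback” request. It only builds requests and makes no network call. The device ID and track URI are placeholders, and a real call also needs an OAuth bearer token with playback scopes, which the custom connector handles.

```python
import json
from typing import Dict, Optional, Tuple
from urllib.parse import urlencode

DEVICES_URL = "https://api.spotify.com/v1/me/player/devices"  # Get Available Devices (GET)
PLAY_URL = "https://api.spotify.com/v1/me/player/play"        # Start/Resume Playback (PUT)


def build_devices_request() -> Tuple[str, str]:
    """Return (HTTP method, URL) for listing the user's available devices."""
    return "GET", DEVICES_URL


def find_device(devices_response: Dict, device_id: str) -> Optional[Dict]:
    """Return the device with the given ID, or None if it is not currently connected."""
    for device in devices_response.get("devices", []):
        if device.get("id") == device_id:
            return device
    return None


def build_play_request(device_id: str, track_uri: str) -> Tuple[str, str, str]:
    """Return (HTTP method, URL, JSON body) to play one track on one device."""
    url = f"{PLAY_URL}?{urlencode({'device_id': device_id})}"
    body = json.dumps({"uris": [track_uri]})
    return "PUT", url, body
```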

Lastly, for the text message we use Twilio. Luckily, there is an existing Twilio connector in Power Automate, so it’s only a matter of registering for a Twilio account, getting a number to send messages from, and configuring the action in our flow.

The princess and the push (Luise)

Right from the start of the hackathon, we documented our architecture decisions and how we would implement them. We set up a GitHub repository and invited everyone on the team so they could commit their files. We continued to document all major steps so that everyone could use the documentation as a reference to explain our solution, even though each member was only in charge of their own part. Gathering information and documenting while building ensured accuracy and also gave us an opportunity to think through the app and reflect on our decisions.

The documentation includes screenshots of the flows and explains the data model and environment variables. We also published the solution itself in this repository to give the community the chance to play with our app.

What can we learn from this epic quest? (everybody)

As a group, we discussed the hackathon for quite a while even after it had ended, and we came up with four lessons this experience taught us that we consider important.

1. Do one thing the right way, instead of a million things in a messy way

What helped us build this solution in a short timespan was that each person on the team was responsible for a specific part of the solution. There was no context switching between, for example, the app studio and building the cloud flow. Instead, we made some agreements at the beginning of the day and let each other know, verbally and in the documentation, if anything needed to change. This allowed each of us to focus on our own part, resulting in finished pieces of the puzzle.

2. Take care of documentation

Since development was decentralized, it was important we could keep each other up to date on what we were doing. Therefore, we documented from the start. Since we were working against the clock, we had one person who constantly went around the table to see what each of us was working on and to make sure it was captured in the documentation. After the individual pieces were finished, this allowed us to piece them together more easily.

3. 1 + 1 = 3

Or in our case, 6 x 1 = 10 (or something). Each of us has a different background; no two are the same. Because of this, we were able to share different perspectives and find the most efficient way to create the different pieces of the puzzle: e.g. Azure Maps for station identification, canvas apps for a quick user interface, and cloud flows for logic. Since we were not limited to one area of expertise, our solution combines the best of different worlds. In the process, we all learned from each other, whether technical skills or approaches to tackling something. And since we were all eager to learn from and share with each other, we had a lot of fun doing it.

4. We are all developers

Each of us is building or creating something on a daily basis, be that using no-code, low-code or code-first platforms and tools. During the hackathon, we realized that our commonalities are more important than our differences. We share a common problem-solving and solution-oriented approach. We can define logic and we do it in very similar terms (if – then – else, anyone?). We can conceptualize solutions and explain them to each other. And then each of us can find some way using their own tools to build that solution. This is what makes us developers, not the language or tool set we build things in, but the approach and mindset we share. All of us are developers, and you can be one too.
