by Contributed | Jun 12, 2022 | Technology

This article is contributed. See the original author and article here.

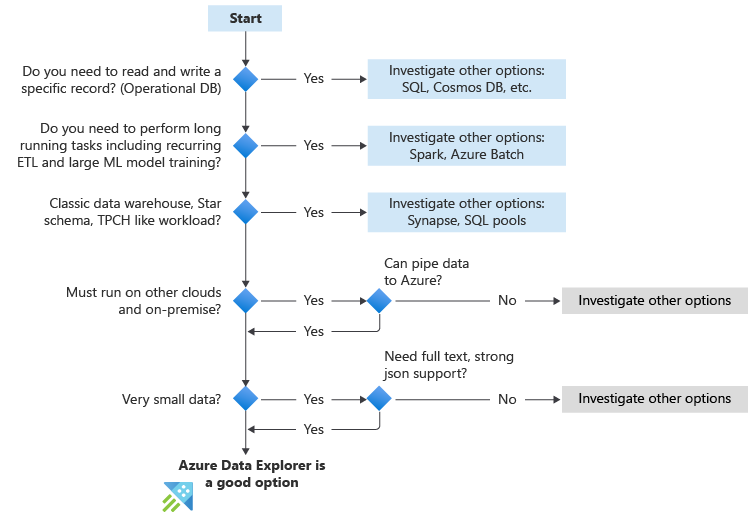

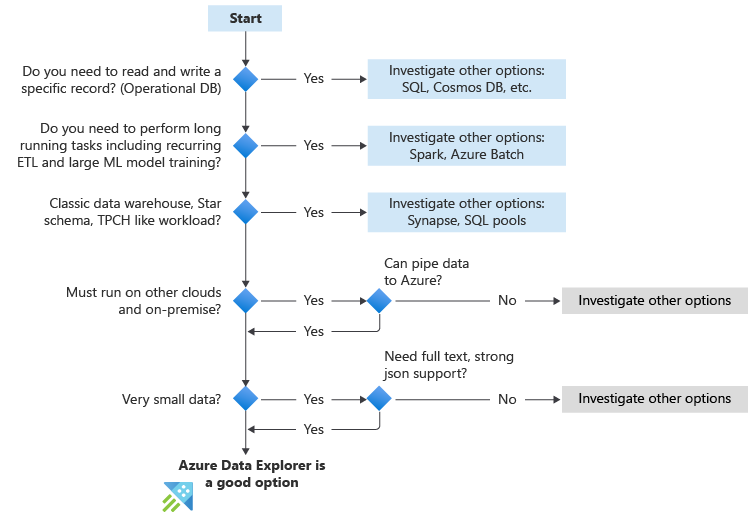

Azure Data Explorer is a big data interactive analytics platform that empowers people to make data-driven decisions in a highly agile environment. The factors listed below can help you assess whether Azure Data Explorer is a good fit for the workload at hand.

The flowchart summarizes the key questions to ask when you’re considering using Azure Data Explorer.

Guidance summary

The following table shows how to evaluate new use cases. While it doesn’t cover all use cases, we think it can help you decide whether Azure Data Explorer is the right solution for you.

| Use case | Interactive Analytics | Big data (Variety, Velocity, Volume) | Data organization | Concurrency | Build vs Buy | Should I use Azure Data Explorer? |
|---|---|---|---|---|---|---|
| Implementing a Security Analytics SaaS | Heavy use of interactive, near real-time analytics | Security data is diverse, high volume and high velocity. | Varies | Often multiple analysts from multiple tenants will use the system | Implementing a SaaS offering is a Build scenario | Yes |
| CDN log analytics | Interactive for troubleshooting, QoS monitoring. | CDN logs are diverse, high volume and high velocity. | Separate log records. | May be used by a small group of data scientists but may also power many dashboards | The value extracted from CDN analytics is scenario-specific and requires custom analytics | Yes |
| Time series database for IoT Telemetry | Interactive for troubleshooting, analyzing trends, usage, detecting anomalies | IoT telemetry are high velocity but may be structured only or medium in size | Related sets of records. | May be used by a small group of data scientists but may also power many dashboards | When searching for a database, context is typically “build” | Yes |

Read more on When to use Azure Data Explorer (Kusto)

by Contributed | Jun 10, 2022 | Technology


At Supercomputing 2019, we announced HBv2 virtual machines (VMs) for HPC with AMD EPYC™ ‘Rome’ CPUs and the cloud’s first use of HDR 200 Gbps InfiniBand networking from NVIDIA Networking (formerly Mellanox). HBv2 VMs have proven very popular with HPC customers on a wide variety of workloads, and have powered some of the most advanced at-scale computational science ever on the public cloud.

Today, we’re excited to share that we’re making HBv2 VMs even better. By taking learnings from our efforts to optimize HBv3 virtual machines, we will soon be enhancing the HBv2-series in the following ways:

- simpler NUMA topology for application compatibility

- better HPC application performance

- new constrained core VM sizes to better fit application core-count or licensing requirements

- a fix for a known issue that prevented offering HBv2 VMs with 456 GB of RAM

This article details the changes we will soon make to the global HBv2 fleet, what the implications are for HPC applications, and what actions we advise so that customers can smoothly navigate this transition and get the most out of the upgrade.

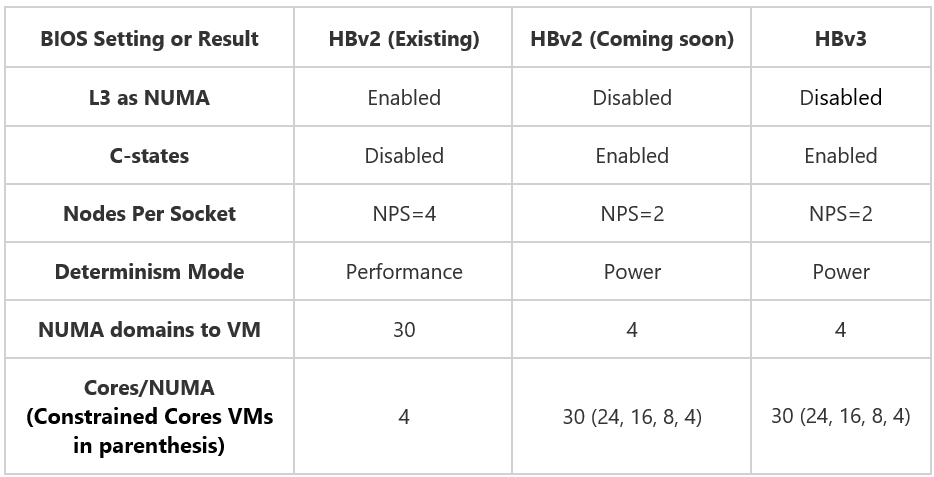

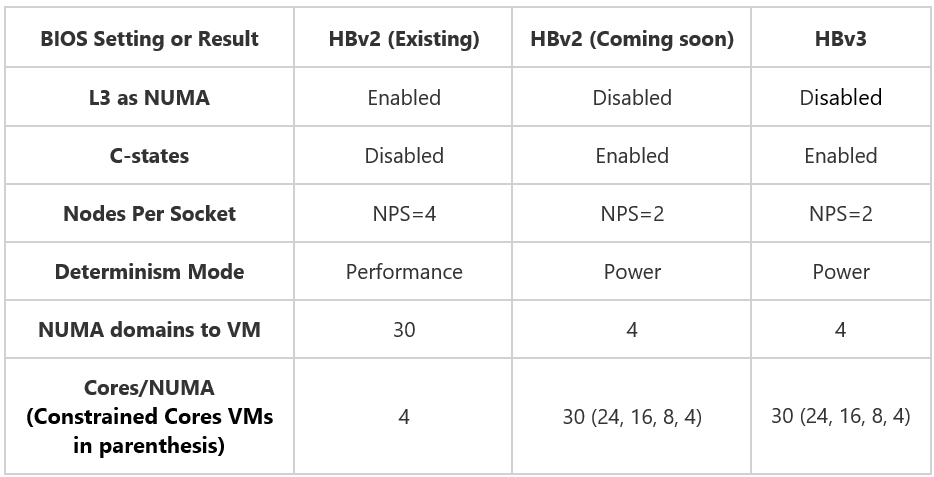

Overview of Upgrades to HBv2

The BIOS of all HBv2 servers will be upgraded with the platform changes tabulated below. These upgrades bring the BIOS configurations of HBv3 (already documented in HBv3 VM Topology) and HBv2 into alignment with one another, and in doing so synchronize how the hardware topology appears and operates within the VM.

Simpler NUMA topology for HPC Applications

A notable improvement will be a significant reduction in the NUMA complexity presented to customer VMs. To date, HBv2 VMs have featured 30 NUMA domains (4 cores per NUMA domain). After the upgrade, every HBv2 VM will feature a much simpler 4-NUMA configuration. This will help customer applications that do not function correctly or optimally with a many-NUMA hardware topology.
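To make the topology change concrete, here is a minimal sketch that maps a core index to its NUMA domain before and after the upgrade. It assumes 120 cores per VM (30 domains of 4 cores, per the figures above) and, as a simplifying assumption, that cores are numbered contiguously within each domain. The real core numbering inside a VM may differ, and `numa_domain_of` is a hypothetical helper, not part of any Azure tooling.

```python
TOTAL_CORES = 120  # 30 NUMA domains x 4 cores, per the figures in the text


def cores_per_domain(numa_domains: int, total_cores: int = TOTAL_CORES) -> int:
    """Return how many cores each NUMA domain holds for an even split."""
    if numa_domains <= 0 or total_cores % numa_domains != 0:
        raise ValueError("cores must divide evenly across NUMA domains")
    return total_cores // numa_domains


def numa_domain_of(core: int, numa_domains: int, total_cores: int = TOTAL_CORES) -> int:
    """Map a core index to a NUMA domain, ASSUMING contiguous core numbering."""
    if not 0 <= core < total_cores:
        raise ValueError("core index out of range")
    return core // cores_per_domain(numa_domains, total_cores)


if __name__ == "__main__":
    for label, domains in (("before upgrade", 30), ("after upgrade", 4)):
        print(f"{label}: {domains} domains, {cores_per_domain(domains)} cores each")
```

Under these assumptions, each domain grows from 4 cores to 30, so a process that previously spanned several domains may now fit inside one.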

In addition, while there will be no hard requirement to use HBv2 VMs any differently than before, the best practice for pinning processes to optimize performance and performance consistency will change. From an application runtime perspective, the existing HBv2 process pinning guidance will no longer apply. Instead, optimal process pinning for HBv2 VMs will be identical to what we already advise for HBv3 VMs. By adopting this guidance, users will gain the following benefits:

- Best performance

- Best performance consistency

- Single configuration approach across both HBv3 and HBv2 VMs (for customers that want to use HBv2 and HBv3 VMs as a fungible pool of compute)

Better HPC workload performance

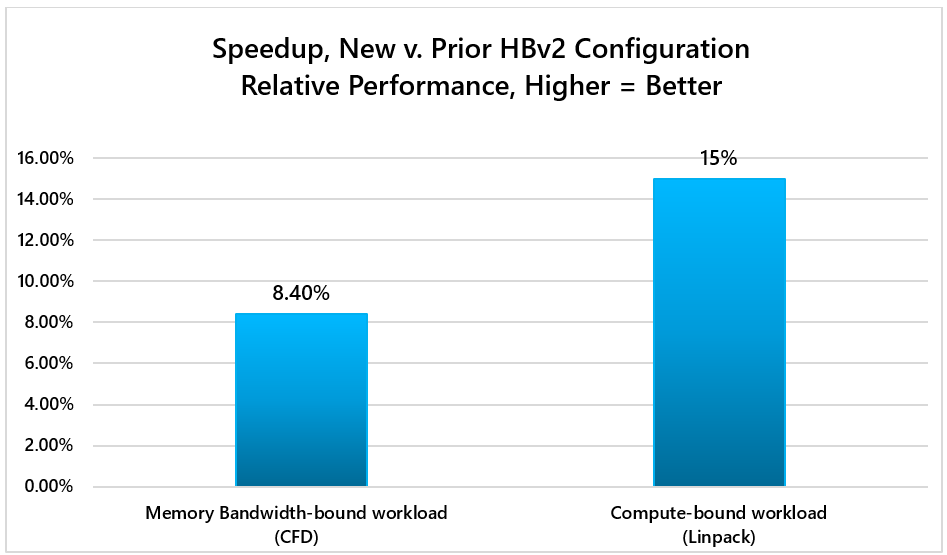

These changes will also result in higher performance across a diverse set of HPC workloads due to enhancements to peak single-core boost clock frequencies and better memory interleaving. In our testing, the upgrade to HBv2 VMs improves representative memory bandwidth-bound and compute-bound HPC workloads by as much as 8-15%:

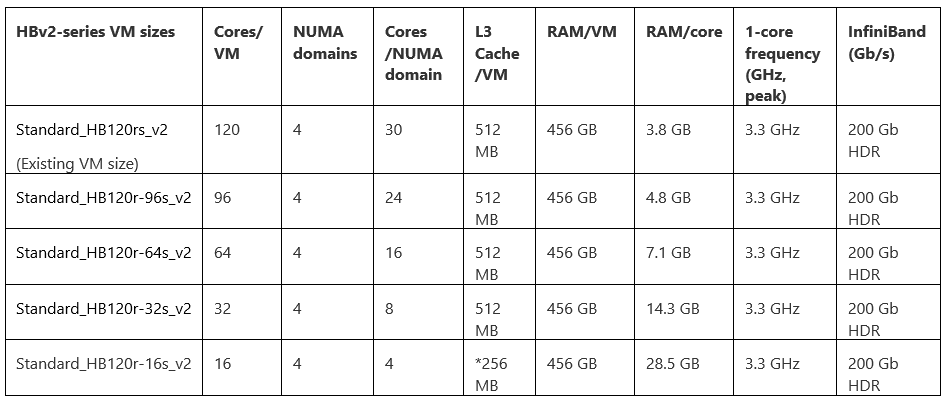

Constrained Core VM sizes

Finally, soon after the BIOS changes are made to HBv2 servers we will introduce four new constrained core VM sizes. See below for details:

*Due to the architecture of Zen2-based CPUs like EPYC 2nd Generation, the 16-core HBv2 VM size only exposes 256 MB out of a possible 512 MB of L3 cache within the server.

As with HBv3 VMs, constrained core VM sizes for the HBv2-series will enable customers to right-size the core count of their VMs on the spectrum from maximum performance per core (16-core VM size) to maximum performance per VM (120-core VM size). Across all VM sizes, global shared assets like memory bandwidth, memory capacity, L3 cache, InfiniBand, local SSD, etc. remain constant. Choosing a smaller core count therefore increases the share of those assets available to each core. In HPC, common scenarios for which this is useful include:

- Providing more memory bandwidth per CPU core for CFD workloads.

- Allocating more L3 cache per core for RTL simulation workloads.

- Driving higher CPU frequencies to fewer cores in license-bound scenarios.

- Giving more memory or local SSD to each core.
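The per-core effect is simple arithmetic, sketched below for memory capacity only. The 456 GB figure and the 16-core and 120-core sizes come from this article; `per_core_share` is a hypothetical helper, and the same division applies to any other shared asset that stays constant across VM sizes.

```python
RAM_GB = 456  # RAM per HBv2 VM after the upgrade, per this article


def per_core_share(total: float, cores: int) -> float:
    """Return the share of a fixed, VM-wide resource available to each core."""
    if cores <= 0:
        raise ValueError("core count must be positive")
    return total / cores


if __name__ == "__main__":
    # Only the two sizes named in the article are shown here.
    for cores in (120, 16):
        share = per_core_share(RAM_GB, cores)
        print(f"{cores:>3} cores: {share:.1f} GB RAM per core")
```

With these inputs the 120-core size gets 3.8 GB of RAM per core and the 16-core size gets 28.5 GB per core.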

Fixing Known Issue with Memory Capacity in HBv2 VMs

The upgrade will also address a known issue from late 2021 that required a reduction from the previously offered 456 GB of RAM per HBv2 VM to only 432 GB. After the upgrade is complete, all HBv2 VMs will once again feature 456 GB of RAM.

Navigating the Transition

Customers will be notified via the Azure portal shortly before the upgrade process begins across the global HBv2 fleet. From that point forward, any new HBv2 VM deployments will land on servers featuring the new configuration.

Because many scalable HPC workloads, especially tightly coupled workloads, expect a homogeneous configuration across compute nodes, we *strongly advise* against mixing HBv2 configurations for a single job. Once the upgrade has rolled out across the fleet, we therefore recommend that customers with VM deployments from before the upgrade began (and thus still running on servers with the prior BIOS configuration) de-allocate and re-allocate their VMs so that they have a homogeneous pool of HBv2 compute resources.
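One way to spot a mixed pool before launching a job is to compare the NUMA domain count reported by each node. The sketch below assumes you have already collected those counts yourself (for example from the "NUMA node(s)" line of `lscpu` on each VM). The node names and both helper functions are hypothetical, and the counts 30 and 4 are the before and after values given in this article.

```python
from typing import Dict, List


def is_homogeneous(numa_counts: Dict[str, int]) -> bool:
    """True when every node reports the same number of NUMA domains."""
    return len(set(numa_counts.values())) <= 1


def group_by_numa_count(numa_counts: Dict[str, int]) -> Dict[int, List[str]]:
    """Group node names by their NUMA domain count, names sorted per group."""
    groups: Dict[int, List[str]] = {}
    for node in sorted(numa_counts):
        groups.setdefault(numa_counts[node], []).append(node)
    return groups


if __name__ == "__main__":
    # Hypothetical pool: node-b still runs the prior 30-NUMA configuration.
    pool = {"node-a": 4, "node-b": 30, "node-c": 4}
    if not is_homogeneous(pool):
        for count, nodes in sorted(group_by_numa_count(pool).items()):
            print(f"{count} NUMA domains: {', '.join(nodes)}")
```

Nodes that fall into the 30-domain group are the candidates to de-allocate and re-allocate.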

As part of the notifications sent via the Azure portal, we will also advise customers to visit the Azure Documentation site for the HBv2-series virtual machines, where all changes will be described in greater detail.

We are excited to bring these enhancements to HBv2 virtual machines to our customers across the world. We also welcome customer questions and feedback at Azure HPC Feedback.

by Contributed | Jun 10, 2022 | Technology


Establishing trust around the integrity of data stored in database systems has been a longstanding problem for all organizations that manage financial, medical, or other sensitive data. Ledger is a new feature in Azure SQL and SQL Server that incorporates blockchain crypto technologies into the RDBMS to ensure the data stored in a database is tamper-evident. In this session of Data Exposed with Anna Hoffman and Pieter Vanhove, we will cover the basic concepts of Ledger and how it works, Ledger tables, digest management, and database verification.
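To illustrate what "tamper-evident" means in general, here is a minimal hash-chain sketch. It is a generic illustration of the idea of chaining cryptographic hashes and comparing against a stored digest, not a description of how Ledger is implemented in Azure SQL or SQL Server. The function names and the row layout are assumptions made for the example.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # assumed starting value for the chain


def chain_hash(prev_hash: str, row: Dict) -> str:
    """Hash a row together with the previous hash so entries are linked."""
    payload = json.dumps(row, sort_keys=True).encode("utf-8")
    return hashlib.sha256(prev_hash.encode("utf-8") + payload).hexdigest()


def compute_digest(rows: List[Dict]) -> str:
    """Fold all rows into one digest; changing any row changes the result."""
    current = GENESIS
    for row in rows:
        current = chain_hash(current, row)
    return current


def verify(rows: List[Dict], trusted_digest: str) -> bool:
    """Recompute the digest and compare it with one stored somewhere trusted."""
    return compute_digest(rows) == trusted_digest
```

In this sketch the digest would be kept outside the database being checked, so that editing a row later makes `verify` return `False`.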

Resources:

Ledger Whitepaper

by Contributed | Jun 9, 2022 | Technology


Establishing trust around the integrity of data stored in database systems has been a longstanding problem for all organizations that manage financial, medical, or other sensitive data. Ledger is a new feature in Azure SQL and SQL Server that incorporates blockchain crypto technologies into the RDBMS to ensure the data stored in a database is tamper-evident. In this session of Data Exposed with Anna Hoffman and Pieter Vanhove, we will cover the basic concepts of Ledger and how it works, Ledger tables, digest management, and database verification.

Watch on Data Exposed

Resources:

Ledger Whitepaper

View/share our latest episodes on Microsoft Docs and YouTube!

by Contributed | Jun 8, 2022 | Technology


There is no shortage of incident response frameworks in the security industry. While the processes may vary, there is relatively universal agreement on requirements to remediate an incident and conduct lessons learned. Remediation falls towards the end of the incident response cycle because security teams must fully analyze the incident to understand several dynamics:

- Who is the attacker?

- When did the incident occur?

- Which users, assets, or data are being targeted?

- Which attack techniques were leveraged?

- Which of our defenses detected it?

- Is this the full scope of the compromise, or are more factors involved?

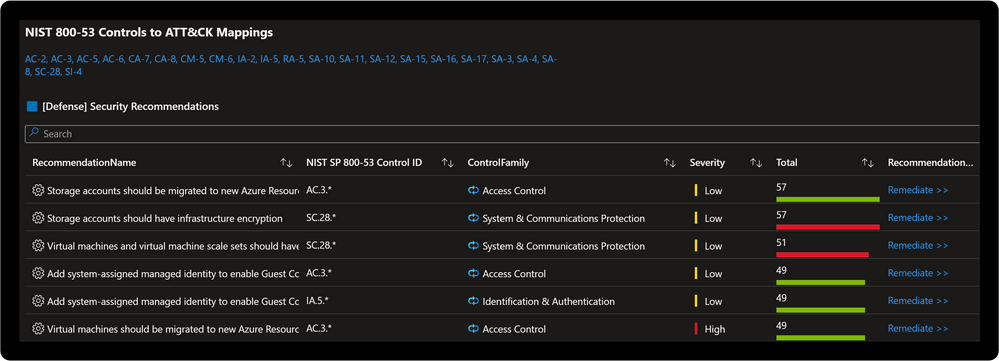

Security teams respond after understanding these and several organizationally aligned information requirements. The incident is closed when there is confidence that the attacker was expelled from the environment and the respective actions were completed. The difference between a young Security Operations Center (SOC) and a mature one often lies in the way incident response teams conduct lessons learned. This process evolves from a “rinse and repeat” approach to proactive threat modeling. Proactive threat modeling is critical to understanding where the attacker maneuvered through a network. The MITRE ATT&CK® framework allows security teams to understand the methods attackers employ against networks. Recently, MITRE Engenuity published the NIST SP 800-53 Controls to ATT&CK Mappings, which provide an actionable approach to implementing defenses based on the NIST SP 800-53 controls framework.

Microsoft Sentinel: Threat Analysis & Response Solution


The Microsoft Sentinel: Threat Analysis & Response Solution takes this a step further with two new workbooks: one supports the development of threat hunting programs, and the other provides dynamic threat modeling to identify, respond to, harden against, and remediate threats. Microsoft Defender for Cloud is a Cloud Workload Protection Platform (CWPP) and Cloud Security Posture Management (CSPM) solution that pairs well with Microsoft Sentinel. Where Microsoft Sentinel provides incident response capabilities, Microsoft Defender for Cloud provides remediation actions aligned to the relevant regulatory compliance initiatives. Once the incident is fully remediated and cloud weaknesses are addressed, you can also evaluate analytics coverage with the Microsoft Sentinel MITRE ATT&CK® blade. Check out the demo to see how.

Solution Benefits

- Proactive threat modeling (red vs. blue)

- Quantifiable framework for building threat hunting programs

- Monitoring & alerting of security coverage, threat vectors, and blind spots

- Response via security orchestration automation and response (SOAR) playbooks

- Remediation with cloud security posture management (CSPM)

- Compliance alignment to NIST SP 800-53 controls

Solution Content & Workflows


Solution Overview

Threat Analysis & Response Workbook

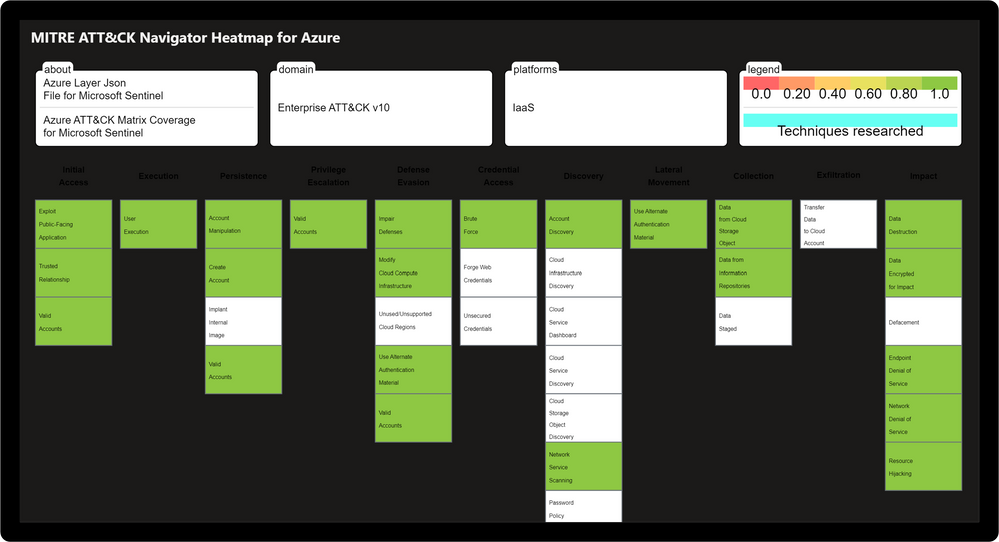

Designed by the Microsoft Threat Intelligence Center, the Threat Analysis & Response Workbook provides the foundation for building threat hunting programs. The workbook features recommended steps for getting started, including resources for deploying analytics rules and hunting queries. Data Source Statistics shows which logs are ingested from each source, which is a starting point for determining how useful particular analytics rules will be. The Microsoft Sentinel GitHub section lists the available analytics by the tactics and techniques they align to. The MITRE ATT&CK Navigator Heatmap assesses coverage by tactic and technique, which is valuable for evaluating the efficiency of organizational threat hunting programs.
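The idea behind a coverage assessment by tactic can be sketched in a few lines. This is only an illustration of the counting involved, not how the workbook itself is built. The rule names and the rule-to-tactic assignments below are hypothetical sample data, and both functions are invented for the example.

```python
from collections import Counter
from typing import Dict, Iterable, List


def coverage_by_tactic(rules: Iterable[Dict]) -> Counter:
    """Count how many analytics rules are tagged with each tactic."""
    counts: Counter = Counter()
    for rule in rules:
        for tactic in rule["tactics"]:
            counts[tactic] += 1
    return counts


def blind_spots(tactics_of_interest: Iterable[str], counts: Counter) -> List[str]:
    """Return the tactics that no rule covers (a Counter yields 0 if absent)."""
    return [tactic for tactic in tactics_of_interest if counts[tactic] == 0]


# Hypothetical rules; names and tactic tags are illustrative only.
SAMPLE_RULES = [
    {"name": "rule-password-spray", "tactics": ["Credential Access"]},
    {"name": "rule-phishing-link", "tactics": ["Initial Access"]},
    {"name": "rule-new-admin-account", "tactics": ["Persistence", "Privilege Escalation"]},
    {"name": "rule-brute-force", "tactics": ["Credential Access"]},
]
```

Calling `blind_spots(["Initial Access", "Exfiltration"], coverage_by_tactic(SAMPLE_RULES))` on this sample data flags Exfiltration as a tactic with no rule behind it.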

Threat Analysis & Response Workbook


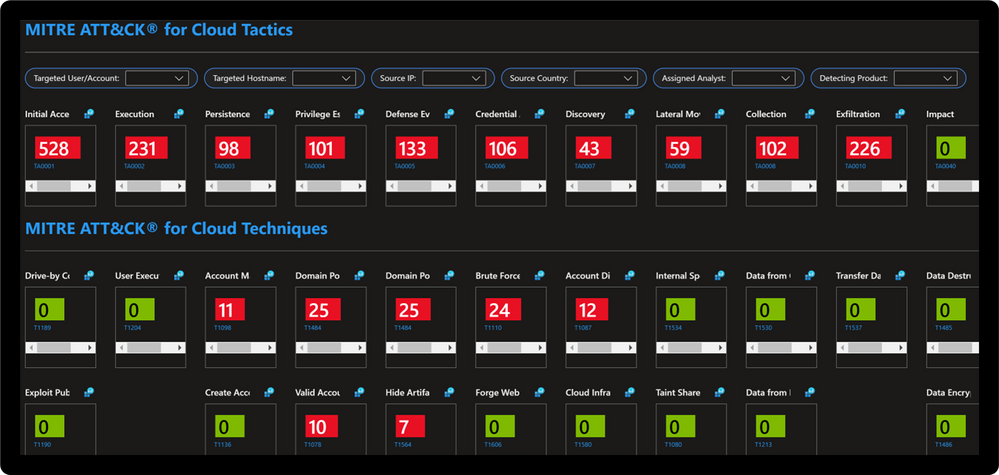

Dynamic Threat Analysis & Response Workbook

The Dynamic Threat Analysis & Response Workbook dynamically assesses attacks against your on-premises, cloud, and multi-cloud workloads. Attacks are categorized by the MITRE ATT&CK for Cloud Matrix and evaluated against the analytics and incidents observed in Microsoft Sentinel. This provides pivots to evaluate attacks by specific user, asset, attacking IP, country, assigned analyst, and detecting product. Each tactic has its own control area composed of technique control cards.
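A pivot of this kind amounts to grouping incidents by one of their attributes. The sketch below shows that grouping on plain dictionaries. The field names (`user`, `attacker_ip`, `product`) and the incident records are hypothetical and do not reflect the actual Microsoft Sentinel incident schema.

```python
from collections import defaultdict
from typing import Dict, Iterable, List


def pivot_incidents(incidents: Iterable[Dict], key: str) -> Dict[str, List[str]]:
    """Group incident IDs by the value of one attribute, such as user or IP."""
    pivot: Dict[str, List[str]] = defaultdict(list)
    for incident in incidents:
        pivot[incident[key]].append(incident["id"])
    return dict(pivot)


# Hypothetical incidents; IPs come from the reserved documentation range.
SAMPLE_INCIDENTS = [
    {"id": "INC-1", "user": "alice", "attacker_ip": "203.0.113.7", "product": "product-a"},
    {"id": "INC-2", "user": "bob", "attacker_ip": "203.0.113.7", "product": "product-b"},
    {"id": "INC-3", "user": "alice", "attacker_ip": "203.0.113.9", "product": "product-a"},
]
```

Pivoting the same records by `attacker_ip` instead of `user` shows which incidents share an attacking address, which is the kind of regrouping the workbook offers interactively.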

Dynamic Threat Modeling & Response Workbook


Technique Control Cards provide details of establishing coverage, evaluation of observed attacks, and defense recommendations aligned to NIST SP 800-53 controls. Observed attacks are addressed via Microsoft Sentinel Incidents for Investigation, Playbooks for Response, MITRE ATT&CK blade for Coverage, and Microsoft Defender for Cloud for Remediations.

Improve posture by implementing NIST SP 800-53 control recommendations with Microsoft Defender for Cloud


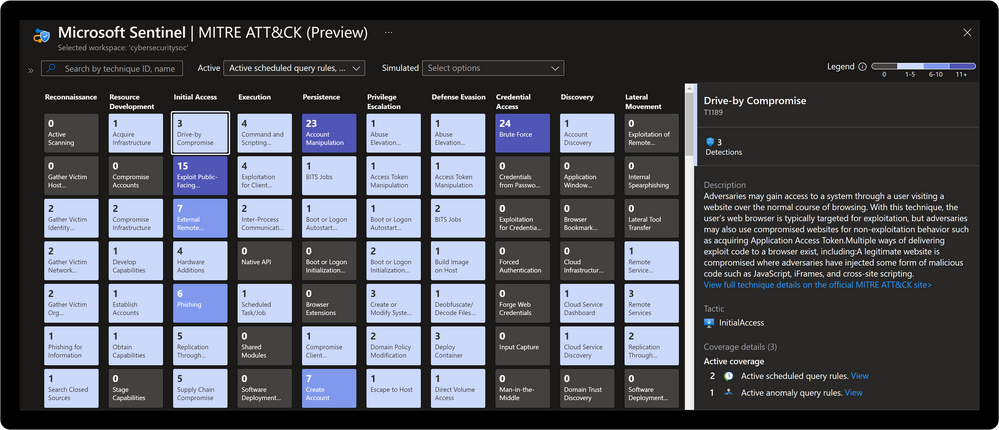

Microsoft Sentinel: MITRE ATT&CK Blade

MITRE ATT&CK is a publicly accessible knowledge base of tactics and techniques that are commonly used by attackers, created and maintained based on real-world observations. Many organizations use the MITRE ATT&CK knowledge base to develop specific threat models and methodologies that are used to verify security status in their environments. Microsoft Sentinel analyzes ingested data, not only to detect threats and help you investigate, but also to visualize the nature and coverage of your organization’s security status.

Microsoft Sentinel: MITRE ATT&CK Blade


Get Started Today

Learn more about threat hunting with Microsoft Security

Understand security coverage by the MITRE ATT&CK® framework

Joint forces – MS Sentinel and the MITRE framework

MITRE ATT&CK® mappings released for built-in Azure security controls

This solution demonstrates best practice guidance, but Microsoft does not guarantee nor imply compliance. All requirements, tactics, validations, and controls are governed by respective organizations. This solution provides visibility and situational awareness for security capabilities delivered with Microsoft technologies in predominantly cloud-based environments. Customer experience will vary by user, and some panels may require additional configurations for operation. Recommendations do not imply coverage of respective controls, as they are often one of several courses of action for approaching requirements, which are unique to each customer. Recommendations should be considered a starting point for planning full or partial coverage of respective requirements.
