The power of proactive engagement in Dynamics 365 Contact Center

Let’s face it—customers don’t just want fast service anymore. They want businesses to know what they need before they even ask. Whether it’s a reminder about a missed payment, a heads-up about a delivery delay, or a quick check-in after a dropped call, customers appreciate when companies practice proactive engagement.

At the same time, businesses are under pressure to do more with less. Reducing call volumes, improving customer service representative (CSR) productivity, and delivering consistent experiences across channels are no longer nice-to-haves—they’re essential.

That’s where proactive engagement comes in. It’s about flipping the script: instead of waiting for customers to reach out, you reach out to them with the right message, at the right time, on the right channel.

At the end of the day, it’s about relationships. Proactive engagement helps businesses show up for their customers in ways that are meaningful and memorable. It’s about being there before you’re needed. About solving problems before they become complaints. About turning every interaction into an opportunity to build trust. And in a world where customer loyalty is earned one moment at a time, that makes all the difference.

The platform behind the vision

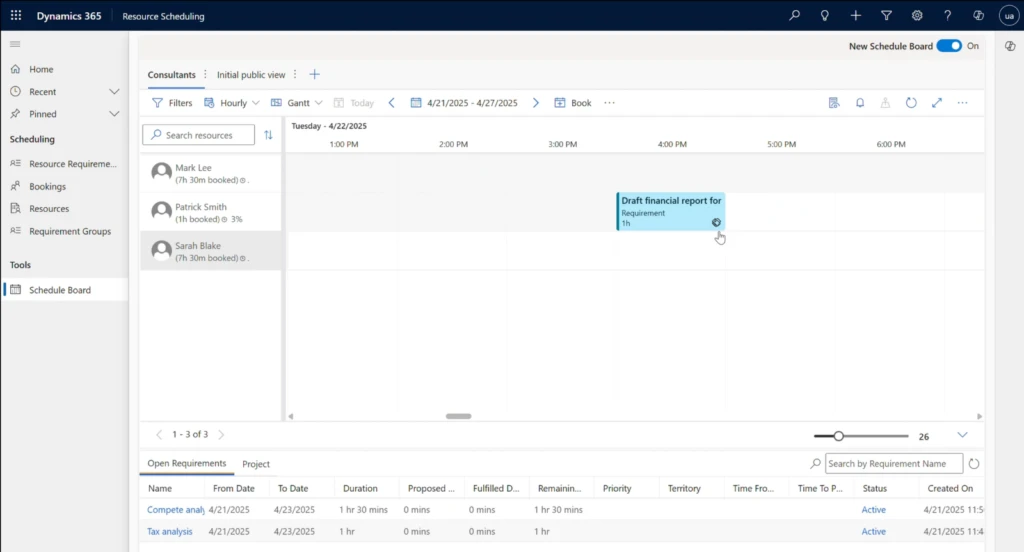

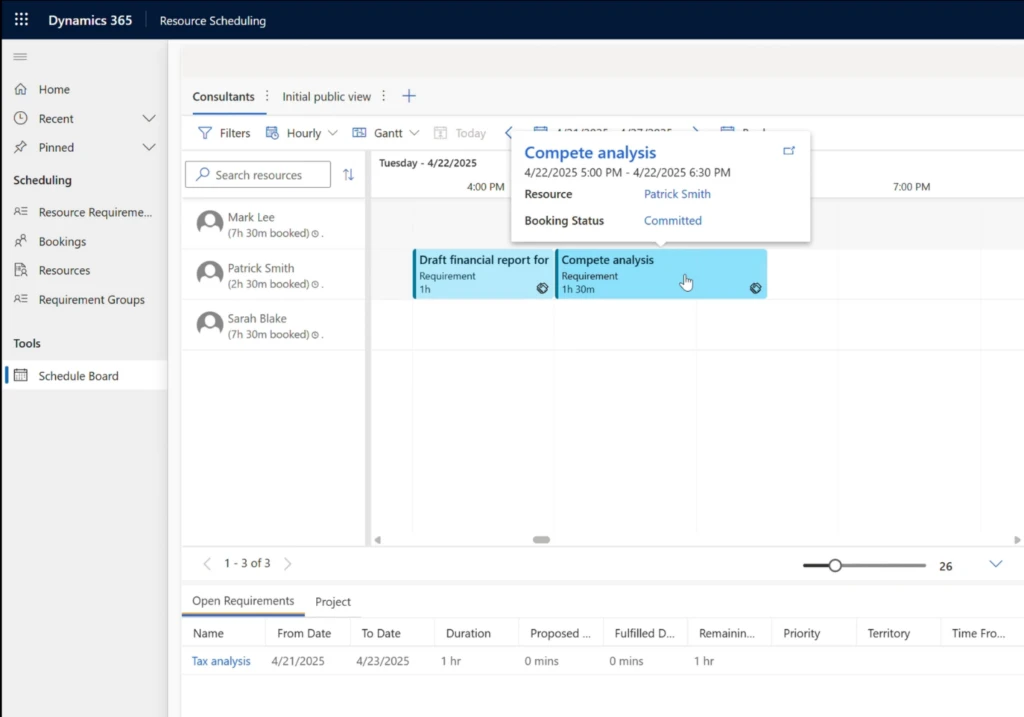

So how does it all work? At the heart of proactive engagement is a powerful platform built on Dynamics 365 Contact Center, Dynamics 365 Customer Insights – Journeys (CIJ), and Copilot Studio. Together, they’re changing how organizations connect with customers.

With this platform, you can design intelligent, automated outreach that feels personal. For example, AI-powered voice bots can handle routine calls, like reminding someone about a payment or updating them on a service issue, while seamlessly handing off to a customer service rep when the situation calls for a more personal touch. And the best part? You don’t need to be a developer to make it all happen. The platform’s low-code tools let business users build and manage customer journeys across voice, SMS, and email, all from one place.

It’s flexible, scalable, and built for the real world, where customer needs change fast and every interaction matters.

How proactive engagement works

There are two main ways to kick off outbound interactions. One is through Customer Insights – Journeys (CIJ), where you can build event-driven journeys that automatically trigger calls based on real-time signals, like a missed payment or a service disruption, or segment-based journeys that target customers who meet specific criteria. The other is through the CCaaS API, which is a good fit if you already have campaign tools or CRMs in place and want to plug into the platform for scenarios like scheduled callbacks.
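To make the difference between the two CIJ trigger styles concrete, here is a minimal Python sketch. It is not the CIJ or CCaaS API: `Customer`, `OutboundRequest`, `on_event`, `segment_journey`, and the event and reason names are all hypothetical, and in the product these journeys are built with low-code tools rather than code. The sketch only shows the shape of each style: an event-driven journey reacts to a single real-time signal, while a segment-based journey selects every customer who matches a criterion.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Optional


@dataclass
class Customer:
    customer_id: str
    region: str


@dataclass
class OutboundRequest:
    customer_id: str
    reason: str


# Hypothetical mapping from real-time signals to outreach reasons.
EVENT_REASONS = {
    "missed_payment": "payment_reminder",
    "service_disruption": "outage_update",
}


def on_event(event_type: str, customer: Customer) -> Optional[OutboundRequest]:
    """Event-driven journey: one real-time signal triggers one outreach request."""
    reason = EVENT_REASONS.get(event_type)
    if reason is None:
        return None  # this signal is not wired to any journey
    return OutboundRequest(customer.customer_id, reason)


def segment_journey(
    customers: Iterable[Customer],
    criteria: Callable[[Customer], bool],
    reason: str,
) -> List[OutboundRequest]:
    """Segment-based journey: every customer matching the criteria is targeted."""
    return [OutboundRequest(c.customer_id, reason) for c in customers if criteria(c)]
```

For example, `segment_journey(customers, lambda c: c.region == "west", "service_update")` would queue outreach for every customer in a hypothetical "west" region. Either way, the output is the same kind of request, which is what lets Contact Center handle orchestration identically regardless of how the call was triggered.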

Once the trigger is set, Contact Center takes over the orchestration. It determines the best time to call by checking whether the customer is within their preferred contact window, and it paces calls so CSRs aren’t overwhelmed. The handoff is seamless, and it ensures that every call feels intentional, timely, and relevant.
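The two orchestration checks described above, the contact window and pacing, can be sketched in a few lines of Python. This is an illustration under stated assumptions, not how Contact Center implements them: `ContactWindow`, `PendingCall`, `within_window`, `calls_to_place`, and `next_batch` are hypothetical, each customer is assumed to have a fixed UTC offset (no daylight-saving handling), and pacing is assumed to be the simplest rule of one live call per free CSR.

```python
from dataclasses import dataclass
from datetime import datetime, time, timedelta, timezone
from typing import List


@dataclass
class ContactWindow:
    start: time             # earliest local time the customer prefers to be called
    end: time               # latest local time (exclusive)
    utc_offset_hours: int   # assumption: fixed offset, no DST handling


@dataclass
class PendingCall:
    customer_id: str
    window: ContactWindow


def within_window(window: ContactWindow, now_utc: datetime) -> bool:
    """Return True if now_utc falls inside the customer's local contact window."""
    tz = timezone(timedelta(hours=window.utc_offset_hours))
    local = now_utc.astimezone(tz).time()
    if window.start <= window.end:
        return window.start <= local < window.end
    # Window wraps past midnight, e.g. 22:00 to 06:00.
    return local >= window.start or local < window.end


def calls_to_place(available_csrs: int, calls_in_flight: int) -> int:
    """Simple pacing rule: never have more live calls than free CSRs."""
    return max(0, available_csrs - calls_in_flight)


def next_batch(queue: List[PendingCall], now_utc: datetime,
               available_csrs: int, calls_in_flight: int) -> List[str]:
    """Pick the customers to dial now: in their window, up to pacing capacity."""
    eligible = [p.customer_id for p in queue if within_window(p.window, now_utc)]
    return eligible[:calls_to_place(available_csrs, calls_in_flight)]
```

Keeping the window check and the pacing rule as separate functions mirrors the article’s description: one decides *whether* a customer may be called now, the other decides *how many* calls the CSR pool can absorb.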

Dial modes: Tailored for every scenario

One of the most powerful aspects of proactive engagement is its flexibility, especially when it comes to placing calls: it offers dial modes optimized for different types of customer interactions.

Copilot dial

This is the most automated option. The system places the call, and a conversational AI bot (your Copilot) takes the lead. It’s perfect for transactional scenarios like payment reminders, service outages, or shipping updates. Copilot can handle the entire interaction, but if it senses the need for a more nuanced conversation, it can seamlessly hand the call off to a CSR. It’s a major upgrade from traditional IVR systems, offering a more natural, helpful experience.

Preview dial

Here, the CSR is in the driver’s seat from the start. Before the call is even placed, the CSR gets a full view of the customer context—past interactions, preferences, and the reason for the outreach. Once they’re ready, the system dials the customer. This mode is ideal for high-touch engagements where personalization is key, like follow-ups on complex service issues or high-value sales conversations.

Progressive dial

This mode strikes a balance between automation and human touch. The system waits until a CSR is available, then places a call to a customer. If the customer picks up, the CSR joins the conversation. It’s efficient, but still ensures that someone is ready to help the moment the customer answers. You can even use Copilot to verify the customer before the CSR joins, adding an extra layer of smart filtering.
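The three dial modes differ mainly in two decisions: when the call may be placed, and who is on the line first when the customer answers. The Python sketch below encodes those two decisions as a small decision table. `DialMode`, `ready_to_dial`, and `first_on_line` are hypothetical names for illustration only, not part of any Dynamics 365 API, and a mid-call handoff from Copilot to a CSR is not modeled.

```python
from enum import Enum


class DialMode(Enum):
    COPILOT = "copilot"
    PREVIEW = "preview"
    PROGRESSIVE = "progressive"


def ready_to_dial(mode: DialMode, csr_available: bool, csr_confirmed: bool) -> bool:
    """Decide whether the system may place the call under each mode."""
    if mode is DialMode.COPILOT:
        return True            # the bot takes the call; no CSR is needed up front
    if mode is DialMode.PREVIEW:
        return csr_confirmed   # the CSR has reviewed the customer context and is ready
    return csr_available       # PROGRESSIVE: wait until a CSR is free


def first_on_line(mode: DialMode, copilot_verification: bool = False) -> str:
    """Decide who speaks to the customer first once the call connects."""
    if mode is DialMode.COPILOT:
        return "copilot"
    if mode is DialMode.PROGRESSIVE and copilot_verification:
        return "copilot"       # Copilot verifies the customer, then the CSR joins
    return "csr"
```

Reading the table top to bottom shows the trade-off the article describes: Copilot dial never waits on a person, preview dial waits on a specific CSR’s go-ahead, and progressive dial waits only for *some* CSR to be free.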

Use cases

The beauty of proactive engagement is its versatility. It spans the entire customer journey rather than serving just one department or one type of interaction. Think about a utility company sending outage alerts before customers even notice a problem. Or a bank reminding customers about upcoming payments. Or a retailer following up on an abandoned cart with a personalized offer.

Whether it’s transactional, commercial, or customer-initiated, proactive engagement makes it easy to deliver the kind of service that feels thoughtful, timely, and human.

What’s next for proactive engagement

Microsoft is continuing to invest in expanding proactive engagement to support even more sophisticated, scalable, and compliant outreach. One of the most exciting additions on the horizon is predictive dialing, designed for high-volume outreach such as marketing campaigns or large-scale service updates. It uses AI to forecast CSR availability and customer pickup rates, allowing the system to place multiple calls per CSR so that CSRs stay productive while customers get timely responses.
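Predictive dialing is still on the roadmap, and the article says only that it relies on AI forecasts of CSR availability and pickup rates. As a rough intuition for why those two forecasts justify more than one call per CSR: if p is the forecast pickup rate and A the number of CSRs expected to be free, placing about A / p calls yields about A answered calls. The Python sketch below applies that simple expected-value rule with a cap; it is a stand-in for illustration, not Microsoft’s forecasting model, and `predictive_calls` and its `max_ratio` cap are hypothetical.

```python
import math


def predictive_calls(expected_free_csrs: float, pickup_rate: float,
                     max_ratio: float = 3.0) -> int:
    """Number of calls to place so expected answers roughly match free CSRs.

    expected_free_csrs: forecast of CSRs who will be free when calls connect.
    pickup_rate: forecast probability that a dialed customer answers, in (0, 1].
    max_ratio: hypothetical cap on calls per CSR, to limit abandoned calls
               when the pickup forecast is low or wrong.
    """
    if not 0 < pickup_rate <= 1:
        raise ValueError("pickup_rate must be in (0, 1]")
    if expected_free_csrs <= 0:
        return 0
    ratio = min(1 / pickup_rate, max_ratio)  # calls per free CSR
    # Round down: dialing too many risks customers answering with no CSR free.
    return math.floor(expected_free_csrs * ratio)
```

With 4 CSRs expected free and a 50% pickup rate, this places 8 calls; at a 25% pickup rate the cap holds it to 12 rather than 16. Rounding down and capping reflect a deliberate bias toward not over-dialing, which is where the compliance items below come into play.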

Also on the roadmap:

- Enhanced regulatory compliance for commercial calling (e.g., TCPA, OFCOM)

- Post-call outcome processing (e.g., voicemail detection, retries)

- Conversational SMS and Conversational WhatsApp support for outbound journeys

Learn more about proactive engagement

To learn more, read the documentation: Configure proactive engagement (preview) on Microsoft Learn.

Try the preview and ensure your organization stays ahead of customer expectations. Send your feedback to pefeedback@microsoft.com.

The post The power of proactive engagement in Dynamics 365 Contact Center appeared first on Microsoft Dynamics 365 Blog.
