NLU+: Fine-tuned language intelligence for smarter conversations

In today’s fast-moving world, it’s not enough to just hear what customers say—you need to understand what they really mean. Whether they’re talking to a voice agent (IVR) or chatting with a virtual agent, it’s important that the technology behind the scenes can truly understand them. That’s where Natural Language Understanding (NLU) comes in. It helps contact centers respond more naturally, reduce the need to transfer calls, and make every interaction smoother and more helpful.

To help businesses improve how they talk to customers, we’re introducing NLU+, a new feature in Microsoft Copilot Studio. NLU+ is designed for companies that have a lot of customer conversations and want more control over how their virtual agents respond. It’s especially useful for teams that have collected years of chat or call data. With NLU+, they can fine-tune how their bots understand and reply, making conversations feel more personal and effective.

Why now? Many companies are curious about using generative AI but aren’t quite ready to go all in. NLU+ offers a reliable and customizable option that gives you accurate results and full control over your data. It lets you build smart language models using your own past conversations, tailored to your business’s unique way of speaking and working. This means your agents can better understand even tricky or industry-specific language, leading to faster and more natural customer service.

Think of NLU+ like a smart engine that gets better the more you use it. It doesn’t just pick up on keywords; it understands full sentences and the meaning behind them, so customers can speak freely and still be understood. This makes conversations feel more human and less robotic.

Training the model

NLU+ works by learning from examples. You give it sample conversations with notes that explain what each part means. This helps it understand full sentences instead of just following a step-by-step script. While it takes a bit of effort to set up, the payoff is a system that keeps getting better over time. If you want a virtual agent that grows with your business and keeps delivering great service, NLU+ is a great choice.

Alongside the other language understanding options (generative AI, standard NLU, and Azure CLU), NLU+ lets you build a wide range of self-service agents. Generative AI is a good fit for low-maintenance agentic systems, standard NLU for rapid setups, and NLU+ for more complex tuning needs. Azure CLU helps you connect with existing Microsoft language tools. Together, these options let you find the right balance between speed, accuracy, and control.

Custom ontology

An ontology is a formal representation of knowledge within a specific domain. NLU+ allows you to define your own intents, entities, and relationships using annotated data, forming your custom ontology. This gives you full control over how your agent understands and processes language. For example, in a flight booking scenario, you can define variables like origin, destination, travel date, and number of passengers. You can now add entity annotations within topic triggers, which couples intents and entities more tightly.
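To make the idea of annotated data concrete, here is a minimal Python sketch of one annotated training example for the flight booking scenario. It is an illustration only: the class names, the `BookFlight` intent, and the entity names are assumptions made for this example, not the format NLU+ uses internally or expects on import.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class EntitySpan:
    """A labeled character range inside an utterance (end is exclusive)."""
    entity: str
    start: int
    end: int


@dataclass
class AnnotatedUtterance:
    """One training example: the text, its intent, and its entity spans."""
    text: str
    intent: str
    spans: list[EntitySpan] = field(default_factory=list)

    def annotate(self, entity: str, value: str) -> None:
        """Label the first occurrence of `value` in the text as `entity`."""
        start = self.text.find(value)
        if start == -1:
            raise ValueError(f"{value!r} does not occur in {self.text!r}")
        self.spans.append(EntitySpan(entity, start, start + len(value)))

    def values(self) -> dict[str, str]:
        """Return the annotated text for each entity name."""
        return {s.entity: self.text[s.start:s.end] for s in self.spans}


# Hypothetical intent and entity names for the flight booking scenario.
example = AnnotatedUtterance(
    text="Book a flight from Miami to Boston for two people tomorrow",
    intent="BookFlight",
)
example.annotate("origin", "Miami")
example.annotate("destination", "Boston")
example.annotate("passengers", "two")
example.annotate("travel_date", "tomorrow")

print(example.intent, example.values())
```

Storing each entity as a character span, rather than as a bare value, keeps the link between the label and the exact words the customer used, which is what ties an entity to the intent it appears in.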

Advanced slot filling

Once your data is annotated, NLU+ can extract multiple data points from a single utterance. This eliminates the need for repetitive back-and-forth questions. For instance, if a customer says, “Book a flight from Miami to Boston for two people tomorrow,” the model can simultaneously extract the origin, destination, number of passengers, and travel date. This not only saves time but also creates a smoother, more conversational experience.
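The effect on the conversation can be sketched in a few lines of Python. The snippet below does not perform any extraction itself; it assumes the model has already returned a set of slot values and shows how an agent could then ask only for what is still missing. The slot names and prompt wording are hypothetical.

```python
# Hypothetical slots for a flight booking topic, with the question the
# agent would ask if the customer did not already supply the value.
REQUIRED_SLOTS = {
    "origin": "Where are you flying from?",
    "destination": "Where are you flying to?",
    "passengers": "How many people are traveling?",
    "travel_date": "When do you want to travel?",
}


def remaining_prompts(extracted: dict[str, str]) -> list[str]:
    """Return the questions still needed after one utterance is processed."""
    return [
        prompt
        for slot, prompt in REQUIRED_SLOTS.items()
        if not extracted.get(slot)  # missing or empty values still need asking
    ]


# Assumed extraction result for:
# "Book a flight from Miami to Boston for two people tomorrow"
full = {
    "origin": "Miami",
    "destination": "Boston",
    "passengers": "two",
    "travel_date": "tomorrow",
}

# Assumed extraction result for: "I need to get to Boston"
partial = {"destination": "Boston"}

print(remaining_prompts(full))     # no follow-up questions needed
print(remaining_prompts(partial))  # three questions remain
```

When every slot is filled from the first utterance, the list of follow-up questions is empty and the agent can move straight to confirming the booking.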

NLU+ also allows makers to add more training data to help the model understand the many ways customers might answer a question, thereby improving entity extraction accuracy.

Bulk data import

NLU+ supports the import of large volumes of training data—making it easy to scale and refine your models. With simple import/export functionality, you can quickly update topic triggers, append new data, and iterate on your model without starting from scratch. This is especially useful for enterprises that need to manage multiple topics or frequently update their conversational flows.
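If you prepare import data outside Copilot Studio, a small script can help you append new phrases without creating duplicates. The Python sketch below is one possible approach, assuming trigger phrases are held as a simple mapping from topic name to a list of phrases. That structure and the function name are assumptions for illustration; they do not describe the actual NLU+ import file format.

```python
def merge_trigger_phrases(
    existing: dict[str, list[str]],
    new: dict[str, list[str]],
) -> dict[str, list[str]]:
    """Append new trigger phrases per topic, skipping blanks and duplicates.

    Duplicates are detected case-insensitively after trimming whitespace.
    The input mappings are not modified.
    """
    merged = {topic: list(phrases) for topic, phrases in existing.items()}
    for topic, phrases in new.items():
        bucket = merged.setdefault(topic, [])
        seen = {p.strip().casefold() for p in bucket}
        for phrase in phrases:
            key = phrase.strip().casefold()
            if key and key not in seen:
                seen.add(key)
                bucket.append(phrase.strip())
    return merged


# Hypothetical topics and phrases.
existing = {"BookFlight": ["Book a flight"]}
new = {
    "BookFlight": ["book a flight ", "I need a plane ticket"],
    "CancelFlight": ["Cancel my trip"],
}

merged = merge_trigger_phrases(existing, new)
for topic, phrases in merged.items():
    print(topic, phrases)
```

Removing duplicates before import keeps the training set from being skewed toward phrases that happen to appear in more than one source file.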

Consistent latency using a precompiled model

You can use the Train feature to precompile your model. This ensures low-latency, deterministic performance, which is critical for high-volume environments. The training process also includes a unique feature for voice agents (IVR), where the recognizer is trained alongside the model to improve speech recognition accuracy. Once training is complete, you’ll receive a status update, and if there are any issues, you can export the results for easy troubleshooting and refinement.

Get started with NLU+

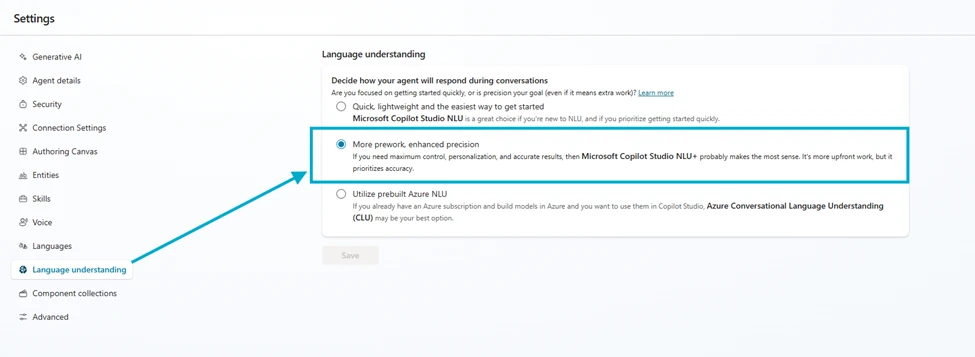

Getting started with NLU+ is simple. All you need is a Dynamics 365 Contact Center license. Once it is enabled, navigate to the Language Understanding section in Copilot Studio and select NLU+ as your orchestration engine. From there, you can upload your data, annotate it, train your model, and test it, all within the same interface. Once you’re satisfied with the results, publish your agent and start delivering smarter, faster, and more personalized conversations.

Learn more

Watch a quick video introduction.

Read the documentation:

The post NLU+: Fine-tuned language intelligence for smarter conversations appeared first on Microsoft Dynamics 365 Blog.
