by Contributed | Dec 15, 2023 | Technology

This article is contributed. See the original author and article here.

Microsoft 365 Copilot is not only a powerful tool for creating documents, but also a smart assistant for Microsoft Teams meetings. With Copilot, you can easily prepare for your meetings, take notes, capture action items, and follow up on tasks. Here are some ways that Copilot can help you in your Teams meetings:

– Before the meeting, Copilot can scan your calendar and suggest relevant documents, insights, and questions to help you get ready. You can also ask Copilot to create an agenda and share it with the attendees.

– During the meeting, Copilot can listen to the conversation and transcribe it in real time. You can also ask Copilot to summarize the key points, highlight important information, and generate a meeting recap.

– After the meeting, Copilot can send the meeting recap to the attendees, along with the action items and due dates. You can also ask Copilot to follow up on the tasks, check the progress, and remind the assignees.

With Microsoft 365 Copilot in Teams meetings, you can save time, improve collaboration, and enhance productivity. (fyi, this preceding description… was written by Microsoft 365 Copilot)

In this Day 10 of Copilot, I walk through the use of Microsoft 365 Copilot within Microsoft Teams meetings.

Resources:

Previous Days of Copilot:

Thanks for visiting – Michael Gannotti LinkedIn | Twitter

by Contributed | Dec 14, 2023 | Dynamics 365, Microsoft 365, Technology

How often do you find yourself spending too much time reading through long and complex customer cases, trying to figure out the main problem and the best solution? How many cases do you handle per day, and how do you ensure quality and consistency in your responses? How do you keep track of the most relevant and up-to-date information from multiple sources and channels?

These are some of the challenges that support agents face every day, and they can affect their productivity, performance, and satisfaction. Customers expect fast and accurate answers to their questions and issues, and they don’t want to repeat themselves or wait for long periods of time. Support agents need to be able to quickly understand the context and history of each case, identify the root cause and the best action, and communicate effectively with customers and colleagues.

This is where summarization comes in. Summarization is an AI-powered feature that provides support agents with case summaries to help them quickly understand the context of a case and resolve customer issues more efficiently. It uses large language models and natural language processing to analyze and condense information from various sources and formats, such as emails, chat messages, phone calls, documents, and web pages, into concise and relevant summaries. Summarization can save you time, improve your accuracy, and enhance the customer experience.

This blog post explains how summarization works, what benefits it can bring to your support team and your organization, and how you can turn it on and use it with Dynamics 365 Customer Service Copilot, the leading AI platform for customer service.

Summarization: The gateway to generative AI innovation

Summarization stands out as an ideal first use case, primarily due to its immediate, measurable impact on efficiency and productivity. It automates the extraction of crucial details such as the case title, customer, subject, product, priority, case type, and description. The AI-generated summaries offer context and communicate the actions already undertaken to address the issue. Summarization delivers tangible benefits, showcasing the power of generative AI in a clear and demonstrable manner.

Compared to other AI use cases, the implementation of summarization is less complex. It is a straightforward starting point for organizations new to generative AI, reducing barriers to entry and integration time. The success of summarization in enhancing productivity and achieving measurable outcomes serves as a confidence-building milestone for organizations. Experiencing the transformative power of generative AI fosters trust in the technology. Additionally, it lays a robust foundation for delving into more advanced use cases.

Starting with summarization brings immediate operational improvements. It also helps organizations to embrace the broader potential of generative AI, setting the stage for future business process innovation.

By selecting the Make case summaries available to agents checkbox, administrators can give their organization a quick win that adds immediate business value. We have invested in summarization features to allow more customization options. Admins can now customize the format in which agents view conversation summaries. They can also customize the entities and fields that are used for case summaries.
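To make the idea of admin-configurable case summary fields concrete, here is a minimal sketch, assuming a case is a plain dictionary and the admin's choice is a list of field names. The default list mirrors the fields named earlier in this post (case title, customer, subject, product, priority, case type, description), but the names `SummaryConfig`, `build_summary_input`, and the field keys are hypothetical and are not Dynamics 365 schema or API names. The language-model step that actually writes the summary is not shown.

```python
from dataclasses import dataclass, field

# Hypothetical field keys mirroring the fields named in this post;
# they are illustrative, not Dynamics 365 schema names.
DEFAULT_SUMMARY_FIELDS = ["title", "customer", "subject", "product",
                          "priority", "case_type", "description"]


@dataclass
class SummaryConfig:
    # Each config gets its own copy of the default list so edits don't leak.
    fields: list[str] = field(default_factory=lambda: list(DEFAULT_SUMMARY_FIELDS))


def build_summary_input(case: dict, config: SummaryConfig) -> str:
    """Collect only the admin-selected fields, in order, as summarizer input."""
    lines = []
    for name in config.fields:
        value = case.get(name)
        if value:  # skip fields that are missing or empty on this case
            lines.append(f"{name}: {value}")
    return "\n".join(lines)
```

The design choice being illustrated is simply that the configured field list, not the full case record, determines what context the summary is built from.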

Learn more

To learn more about summarization, read the documentation: Enable summarization of cases and conversations | Microsoft Learn

The post Begin your copilot journey with summarization appeared first on Microsoft Dynamics 365 Blog.

Brought to you by Dr. Ware, Microsoft Office 365 Silver Partner, Charleston SC.

by Contributed | Dec 14, 2023 | Technology

Today, we announced that Copilot for Microsoft 365 will be available to purchase for faculty and staff with Microsoft 365 A3 and A5 licenses beginning January 1, 2024. Also, starting in early February 2024, Microsoft Copilot (formerly Bing Chat Enterprise) will be available for all faculty users and Higher Education students who are 18 years or older.

Copilot for Microsoft 365 available for Education faculty and staff

Beginning on January 1, 2024, Copilot for Microsoft 365 will be generally available for Microsoft 365 A3 and A5 faculty for $30 per user per month with a 300-seat minimum per tenant. Copilot for Microsoft 365 combines the power of large language models (LLMs) with your organization’s data – all in the flow of work – to turn your words into one of the most powerful productivity tools on the planet. We’re excited to bring generative AI to educational institution faculty and staff, benefitting their impactful work across research, communications, marketing, data analysis, fundraising, and management.
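The announced terms imply a minimum commitment per tenant: 300 seats × $30 per user per month = $9,000 per month, or $108,000 over twelve months at list price. The sketch below reproduces that arithmetic. It assumes an order below the minimum is treated as the minimum, and it ignores taxes, discounts, and actual billing terms; `monthly_cost` is a hypothetical helper, not a Microsoft pricing tool.

```python
# Figures taken from the announcement; everything else is an assumption.
PRICE_PER_USER_PER_MONTH = 30  # USD per user per month
MINIMUM_SEATS = 300            # seat minimum per tenant


def monthly_cost(requested_seats: int) -> int:
    """Monthly list cost in USD, treating any order below the minimum as the minimum."""
    if requested_seats < 0:
        raise ValueError("seat count cannot be negative")
    billed_seats = max(requested_seats, MINIMUM_SEATS)
    return billed_seats * PRICE_PER_USER_PER_MONTH


if __name__ == "__main__":
    for seats in (50, 300, 1200):
        cost = monthly_cost(seats)
        print(f"{seats} seats: ${cost}/month, ${cost * 12}/year")
```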

Copilot inherits your organization’s security, compliance, and privacy policies set up in Microsoft 365. To learn more about Copilot for Microsoft 365, you can review documentation including requirements, setup, and information about privacy, security, and compliance.

Educational institutions interested in purchasing Copilot for Microsoft 365 should contact their account teams.

Microsoft Copilot with commercial data protection available to faculty and higher education students

We’re also excited to announce that beginning in early February we’re expanding availability of Microsoft Copilot with commercial data protection (formerly Bing Chat Enterprise) to all faculty, staff, and higher education students age 18+. Eligible users, when signed in with their Entra ID account, can access Microsoft Copilot with commercial data protection from copilot.microsoft.com, bing.com/chat, or through Copilot in Microsoft Edge and Copilot in Windows. Copilot with commercial data protection means that data is protected, chat data is not saved, Microsoft has no eyes-on access to it, and it is not used to train foundation models. Learn more in this article.

Get started

Our education customers can start getting ready for Copilot now. Validate your educational institution type in the Microsoft 365 admin center, and indicate student eligibility in the Microsoft Entra admin center by updating the “Age Group” and “Consent Provided” fields. For more details on what you can do to prepare for Microsoft Copilot in your Education tenant, review this blog.
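Before rollout, an admin may want to spot user records where the two fields mentioned above are still empty. The sketch below works on plain dictionaries whose keys mirror the Microsoft Graph user properties `ageGroup` and `consentProvidedForMinor`, which correspond to the “Age Group” and “Consent Provided” fields; it makes no API calls. The rule in `looks_adult` (treating only `ageGroup == "Adult"` as 18+) is a hypothetical simplification, not Microsoft's eligibility logic, so check the linked guidance for the authoritative criteria.

```python
from typing import Optional, TypedDict


class UserRecord(TypedDict):
    # Keys mirror Graph user properties; values here are illustrative data only.
    userPrincipalName: str
    ageGroup: Optional[str]                 # e.g. "Adult", "NotAdult", "Minor", or None
    consentProvidedForMinor: Optional[str]  # e.g. "Granted", "Denied", "NotRequired", or None


ELIGIBILITY_FIELDS = ("ageGroup", "consentProvidedForMinor")


def missing_fields(user: UserRecord) -> list[str]:
    """Return the eligibility-related fields that are still unset for this user."""
    return [name for name in ELIGIBILITY_FIELDS if user.get(name) is None]


def looks_adult(user: UserRecord) -> bool:
    """Hypothetical rule: treat only ageGroup == "Adult" as 18 or older."""
    return user.get("ageGroup") == "Adult"


def users_needing_update(users: list[UserRecord]) -> list[str]:
    """UPNs of users with at least one eligibility field left empty."""
    return [u["userPrincipalName"] for u in users if missing_fields(u)]
```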

To learn more about managing Copilot, including how to disable it, review this documentation. Admins will see the service plan attached to their user’s Microsoft 365 license in late January and rollout will begin in early February.

For more information, read the full announcement.

by Contributed | Dec 13, 2023 | Technology

We are excited to announce limited General Availability of Azure Defender for new Microsoft Azure Database for PostgreSQL – Flexible Server instances. This add-on, if deployed, provides an additional security barrier for your Azure PostgreSQL server on top of the existing security features we blogged about earlier.

In the following article, we will discuss how adding Azure Defender for OSS databases to your PostgreSQL Flexible Server can help protect your applications from attacks.

Protection against brute force attacks

A brute force attack uses trial and error to guess login credentials or encryption keys, or to find a hidden web page. Attackers work through all possible combinations, hoping to guess correctly.

The “brute force” name comes from the tactic itself: the attacker keeps making attempts, applying excessive “force”, until reaching the desired result, which is entry into a system with the right credentials. Despite being one of the oldest hacking methods, it remains widespread. According to Verizon’s 2020 Data Breach Investigations Report, hacking, which includes brute forcing passwords, remains the primary attack vector, and over 80% of breaches caused by hacking involve brute force or the use of lost or stolen credentials.

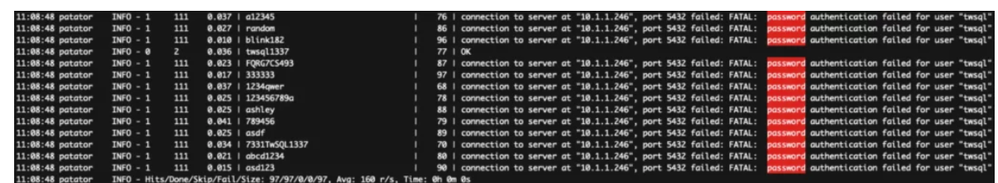

PostgreSQL brute force attack example

When Microsoft Defender detects a brute force attack, it triggers an alert to make you aware that the attack took place. It can also distinguish a simple brute force attack from a brute force attack on a valid user and from a successful brute force attack.
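To illustrate the three alert categories described above, here is a minimal sketch of one possible heuristic over a time-ordered login log. It is not how Microsoft Defender works internally; the `LoginEvent` type, the per-IP grouping, and the default threshold of 10 failures are all assumptions chosen for the example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class LoginEvent:
    source_ip: str
    username: str
    success: bool


def classify_brute_force(events: list[LoginEvent], known_users: set[str],
                         threshold: int = 10) -> dict[str, str]:
    """Label each source IP with >= threshold failed logins (hypothetical heuristic).

    Labels: "successful" if a login succeeded after the threshold was reached,
    "valid_user" if failures targeted an existing account, otherwise "simple".
    Events are assumed to be in time order.
    """
    failures: dict[str, int] = defaultdict(int)
    hit_valid_user: dict[str, bool] = defaultdict(bool)
    succeeded: dict[str, bool] = defaultdict(bool)

    for e in events:
        if e.success:
            # A success only counts if it follows a run of failures.
            if failures[e.source_ip] >= threshold:
                succeeded[e.source_ip] = True
        else:
            failures[e.source_ip] += 1
            if e.username in known_users:
                hit_valid_user[e.source_ip] = True

    labels: dict[str, str] = {}
    for ip, count in failures.items():
        if count < threshold:
            continue  # too few failures to call it brute force
        if succeeded[ip]:
            labels[ip] = "successful"
        elif hit_valid_user[ip]:
            labels[ip] = "valid_user"
        else:
            labels[ip] = "simple"
    return labels
```

A production detector would also consider time windows and distributed sources; this sketch keeps only the distinction between the three outcomes.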

Detecting anomalous database access patterns

Databases may store extremely sensitive business information, making them a major target for attackers. Securing that data from damage or leakage is therefore critical. To manage this, enterprises typically implement several layers of protection between users and data, at the network, host, and database levels. Protection at the database level includes access control models that limit which legitimate users can read or write data, and sometimes encryption. These security models are not always sufficient to prevent misuse, especially insider abuse by legitimate users. When Microsoft Defender detects an anomalous access pattern, it fires an alert to make you aware of that activity as well.
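One simple way to think about an “anomalous access pattern” is a user whose activity today departs sharply from that user's own history. The sketch below applies a z-score test to, for example, daily rows read per user. This is a hypothetical stand-in chosen for illustration; the statistic, the threshold of 3 standard deviations, and the metric are assumptions, not Microsoft Defender's detection model.

```python
from statistics import mean, pstdev


def is_anomalous(history: list[float], today: float, z_threshold: float = 3.0) -> bool:
    """Flag `today` if it lies more than z_threshold standard deviations
    from the mean of this user's own history (hypothetical heuristic)."""
    if len(history) < 2:
        return False  # not enough baseline to judge
    mu = mean(history)
    sigma = pstdev(history)  # population standard deviation of the baseline
    if sigma == 0:
        return today != mu  # any change from a perfectly flat baseline stands out
    return abs(today - mu) / sigma > z_threshold
```

For example, with a baseline of 90 to 110 rows read per day, a day with 5,000 rows read would be flagged, while a day with 104 would not.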

Enabling Microsoft Defender with PostgreSQL Flexible Server

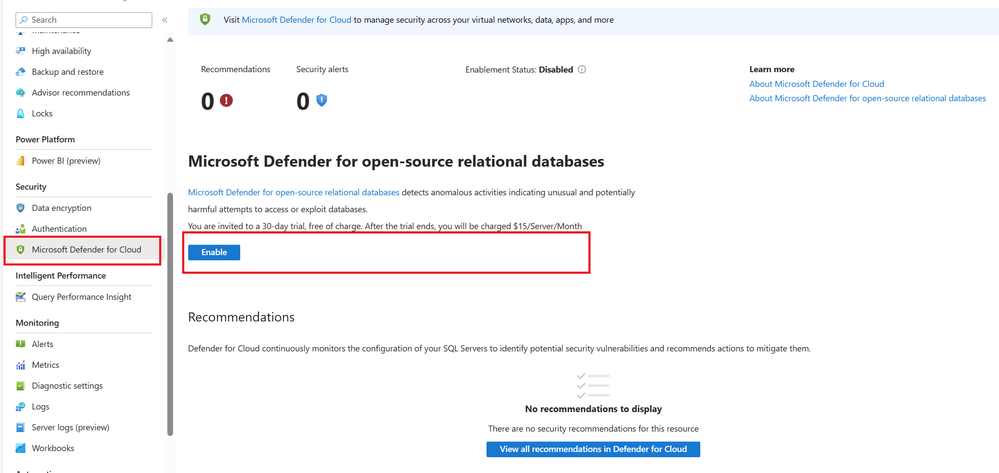

Enabling Defender with PostgreSQL Flexible Server in Azure Portal

- From the Azure portal, navigate to the Security menu in the left pane.

- Select Microsoft Defender for Cloud.

- Click Enable in the right pane.

Resources

For more information on Azure Defender and its use with PostgreSQL Flexible Server, see the following:

To learn more about our Flexible Server managed service, see the Azure Database for PostgreSQL service page. We’re always eager to hear customer feedback, so please reach out to us at Ask Azure DB for PostgreSQL.

by Contributed | Dec 12, 2023 | Technology

Today, we’re excited to announce the public preview of the Arc VS Code Extension – a one-stop shop for all your developer needs. Whether you’re just starting your journey with Arc or you’re already in production with an Arc-enabled application, our extension can help streamline your developer experience. The Arc VS Code Extension is a home for enterprise and ISV developers alike. Its features can help you accelerate development both for workloads that you run at the edge and for services that you build to publish on Azure Marketplace.

We understand that it can be time-consuming to learn new technologies and to develop and test your applications. To add to the challenge, building your first application requires you to ramp up on and interact with multiple products as you go through the developer workflow. To simplify this experience, we’re bringing these resources into VS Code so you never have to leave your workbench. You can use the Azure Arc VS Code Extension to deploy your first Arc-enabled environment, learn Arc technologies using sample applications, and deploy to an AKS cluster for testing.

A Developer Hub for Arc

After installing the Arc extension in VS Code, you can complete the following tasks in the editor:

- Deploy your first Arc-enabled environment by creating a single-machine AKS cluster. You can use this cluster to deploy applications locally and test your Arc-enabled workloads.

- Connect your AKS clusters in your development or test environments to Arc using a single click.

- Discover sample applications by using the VS Code Extension to clone the Jumpstart Agora repository, a collection of sample applications for various cloud-to-edge scenarios.

- Create your first Arc-enabled service from scratch, such as a simple HelloWorld application, using the provided sample code, and deploy it to an AKS cluster on your desktop. Once you’ve created your HelloWorld application, you can move on to more complex applications, including this sample application with Key Vault.

What’s next for the Arc VS Code Extension

We’re currently in public preview, and we’re working hard to add more features to the VS Code Extension that will further simplify your developer workflow. We’re working on more sample applications and a more flexible and robust developer environment.

Feedback & Contributions

The Arc VS Code Extension is an open-source, MIT-licensed product! Our team is excited to collaborate with all of you and intends for the Arc VS Code Extension to be community-driven. We welcome contributions as well as feedback. Our team encourages you to file issues, open pull requests, contribute to discussions, and more via our GitHub repository.
