Forrester study finds 346% ROI when modernizing service operations with Dynamics 365 Field Service

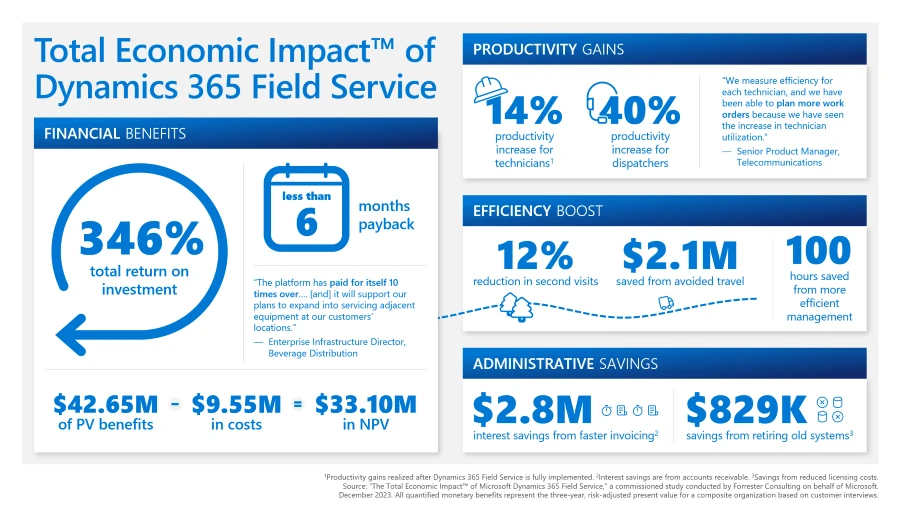

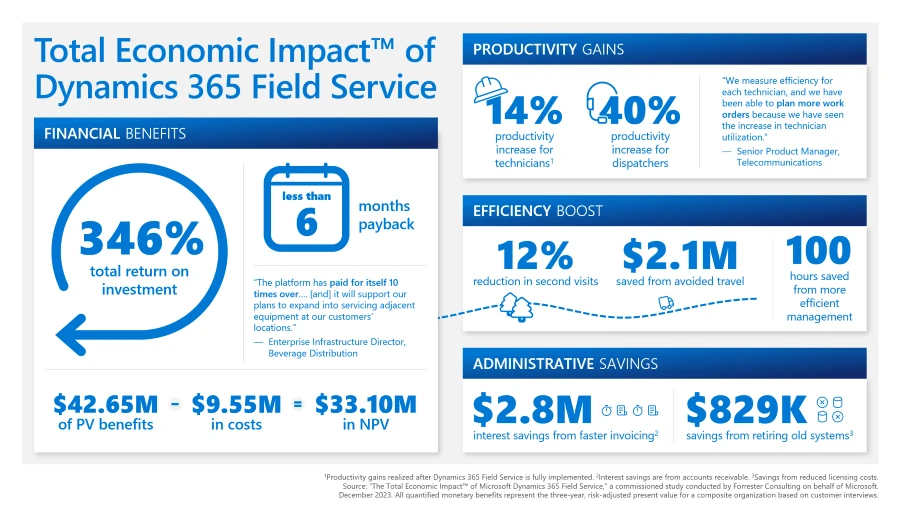

We are pleased to share the results of a December 2023 Forrester Consulting Total Economic Impact™ (TEI) study commissioned by Microsoft. According to the study, software that helps organizations elevate field service delivery can improve financial performance in two ways: by increasing customer retention and expansion through service that exceeds expectations, and by increasing productivity. Forrester calculates that Microsoft Dynamics 365 Field Service delivered benefits of $42.65 million over three years to a composite organization. The total investment required was $9.5 million, yielding an ROI of 346% with a payback period of less than six months.
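For readers who want to sanity-check the headline number, here is a minimal sketch of the arithmetic. It assumes ROI is computed as net benefits divided by total costs, which is the common definition and is assumed here rather than quoted from the study. The function and variable names are illustrative only. Because the article quotes rounded figures, the result lands near 349% rather than exactly 346%; the gap is presumably due to rounding of the published inputs.

```python
def roi_percent(total_benefits: float, total_costs: float) -> float:
    """Return ROI as a percentage: (benefits - costs) / costs * 100."""
    if total_costs <= 0:
        raise ValueError("total_costs must be positive")
    return (total_benefits - total_costs) / total_costs * 100


# Rounded figures quoted in this article, in millions of US dollars.
benefits_musd = 42.65
investment_musd = 9.5

# Net benefit is what remains after subtracting the investment.
net_benefit_musd = benefits_musd - investment_musd
roi = roi_percent(benefits_musd, investment_musd)

print(f"Net benefit: ${net_benefit_musd:.2f}M")
print(f"ROI from rounded inputs: {roi:.0f}%")
```

The same function can be reused with the unrounded benefit and cost figures from the full study to reproduce the reported ROI more closely.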

Methodology and purpose

TEI is a methodology developed by Forrester Research to assist companies with technology-related purchase decisions by providing business decision-makers with a framework to evaluate the potential financial impact of the technology on the entire organization.

To conduct this TEI study, Forrester Consulting interviewed 11 representatives from seven organizations with experience using Dynamics 365 Field Service. The characteristics of these businesses and the results of the interviews were then aggregated to develop a composite organization. The key findings of the study are based on the composite organization and are summarized below.

Key challenges

The organizations that were interviewed for the study shared the following challenges prior to adopting Dynamics 365:

- Lack of visibility into field service status

- Communication issues among management, sales, and service teams

- Technicians’ inability to complete work orders in a timely fashion

With these challenges top of mind, the interviewees sought to invest in a solution that could improve the productivity and efficiency of their field service teams, enable cost savings, and lead to customer retention and profitability.

Key findings

Dynamics 365 Field Service helps organizations deliver onsite service to customer locations. The application includes work order automation, scheduling algorithms, asset servicing, mobility, Microsoft 365 integration, and infusion of generative AI through Copilot to set up frontline workers for success when they are onsite providing service for customers. It is part of the larger Dynamics 365 portfolio of applications designed to work together to deliver efficiency and improve customer experience.

Forrester’s study revealed seven quantifiable impact areas: increased first-time fix rate, increased field technician productivity, eliminated standard time-to-invoice delays, avoided travel time, improved dispatcher productivity, enhanced management productivity, and retired legacy solutions.

We examine each of these areas below in more detail to understand how Dynamics 365 Field Service delivers value across field service organizations.

Increased first-time fix rate

Sending field technicians out to resolve customer issues is expensive even for the first visit, so many organizations do everything they can to avoid a second truck roll. Deploying Dynamics 365 Field Service helped the organizations increase their first-time fix rates by ensuring that technicians could quickly locate equipment, understand its history and problems, tap into institutional knowledge about those problems, and stock the appropriate parts for service. Increasing first-time fix rates also helped these organizations avoid 12% of second visits by additional technicians to complete a service call.

Increased technician productivity

Interviewees found that Dynamics 365 Field Service helped to remove many administrative tasks, so field technicians could spend more of their time addressing customer issues. Organizations were also able to use the solution to find the best field technician for each job, determine the most efficient route to a customer site, and ensure that technicians were carrying the right parts and tools to fix the problem. In addition, the Microsoft Dynamics 365 Remote Assist feature helped technicians draw on institutional knowledge rather than spend time tracking down a peer or documentation. Both managers and technicians also had greater visibility into technicians’ service calls, which helped them plan for greater efficiency when scheduling customer work orders. All of this increased field technician productivity by up to 14% once Dynamics 365 Field Service and Remote Assist were fully implemented.

Decreased time to invoice customers

An inability to integrate field service applications with key finance applications often meant considerable gaps between the time a service order was completed and the time the customer was billed. One interviewee noted that paper-based invoicing for service calls meant up to a month could go by before an invoice was sent, but after implementing Dynamics 365 Field Service, customers could be invoiced for work orders on the same day. For the composite organization, eliminating standard time-to-invoice delays resulted in $2.8 million in savings on accounts receivable interest.

Avoided travel time

One key challenge interviewees shared was that field technicians could lose significant time due to traffic delays or inefficient job routing, which required them to go out of their way to get to customer sites. With Dynamics 365 Field Service, dispatchers could ensure that planned routes were the most efficient and economical and that technicians’ routes were updated constantly to avoid potential slowdowns from traffic or road construction. The availability of mixed reality apps like Dynamics 365 Remote Assist and Dynamics 365 Guides also meant that field technicians could get assistance without subject matter experts needing to be on-site.

By using routing algorithms and traffic updates provided by Dynamics 365 Field Service, the composite organization can create more efficient schedules for technicians and save $2.1 million over three years.

Improved dispatcher productivity

Service dispatchers often relied on highly manual processes to assign field technicians to jobs. Any change in staffing or scheduling increased inefficiency, especially since schedules were spread across whiteboards, spreadsheets, and calendar apps, where mistakes and accidental deletions were easy to make. Dynamics 365 Field Service enables service organizations to automate scheduling and rescheduling for customer service calls. It also helps service managers match the best service technician to a work order based on time or expertise. One project manager interviewed for the study stated that having everything in one place gave schedulers better visibility, which helped them understand job progress and seamlessly include everyone in the workflow.

Overall, the composite organization saw a 40% improvement in dispatcher productivity as well as cost savings of $1.6 million.

Enhanced management productivity

Some interviewees reported that field service managers spent a lot of time resolving scheduling issues, tracking missing parts inventory, and following up on incomplete jobs. With the ability to automate more processes in Dynamics 365 Field Service, those field service managers found they had more time to focus on strategic tasks that help their teams improve in other ways. Because reporting provided managers with information they didn’t have access to before, they were able to get a clearer view into technician productivity, work order status, parts inventory, and other metrics that helped them discover and address gaps so they could meet monthly targets. Service managers also had greater visibility into areas where field technicians needed more training and support, so they could improve team performance overall.

Service managers enhanced management productivity by 100 hours per year.

Retired legacy solutions

In the past, participant organizations used various combinations of email, calendar and scheduling apps, spreadsheets, or third-party field service tools to manage their field service efforts. Implementing Dynamics 365 Field Service, which integrates with Microsoft 365 apps such as Outlook and Microsoft Teams, helped to reduce the licensing, administration, and maintenance costs of running separate applications to support field service teams.

Other benefits

Beyond the quantified benefits detailed above, the organizations participating in the TEI study also experienced other benefits, including:

- Improved customer experience through increased efficiency and more accurate updates about service calls.

- Enhanced employee experience by enabling field technicians to use their mobile phones to complete most of their work tasks.

- Improved service delivery speed and quality through the use of Copilot in Dynamics 365 Field Service.

- Access to mixed reality applications such as Dynamics 365 Remote Assist and Microsoft Dynamics 365 Guides to help support field technicians on service calls.

Next steps

As we have seen here, Forrester’s study uncovered seven quantifiable impact areas along with several other significant unquantified benefits. Together, the quantified impact areas resulted in benefits of $42.65 million over three years for the composite organization. The total investment required was $9.5 million, leading to a 346% ROI with a payback period of less than six months.

For a closer look at the results and to understand how Dynamics 365 Field Service can help your service organization, you can download and read the full study: The Total Economic Impact™ of Microsoft Dynamics 365 Field Service.

Source: Forrester, “The Total Economic Impact™ of Microsoft Dynamics 365 Field Service,” Forrester Research, Inc., December 2023.

The post Forrester study finds 346% ROI when modernizing service operations with Dynamics 365 Field Service appeared first on Microsoft Dynamics 365 Blog.
