by Contributed | Dec 30, 2021 | Technology

This article is contributed. See the original author and article here.

As we approach the new year, we often find ourselves reflecting on what we accomplished in the year that is coming to a close. Global pandemic aside, 2021 was a busy year for Azure Databricks. The platform expanded into new core use cases while adding capabilities and features to support mature, proven use cases and patterns. Below you’ll find some of the highlights from 2021.

Lakehouse Platform

In 2021, the Lakehouse architecture really picked up steam. Early in the year, Lakehouse was mentioned in Gartner’s Hype Cycle for Data Management, validating it as an architecture pattern that many organizations are evaluating and using. That recognition also led many data and analytics companies and services to adopt the pattern and provide guidance around it.

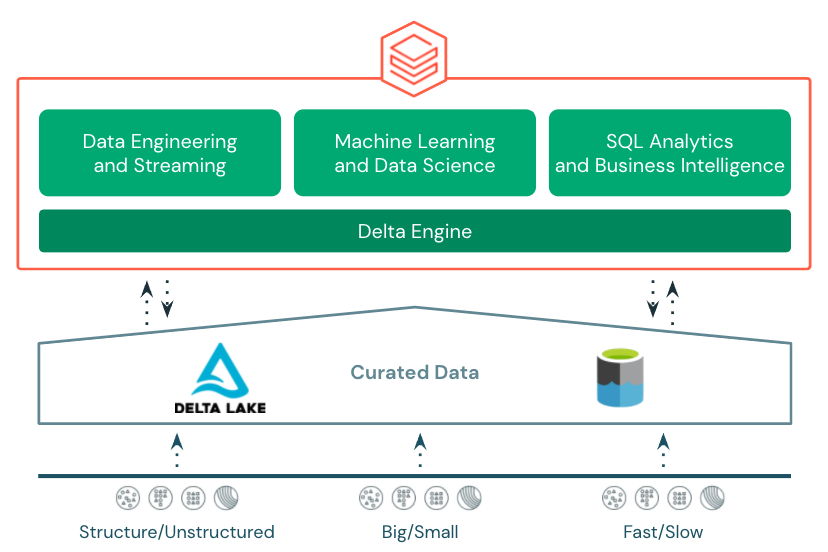

The Azure Databricks Lakehouse Platform provides end-to-end, best-in-class support for data engineering, stream processing, data science and machine learning, and SQL and business intelligence, all on top of transactional, open storage in Azure Data Lake Storage.

The foundation for the Azure Databricks Lakehouse is Delta Lake. Delta Lake is an open storage format that brings reliability to curated data in the data lake with ACID transactions, data versioning, time travel, and more. 2021 saw a slew of new features introduced to Delta Lake and to the Azure Databricks integration with Delta Lake.

Photon

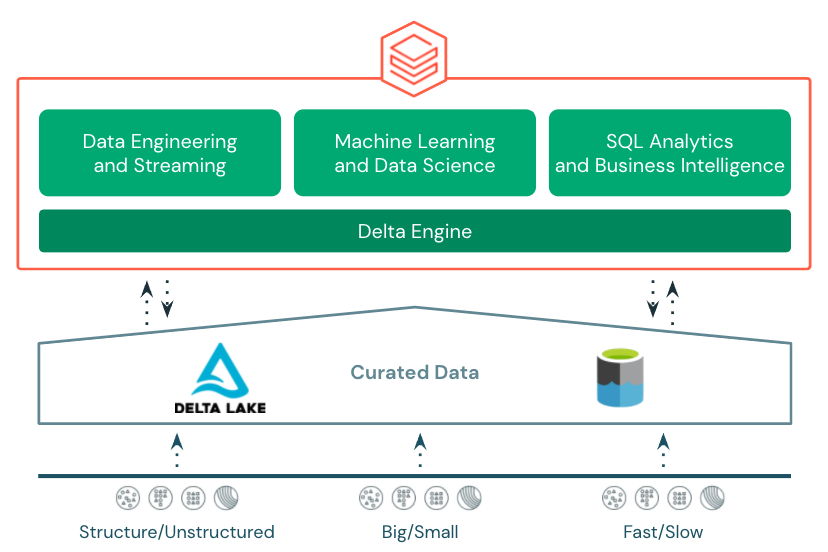

Also in 2021, Photon was made available in Azure Databricks. Photon is the native vectorized query engine on Azure Databricks, written to be directly compatible with Apache Spark APIs so it works with your existing code.

It’s also the same engine that was used to set an official data warehousing performance record. Photon is the default engine for Databricks SQL and is also available as a runtime option on clusters in Azure Databricks. Try it out to see how it improves performance on your existing workloads.

New User Experiences

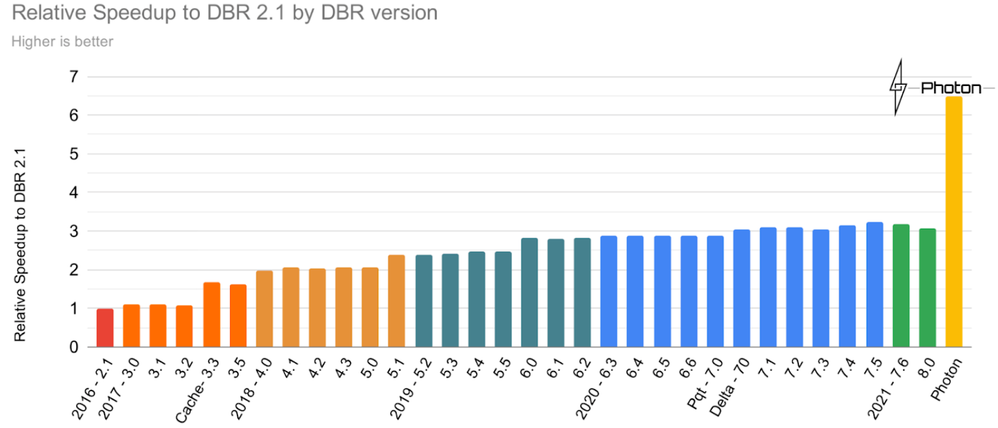

2021 also brought new user experiences to Azure Databricks. Previously, data engineers, data scientists, and data analysts all shared the same notebook-based experience in the Azure Databricks workspace. In 2021, two new experiences were added.

Databricks Machine Learning was added to cater more to the needs of data scientists and ML engineers with easy access to notebooks, experiments, the feature store, and the MLflow model registry. Databricks SQL was added to cater more to the needs of business analysts, SQL analysts, and database admins with a familiar SQL-editor interface, query catalog, dashboards, access to query history, and other admin tools. An important characteristic of the three distinct user experiences is that all of them share a common metastore with database, table, and view definitions, consistent data security, and consistent programming languages and APIs to Delta Engine.

Databricks SQL

One of the biggest additions to Azure Databricks in 2021 was Databricks SQL. Databricks SQL was announced earlier in the year during the Data and AI Summit and went GA in Azure Databricks in December.

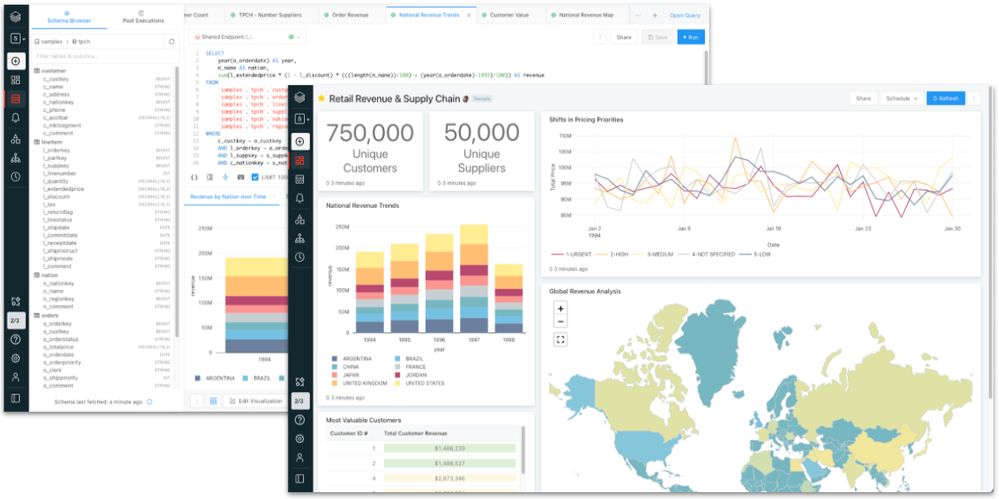

Databricks SQL provides SQL users and analysts with a familiar user experience and best-in-class performance for querying data stored in Azure Data Lake Storage. Users can query tables and views in the SQL editor, build basic visualizations, bring those visualizations together in dashboards, schedule their queries and dashboards to refresh, and even create alerts based on query results.

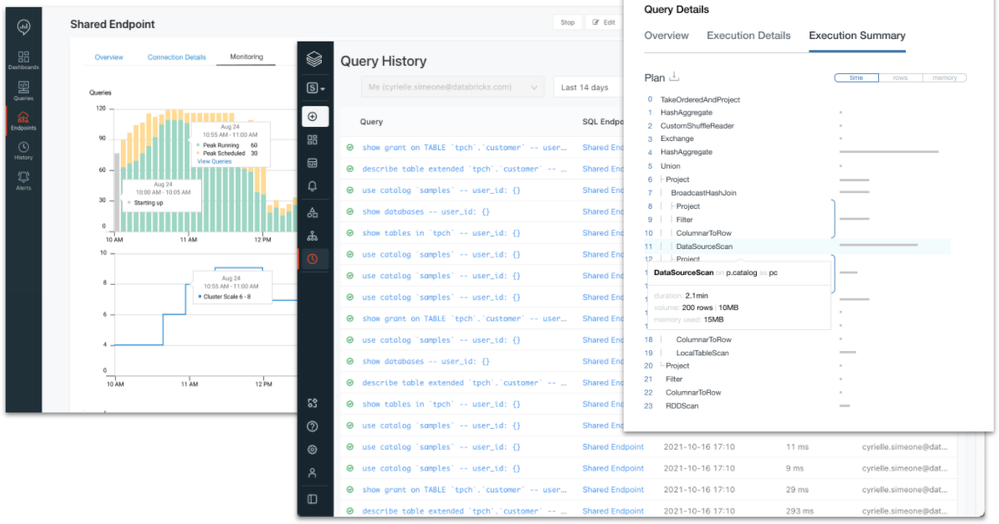

Databricks SQL also provides SQL and database admins with the tools and controls necessary to manage the environment and keep it secure. Administrators can monitor SQL endpoint usage, review query history, look at query plans, and control data access down to row and column level with familiar GRANT/DENY ACLs.

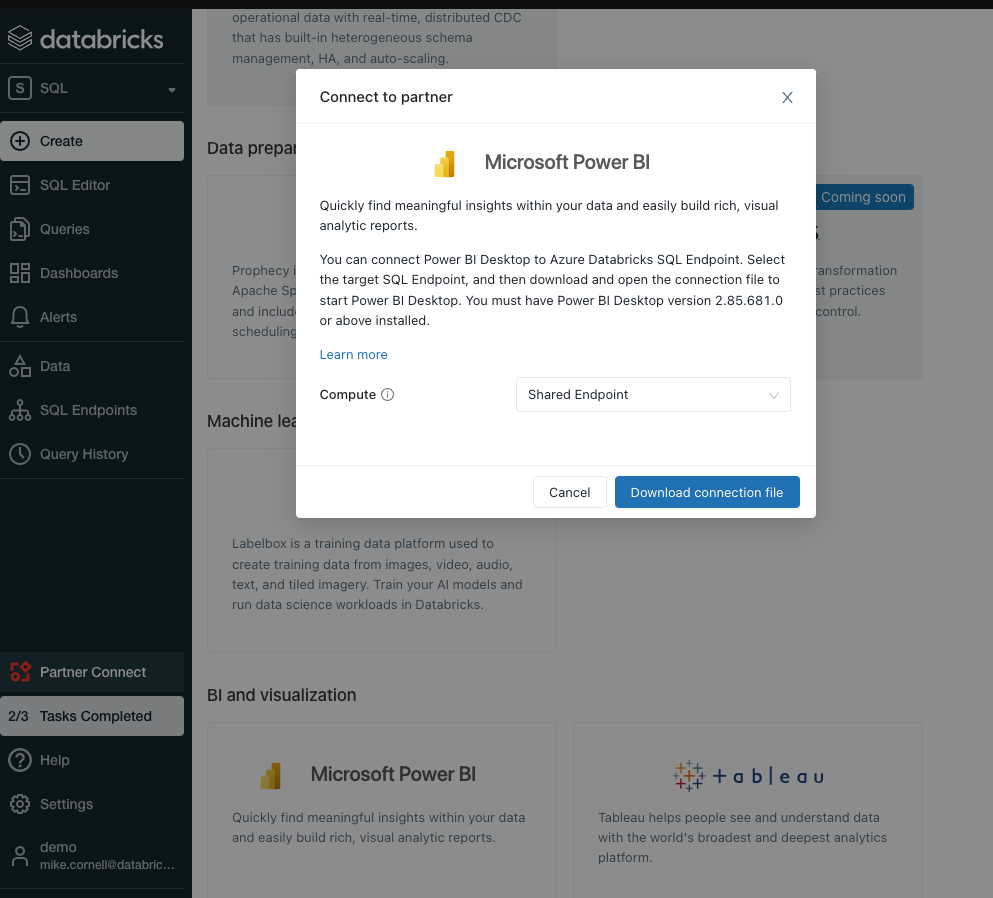

Along with Databricks SQL came stronger and easier integration with Power BI. Over the course of 2021, the Power BI connector for Azure Databricks went GA and got several major performance improvements including Cloud Fetch for faster retrieval of larger datasets into Power BI. With the inclusion of Power BI in the Azure Databricks Partner Connect portal, users can now connect their Databricks SQL endpoint to Power BI with just a few clicks.

Data Engineering

2021 also saw several new features and enhancements to help make data engineers more efficient.

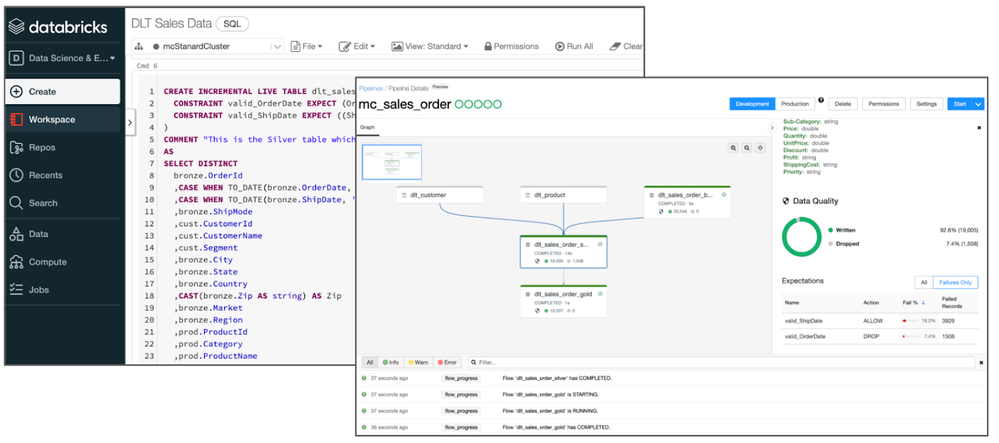

One of those is Delta Live Tables. Delta Live Tables provides a framework for building data processing pipelines. Users define transformations and data quality rules, and Delta Live Tables manages task orchestration, dependencies, monitoring, and error handling. Transformations and data quality rules can be defined using basic declarative SQL statements. Delta Live Tables can also be combined with the Databricks Auto Loader to provide simple, consistent incremental processing of incoming data. The Auto Loader also saw several enhancements during the year.

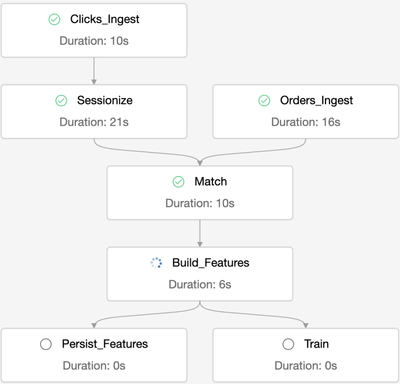
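To make the Delta Live Tables idea described above more concrete, here is a minimal, pure-Python toy of a declarative pipeline. It is not the Delta Live Tables API: `ToyPipeline`, its `table` decorator, and the `expect` argument are hypothetical names invented for this sketch, and the quality rules are Python lambdas rather than SQL. It only illustrates the division of labor: you declare transformations and rules, and the framework works out the execution order and applies the rules.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


class ToyPipeline:
    """Toy stand-in for a declarative pipeline framework (NOT the DLT API)."""

    def __init__(self):
        self.tables = {}  # name -> (function, upstream names, quality rules)

    def table(self, name, depends_on=(), expect=None):
        """Register a transformation, its upstream tables, and row-level rules."""
        def register(fn):
            self.tables[name] = (fn, tuple(depends_on), dict(expect or {}))
            return fn
        return register

    def run(self):
        """Run tables in dependency order and drop rows that fail any rule."""
        graph = {name: set(spec[1]) for name, spec in self.tables.items()}
        results, dropped = {}, {}
        for name in TopologicalSorter(graph).static_order():
            fn, upstream, rules = self.tables[name]
            rows = fn(*(results[u] for u in upstream))
            kept = [r for r in rows if all(rule(r) for rule in rules.values())]
            dropped[name] = len(rows) - len(kept)
            results[name] = kept
        return results, dropped


pipeline = ToyPipeline()


@pipeline.table("raw_orders")
def raw_orders():
    # Hypothetical sample data standing in for incrementally loaded files.
    return [
        {"order_id": 1, "amount": 20.0},
        {"order_id": None, "amount": 5.0},
        {"order_id": 3, "amount": -1.0},
    ]


@pipeline.table(
    "clean_orders",
    depends_on=["raw_orders"],
    expect={
        "valid_id": lambda r: r["order_id"] is not None,
        "positive_amount": lambda r: r["amount"] > 0,
    },
)
def clean_orders(raw):
    return list(raw)


@pipeline.table("order_total", depends_on=["clean_orders"])
def order_total(clean):
    return [{"total": sum(r["amount"] for r in clean)}]


results, dropped = pipeline.run()
print(results["clean_orders"], dropped["clean_orders"], results["order_total"])
```

The caller never states the run order. It falls out of the declared dependencies, and the count of dropped rows stands in for the data quality monitoring a real framework would report.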

Another new capability that was added in 2021 is multi-task job orchestration. Prior to this capability, Databricks jobs could only reference one code artifact (e.g., a notebook) per job. This meant that an external job orchestration tool was needed to string together multiple notebooks and manage dependencies. Multi-task job orchestration allows multiple notebooks and their dependencies to be orchestrated and managed from a single job. It also enables some additional future capabilities, like the ability to reuse a jobs cluster across multiple tasks and even calculate a single DAG across tasks.
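The dependency handling in a multi-task job can be sketched in plain Python. The task list below is hypothetical, and its field names (`task_key`, `notebook_path`, `depends_on`) are illustrative rather than the exact Jobs API schema. The sketch groups tasks into "waves" that could run in parallel once their upstream tasks have finished, which is the kind of ordering an orchestrator derives from the task DAG.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical multi-task job: four notebooks with explicit dependencies.
tasks = [
    {"task_key": "ingest_orders", "notebook_path": "/Pipelines/ingest_orders",
     "depends_on": []},
    {"task_key": "ingest_customers", "notebook_path": "/Pipelines/ingest_customers",
     "depends_on": []},
    {"task_key": "join_and_clean", "notebook_path": "/Pipelines/join_and_clean",
     "depends_on": ["ingest_orders", "ingest_customers"]},
    {"task_key": "publish_report", "notebook_path": "/Pipelines/publish_report",
     "depends_on": ["join_and_clean"]},
]


def execution_waves(tasks):
    """Group tasks into waves; every task in a wave has all dependencies met."""
    sorter = TopologicalSorter(
        {t["task_key"]: set(t["depends_on"]) for t in tasks}
    )
    sorter.prepare()  # raises graphlib.CycleError if the dependencies form a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for stable output
        waves.append(ready)
        sorter.done(*ready)
    return waves


waves = execution_waves(tasks)
for number, wave in enumerate(waves, start=1):
    print(f"wave {number}: {', '.join(wave)}")
```

Here the two ingest notebooks land in the first wave, followed by the join and then the report, all without an external orchestration tool describing the order.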

Databricks Machine Learning

Finally, 2021 brought some notable new features to an already industry-leading data science and machine learning platform in Azure Databricks.

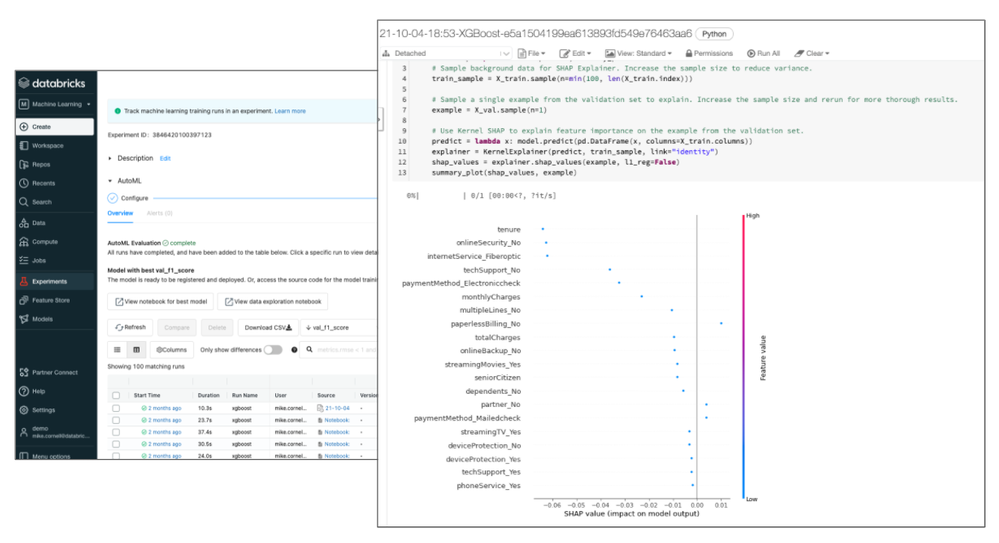

One of those features, announced during the Data and AI Summit, is Databricks AutoML. Databricks AutoML takes an open, glass-box approach to applying machine learning to a selected dataset. It preps the dataset, trains models, records the hyperparameters, metrics, and models using MLflow experiments, and even generates a Python notebook with the source code for each trial run (including feature importance!). This allows data scientists to easily build on top of the models and code generated by Databricks AutoML. Databricks AutoML automatically distributes trial runs across a selected cluster so that trials run in parallel.

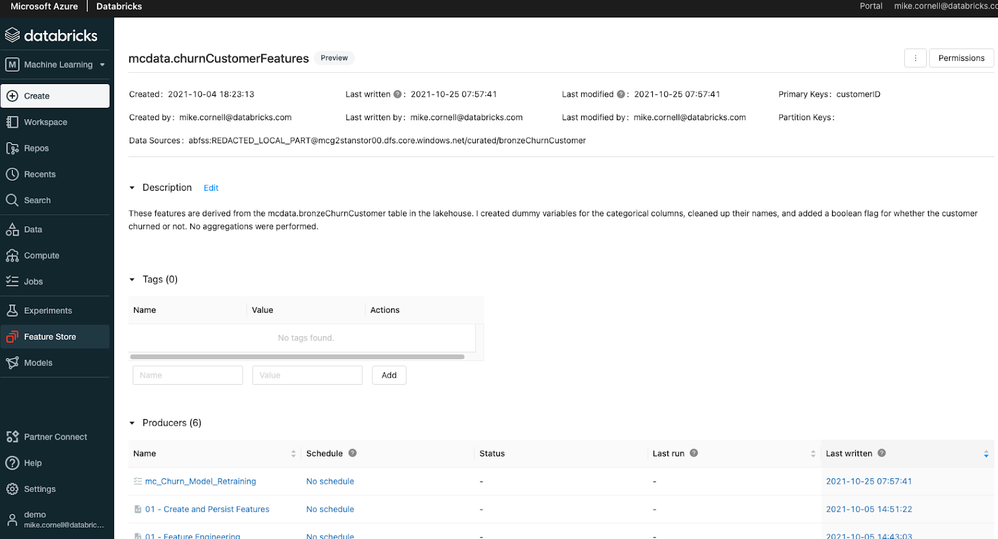

Another feature introduced to Databricks Machine Learning in Azure Databricks was the Databricks Feature Store. The Databricks Feature Store is built on top of Delta Lake and is stored in Azure storage, giving it all the benefits of Delta Lake such as an open format, built-in versioning, time travel, and built-in lineage. When used in an MLflow model, the Databricks Feature Store injects the feature information and feature lookup code right into the MLflow model artifact. This takes the burden of wiring up feature lookups off the data scientist and MLOps engineer. The Databricks Feature Store even includes a sync to online storage such as Azure Database for MySQL for low-latency feature lookups during real-time inference.

Both Databricks AutoML and the Databricks Feature Store are available in the Databricks Machine Learning experience in the Azure Databricks workspace.

More to Come in 2022

While 2021 was a busy year for Azure Databricks, there are already some highly anticipated features and capabilities expected in 2022. Those include:

- The Databricks Unity Catalog will make it easier to manage and discover databases, tables, security, lineage, and other artifacts across multiple Azure Databricks workspaces.

- Managed Delta Sharing will allow for secure sharing of Delta Lake datasets stored in Azure Data Lake Storage with internal and external consumers, all through an open, vendor- and tool-agnostic standard (already available in Power BI!).

- Databricks Serverless SQL will allow for nearly instant SQL compute startup with minimal management, likely resulting in lower costs for BI and SQL workloads.

- New low-code/no-code capabilities will enable and empower both data scientists and citizen data scientists to explore, visualize, and even prepare data with just a few clicks.

Get Started

Ready to dive in and get hands-on with these new features? Register for a free trial, attend this 3-part training series, and join an Azure Databricks Quickstart Lab where you can get your questions answered by Databricks and Microsoft experts.

by Contributed | Dec 29, 2021 | Technology


The Azure Data Explorer team is constantly focused on reducing cost of goods sold (COGS) and making sure users pay only for the value they are getting.

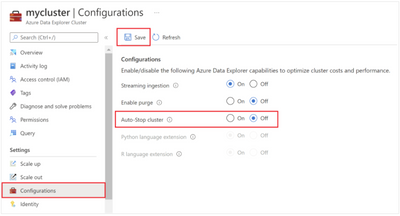

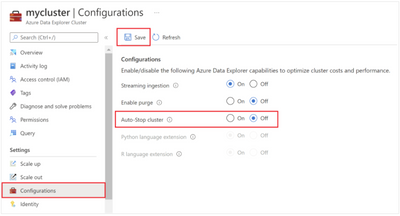

As part of this initiative, we’re now adding a new automatic capability to stop unused clusters.

If you create a cluster and never ingest data into it, or if you ingest data but then run no queries and ingest no new data for days, we will automatically stop that cluster.

Stopping the cluster reduces cost significantly because it releases the compute resources, which make up the bulk of the overall cluster cost.

Once a cluster is stopped, you will need to actively start it when you need it again. It will not start automatically when a new query is sent or an ingest operation is performed.

A stopped cluster does not lose any of the already ingested data, since the storage resources are not released. This means that once you start the cluster, it will be ready for use in minutes with all databases, tables, and functions fully operational.
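The inactivity rule described above can be sketched as a small decision function. This is an illustration of the idea only, not the service's implementation. `ClusterActivity`, `should_auto_stop`, and the `idle_threshold` parameter are hypothetical, and the threshold used in the example is a placeholder because the text above says only "days". Treating the creation time as activity, so that a brand-new cluster is not stopped immediately, is also an assumption.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class ClusterActivity:
    """Hypothetical activity record for one cluster."""
    created_at: datetime
    last_ingestion: Optional[datetime] = None  # None means no data ever ingested
    last_query: Optional[datetime] = None      # None means no query ever run


def should_auto_stop(activity, now, idle_threshold):
    """Return True when nothing has happened on the cluster for idle_threshold."""
    timestamps = (activity.created_at, activity.last_ingestion, activity.last_query)
    last_active = max(t for t in timestamps if t is not None)
    return now - last_active >= idle_threshold


now = datetime(2021, 12, 29)
threshold = timedelta(days=5)  # placeholder value, not a documented service setting

never_used = ClusterActivity(created_at=datetime(2021, 12, 1))
recently_queried = ClusterActivity(
    created_at=datetime(2021, 12, 1),
    last_ingestion=datetime(2021, 12, 5),
    last_query=datetime(2021, 12, 28),
)

print(should_auto_stop(never_used, now, threshold))
print(should_auto_stop(recently_queried, now, threshold))
```

The first cluster was created and then left untouched, so it qualifies for a stop. The second was queried the day before, so it keeps running.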

It is possible to manage cluster auto-stop either using SDKs or using the Azure Portal. For more details on automatic stop of inactive Azure Data Explorer clusters, read this – https://docs.microsoft.com/en-us/azure/data-explorer/auto-stop-clusters
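As a rough sketch of what managing the setting programmatically might look like, the helper below builds the URL and JSON body for an Azure Resource Manager PATCH on the cluster resource. It assumes the cluster exposes a boolean `enableAutoStop` property, so verify that against the documentation linked above. The function name, subscription ID, resource group, and cluster name are hypothetical, and the API version is left as a placeholder to be filled in from the docs. No request is sent.

```python
def auto_stop_patch_request(subscription_id, resource_group, cluster_name,
                            enabled, api_version):
    """Build the URL and JSON body for a PATCH that toggles cluster auto-stop.

    Assumption: the Microsoft.Kusto cluster resource has a boolean
    'enableAutoStop' property; check the linked documentation before use.
    """
    url = (
        "https://management.azure.com"
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Kusto/clusters/{cluster_name}"
        f"?api-version={api_version}"
    )
    body = {"properties": {"enableAutoStop": bool(enabled)}}
    return url, body


# Hypothetical identifiers; replace with real values and a supported API version.
url, body = auto_stop_patch_request(
    subscription_id="00000000-0000-0000-0000-000000000000",
    resource_group="example-rg",
    cluster_name="examplecluster",
    enabled=False,
    api_version="<api-version>",
)
print(url)
print(body)
```

Sending the request would additionally require an authenticated HTTP client or one of the Azure SDKs, which is omitted here.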

This new feature is another piece in the cost reduction effort the Azure Data Explorer team is making.

You’re welcome to add more proposals and ideas and vote for them here – https://aka.ms/adx.ideas

ADX dashboards team
