How to set up a File System Storage utilization alert rule for web apps


As the following document indicates, File System Usage is a new metric that is being rolled out globally; no data is expected unless your app is hosted in an App Service Environment.

https://docs.microsoft.com/en-us/Azure/app-service/web-sites-monitor#understand-metrics

Therefore, you cannot currently use this metric for an alert rule, even though it appears in the alert rule settings UI.

As a workaround, we can create a WebJob that calls the following REST API to get the App Service plan's "File System Storage" utilization and then sends an email when the usage exceeds the limit.

https://management.azure.com/subscriptions/{sub}/resourceGroups/{rg}/providers/Microsoft.Web/serverfarms/{app service plan name}/usages?api-version=2019-08-01
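The placeholders in the URL above have to be filled in before the call. As a minimal sketch, a small helper can assemble the URL from its three parts; the class name `UsageUrl`, the method `Build`, and the sample values are illustrative assumptions, not part of the original demo.

```csharp
using System;

public static class UsageUrl
{
    // Hypothetical helper (not from the original article): fills in the
    // {sub}, {rg} and plan-name placeholders of the usages endpoint.
    public static string Build(string subscriptionId, string resourceGroup, string planName)
    {
        // EscapeDataString guards against spaces or other reserved
        // characters in the resource names.
        return "https://management.azure.com"
            + "/subscriptions/" + Uri.EscapeDataString(subscriptionId)
            + "/resourceGroups/" + Uri.EscapeDataString(resourceGroup)
            + "/providers/Microsoft.Web/serverfarms/" + Uri.EscapeDataString(planName)
            + "/usages?api-version=2019-08-01";
    }

    public static void Main()
    {
        // Placeholder values for illustration only.
        Console.WriteLine(Build("00000000-0000-0000-0000-000000000000", "my-rg", "my-plan"));
    }
}
```

Building the URL in one place also avoids stray spaces in the string, which would make the request fail.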

Here are my demo steps for your reference.

1. To call the Resource Manager REST API, I first created a service principal that can access resources.

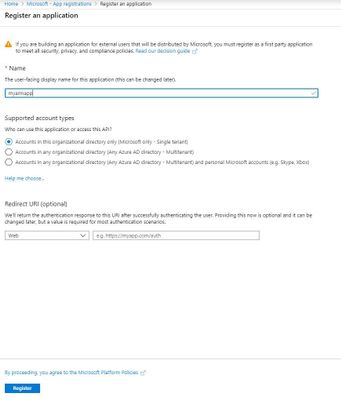

Sign in to your Azure account through the Azure portal -> select Azure Active Directory -> select App registrations -> select New registration.

To access resources in the subscription, assign the Contributor role to the application.

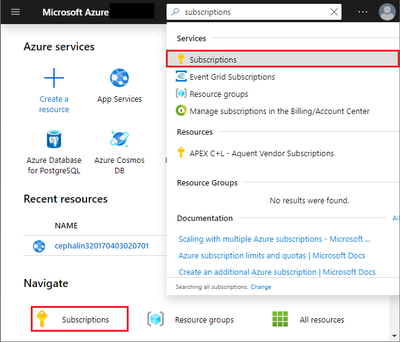

Select the subscription that includes the App Service plan you want to monitor.

Select Access control (IAM) -> Add role assignment, and add the Contributor role to the application.

Get values for signing in.

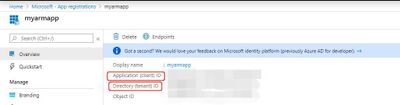

Select Azure Active Directory -> App registrations, then select your application.

Copy the Directory (tenant) ID and the Application (client) ID; you will use them later.

In this app registration, create a new application secret: select Certificates & secrets -> Client secrets -> New client secret.

Also copy this secret for later use.

For more details on the steps above, refer to the Azure documentation on using the portal to create an Azure AD application and service principal.

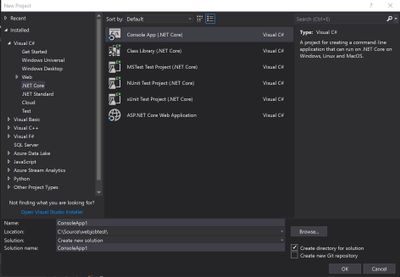

2. Create a new C# .NET Core console app in Visual Studio for the WebJob development.

Install the latest stable 3.x version of the Microsoft.Azure.WebJobs.Extensions NuGet package, which includes Microsoft.Azure.WebJobs.

Here’s the Package Manager Console command for version 3.0.2:

Install-Package Microsoft.Azure.WebJobs.Extensions -version 3.0.2

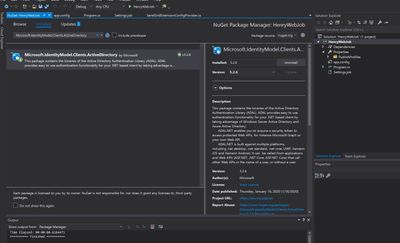

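Install the Active Directory Authentication Library package, Microsoft.IdentityModel.Clients.ActiveDirectory, in Visual Studio.

The package is available in the NuGet Gallery. The code below also uses the SendGrid and Newtonsoft.Json packages.

Get an access token for the app in the C# program.

In Program.cs, add a using directive for the Active Directory identity model: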

using Microsoft.IdentityModel.Clients.ActiveDirectory;

Then add a method that gets an access token using the tenant ID, application ID, and client secret copied earlier.

    private static async Task<string> GetAccessToken(string tenantid, string clientid, string clientsecret)
    {
        string authContextURL = "https://login.microsoftonline.com/" + tenantid;
        var authenticationContext = new AuthenticationContext(authContextURL);
        var credential = new ClientCredential(clientid, clientsecret);
        var result = await authenticationContext.AcquireTokenAsync("https://management.azure.com/", credential);
        if (result == null)
        {
            throw new InvalidOperationException("Failed to obtain the JWT token");
        }
        return result.AccessToken;
    }

Now everything is set to make the REST calls defined in the Azure Resource Manager REST API.

We can add a method that calls the GET REST API above for the App Service plan's "File System Storage" utilization, using the token obtained by the previous method, and checks whether the current usage exceeds the limit.

    private static bool GetUsage(string URI, String token)
    {
        Uri uri = new Uri(URI);

        // Create the request
        var httpWebRequest = (HttpWebRequest)WebRequest.Create(uri);
        httpWebRequest.Headers.Add(HttpRequestHeader.Authorization, "Bearer " + token);
        httpWebRequest.ContentType = "application/json";
        httpWebRequest.Method = "GET";

        // Get the response
        HttpWebResponse httpResponse = null;
        try
        {
            httpResponse = (HttpWebResponse)httpWebRequest.GetResponse();
        }
        catch (Exception ex)
        {
            Console.WriteLine(ex.ToString());
            return false;
        }

        string result = null;
        using (var streamReader = new StreamReader(httpResponse.GetResponseStream()))
        {
            result = streamReader.ReadToEnd();
        }

        // value[10] is where the File System storage entry appeared in this demo's response;
        // check the response for your own plan and adjust the index if the order differs.
        JObject usages = JObject.Parse(result);
        Int64 currentvalue = Convert.ToInt64(usages.SelectToken("value[10].currentValue").ToString());
        Int64 limit = Convert.ToInt64(usages.SelectToken("value[10].limit").ToString());

        // You can set your own condition as required
        return currentvalue > limit;
    }
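The fixed index `value[10]` depends on the order of the entries in the response, and the condition `currentvalue > limit` only becomes true once storage is already exhausted. A hedged sketch of an alternative follows: look the entry up by name and alert at a percentage of the limit. The class `UsageCheck`, its methods, the `name.value` field, the entry name `FileSystemStorage`, and the sample JSON are assumptions for illustration; verify them against the actual response for your plan. The sketch uses `System.Text.Json` rather than Newtonsoft.Json only to stay free of package references.

```csharp
using System;
using System.Text.Json;

public static class UsageCheck
{
    // Finds the usage entry whose name.value equals usageName.
    // Assumption: every entry carries name.value, currentValue and limit.
    public static bool TryFind(string json, string usageName, out long currentValue, out long limit)
    {
        currentValue = 0;
        limit = 0;
        using (JsonDocument doc = JsonDocument.Parse(json))
        {
            foreach (JsonElement entry in doc.RootElement.GetProperty("value").EnumerateArray())
            {
                if (entry.TryGetProperty("name", out JsonElement name)
                    && name.TryGetProperty("value", out JsonElement nameValue)
                    && nameValue.GetString() == usageName)
                {
                    currentValue = entry.GetProperty("currentValue").GetInt64();
                    limit = entry.GetProperty("limit").GetInt64();
                    return true;
                }
            }
        }
        return false;
    }

    // True when usage has reached the given percentage of the limit.
    public static bool IsOverThreshold(long currentValue, long limit, double percent)
    {
        return limit > 0 && currentValue * 100.0 / limit >= percent;
    }

    public static void Main()
    {
        // Made-up sample payload, shaped like the fields the article reads.
        string sample = @"{ ""value"": [
            { ""name"": { ""value"": ""CPU"" }, ""currentValue"": 5, ""limit"": 100 },
            { ""name"": { ""value"": ""FileSystemStorage"" }, ""currentValue"": 900, ""limit"": 1000 }
        ] }";

        if (TryFind(sample, "FileSystemStorage", out long current, out long limit))
        {
            Console.WriteLine(current + " of " + limit + ", alert: " + IsOverThreshold(current, limit, 80));
        }
    }
}
```

With a percentage threshold, the email goes out while there is still room to clean up files or scale the plan.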

Then, in the Execute method, send an email if the usage exceeds the limit. In this method I used SendGrid to implement the email feature.

For more details on SendGrid configuration, refer to the following link:

https://docs.microsoft.com/en-us/azure/sendgrid-dotnet-how-to-send-email

    public static void Execute()
    {
        string tenantId = "yourtenantid";
        string clientId = "yourclientid";
        string clientSecret = "yourclientsecret";
        string restapiurl = "https://management.azure.com/subscriptions/{sub}/resourceGroups/{rg}/providers/Microsoft.Web/serverfarms/{appserviceplan}/usages?api-version=2019-08-01";

        var token = GetAccessToken(tenantId, clientId, clientSecret).Result;

        if (GetUsage(restapiurl, token))
        {
            var apiKey = ConfigurationManager.AppSettings["AzureWebJobsSendGridApiKey"].ToString();
            var client = new SendGridClient(apiKey);
            var msg = new SendGridMessage()
            {
                From = new EmailAddress("loshen@microsoft.com", "DX Team"),
                Subject = "henry",
            };
            msg.AddTo(new EmailAddress("loshen@microsoft.com", "Test User"));
            msg.AddContent("text/html", "<html><body>There is an alert for file system usage.</body></html>");
            var response = client.SendEmailAsync(msg).Result;
        }
    }
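The Execute method above keeps the tenant ID, client ID, and client secret in source code. Since App Service exposes app settings to the process as environment variables, one possible variation is to read them at run time. The sketch below assumes hypothetical setting names (`DEMO_TENANT_ID` and so on) and a hypothetical helper `JobSettings.Require`; neither comes from the original demo.

```csharp
using System;

public static class JobSettings
{
    // Reads a required setting from the environment and fails fast
    // with a clear message when it is missing or empty.
    public static string Require(string name)
    {
        string value = Environment.GetEnvironmentVariable(name);
        if (string.IsNullOrWhiteSpace(value))
        {
            throw new InvalidOperationException("Missing app setting: " + name);
        }
        // Trim guards against stray spaces pasted in with the value.
        return value.Trim();
    }

    public static void Main()
    {
        // Set here only so the sketch runs locally; in App Service the
        // value would come from the app's configuration instead.
        Environment.SetEnvironmentVariable("DEMO_TENANT_ID", " yourtenantid ");
        Console.WriteLine(Require("DEMO_TENANT_ID"));
    }
}
```

In Execute, the hard-coded strings could then be replaced with calls such as `JobSettings.Require("DEMO_TENANT_ID")`.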

The complete code for Program.cs looks like this:

    using System;
    using System.IO;
    using System.Threading.Tasks;
    using SendGrid;
    using SendGrid.Helpers.Mail;
    using System.Net;
    using System.Configuration;
    using Microsoft.IdentityModel.Clients.ActiveDirectory;
    using Newtonsoft.Json;
    using Newtonsoft.Json.Linq;

    namespace HenryWebJob
    {
        class Program
        {
            static void Main()
            {
                Execute();
            }

            public static void Execute()
            {
                string tenantId = "yourtenantid";
                string clientId = "yourclientid";
                string clientSecret = "yourclientsecret";
                string restapiurl = "https://management.azure.com/subscriptions/{sub}/resourceGroups/{rg}/providers/Microsoft.Web/serverfarms/{appserviceplan}/usages?api-version=2019-08-01";

                var token = GetAccessToken(tenantId, clientId, clientSecret).Result;

                if (GetUsage(restapiurl, token))
                {
                    var apiKey = ConfigurationManager.AppSettings["AzureWebJobsSendGridApiKey"].ToString();
                    var client = new SendGridClient(apiKey);
                    var msg = new SendGridMessage()
                    {
                        From = new EmailAddress("loshen@microsoft.com", "DX Team"),
                        Subject = "henry",
                    };
                    msg.AddTo(new EmailAddress("loshen@microsoft.com", "Test User"));
                    msg.AddContent("text/html", "<html><body>There is an alert for file system usage.</body></html>");
                    var response = client.SendEmailAsync(msg).Result;
                }
            }

            private static async Task<string> GetAccessToken(string tenantid, string clientid, string clientsecret)
            {
                string authContextURL = "https://login.microsoftonline.com/" + tenantid;
                var authenticationContext = new AuthenticationContext(authContextURL);
                var credential = new ClientCredential(clientid, clientsecret);
                var result = await authenticationContext.AcquireTokenAsync("https://management.azure.com/", credential);
                if (result == null)
                {
                    throw new InvalidOperationException("Failed to obtain the JWT token");
                }
                return result.AccessToken;
            }

            private static bool GetUsage(string URI, String token)
            {
                Uri uri = new Uri(URI);

                // Create the request
                var httpWebRequest = (HttpWebRequest)WebRequest.Create(uri);
                httpWebRequest.Headers.Add(HttpRequestHeader.Authorization, "Bearer " + token);
                httpWebRequest.ContentType = "application/json";
                httpWebRequest.Method = "GET";

                // Get the response
                HttpWebResponse httpResponse = null;
                try
                {
                    httpResponse = (HttpWebResponse)httpWebRequest.GetResponse();
                }
                catch (Exception ex)
                {
                    Console.WriteLine(ex.ToString());
                    return false;
                }

                string result = null;
                using (var streamReader = new StreamReader(httpResponse.GetResponseStream()))
                {
                    result = streamReader.ReadToEnd();
                }

                // value[10] is where the File System storage entry appeared in this demo's response;
                // check the response for your own plan and adjust the index if the order differs.
                JObject usages = JObject.Parse(result);
                Int64 currentvalue = Convert.ToInt64(usages.SelectToken("value[10].currentValue").ToString());
                Int64 limit = Convert.ToInt64(usages.SelectToken("value[10].limit").ToString());

                // You can set your own condition as required
                return currentvalue > limit;
            }
        }
    }

Then schedule the WebJob to run every 5 minutes with a settings.job file.

    {
      "schedule": "0 */5 * * * *"

      // Examples:

      // Runs every minute
      // "schedule": "0 * * * * *"

      // Runs every 15 minutes
      // "schedule": "0 */15 * * * *"

      // Runs every hour (i.e. whenever the count of minutes is 0)
      // "schedule": "0 0 * * * *"

      // Runs every hour from 9 AM to 5 PM
      // "schedule": "0 0 9-17 * * *"

      // Runs at 9:30 AM every day
      // "schedule": "0 30 9 * * *"

      // Runs at 9:30 AM every week day
      // "schedule": "0 30 9 * * 1-5"
    }
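The schedule expressions above have six space-separated fields, in the order second, minute, hour, day, month, day of week. As a small illustration only, the following sketch splits an expression into those named fields; the class `ScheduleFields` and its `Split` method are hypothetical and are not part of the WebJobs SDK.

```csharp
using System;
using System.Collections.Generic;

public static class ScheduleFields
{
    // Field order used by the six-field schedule expressions in settings.job.
    static readonly string[] Names = { "second", "minute", "hour", "day", "month", "day of week" };

    // Maps each field name to its part of the expression.
    public static Dictionary<string, string> Split(string expression)
    {
        string[] parts = expression.Split(new[] { ' ' }, StringSplitOptions.RemoveEmptyEntries);
        if (parts.Length != Names.Length)
        {
            throw new FormatException("Expected 6 space-separated fields, got " + parts.Length);
        }

        var fields = new Dictionary<string, string>();
        for (int i = 0; i < Names.Length; i++)
        {
            fields[Names[i]] = parts[i];
        }
        return fields;
    }

    public static void Main()
    {
        // "0 */5 * * * *": second 0 of every fifth minute.
        foreach (KeyValuePair<string, string> pair in Split("0 */5 * * * *"))
        {
            Console.WriteLine(pair.Key + ": " + pair.Value);
        }
    }
}
```

Reading the expression field by field makes it easier to spot a five-field expression, which omits the leading seconds field that this format expects.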

Publish the WebJob to a web app.

In Solution Explorer, right-click the project and select Publish.

Then go to the web app -> WebJobs, where you can see the WebJob running as scheduled.

For more details on WebJobs, refer to the following link:

https://docs.microsoft.com/en-us/azure/app-service/webjobs-sdk-get-started
