by Contributed | Feb 12, 2021 | Technology

This article is contributed. See the original author and article here.

Between ITOpsTalks: All Things Hybrid and the upcoming Microsoft Ignite, the news just keeps on coming. Here’s what the team is covering this week: Soft delete for Azure file shares is now on by default for new storage accounts, Create and run jobs in your Azure IoT Central application, Public Preview of Power BI embedded generation 2, Connecting Azure to the International Space Station with Hewlett Packard Enterprise and as always our Microsoft Learn Module of the week.

General availability: Soft delete for Azure file shares is now on by default for new storage accounts

Soft delete acts like a recycle bin for Azure file shares: deleted shares remain recoverable for their entire retention period, which is 7 days by default for storage accounts created after January 31st.

You will be charged for soft deleted data on the snapshot meter. If you have automated the creation of new storage accounts and the creation/deletion of new file shares within them, you must modify your scripts to explicitly disable soft delete after the creation of a new storage account. Soft delete will remain disabled by default for existing storage accounts.
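For scripted deployments, the extra step could look something like the sketch below. It only builds (and optionally runs) an Azure CLI call. The `az storage account file-service-properties update` command and its `--enable-delete-retention` flag come from the Azure Files soft delete documentation; the function names and the resource names in the usage example are hypothetical placeholders, not part of any real deployment.

```python
import subprocess
from typing import List


def disable_soft_delete_cmd(resource_group: str, account_name: str) -> List[str]:
    """Build the Azure CLI call that turns off soft delete for file shares."""
    return [
        "az", "storage", "account", "file-service-properties", "update",
        "--resource-group", resource_group,
        "--account-name", account_name,
        "--enable-delete-retention", "false",
    ]


def disable_soft_delete(resource_group: str, account_name: str,
                        dry_run: bool = True) -> List[str]:
    """Return the command; execute it only when dry_run is False.

    Executing requires an installed, logged-in Azure CLI (an assumption
    about the environment, not something this sketch checks).
    """
    cmd = disable_soft_delete_cmd(resource_group, account_name)
    if not dry_run:
        subprocess.run(cmd, check=True)
    return cmd


if __name__ == "__main__":
    # Hypothetical names; replace with the account your script just created.
    print(" ".join(disable_soft_delete("my-resource-group", "mystorageaccount")))
```

Keeping the default at `dry_run=True` lets you review the generated command before adding it to an automation pipeline.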

More information can be found here: Preventing accidental deletion of Azure file shares

Azure Edge Computing on the International Space Station

Microsoft announced its partnership with Hewlett Packard Enterprise to connect Azure directly to space using HPE’s upcoming launch of its Spaceborne Computer-2, which will deliver edge computing and artificial intelligence capabilities together for the first time on the International Space Station.

Microsoft Research and Azure Space engineering teams are currently evaluating the potential of HPE’s state-of-the-art in-space processing in conjunction with hyperscale Azure, alongside the development of advanced artificial intelligence and machine learning models to support new insights and research advancements, including:

- Weather modeling of dust storms to enable future modeling for Mars missions.

- Plant and hydroponics analysis to support food growth and life sciences in space.

- Medical imaging using an ultrasound on the ISS to support astronaut healthcare.

On February 20, HPE’s Spaceborne Computer-2 is scheduled to launch into orbit, bound for the International Space Station, bringing Azure AI and machine learning to new space missions and emphasizing the true power of hyperscale computing in support of edge scenarios.

More information can be found here: Connecting Azure to the International Space Station with Hewlett Packard Enterprise

Microsoft announces Power BI Embedded Generation 2 public preview

Microsoft has announced that the 2nd generation of Power BI Embedded, referred to as Embedded Gen 2, is available for Azure subscribers to use during the preview period. All of the Power BI Embedded Gen 1 capabilities, such as pausing and resuming the capacity, are preserved in Gen 2, and the price per SKU remains the same. However, the Gen 2 capacity resource provides the following updates and improved experience:

- Enhanced performance – Better performance on any capacity size, anytime. Operations will always perform at top speed and won’t slow down when the load on the capacity approaches the capacity limits.

- Greater scale – No limits on refresh concurrency, fewer memory restrictions and complete separation between report interaction and scheduled refreshes.

- Lower entry level for paginated reports and AI workloads – You can start with an A1 SKU and grow as you need.

- Scaling a resource instantly – From scaling a Gen 1 resource in minutes, to scaling a Gen 2 resource in seconds.

- Scaling without downtime – You can scale an Embedded Gen 2 resource without experiencing any downtime.

- Improved metrics – Coming in a few weeks to simplify monitoring and metrics-based automation. Instead of various Gen 1 metrics, there will be one CPU utilization metric in Gen 2. In addition, a built-in reporting tool will allow you to perform utilization analysis, budget planning and chargebacks.

The Power BI team have created detailed documentation to help navigate the public preview that they’ll continue to update throughout the process. You can learn much more about Power BI Embedded Gen 2 and how it works in the following docs:

Create and run a job in your Azure IoT Central application

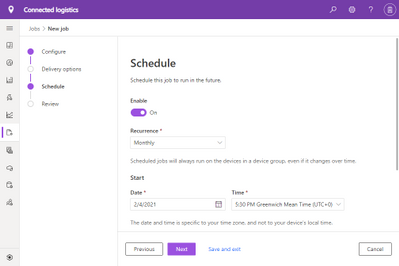

Scheduling in the updated jobs wizard now lets you configure a recurring monthly job in addition to hourly and daily recurring jobs:

Azure IoT Central can now manage your connected devices at scale through jobs. Jobs allow for bulk updates to device and cloud properties and run commands.

This article shows you how to get started with using jobs in your own application: Create and run jobs in your Azure IoT Central application

Community Events

- Microsoft Ignite – Registration is now available for the upcoming event. Stay tuned for more details as they become available.

- Azure admin jump start – Live, demo-heavy deep dives into scenarios detailing core Azure services, workloads, security, and governance.

MS Learn Module of the Week

Discover the role of Python in space exploration

The goal of this learning path is not to learn Python; the goal is to understand how Python plays a role in the innovative solutions that NASA creates. Through the lens of space discovery, this learning path could ignite a passion to persistently learn, discover, and create so that you too can one day help us all understand a little more about the world beyond our Earth.

This 3-hour learning path can be completed here: Discover the role of Python in space exploration

Let us know in the comments below if there are any news items you would like to see covered in the next show. Be sure to catch the next AzUpdate episode and join us in the live chat.

by Contributed | Feb 12, 2021 | Technology

At 4:30 PM PST on February 11, 2021, Azure Sphere customers may have been affected by an Azure Cosmos DB outage that disrupted the Azure Sphere Security Service. For more information about the Azure Cosmos DB outage, please see Azure status.

The Azure Sphere Security Service disruption was resolved at approximately 6:00 PM PST on February 11, 2021. Azure Sphere customers should no longer be affected. If you are experiencing issues related to the Azure Cosmos DB outage and the resulting Azure Sphere Security Service disruption, please contact your TSP for support.

by Contributed | Feb 11, 2021 | Technology

Azure Accelerated Networking is now available on the RDMA over InfiniBand capable and SR-IOV enabled VM sizes HB, HC, HBv2 and NDv2. Accelerated Networking enables Single Root I/O Virtualization (SR-IOV) for a VM’s Ethernet SmartNIC, resulting in enhanced throughput of 30 Gbps and lower, more consistent latencies over the Azure Ethernet network. Performance data, with guidance on optimizations to achieve higher throughput of up to 38 Gbps on some VMs, is available in this blog post (Performance impact of enabling AccelNet).

Note that this enhanced Ethernet capability is in addition to the RDMA capabilities over the InfiniBand network. Accelerated Networking over the Ethernet network will improve the performance of loading VM OS images, accessing Azure Storage resources, or communicating with other resources, including Compute VMs.

When enabling Accelerated Networking on supported Azure HPC and GPU VMs, it is important to understand the changes you will see within the VM and what those changes may mean for your workloads. Some platform changes for this capability may affect the behavior of certain MPI libraries (older versions in particular) when running jobs over InfiniBand. Specifically, the InfiniBand interface on some VMs may have a slightly different name (mlx5_1 rather than the earlier mlx5_0), and this may require tweaking the MPI command lines, especially when using the UCX interface (commonly with OpenMPI and HPC-X). This article provides details on how to address any observed issues.

Enabling Accelerated Networking

New VMs with Accelerated Networking (AN) can be created for Linux and Windows. Supported operating systems are listed in the respective links. AN can be enabled on an existing VM by deallocating the VM, enabling AN and restarting the VM (instructions).
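As a rough illustration of the deallocate, enable, restart sequence for an existing VM, the sketch below builds the three Azure CLI calls in order. The `az vm deallocate`, `az network nic update --accelerated-networking true` and `az vm start` commands come from the Azure Accelerated Networking documentation; the function name and all resource names are hypothetical, and the sketch assumes the VM has a single NIC whose name you already know.

```python
from typing import List


def enable_accelerated_networking_cmds(resource_group: str, vm_name: str,
                                       nic_name: str) -> List[List[str]]:
    """Return the Azure CLI calls, in order, that enable AN on an existing VM."""
    return [
        # 1. The VM must be stopped and deallocated before the NIC can change.
        ["az", "vm", "deallocate",
         "--resource-group", resource_group, "--name", vm_name],
        # 2. Turn on Accelerated Networking on the VM's network interface.
        ["az", "network", "nic", "update",
         "--resource-group", resource_group, "--name", nic_name,
         "--accelerated-networking", "true"],
        # 3. Start the VM again so it picks up the SR-IOV virtual function.
        ["az", "vm", "start",
         "--resource-group", resource_group, "--name", vm_name],
    ]


if __name__ == "__main__":
    # Hypothetical names, printed for review rather than executed.
    for cmd in enable_accelerated_networking_cmds("my-rg", "my-hbv2-vm", "my-hbv2-nic"):
        print(" ".join(cmd))
```

The commands are printed rather than run so that the order can be checked first; passing each list to `subprocess.run(cmd, check=True)` would execute them on a machine with a logged-in Azure CLI.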

Note that certain methods of creating/orchestrating VMs will enable AN by default. For example, if the VM type is AN-enabled, the Portal method of creation will, by default, check “Accelerated Networking”.

Some other orchestrators may also choose to enable AN by default, such as CycleCloud which is the simplest way to get started with HPC on Azure.

New network interface:

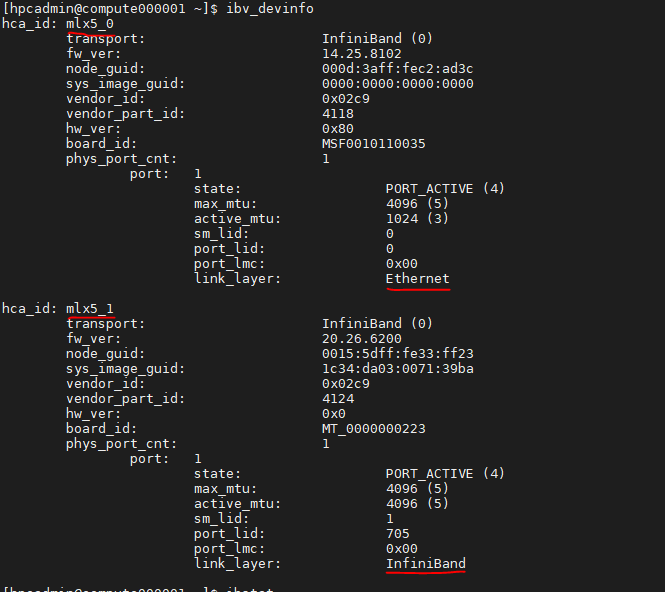

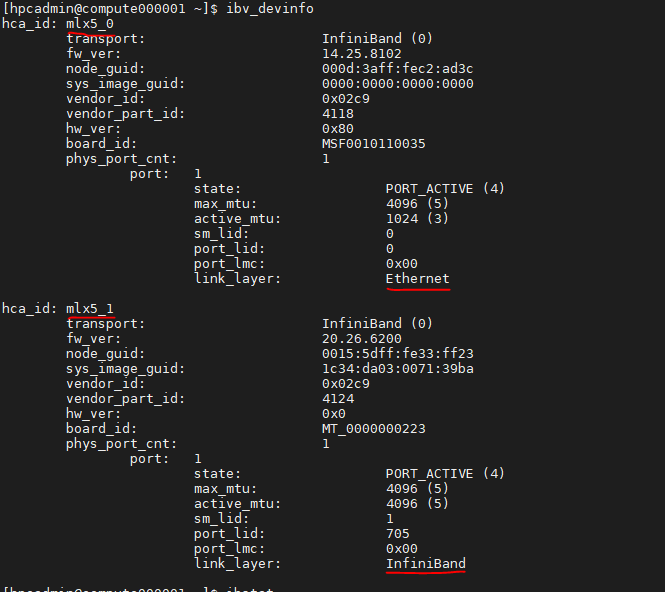

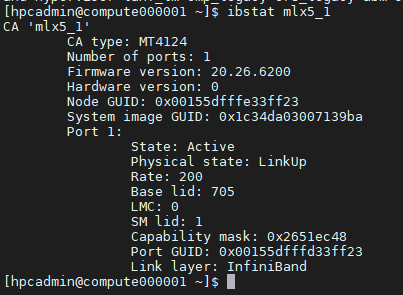

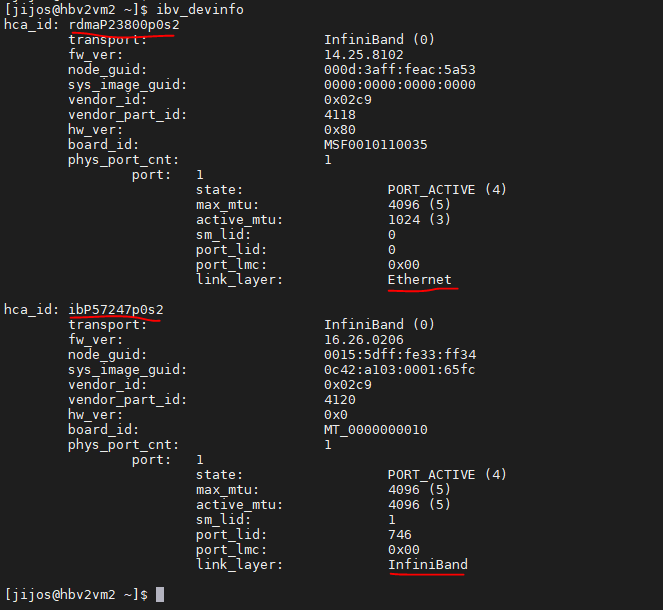

As the Ethernet NIC is now SR-IOV enabled, it will show up as a new network interface. Running the lspci command should show an additional Mellanox virtual function (VF) for Ethernet. On an InfiniBand-enabled VM, there will be 2 VFs (Ethernet and InfiniBand). For example, the following is a screenshot from an Azure HBv2 VM instance (with OFED drivers for InfiniBand).

Figure 1: In this example, “mlx5_0” is the Ethernet interface, and “mlx5_1” is the InfiniBand interface.

Figure 2: InfiniBand interface status (mlx5_1 in this example)

Impact of additional network interface:

As InfiniBand device assignment is asynchronous, the device order in the VM can be random. That is, some VMs may get “mlx5_0” as the InfiniBand interface, whereas other VMs may get “mlx5_1” as the InfiniBand interface.

However, this can be made deterministic by using a udev rule, proposed by rdma-core.

    $ cat /etc/udev/rules.d/60-ib.rules
    # SPDX-License-Identifier: (GPL-2.0 OR Linux-OpenIB)
    # Copyright (c) 2019, Mellanox Technologies. All rights reserved. See COPYING file
    #
    # Rename modes:
    # NAME_FALLBACK - Try to name devices in the following order:
    # by-pci -> by-guid -> kernel
    # NAME_KERNEL - leave name as kernel provided
    # NAME_PCI - based on PCI/slot/function location
    # NAME_GUID - based on system image GUID
    #
    # The stable names are combination of device type technology and rename mode.
    # Infiniband - ib*
    # RoCE - roce*
    # iWARP - iw*
    # OPA - opa*
    # Default (unknown protocol) - rdma*
    #
    # Example:
    # * NAME_PCI
    # pci = 0000:00:0c.4
    # Device type = IB
    # mlx5_0 -> ibp0s12f4
    # * NAME_GUID
    # GUID = 5254:00c0:fe12:3455
    # Device type = RoCE
    # mlx5_0 -> rocex525400c0fe123455
    #
    ACTION=="add", SUBSYSTEM=="infiniband", PROGRAM="rdma_rename %k NAME_PCI"

With the above udev rule, the interfaces can be named as follows:

Note that the interface name can appear differently on each VM as the PCI ID for the InfiniBand VF is different on each VM. There is ongoing work to make the PCI ID unique such that the interface is consistent across all VMs.
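To make the naming pattern concrete, the sketch below reproduces the two examples given in the comments of the rule file (`0000:00:0c.4` becoming `ibp0s12f4`, and GUID `5254:00c0:fe12:3455` becoming `rocex525400c0fe123455`). It is a simplified illustration inferred from those examples only. The real `rdma_rename` tool handles more cases (for instance non-zero PCI domains), and the function names here are hypothetical.

```python
def stable_name_from_pci(pci_address: str, prefix: str = "ib") -> str:
    """Derive a NAME_PCI style name from 'domain:bus:slot.function'.

    Simplification: the PCI domain is ignored, which matches the example
    in the rule file (domain 0000) but not necessarily every system.
    """
    domain_bus_slot, function = pci_address.rsplit(".", 1)
    _domain, bus, slot = domain_bus_slot.split(":")
    # PCI fields are hexadecimal; the stable name uses decimal numbers.
    return f"{prefix}p{int(bus, 16)}s{int(slot, 16)}f{int(function, 16)}"


def stable_name_from_guid(guid: str, prefix: str = "roce") -> str:
    """Derive a NAME_GUID style name by joining the GUID's hex groups."""
    return f"{prefix}x{guid.replace(':', '').lower()}"


if __name__ == "__main__":
    print(stable_name_from_pci("0000:00:0c.4"))          # ibp0s12f4
    print(stable_name_from_guid("5254:00c0:fe12:3455"))  # rocex525400c0fe123455
```

Because the name is a function of the PCI address, two VMs whose InfiniBand VF sits at different PCI addresses will end up with different interface names, which is the behavior described above.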

Impact on MPI libraries

Most MPI libraries do not need any changes to adapt to this new interface. However, certain MPI libraries, especially those using older UCX versions, may try to use the first available interface. If the first interface happens to be the Ethernet VF (due to asynchronous initialization), MPI jobs can fail when using such MPI libraries (and versions).
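One way to avoid depending on device order is to detect the InfiniBand device at run time and pass it to UCX explicitly. The sketch below assumes the standard Linux sysfs layout, where `/sys/class/infiniband/<device>/ports/1/link_layer` contains either `InfiniBand` or `Ethernet`, and it formats the result for UCX's `UCX_NET_DEVICES` environment variable. The function names are hypothetical, and checking only port 1 is a simplifying assumption.

```python
import os
from typing import List


def infiniband_devices(sysfs_root: str = "/sys/class/infiniband") -> List[str]:
    """Return RDMA devices whose port 1 reports an InfiniBand link layer."""
    found: List[str] = []
    if not os.path.isdir(sysfs_root):
        return found
    for dev in sorted(os.listdir(sysfs_root)):
        link_layer = os.path.join(sysfs_root, dev, "ports", "1", "link_layer")
        try:
            with open(link_layer) as fh:
                if fh.read().strip() == "InfiniBand":
                    found.append(dev)
        except OSError:
            # Device without a readable port 1 entry: skip it.
            continue
    return found


def ucx_net_devices(devices: List[str], port: int = 1) -> str:
    """Format device names as a UCX_NET_DEVICES value, e.g. 'mlx5_1:1'."""
    return ",".join(f"{dev}:{port}" for dev in devices)


if __name__ == "__main__":
    devices = infiniband_devices()
    if devices:
        print(f"UCX_NET_DEVICES={ucx_net_devices(devices)}")
    else:
        print("No InfiniBand device found")
```

With OpenMPI, the resulting value could then be exported to the ranks, for example with `mpirun -x UCX_NET_DEVICES=mlx5_1:1 ...`. Treat that as an illustration to adapt to your own MPI launcher.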

When PKEYs are explicitly required (e.g. for Platform MPI) for communication with VMs in the same InfiniBand tenant, ensure that the PKEYs are probed for in the location appropriate for the InfiniBand interface.

Resolution

UCX has now fixed this issue. If you are using an MPI library that uses UCX, make sure it is built against a UCX version that includes the fix.

HPC-X 2.7.4 for Azure includes this fix, and it will be available on the Azure CentOS-HPC VM images (CentOS 7.6, 7.7, 7.8, 8.1 based) on the Marketplace very soon. More details on the Azure CentOS-HPC VM images are available in the blog post and on GitHub.

by Contributed | Feb 11, 2021 | Technology

Final Update: Friday, 12 February 2021 01:46 UTC

We’ve confirmed that all systems are back to normal with no customer impact as of 02/12, 00:40 AM UTC. Our logs show the incident started on 02/11, 11:40 PM UTC. During the 1 hour that it took to resolve the issue, customers in the West US 2 Region with workspace-enabled Application Insights resources experienced intermittent data gaps and latent data, as well as possible misfiring of alerts based on such data gaps or latencies.

- Root Cause: The failure was due to a backend resource that hit an operational threshold.

- Incident Timeline: 1 Hour – 02/11, 11:40 PM UTC through 02/12, 00:40 AM UTC

We understand that customers rely on Application Insights as a critical service and apologize for any impact this incident caused.

-Jayadev

by Contributed | Feb 11, 2021 | Technology

Initial Update: Friday, 12 February 2021 01:35 UTC

We are aware of issues within Azure Monitor Services and are actively investigating. Some customers in the West US Region may experience data latency, data access issues, and delayed or misfired alerts.

- Next Update: Before 02/12 06:00 UTC

We are working hard to resolve this issue and apologize for any inconvenience.

-Jayadev
