by Contributed | Feb 15, 2021 | Technology

This article is contributed. See the original author and article here.

A customer asked me the following question about a system they were building.

Query

“We are building a system and using paired regions to ensure its redundancy. The database instances (primary and replica) are located in the two regions, and these instances belong to a failover group. When we tested database failover, applications in our environment could not access the primary database instance. We think the network should be reachable, since global peering is configured between the virtual networks in each region. What is the root cause of this issue, and how do we fix it?”

Background

As the background was not clear, I asked the customer to share details about their system and the issue they were facing.

- Their system is deployed to paired regions to ensure its redundancy.

- Traffic Manager sits in front of their system to distribute incoming traffic, using the priority traffic-routing method. If a failure occurs in the active region, Traffic Manager routes incoming traffic to the other region.

- Global VNet peering is configured between the virtual networks in both regions.

- They use App Service to host their applications. Their App Service instances are integrated with virtual networks, and service endpoints for SQL Database are configured on the subnets that these App Service instances are integrated with. Service endpoints for App Service are also configured so that the App Service instances can interact with each other.

- They use SQL Database in this system, and the instances in both regions belong to an auto-failover group. Because the read-write and read-only listeners are geo-independent, they do not have to modify the database connection strings used in their applications when a database failover occurs.

- For now, they do not require the primary database region to match the region to which Traffic Manager routes incoming traffic. In other words, they consider cross-region connections acceptable.

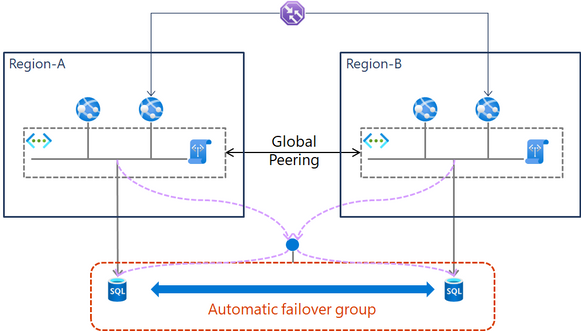

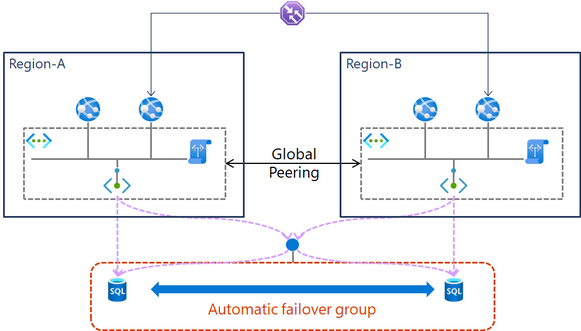

The diagram reflects their comments and what we learned from our discussion with them.

Root cause

If you are familiar with Azure, you may spot the root cause of this issue quickly: it is a service endpoint limitation. For Azure SQL Database, a service endpoint applies only to Azure service traffic within the virtual network’s region.

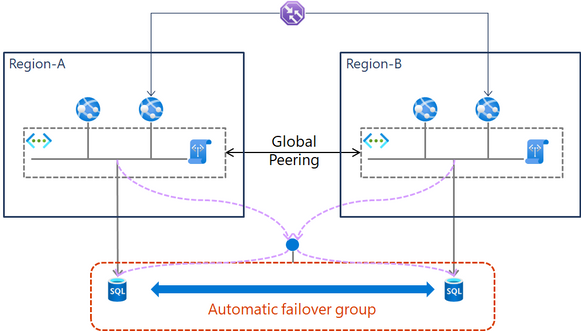

The following case works fine.

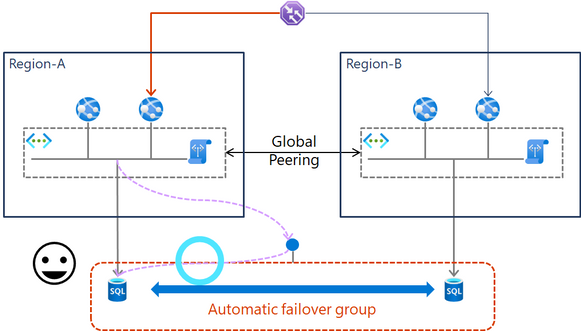

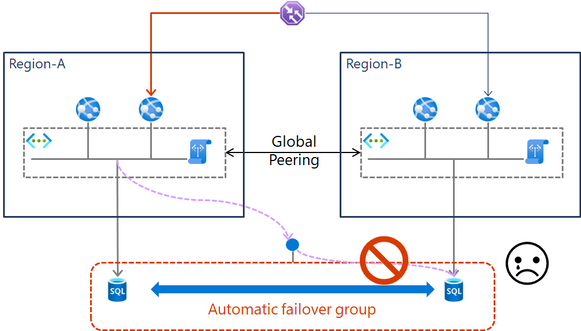

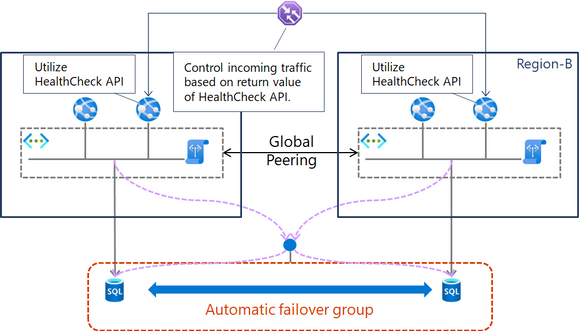

However, the following case does not work even if global peering is configured.
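The cases in this section come down to a simple rule, which can be written as a small model. This is a minimal sketch under stated assumptions. It encodes only two behaviors: the service endpoint limitation from this post, and the fact that a private endpoint is a private IP address reachable over VNet peering. The class, the function, and the region names (`region-a`, `region-b`) are hypothetical, and nothing here calls an Azure API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Connection:
    app_region: str             # region of the VNet the App Service is integrated with
    db_primary_region: str      # region currently hosting the failover group primary
    uses_private_endpoint: bool


def can_reach_primary(conn: Connection, global_peering: bool = True) -> bool:
    """Simplified reachability rule for the scenario in this post."""
    if conn.app_region == conn.db_primary_region:
        # Same region: the SQL service endpoint on the subnet covers this traffic.
        return True
    if conn.uses_private_endpoint:
        # A private endpoint is a private IP address, reachable over (global) VNet peering.
        return global_peering
    # Service endpoints only apply within the VNet's own region,
    # so global peering does not help for cross-region traffic.
    return False


before_failover = Connection("region-a", "region-a", uses_private_endpoint=False)
after_failover = Connection("region-a", "region-b", uses_private_endpoint=False)
print(can_reach_primary(before_failover), can_reach_primary(after_failover))  # True False
```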

Solutions

In this case, there are two options:

- Using private link

- Modifying traffic routing rule

1. Using private link

If cross-region connections are still acceptable, they can fix this issue by using Private Link instead of service endpoints.

Azure Private Link for Azure SQL Database and Azure Synapse Analytics

https://docs.microsoft.com/azure/azure-sql/database/private-endpoint-overview

When using private link, the diagram looks like this.

When using Private Link, the following points should be considered:

- Cost

- Performance

2. Modifying traffic routing rule

In some cases, Private Link does not meet the requirements. In that case, Traffic Manager should be configured so that the region to which it routes incoming traffic matches the primary database region. The diagram looks like this.

To achieve this, the following configuration is required.

- First, set a small priority value (e.g. 50) for the active region and a much larger value (e.g. 1000) for the other region. Because Traffic Manager sends traffic to the endpoint with the lowest priority value first, this configuration routes incoming traffic to the active region. For more details, see the following document.

Priority traffic-routing method

https://docs.microsoft.com/azure/traffic-manager/traffic-manager-routing-methods#priority-traffic-routing-method

- Next, create a health-check API that checks whether the application can access the database. If access is healthy, the API returns HTTP 200; otherwise, it returns HTTP 503.

- Following the document below, configure Traffic Manager to use this API for endpoint monitoring. If the health-check API returns 503, Traffic Manager treats the endpoint as degraded and routes incoming traffic to the other region.

Configure endpoint monitoring

https://docs.microsoft.com/azure/traffic-manager/traffic-manager-monitoring#configure-endpoint-monitoring
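The routing behavior configured in the steps above can be sketched as a small decision model. It assumes the default expected status code (200) and follows Traffic Manager's documented fallback: if every endpoint is degraded, all endpoints are treated as healthy. `Endpoint`, `healthcheck_status`, `route`, and the region names are hypothetical illustrations. They are not part of any Azure SDK, and the real health-check API would be your own web endpoint.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Endpoint:
    region: str
    priority: int     # Traffic Manager priority (1-1000); lower value = higher priority
    last_status: int  # HTTP status the health-check API returned on the last probe


def healthcheck_status(can_reach_database: bool) -> int:
    """Status code the health-check API returns: 200 if the DB is reachable, else 503."""
    return 200 if can_reach_database else 503


def route(endpoints: List[Endpoint]) -> Endpoint:
    """Priority routing: the healthy endpoint with the lowest priority value wins."""
    healthy = [e for e in endpoints if e.last_status == 200]
    # When every endpoint is degraded, Traffic Manager treats all of them as healthy.
    candidates = healthy or endpoints
    return min(candidates, key=lambda e: e.priority)


# After the database fails over, the app in the active region can no longer reach
# the new primary (service endpoint limitation), so its health check returns 503.
active = Endpoint("region-a", 50, healthcheck_status(can_reach_database=False))
standby = Endpoint("region-b", 1000, healthcheck_status(can_reach_database=True))
print(route([active, standby]).region)  # region-b
```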

This approach has the following limitations:

- Needless to say, the health-check API has to be built.

- Switching the routing region takes time. The delay depends on the tolerated number of failures (0 to 9) before the endpoint is marked as unhealthy, and on the probing interval (30 seconds by default; a 10-second interval is also available at additional cost). For more details, see the following document.

Configure endpoint monitoring

https://docs.microsoft.com/azure/traffic-manager/traffic-manager-monitoring#configure-endpoint-monitoring
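To get a feel for the failover delay, here is a rough estimate. It rests on two assumptions. First, an endpoint is marked unhealthy after (tolerated failures + 1) consecutive failed probes. Second, clients may keep a cached DNS answer for up to one DNS TTL. Probe timeouts and client retry logic are ignored, and the 60-second TTL in the example is a hypothetical value, not a recommendation.

```python
def estimated_failover_seconds(probing_interval_s: int, tolerated_failures: int,
                               dns_ttl_s: int) -> int:
    """Rough time until clients are sent to the other region after a failure."""
    if probing_interval_s not in (10, 30):
        raise ValueError("probing interval must be 30 s (default) or 10 s")
    if not 0 <= tolerated_failures <= 9:
        raise ValueError("tolerated_failures must be between 0 and 9")
    # Detection: one more failed probe than the tolerated number.
    detection = probing_interval_s * (tolerated_failures + 1)
    # Clients may still use the old DNS answer for up to one TTL.
    return detection + dns_ttl_s


print(estimated_failover_seconds(30, 3, 60))  # 180
print(estimated_failover_seconds(10, 0, 60))  # 70
```

The second call shows the trade-off: the 10-second interval with zero tolerated failures reacts fastest, but it costs more and is more sensitive to transient probe failures.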

Conclusion

In this case, I suggested both options and asked the customer to make the decision. Last but not least, although Traffic Manager is used in this case, the same solution applies when using Azure Front Door.

by Contributed | Feb 15, 2021 | Technology


Overview

We are happy to share with the SAP on Azure community a solution that automates starting and stopping your SAP systems in Azure.

This is a ready-to-use, flexible, end-to-end solution (including the PaaS Azure Automation runtime environment, scripts, runbooks, tagging process, etc.) that enables you to automatically:

- Start / Stop your SAP systems, DBMSs, and VMs.

- Start / Stop SAP application servers.

- If you use managed disks (Premium and Standard), you can decide to convert them to Standard during the stop procedure, and to Premium during the start procedure.

This way you achieve cost savings both on the compute and on the storage side!

Stopping SAP systems and SAP application servers is specifically designed as a graceful shutdown, allowing SAP users and batch jobs to finish. This way you can minimize the downtime impact on the SAP system or the SAP application server. The approach is similar on the DBMS side.

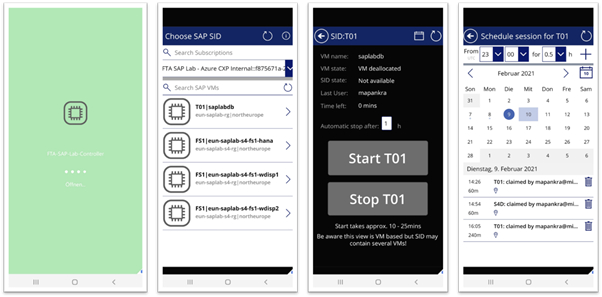

To further enhance the user experience, you can consume this functionality through a cool SAP Azure Power App. For more information, check out Martin Pankraz’s great blog post “Hey, SAP Systems! My PowerApp says Freeze! But only if you’re done yet”.

This solution is the product of a joint effort of the SAP on Azure CAT Team (Cloud Advisory Team) and the SAP on Azure FastTrack Team (Robert Biro and Martin Pankraz).

Solution Capabilities

In detail, with this solution you can do the following:

- Start / Stop a complete SAP NetWeaver Central System on Linux or Windows, and the VM.

The typical scenario here is non-production SAP systems.

- Start / Stop a complete SAP NetWeaver Distributed System on Linux or Windows, and the VMs.

The typical scenario here is non-production SAP systems.

Here you have:

- One DBMS VM (HA is currently not implemented)

- One ASCS/SCS or DVEBMGS VM (HA is currently not implemented)

- One or more SAP application servers

- It is assumed that the SAPMNT file share is located on the SAP ASCS or DVEBMGS VM.

- In a distributed SAP landscape, you can deploy your SAP instances across multiple VMs, and those VMs can be placed in different Azure resource groups.

- In a distributed SAP landscape, the SAP application instances (application servers and the SAP ASCS/SCS instance) can run on Windows and the DBMS on Linux (a so-called heterogeneous landscape). This applies to DBMSs that support such a scenario, for example SAP HANA, Oracle, IBM DB2, SAP Sybase, and SAP MaxDB.

- On the DBMS side, starting, stopping, and getting the status of the DBMS itself is implemented for:

- SAP HANA DB

- Microsoft SQL Server DB

- Currently, starting, stopping and getting the status of DBMS is NOT implemented for Oracle, IBM DB2, Sybase and MaxDB DBMSs.

You can use the solution with these DBMSs, but you need to make sure that:

- The DBMS is configured to start automatically when the OS starts.

- The SAP system startup procedure starts the DBMS first (which is the default SAP start order in the SAP instance profile).

Although we did not test with all these DBMSs, the expectation is that this approach will work.

- Start / Stop Standalone HANA system and VM

- Start / Stop SAP Application Server(s) and VM(s)

This functionality can be used to scale SAP application servers out and in.

One meaningful scenario for production SAP systems is the following. As an SAP system administrator, you identify upcoming peaks in system load (for example, Black Friday or year-end close) and know in advance how many SAPS / application servers you need to meet the additional load, and for how long. You can then either schedule or manually trigger the start / stop of a number of already prepared SAP application servers to cover the load peak.

- Converting the disks from Premium to Standard managed disks during the stop process, and the other way around during the start process to reduce the storage costs.

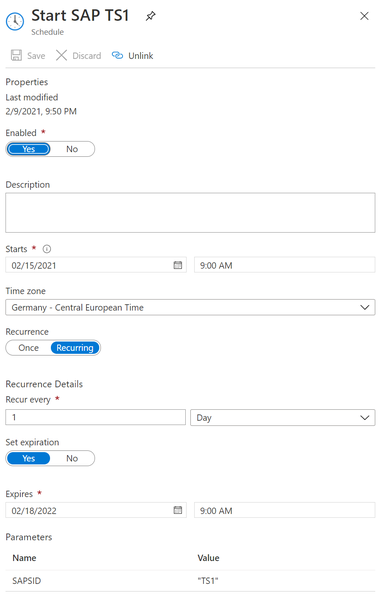

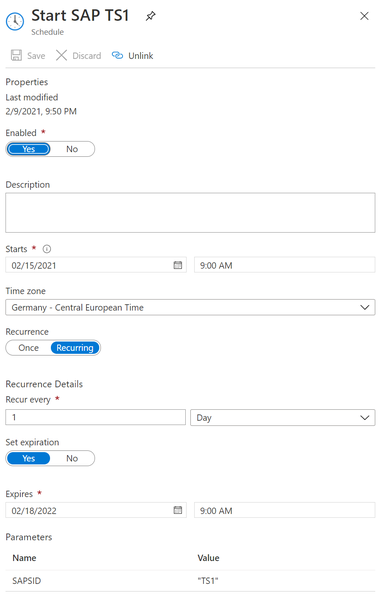

- Start / Stop actions can be executed manually, or can be scheduled.

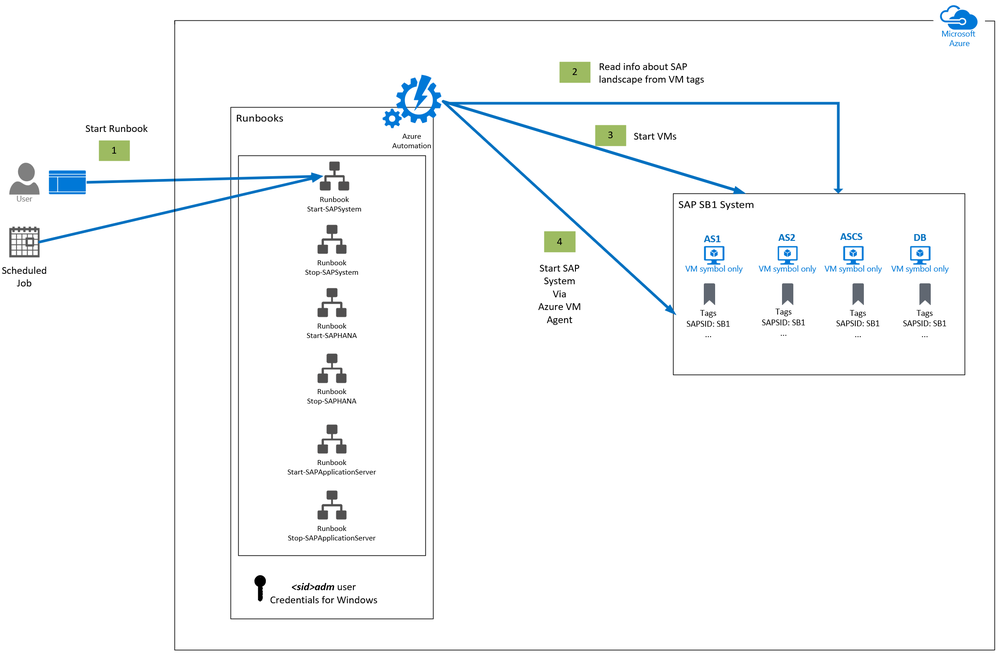
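For the scale-out scenario described in the capability list, the number of prepared application servers to start is simple arithmetic. This is a minimal sketch. All SAPS figures below are hypothetical placeholders, not sizing guidance. It also assumes each additional application server VM contributes a fixed number of SAPS and ignores the database layer.

```python
import math


def extra_app_servers(peak_saps: int, current_saps: int, saps_per_server: int) -> int:
    """Additional SAP application servers needed to cover a known load peak."""
    if saps_per_server <= 0:
        raise ValueError("saps_per_server must be positive")
    shortfall = max(0, peak_saps - current_saps)
    # Round up: a partial server still has to be a whole VM.
    return math.ceil(shortfall / saps_per_server)


# Hypothetical numbers: year-end close needs 120,000 SAPS, the running system
# delivers 80,000 SAPS, and each prepared application server VM adds 15,000 SAPS.
print(extra_app_servers(120_000, 80_000, 15_000))  # 3
```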

PaaS Solution Architecture

The solution uses an Azure Automation account (PaaS) as the automation platform to execute the SAP shutdown / startup jobs.

Runbooks are written in PowerShell, and all runbooks use the PowerShell module SAPAzurePowerShellModules. These runbooks and the module are stored in the PowerShell Gallery and are easy to import.

Information about the SAP landscape and instances is stored in VM tags. VM tagging can be done either manually or, even better, using the prepared tagging runbooks.

On Windows, the <sid>adm password is needed; it is stored securely in the Credentials area of the Azure Automation account.

Secure assets in Azure Automation include credentials, certificates, connections, and encrypted variables. These assets are encrypted and stored in Azure Automation using a unique key that is generated for each Automation account. Azure Automation stores the key in the system-managed Key Vault.

The Starting and Stopping of:

- An SAP system and an SAP Application server is implemented using scripts (calling SAP sapcontrol executable).

- An SAP HANA DB is implemented using scripts (calling SAP sapcontrol executable).

- SQL Server DB start / stop / monitoring is implemented using scripts (calling SAP Host Agent executable).

- All scripts are executed at OS level, in a secure way via Azure VM agent.

- All SAP system and DBMS start / stop / monitoring scripts are generated on the fly at runtime, so there is no need to store them anywhere.

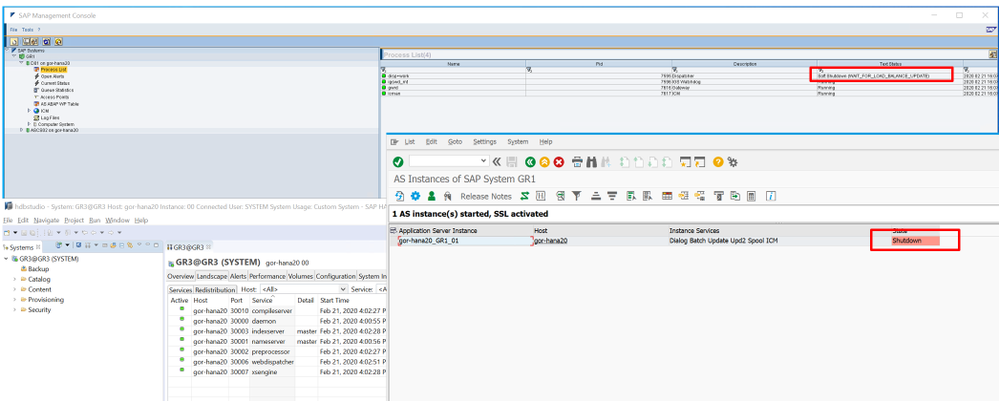

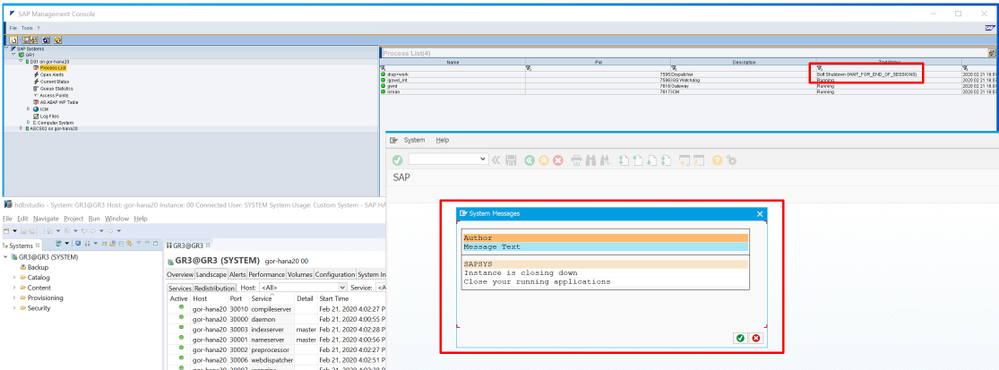

Soft / Graceful Shutdown of SAP System, Application Servers, and DBMS

SAP System and SAP Application Servers

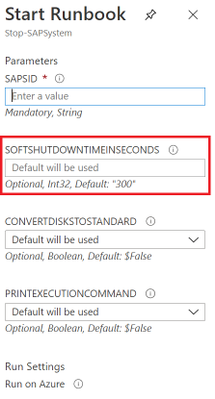

The SAP system or SAP application server is stopped using an SAP soft (graceful) shutdown procedure within a specified timeout. Whether the whole SAP system or a single SAP application server is being stopped, the SAP soft shutdown gracefully handles SAP processes, users, etc. within the specified time.

Users get a pop-up asking them to log off, the SAP application server(s) are removed from the different logon groups (users, batch, RFC, etc.), and the procedure waits for SAP batch jobs to complete (until the specified timeout is reached). This functionality is implemented in the SAP kernel.

INFO: You can specify the SAP soft shutdown time as a parameter. The default value is 300 seconds.
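The soft shutdown described above follows a "drain, then give up at the timeout" pattern. The sketch below models only that pattern, so you can reason about the timeout parameter. It is not how the SAP kernel or the solution's runbooks implement it. `soft_shutdown`, `FakeClock`, and the job counts are hypothetical, and the timeout is assumed to be in seconds.

```python
import time
from typing import Callable


def soft_shutdown(active_work: Callable[[], int], timeout_s: float = 300,
                  poll_s: float = 5,
                  clock: Callable[[], float] = time.monotonic,
                  sleep: Callable[[float], None] = time.sleep) -> str:
    """Wait for sessions/jobs to drain; give up once timeout_s has elapsed.

    Returns "graceful" if nothing was left running in time, otherwise "forced"
    (the point where the remaining processes would be stopped hard).
    """
    deadline = clock() + timeout_s
    while True:
        if active_work() == 0:
            return "graceful"
        if clock() >= deadline:
            return "forced"
        sleep(poll_s)


class FakeClock:
    """Simulated clock so the example runs instantly."""
    def __init__(self) -> None:
        self.now = 0.0

    def time(self) -> float:
        return self.now

    def sleep(self, seconds: float) -> None:
        self.now += seconds


remaining = iter([3, 2, 1, 0])  # hypothetical count of running batch jobs per poll
fake = FakeClock()
print(soft_shutdown(lambda: next(remaining), clock=fake.time, sleep=fake.sleep))  # graceful
```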

DBMS Shutdown

For SAP HANA and SQL Server, a DB soft shutdown is also implemented. It stops these DBMSs gracefully, giving the DB time to consistently flush all content from memory to storage and stop all DB processes.

User Interface Possibilities

A user can trigger start / stop in two ways:

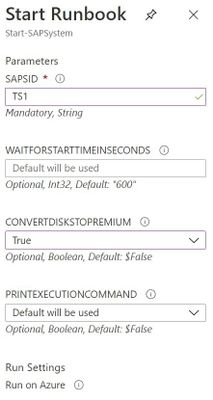

- using the Azure Automation account portal UI

- or via the modern SAP Azure Power App, which can be used in a browser, on smartphones, or in Teams.


The SAP PowerApp is fully integrated with the backend start / stop functionality in the Azure Automation account. It automatically collects information on all available SAP SIDs (via the SAPSID tag) and offers SAP system start, stop, and status functionality!
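The discovery step mentioned above amounts to grouping VMs by the value of their SAPSID tag. The sketch shows only that grouping. It assumes the VM tags have already been read into a plain dictionary, for example from the Azure portal or Azure Resource Graph. The VM names, the `Owner` tag, and the SID values are hypothetical. Apart from SAPSID, no tag name used by the solution is implied.

```python
from collections import defaultdict
from typing import Dict, List


def sap_systems_by_sid(vm_tags: Dict[str, Dict[str, str]]) -> Dict[str, List[str]]:
    """Group VM names by their SAPSID tag value; VMs without the tag are ignored."""
    systems: Dict[str, List[str]] = defaultdict(list)
    for vm_name, tags in vm_tags.items():
        sid = tags.get("SAPSID")
        if sid:
            systems[sid].append(vm_name)
    return {sid: sorted(vms) for sid, vms in systems.items()}


inventory = {  # hypothetical VMs and tags
    "ts1-db": {"SAPSID": "TS1"},
    "ts1-ascs": {"SAPSID": "TS1"},
    "ts2-app1": {"SAPSID": "TS2"},
    "jumpbox": {"Owner": "ops"},
}
print(sap_systems_by_sid(inventory))  # {'TS1': ['ts1-ascs', 'ts1-db'], 'TS2': ['ts2-app1']}
```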

Cost Optimization Potential

Cost Savings on Compute for non-Productive SAP Systems

Non-production SAP systems, like dev, test, demo, training, etc., typically do not need to run 24/7. Let’s assume you run them 8 hours per day, Monday through Friday. This means you run and pay for each VM for 8 hours x 5 days = 40 hours per week. For the remaining 128 hours of the 168-hour week you don’t pay for compute, which translates into approximately 76 % savings on compute!
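The same calculation, written as a small function so you can plug in your own schedule. This is a minimal sketch. It assumes compute is billed per hour and that deallocated VMs incur no compute charges. Disks are still billed, which is covered in the storage section.

```python
HOURS_PER_WEEK = 24 * 7  # 168


def compute_savings(hours_per_day: float, days_per_week: int) -> float:
    """Fraction of weekly VM compute cost saved by running only on the given schedule."""
    running = hours_per_day * days_per_week
    return (HOURS_PER_WEEK - running) / HOURS_PER_WEEK


print(f"{compute_savings(8, 5):.1%}")  # 76.2%
```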

Cost Savings on Compute for Productive SAP Systems

Productive SAP systems typically run 24/7, and you never completely shut them down. Still, there is a huge potential for savings in the SAP application layer, which constitutes the largest portion of SAPS in an SAP system.

INFO: SAP Application Performance Standard (SAPS) is a hardware-independent unit of measurement that describes the performance of a system configuration in the SAP environment. It is derived from the Sales and Distribution (SD) benchmark, where 100 SAPS is defined as 2,000 fully business processed order line items per hour. SAPS is an SAP measure of compute process power.

In an on-premises environment, an SAP system is often oversized so that it can process the required peak loads. But in reality, these peaks are rare (maybe a few days every three months), and most of the time such systems are underutilized. I’ve seen prod systems running at 5 to 10 % total CPU utilization most of the time.

In the cloud, we can run only what we need and pay only for what we use. Hence, the SAP application server layer is a perfect candidate for bringing down the cost of SAP productive systems!

Here, this solution offers jobs to start / stop SAP application servers and VMs. It uses a soft shutdown, allowing SAP users and processes enough time to complete.

Cost Savings on Storage

If you use Premium storage, there is an opportunity for cost savings by converting these managed disks to Standard while the system is not running.

Let’s say you need to use Premium storage (especially for the DBMS layer) to get a good SAP performance during the runtime. But once the SAP system (and VMs) is stopped, if you choose to convert the disks from Premium to Standard disks, you will pay much less on the storage during the time the system and the VMs are stopped.

During the start procedure, you can decide to convert the disks back to Premium to get good performance while the SAP systems are running, and pay for the more expensive Premium storage only while the system is running.

For example, if the SAP system would run 8 hours x 5 days = 40 hours, and the SAP system is stopped for 128 hours per week, that means that 128 hours per week, you will not pay the price for Premium storage, but the reduced price for Standard storage.

For example, the price of a 1 TB P30 disk is approximately 2 times higher than that of a 1 TB S30 Standard HDD disk. For the above-mentioned scenario, savings on a 1 TB managed disk would be approximately 54 %!
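Put as a formula, the weekly storage savings are (stopped hours / 168) × (1 − Standard price / Premium price). The sketch below evaluates it. It assumes disk prices can be prorated per hour and that the disk conversion itself is free. The result depends heavily on the price ratio. A ratio of exactly 2 gives about 38 %, and the 54 % figure corresponds to a Premium/Standard ratio of roughly 3.4, so plug in current prices for your region and disk size.

```python
HOURS_PER_WEEK = 168


def storage_savings(running_hours: float, premium_to_standard_ratio: float) -> float:
    """Fraction of weekly disk cost saved by switching Premium -> Standard while stopped.

    premium_to_standard_ratio = Premium price / Standard price for the same disk size.
    """
    stopped = HOURS_PER_WEEK - running_hours
    return (stopped / HOURS_PER_WEEK) * (1 - 1 / premium_to_standard_ratio)


print(f"{storage_savings(40, 2.0):.0%}")   # 38%
print(f"{storage_savings(40, 3.43):.0%}")  # 54%
```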

For the exact updated pricing for managed disks, check here.

Cost of Azure Automation Account PaaS Service

The cost of using the Azure Automation service is extremely low. Billing for jobs is based on the number of job run-time minutes used in the month, and billing for watchers is based on the number of hours used in the month. Charges for process automation are incurred whenever a job or watcher runs. You are billed only for the minutes / hours that exceed the free included units (500 minutes per month). Beyond the free 500 minutes, you pay €0.002 per minute.

Let’s say one SAP system start or stop takes on average 15 minutes. And let’s assume you scheduled your SAP system to start every morning from Monday to Friday and stop in the evening as well. That will be 10 executions per week, and 40 per month for one SAP system.

This means that in 500 free minutes you can execute 33 start or stop procedures for free.

Everything beyond that you need to pay for. One start or stop (of 15 min) costs 15 min * €0.002 = €0.03. So even without counting the free minutes, 40 starts / stops of ONE SAP system would cost only €1.20 per month!
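The same arithmetic as a small calculator, using the 500 free minutes and the €0.002 per-minute price quoted in this post. Check current pricing before relying on the numbers. `Decimal` avoids floating-point rounding on money. Watcher hours are ignored, and the `free_minutes_left` parameter is a hypothetical way to model free minutes already consumed by other jobs in the same account.

```python
from decimal import Decimal

FREE_MINUTES = 500                   # free job minutes per month (from the post)
PRICE_PER_MINUTE = Decimal("0.002")  # EUR per job minute (from the post)


def monthly_job_cost(runs: int, minutes_per_run: int,
                     free_minutes_left: int = FREE_MINUTES) -> Decimal:
    """Azure Automation job cost for one month (watchers ignored)."""
    billable = max(0, runs * minutes_per_run - free_minutes_left)
    return billable * PRICE_PER_MINUTE


print(FREE_MINUTES // 15)                             # 33 free 15-minute runs
print(monthly_job_cost(40, 15))                       # 0.200 (600 min - 500 free)
print(monthly_job_cost(40, 15, free_minutes_left=0))  # 1.200 (free minutes used elsewhere)
```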

For up-to-date pricing, you can check here.

Solution Cost of Azure Automation Account Management

Often, when you use a solution which offers you specific functionality, you need to manage it as well, learn it, etc. All this generates additional costs, which can be quite high.

As the Azure Automation account is a PaaS service, you have ZERO management costs here!

Plus, it is easy to set up and use.

Documentation and Scripts

All documentation and scripts can be found here on GitHub. All job scripts and the SAP on Azure PowerShell module are available in the PowerShell Gallery.

by Contributed | Feb 15, 2021 | Technology


Hello Folks,

Have you seen the content our team put together for our “ITOpsTalks: All things Hybrid” online event? If not, you can still watch all the sessions online. Now, to the point of today’s post: during the OPS117: PowerShell Deep dive session, Joey Aiello and Jason Helmick introduced us to Predictive IntelliSense, a feature of the PSReadLine module.

PSReadLine replaces the command line editing experience of PowerShell for versions 3 and up. It provides:

- Syntax coloring.

- Simple syntax error notification.

- A good multi-line experience (both editing and history).

- Customizable key bindings.

- Cmd and emacs modes (neither are fully implemented yet, but both are usable).

- Many configuration options.

- Bash style completion (optional in Cmd mode, default in Emacs mode).

- Bash/zsh style interactive history search (CTRL-R).

- Emacs yank/kill ring.

- PowerShell token based “word” movement and kill.

- Undo/redo.

- Automatic saving of history, including sharing history across live sessions.

- “Menu” completion (somewhat like Intellisense, select completion with arrows) via Ctrl+Space.

The “out of box” experience is meant to be very familiar to PowerShell users with no need to learn any new keystrokes.

I really think this is a great new editing experience for anyone working with PowerShell scripts. During our talk, I asked Joey: “Is there a way to share the PowerShell console history across multiple machines?” Jason mentioned that you can pick up the history file and move it around.

I ran a little experiment and it’s working flawlessly. So far… LOL

Preparing Machine 1

Before we start: I use the latest version of PowerShell 7 on all my machines. Yes, Windows PowerShell is installed too, but I did NOT try the following experiment with Windows PowerShell. If you plan to try this on Windows PowerShell, you will need PowerShellGet version 1.6.0 or higher to install the latest prerelease version of PSReadLine.

Windows PowerShell 5.1 ships an older version of PowerShellGet which doesn’t support installing prerelease modules, so Windows PowerShell users need to install the latest PowerShellGet by running the following commands from an elevated Windows PowerShell session.

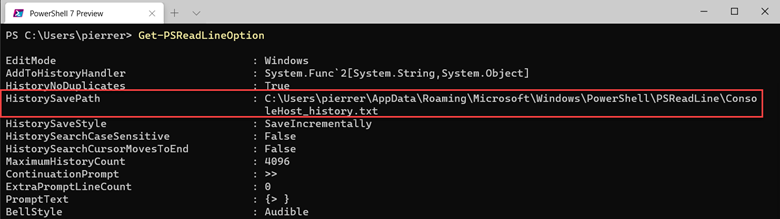

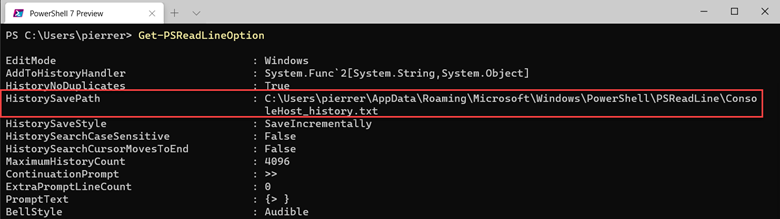

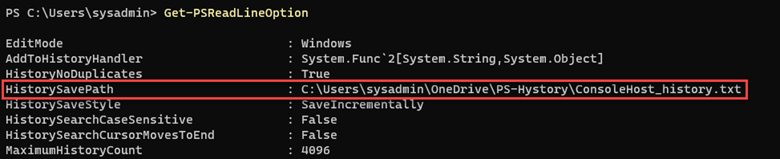

So, once I had PSReadLine installed on my PC, I verified the location of my history file by using the Get-PSReadLineOption command.

I copied the “ConsoleHost_history.txt” file to a folder I created in my OneDrive folder.

In my case, that is “D:\1drv\OneDrive\PS-Hystory”, but you can put it wherever you want. After copying the file, all I needed to do was set the location by using the following command:

```powershell
Set-PSReadLineOption -HistorySavePath D:\1drv\OneDrive\PS-Hystory\ConsoleHost_history.txt
```

Preparing Machine 2

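Next, I moved to one of the laptops I use when I travel (which I have not done in a long time) and installed the module:

```powershell
Install-Module -Name PSReadLine -AllowPrerelease
```

imported it:

```powershell
Import-Module PSReadLine
```

and used the Set-PSReadLineOption cmdlet to turn on history-based predictions and point PSReadLine at the shared history file:

```powershell
Set-PSReadLineOption -PredictionSource History
# Same OneDrive path as on machine 1; adjust it if OneDrive lives elsewhere on this machine.
Set-PSReadLineOption -HistorySavePath D:\1drv\OneDrive\PS-Hystory\ConsoleHost_history.txt
# These settings last only for the current session; add both lines to $PROFILE to keep them.
```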

And checked my settings.

Finalizing settings

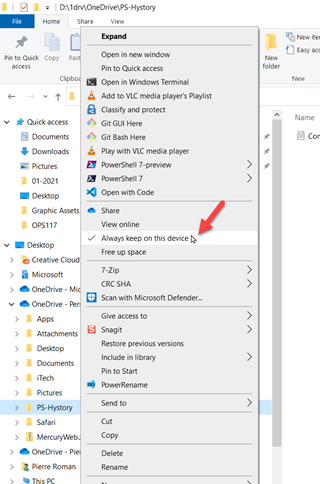

To ensure that the folder is always up to date, I set its OneDrive property to “Always keep on this device” on all my machines.

Now I have a shared history file on all my PCs. This was fun and will be useful.

Cheers!

Pierre

by Contributed | Feb 15, 2021 | Technology


Hi Everyone!

My name is Fabian Scherer, Customer Engineer (CE, formerly known as PFE) at Microsoft Germany for Microsoft Endpoint Manager related topics. In some of my previous engagements, I analysed and troubleshot problems related to Site Maintenance Tasks and developed a strategy to improve them.

This blog post will cover the following topics:

- What are Site Maintenance Tasks

- Sources and helpful commands

- Ensure all Site Maintenance Tasks are up and running

- Check the defined timespans

- Synchronize a global MEMCM hierarchy

- Disable Site Maintenance Tasks for Hierarchy Updates

What are Site Maintenance Tasks

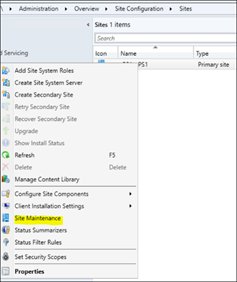

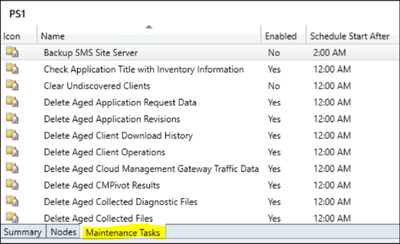

The MEMCM built-in Maintenance Tasks can be configured and used to automatically clean up obsolete and old data from your Configuration Manager database. The Site Maintenance Tasks are located at:

Administration > Overview > Site Configuration > Sites > Properties > Site Maintenance

Starting with Configuration Manager Version 1906, there is an additional category at the bottom of the console.

If you open one of these tasks, you can enable / disable it and define the data scope (e.g. the age of data) and the schedule in which the task attempts to run and perform its operation. Note: You cannot define the exact start time of an individual task. Configuration Manager controls the number and sequence of all scheduled tasks and continuously evaluates whether there is enough time left to start and complete the next task within the allowed timeframe.

If there is an open slot during the defined timeframe, the task is executed. If the timeframe is over or about to end, the task may not be started and waits until the next scheduled run. Only the ‘Backup SMS Site Server’ task terminates other running tasks, to ensure its own completion.
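To reason about your own schedule, the behavior described above can be approximated with a simple model. A task is started only if its expected runtime still fits before the maintenance window closes; otherwise it waits for its next run. This is a hypothetical sketch, not Configuration Manager's actual internal scheduling logic. It also assumes that tasks run one after another in a fixed order, and the task names and runtimes are made up.

```python
from datetime import datetime, timedelta
from typing import List, Tuple


def plan_window(tasks: List[Tuple[str, timedelta]], start: datetime, end: datetime):
    """Start tasks back to back in list order; defer any task that cannot finish before `end`."""
    started: List[Tuple[str, datetime]] = []
    deferred: List[str] = []
    now = start
    for name, expected_runtime in tasks:
        if now + expected_runtime <= end:
            started.append((name, now))
            now += expected_runtime
        else:
            deferred.append(name)  # waits for its next scheduled run
    return started, deferred


window_start = datetime(2021, 2, 15, 0, 0)
window_end = window_start + timedelta(hours=5)
tasks = [("Task A", timedelta(hours=2)), ("Task B", timedelta(hours=2)),
         ("Task C", timedelta(hours=2)), ("Task D", timedelta(minutes=30))]
started, deferred = plan_window(tasks, window_start, window_end)
print([name for name, _ in started], deferred)  # ['Task A', 'Task B', 'Task D'] ['Task C']
```

The deferred "Task C" illustrates how a long task can repeatedly miss its window while shorter tasks keep running, which is one way data starts to pile up.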

Sources and Helpful Commands

Microsoft Documentation:

Maintenance tasks for Configuration Manager

Reference for maintenance tasks in Configuration Manager

Related Logs:

%MECM_installdir%\Logs\smsdbmon.log

SQL Execution Status:

```sql
SELECT * FROM SQLTaskStatus
```

Enforce a Site Maintenance Task to ‘run now’:

PowerShell (credits go to Herbert Fuchs for this script):

```powershell
# Run from a ConfigMgr PowerShell session connected to the site (CMSite drive)
$SiteCode = 'FOX'
# Look up the task that should run immediately
$MT = Get-CMSiteMaintenanceTask -SiteCode $SiteCode -Name 'Delete Aged Scenario Health History'
# Parameters for the SMS_SQLTaskStatus.RunTaskNow method
$MethodParam = New-Object 'System.Collections.Generic.Dictionary[String,Object]'
$MethodParam.Add('SiteCode', $SiteCode)
$MethodParam.Add('TaskName', $MT.TaskName)
$ConfigMgrCon = Get-CMConnectionManager
$ConfigMgrCon.ExecuteMethod('SMS_SQLTaskStatus', 'RunTaskNow', $MethodParam)
```

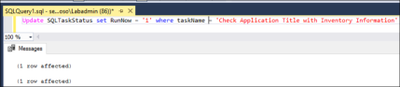

SQL:

```sql
-- Replace $TaskName with the task name, e.g. 'Delete Aged Scenario Health History'
UPDATE SQLTaskStatus SET RunNow = '1' WHERE TaskName = '$TaskName'
```

Get Runtime:

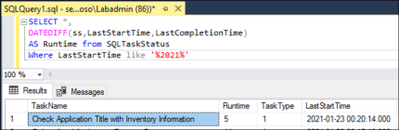

SQL:

```sql
SELECT *,
    DATEDIFF(ss, LastStartTime, LastCompletionTime) AS Runtime  -- runtime in seconds
FROM SQLTaskStatus
WHERE YEAR(LastStartTime) = 2021
/* Available timespans (datepart argument of DATEDIFF):
   hour         hh
   minute       mi, n
   second       ss, s
   millisecond  ms
   microsecond  mcs
   nanosecond   ns */
```

Ensure all Site Maintenance Tasks are up and running

Using the Get Runtime SQL Query will give you an overview of all Site Maintenance Tasks on your Site with a LastStartTime timestamp in 2021.

If you find a task with a negative value in the ‘Runtime’ column, it indicates that the task is not running properly and did not complete successfully on its last attempt (ignore ‘Evaluate Provisioned AMT Computer Certificates’ if it shows up; it is an outdated task).
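If you export the result of the Get Runtime query, the negative-runtime check can be scripted. This is a minimal sketch. It assumes each row has been exported as a `(TaskName, LastStartTime, LastCompletionTime)` tuple. It also treats a missing completion time like a negative runtime, which is an assumption about how a never-completed task appears. The timestamps below are hypothetical.

```python
from datetime import datetime
from typing import Iterable, List, Optional, Tuple

# (TaskName, LastStartTime, LastCompletionTime), e.g. exported from SQLTaskStatus
Row = Tuple[str, datetime, Optional[datetime]]

OUTDATED_TASKS = {"Evaluate Provisioned AMT Computer Certificates"}


def tasks_needing_attention(rows: Iterable[Row]) -> List[str]:
    """Names of tasks whose last run did not complete (negative or missing runtime)."""
    flagged = []
    for name, last_start, last_completion in rows:
        if name in OUTDATED_TASKS:
            continue
        # Assumption: a task that never completed has no completion time at all.
        if last_completion is None or (last_completion - last_start).total_seconds() < 0:
            flagged.append(name)
    return flagged


rows = [  # hypothetical values
    ("Delete Aged Scenario Health History", datetime(2021, 2, 10, 0, 0), datetime(2021, 2, 10, 0, 5)),
    ("Rebuild Indexes", datetime(2021, 2, 14, 0, 0), datetime(2021, 2, 7, 1, 30)),
    ("Evaluate Provisioned AMT Computer Certificates", datetime(2021, 2, 14), datetime(2021, 1, 1)),
]
print(tasks_needing_attention(rows))  # ['Rebuild Indexes']
```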

To fix a maintenance task with a negative runtime, you can use the “Enforce a Site Maintenance Task to ‘run now’” PowerShell script or SQL query to trigger the task immediately, regardless of its defined schedule.

After this task is completed, the data is cleaned up and removed from the database. If this task is still failing, you will have to start troubleshooting the specific task.

The most common reason for a failing task is that it overlaps with other tasks. Sometimes the task cannot be started during the defined timeframe, or it is terminated by the “Backup SMS Site Server” task. As a result, the intended data is not cleaned up and continues to pile up, which may kick off an avalanche of problems coming your way.

Check the defined timespans

The main challenges are:

- Ensure that all Site Maintenance Tasks are running

- Ensure that there are no blocking tasks

- Synchronize the tasks with the backup

- Ensure that the load is evenly balanced

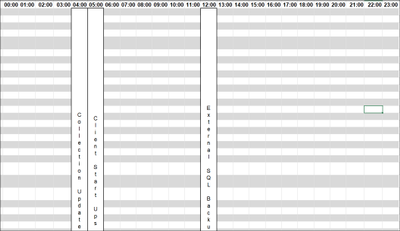

So I recommend building a view (an example Excel sheet is attached) and entering the ‘external’ indicators, such as:

- Distribution Point Content Validation

- Collection Evaluation

- External SQL Backup

- Maintenance Scripts (for example Ola Hallengren)

After that, you should add the Active Site Maintenance Tasks and the Runtime for this Site:

Now you can start to plan the Site Maintenance Tasks. I prefer a system where ‘related categories’ get consolidated and the runtime does not exceed 50% of the defined timeslot:

Summarized Categories:

- Specific Site Cleanup Tasks

- The Central Administration Site and the Primary Site have separate tasks, which are only available on the specific site role

- Client related Information Cleanup

- Delete all client-related data

- Replication Data

- All data that has to be replicated across the infrastructure

- Maintenance Scripts

- Like Ola Hallengren SQL Maintenance Scripts

- Backup
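The 50 % rule from the planning step above is easy to check automatically once you have measured runtimes from the Get Runtime query. This is a minimal sketch. The slot labels and durations are hypothetical, and `max_share` defaults to the 50 % the author prefers.

```python
from datetime import timedelta
from typing import Dict, List


def over_budget_slots(slots: Dict[str, timedelta],
                      planned: Dict[str, List[timedelta]],
                      max_share: float = 0.5) -> List[str]:
    """Return the slots whose summed task runtimes exceed max_share of the slot length."""
    flagged = []
    for slot, length in slots.items():
        total = sum(planned.get(slot, []), timedelta())
        if total > length * max_share:
            flagged.append(slot)
    return flagged


slots = {"Mon 00:00-04:00": timedelta(hours=4), "Tue 00:00-04:00": timedelta(hours=4)}
planned = {  # hypothetical measured runtimes of the tasks planned into each slot
    "Mon 00:00-04:00": [timedelta(hours=1), timedelta(minutes=30)],
    "Tue 00:00-04:00": [timedelta(hours=2), timedelta(minutes=45)],
}
print(over_budget_slots(slots, planned))  # ['Tue 00:00-04:00']
```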

Synchronize a Global MECM hierarchy

I recommend synchronizing the Site Maintenance Task timeslots across the hierarchy.

This helps by:

- Minimizing the administrative effort

- Minimizing the replication data

- Minimizing the replication time, especially during a Disaster Recovery

This is quite easy if your whole hierarchy runs in the same time zone. If you are running a global hierarchy, I recommend using the time zone of your CAS as the baseline and converting the timeslots to the time zones of your primary sites (example: the CAS runs in EMEA and the primary site in UTC+6):

Now you can simply copy/paste the timespans to the other time zone and synchronize the global Replication Data.
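Converting a CAS timeslot to a primary site's local time is a fixed-offset calculation. This is a minimal sketch. It uses fixed UTC offsets, so daylight saving time changes are not handled. UTC+1 for the CAS is an assumption, since EMEA spans several offsets, and the function name is hypothetical.

```python
from datetime import datetime, time, timedelta, timezone


def to_site_local(cas_time: time, cas_utc_offset_h: float, site_utc_offset_h: float) -> time:
    """Convert a CAS-local start time to the clock time at a primary site.

    Fixed UTC offsets only: daylight saving time is NOT handled, and a shift to
    the next or previous calendar day is not reported.
    """
    cas_tz = timezone(timedelta(hours=cas_utc_offset_h))
    site_tz = timezone(timedelta(hours=site_utc_offset_h))
    # Any date works here because only the time of day matters for the slot.
    moment = datetime(2021, 1, 4, cas_time.hour, cas_time.minute, tzinfo=cas_tz)
    return moment.astimezone(site_tz).time()


# CAS at UTC+1, primary site at UTC+6: a 22:00 CAS slot starts at 03:00 (next day) locally.
print(to_site_local(time(22, 0), 1, 6))  # 03:00:00
```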

Disable Site Maintenance Tasks for Hierarchy Updates

It is a Microsoft recommendation to ensure that no Site Maintenance Tasks are running during an MECM hierarchy update. To ensure this, I recommend disabling all tasks. Doing this manually, step by step, wastes time, so I would like to share three PowerShell scripts with you that export, disable, and re-enable the tasks:

Preparation:

Copy the attached scripts to your server and create the folders the scripts use under %Scriptpath%: Data, Backup, and Logs.

Edit the following values in the scripts:

- $Sitecode (Script 1)

- $ProviderMachineName (Script 1)

- %Scriptpath% (Script 1, 2, 3)

1_Get_Site_Maintenance_Tasks.ps1

This first script creates a .csv file with all Site Maintenance Task values. If you run it a second time, it backs up your existing .csv file to the Backup folder. This ensures that no data is lost if someone runs the script at the wrong time.

```powershell
# Values to edit before running
$SiteCode = "XYZ"
$ProviderMachineName = "Servername.FQDN"
$ExportFile = "%Scriptpath%\Data\SiteMaintenanceValues.csv"
$Bkup = "%Scriptpath%\Backup\SiteMaintenancesValues_" + [DateTime]::Now.ToString("ddMMyyyy_HHmmss") + ".csv"

# Back up the previous export (if any) before writing a new one
if (Test-Path $ExportFile) {
    Copy-Item -Path $ExportFile -Destination $Bkup
    Remove-Item $ExportFile
}

# Load the ConfigurationManager module and connect to the site drive
$initParams = @{}
if ($null -eq (Get-Module ConfigurationManager)) {
    Import-Module "$($ENV:SMS_ADMIN_UI_PATH)\..\ConfigurationManager.psd1" @initParams
}
if ($null -eq (Get-PSDrive -Name $SiteCode -PSProvider CMSite -ErrorAction SilentlyContinue)) {
    New-PSDrive -Name $SiteCode -PSProvider CMSite -Root $ProviderMachineName @initParams
}
Set-Location "$($SiteCode):" @initParams

# Export all Site Maintenance Task settings
Get-CMSiteMaintenanceTask | Export-Csv $ExportFile
```

2_Disable_Site_Maintenance_Tasks.ps1

This second script disables all tasks that are marked as enabled in the .csv file from the first script, except ‘Update Application Catalog Tables’.

```powershell
$Values = Import-Csv "%Scriptpath%\Data\SiteMaintenanceValues.csv"
$Log = "%Scriptpath%\Logs\Disable_SiteMaintenanceTasks.log"
$Time = Get-Date -Format "dd-MM-yyyy // HH-mm-ss"
"$Time New Run - Disable Site Maintenance Tasks." | Out-File $Log -Append

foreach ($Item in $Values) {
    $SiteCode = $Item.SiteCode
    $ProviderMachineName = $Item.PSComputerName
    $DeleteOlderThan = $Item.DeleteOlderThan   # kept for a possible write-back of the settings
    $DeviceName = $Item.DeviceName
    $Name = $Item.TaskName

    # Only disable tasks that were enabled; leave 'Update Application Catalog Tables' alone
    if ($Item.Enabled -ne 'TRUE' -or $Name -eq 'Update Application Catalog Tables') {
        "$Name on $SiteCode skipped." | Out-File $Log -Append
        continue
    }

    "Start working at $Name on $SiteCode." | Out-File $Log -Append

    # Load the ConfigurationManager module and connect to the site drive
    $initParams = @{}
    if ($null -eq (Get-Module ConfigurationManager)) {
        Import-Module "$($ENV:SMS_ADMIN_UI_PATH)\..\ConfigurationManager.psd1" @initParams
    }
    if ($null -eq (Get-PSDrive -Name $SiteCode -PSProvider CMSite -ErrorAction SilentlyContinue)) {
        New-PSDrive -Name $SiteCode -PSProvider CMSite -Root $ProviderMachineName @initParams
    }
    Set-Location "$($SiteCode):" @initParams

    Set-CMSiteMaintenanceTask -SiteCode $SiteCode -Name $Name -Enabled $false
    "Finished $Name on $SiteCode" | Out-File $Log -Append
    "---" | Out-File $Log -Append
}
```

3_Enable_Site_Maintenance_Tasks.ps1

This third script should run after the update is done. It enables all Site Maintenance Tasks that are marked as enabled in the .csv file, except “Backup SMS Site Server”, which has to be enabled manually. The reason is the backed-up .csv data: if you run an environment with a remote SQL Server, the backup path value is stored as “F:\SCCM_Backup|Y:\SQL_Backup”, and PowerShell is unable to handle the pipe in that value. I also prepared the option to write the settings back, but it is not necessary because the values are retained in the infrastructure.

```powershell
$Values = Import-Csv "%Scriptpath%\Data\SiteMaintenanceValues.csv"
$Log = "%Scriptpath%\Logs\Enable_SiteMaintenanceTasks.log"
$Time = Get-Date -Format "dd-MM-yyyy // HH-mm-ss"
"$Time New Run - Set back to default Values." | Out-File $Log -Append

foreach ($Item in $Values) {
    $SiteCode = $Item.SiteCode
    $ProviderMachineName = $Item.PSComputerName
    $DeleteOlderThan = $Item.DeleteOlderThan   # kept for a possible write-back of the settings
    $DeviceName = $Item.DeviceName
    $Name = $Item.TaskName
    $Enabled = $Item.Enabled

    if ($Enabled -ne 'TRUE') {
        "$Name on $SiteCode is deactivated per design and will be skipped." | Out-File $Log -Append
        "---" | Out-File $Log -Append
        continue
    }

    "Start working at $Name on $SiteCode." | Out-File $Log -Append

    # Load the ConfigurationManager module and connect to the site drive
    $initParams = @{}
    if ($null -eq (Get-Module ConfigurationManager)) {
        Import-Module "$($ENV:SMS_ADMIN_UI_PATH)\..\ConfigurationManager.psd1" @initParams
    }
    if ($null -eq (Get-PSDrive -Name $SiteCode -PSProvider CMSite -ErrorAction SilentlyContinue)) {
        New-PSDrive -Name $SiteCode -PSProvider CMSite -Root $ProviderMachineName @initParams
    }
    Set-Location "$($SiteCode):" @initParams

    if ($Name -eq "Backup SMS Site Server") {
        # Must be re-enabled manually: its backup path value cannot be restored from the .csv
        "$Name could not be restored." | Out-File $Log -Append
    }
    else {
        Set-CMSiteMaintenanceTask -Name $Name -Enabled $true -SiteCode $SiteCode
        "Enabled $Name on $SiteCode" | Out-File $Log -Append
    }
    "---" | Out-File $Log -Append
}
```

Fabian Scherer

CE

Disclaimer

The sample scripts are not supported under any Microsoft standard support program or service. The sample scripts are provided AS IS without warranty of any kind. Microsoft further disclaims all implied warranties including, without limitation, any implied warranties of merchantability or of fitness for a particular purpose. The entire risk arising out of the use or performance of the sample scripts and documentation remains with you. In no event shall Microsoft, its authors, or anyone else involved in the creation, production, or delivery of the scripts be liable for any damages whatsoever (including, without limitation, damages for loss of business profits, business interruption, loss of business information, or other pecuniary loss) arising out of the use of or inability to use the sample scripts or documentation, even if Microsoft has been advised of the possibility of such damages.

by Contributed | Feb 14, 2021 | Technology


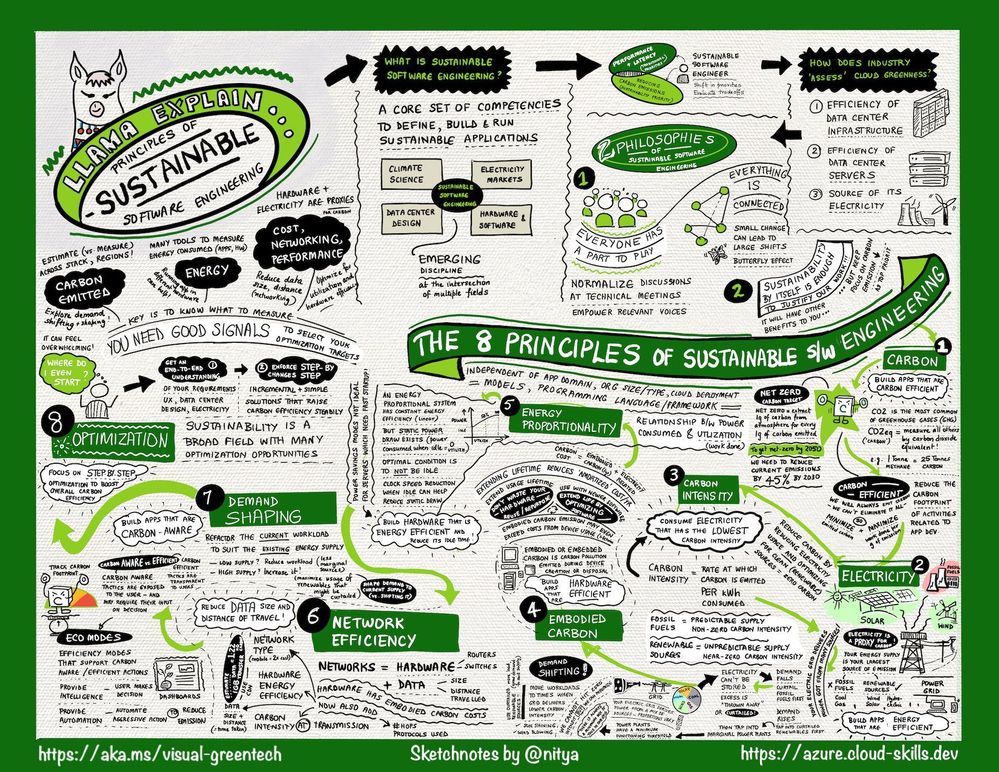

This is a condensed form of the article originally published on the Visual Azure site. It’s part of an effort to explain technology concepts using visual vocabularies, including sketchnotes!

Background

Recently, I came across the Microsoft 2020 Environmental Sustainability Report, which reviews the progress made towards the sustainability goals laid out in the January 2020 announcement from Microsoft leadership. I had already decided to spend more time this year on understanding environmental issues and sustainability solutions in both tech and community contexts, and I needed to start with the basic concepts and terminology.

Thankfully, my colleagues from the Green Advocacy team in Developer Relations had recently released a Microsoft Learn Module covering the Principles of Sustainable Software Engineering. So I did what I always do when I want to learn something and retain that knowledge in meaningful ways for later recall — I sketch-noted it!

The Big Picture

As a visual learner, I’ve found that capturing information in one sheet helps me grasp “the big picture” and make connections to other ideas that I learn about in context. So here’s the sketch-note of the module. You can download a high-resolution version at the Cloud Skills: Sketchnotes site, and read a longer post about what I learned on my Visual Azure blog.

Key Takeaways

The module describes the 2 core philosophies and 8 core principles of sustainable software engineering.

Let’s start with the core philosophies. Here’s how I think about them:

- Butterfly Effects. Even the smallest individual action can have substantial collective impact. In that context, educating ourselves on the challenges and principles is critical so that we can apply them, in whatever small ways we can, to any work we do in the technology or community context.

- Duty to Protect. We have just this one planet. So even though sustainability may have other side effects that are beneficial or profitable, our main reason for doing this is the core tenet of sustainability itself. We do it because we must, and all other reasons are secondary.

As for the 8 principles, this is what I took away from my reading:

- Carbon. Short for “carbon dioxide equivalent”, carbon is a measure by which we evaluate the contribution of various activities to greenhouse gas emissions that speed up global warming.

- Electricity. This is a proxy for carbon. Our power grid contains a mix of fossil fuels (coal, gas) and renewables (wind, solar, hydroelectric). The renewables emit zero carbon but have a less predictable supply.

- Embodied Carbon. This is the carbon footprint associated with the creation and disposal of hardware. Think of embodied carbon as the fixed carbon cost of hardware, amortized over its lifetime. Hardware is viewed as a proxy for carbon.

- Carbon Intensity. This is the amount of carbon emitted per unit of electricity consumed (for example, grams of CO2-equivalent per kWh). It depends on the proportion of clean and fossil energy sources in the grid. Renewable energy supply varies with time and region (e.g., when and where the sun is shining), so the carbon emitted by a workload can also vary.

- Energy Proportionality. This measures power consumed relative to utilization (the rate of useful work done). Idle computers consume power while doing no useful work. As a result, energy efficiency improves with utilization, because more of the electricity is converted into real work.

- Demand Shaping. Given the varying carbon intensity with time, demand shaping optimizes the current workload size to match the existing energy supply – minimizing the curtailing of renewables and reliance on marginal power sources.

- Network Efficiency. This is about data transmission and the hardware and electricity costs it incurs. Minimizing data size and the number of hops (distance travelled) in our cloud solutions is key to reducing the carbon footprint.

- Optimization. This is about understanding that many factors contribute to carbon footprints, and that there are many ways to estimate or measure them. Picking metrics we can understand, track, and correct for becomes critical.

This is a high-level view of those principles, each of which is described in detail in its own unit. I highly encourage you to check out the module after reviewing the sketch-note.
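To make three of these principles (carbon intensity, energy proportionality, and demand shaping) a little more concrete, here is a minimal Python sketch. It is not taken from the module. The hourly forecast values, the linear power model with 100 W idle and 200 W peak draw, the 2 kWh-per-hour job, and all function names are hypothetical and are used only for illustration. The scheduler moves a deferrable job to the hour window with the lowest carbon intensity. This is time-shifting (often called demand shifting), a close relative of demand shaping rather than the module's exact definition.

```python
"""Toy illustration of carbon intensity, energy proportionality and demand shifting.

All numbers and the power model below are hypothetical, chosen only for illustration.
"""
from typing import Sequence

# Hypothetical hourly carbon-intensity forecast in gCO2eq/kWh (not real grid data).
FORECAST = [420, 410, 390, 300, 220, 180, 170, 210, 320, 400, 430, 440]


def operational_carbon(energy_kwh: float, intensity: float) -> float:
    """Carbon emitted (gCO2eq) = electricity used (kWh) x carbon intensity (gCO2eq/kWh)."""
    return energy_kwh * intensity


def power_draw(utilization: float, idle_w: float = 100.0, peak_w: float = 200.0) -> float:
    """Assumed linear power model: a server draws idle_w watts even at 0% utilization."""
    return idle_w + (peak_w - idle_w) * utilization


def work_per_watt(utilization: float) -> float:
    """Useful work per watt; rises with utilization because idle power is a fixed overhead."""
    return utilization / power_draw(utilization)


def best_start_hour(forecast: Sequence[float], duration_hours: int) -> int:
    """Return the start hour whose window has the lowest summed carbon intensity."""
    starts = range(len(forecast) - duration_hours + 1)
    return min(starts, key=lambda h: sum(forecast[h:h + duration_hours]))


if __name__ == "__main__":
    start = best_start_hour(FORECAST, duration_hours=3)
    # Assume the job uses 2 kWh in each hour it runs.
    shifted = sum(operational_carbon(2.0, ci) for ci in FORECAST[start:start + 3])
    naive = sum(operational_carbon(2.0, ci) for ci in FORECAST[:3])
    # prints: start at hour 5: 1120 g vs 2440 g at hour 0
    print(f"start at hour {start}: {shifted:.0f} g vs {naive:.0f} g at hour 0")
    print(f"work per watt at 10% vs 80% load: {work_per_watt(0.1):.5f} vs {work_per_watt(0.8):.5f}")
```

With these made-up numbers, shifting the three-hour job to the cleaner midday window roughly halves its emissions. The higher-utilization server also delivers more work per watt. A real carbon-aware scheduler would read a live intensity forecast instead of a fixed list.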

Sustainability @microsoft

Why does this matter to us as technologists? I found the Sustainability site to be a good source for educating myself on how these challenges are tackled at scale, in industry.

Microsoft has set three objectives for 2030:

- Be carbon negative: Extract more carbon dioxide from the atmosphere than we contribute.

- Be water positive: Replenish more water to the environment than we consume.

- Be zero waste: Eliminate waste across our operations, products, and packaging, emphasizing repurposing and recycling materials.

A fourth goal focuses on biodiversity: using technology to protect and preserve ecosystems that are currently in decline or under threat. This is where technology initiatives like the Planetary Computer come in. They help researchers collect, aggregate, analyze, and act upon environmental data at scale, so they can build and deliver machine learning models for intelligent decision-making.

The bottom line is that we all have a role to play, and educating ourselves on the terms and technologies involved is key. I hope you’ll take a few minutes now to review the sketchnote and complete the Principles of Sustainable Software Engineering module on your own. It’s time to be butterflies and drive collective impact with our individual actions!
