by Contributed | Jan 20, 2021 | Technology

This article is contributed. See the original author and article here.

One of the most used non-Microsoft apps that Microsoft Cloud App Security (MCAS) can help protect is Box.

You may ask: “Why would I connect Box to MCAS? What benefits will I gain?”

Continuing our series on how to use MCAS to protect your non-Microsoft apps, we’ll discuss how to use MCAS to detect threats affecting your Box environment and to protect your data in the cloud.

Why connect Box?

In short: for the exact same reasons you would want to connect Office 365 and more, as described here:

| Benefit | Description | Policy or template |
| --- | --- | --- |
| Compromised account or insider threat | The built-in Threat Detection policies in Microsoft Cloud App Security apply to Box as soon as you have connected it. No additional configuration is necessary: simply by connecting, you will start seeing new alerts when applicable. | “Potential Ransomware Activity”, or any of the built-in detections. |
| Enforce data compliance | By enabling content inspection, you can control the type of data stored in Box, just as you can in Office 365. You can then take actions, such as quarantining or removing a file, or simply notify users of their non-compliant files. | “File containing PII detected in the cloud (built-in DLP engine)”, or simple generic file policies. |
| Prevent data leakage | Content inspection, in conjunction with restricting file sharing, helps prevent data from leaking to unwanted parties. | “File shared with unauthorized domain”, “Stale externally shared files”. |
| Azure Information Protection integration | Leverage the AIP integration with MCAS to add automatic AIP labeling capabilities to your files stored in Box. | No template available; use generic file policies. |

How to connect Box?

First things first: let’s discuss how to connect Box to Cloud App Security.

The process is as straightforward as can be, and is fully described in our official documentation.

If you would rather see it in action, check out the video below:

Configure MCAS for Box

Simply by connecting Box, you already gain value: not only do the default threat detection policies apply automatically, but any File Policy you created for another app, such as Office 365, also applies to Box by default (only governance actions require editing existing policies).

Therefore, you can start enforcing compliance requirements right after the connection is established.

Of course, each app is unique, and there are a number of Box-specific configurations and policies that can be leveraged. Let’s start here with best practices that apply to most customers.

Quick config – Quick value!

Enabling Box policy templates

For Box specifically, we created the following templates to help you handle the specifics of the app. We recommend that most customers enable them as soon as Box is connected to their MCAS environment.

| Template | Description |
| --- | --- |
| Identify Box shared links without a password | Box makes it very easy to share files with internal or external parties. Sometimes too easy: we have seen a number of customers accidentally leak data. To help limit this risk, we created this File Policy template in MCAS, which lets you identify shared links that are not password protected. If this policy triggers too many results, it can be edited to add matching criteria or content inspection. |
| Detecting unauthorized Watermark Label changes | Box allows watermarking documents to indicate a level of confidentiality to the reader. You may want to control when these watermarks are modified, and for that purpose we created this Activity Policy template. It can be tweaked to filter results per user, group, file type and more. |
| Unauthorized account updating shared link expiration dates | Box allows placing expiration dates on shared links. With this Activity Policy template, MCAS can alert when an expiration date is extended or changed, helping you avoid potential policy violations. As with the other templates, it can be tweaked to better fit your needs. |

The video below illustrates how to use these templates in your environment:

Generic templates

On top of these specific Box use cases, you can use all the generic features and policy templates offered in MCAS. Here are a few easy examples to deploy.

| Template | Description |
| --- | --- |
| Mass Download by a single user | Alerts when a single user performs more than 50 downloads within 1 minute (these thresholds can be changed). |
| Potential Ransomware Activity | Alerts when a user uploads files to the cloud that might be infected with ransomware. |
| Logon from a Risky IP address | Alerts when a user logs on from a risky IP address to your sanctioned services. By default, the ‘Risky’ IP category contains anonymous proxies and Tor exit points. You can add more IP addresses to this category through the ‘IP address ranges’ settings page. |
| File Shared with Unauthorized domain | This policy can help you detect file sharing with domains that may represent a risk, such as personal email domains (outlook.com, gmail.com) or a competitor’s organization. |
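To make the “Mass Download by a single user” threshold more concrete, here is a minimal Python sketch of a rolling-window check for “more than 50 downloads within 1 minute”. It is only an illustration of the idea, not how MCAS implements the detection; the function name, its parameters and the sample timestamps are all hypothetical.

```python
from collections import deque
from datetime import datetime, timedelta


def exceeds_download_threshold(timestamps, max_downloads=50,
                               window=timedelta(minutes=1)):
    """Return True if more than max_downloads events fall inside any rolling window.

    Illustrative only: the thresholds mirror the template defaults quoted in
    the table, and the logic is an assumption, not the MCAS engine.
    """
    recent = deque()
    for ts in sorted(timestamps):
        recent.append(ts)
        # Drop events that are a full window (or more) older than the current one.
        while ts - recent[0] >= window:
            recent.popleft()
        if len(recent) > max_downloads:
            return True
    return False


start = datetime(2021, 1, 20, 9, 0, 0)
burst = [start + timedelta(seconds=i) for i in range(51)]      # 51 downloads in 50 s
steady = [start + timedelta(seconds=2 * i) for i in range(51)]  # 1 download every 2 s

print(exceeds_download_threshold(burst))   # True
print(exceeds_download_threshold(steady))  # False
```

Changing `max_downloads` or `window` corresponds to tuning the thresholds in the policy template.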

Configure your own policy

Of course, these pre-canned templates are only the tip of the iceberg of what can be done to protect your Box environment.

Two main types of policies would apply to your Box deployment.

First, Activity Policies can be configured to detect virtually any activity that you deem suspicious for your environment. These are particularly useful if you are concerned about a specific threat in your environment.

Second, File Policies support one of the most common use cases we see for Box users: applying Data Loss Prevention (DLP) policies.

File Policies allow the admin to detect files with specific properties or sharing levels, and even to inspect content to detect sensitive data.

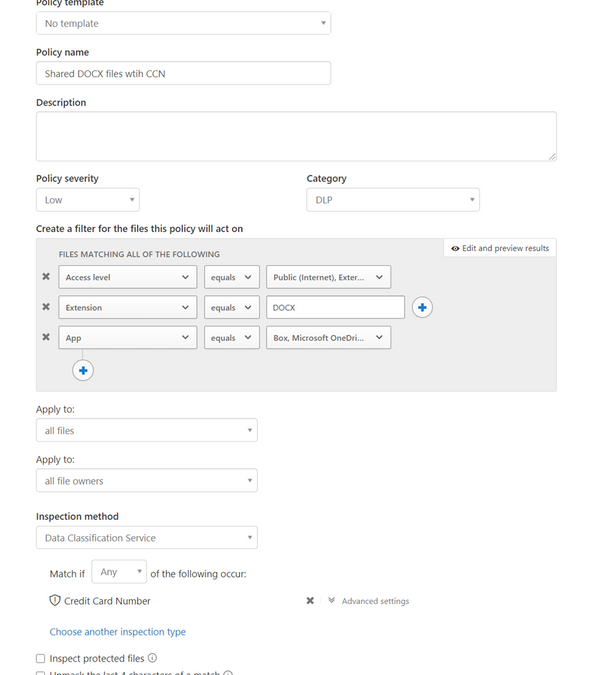

One of the key benefits of these File Policies is that they can apply equally to all apps. For instance, if you are using Office 365 and Box, a single policy can be applied to detect your sensitive data shared in the cloud (if you prefer separate policies for each of your apps, that is also possible using the “App” filter). See the capture below for an example of a policy detecting credit card numbers in DOC files stored in OneDrive, SharePoint or Box:

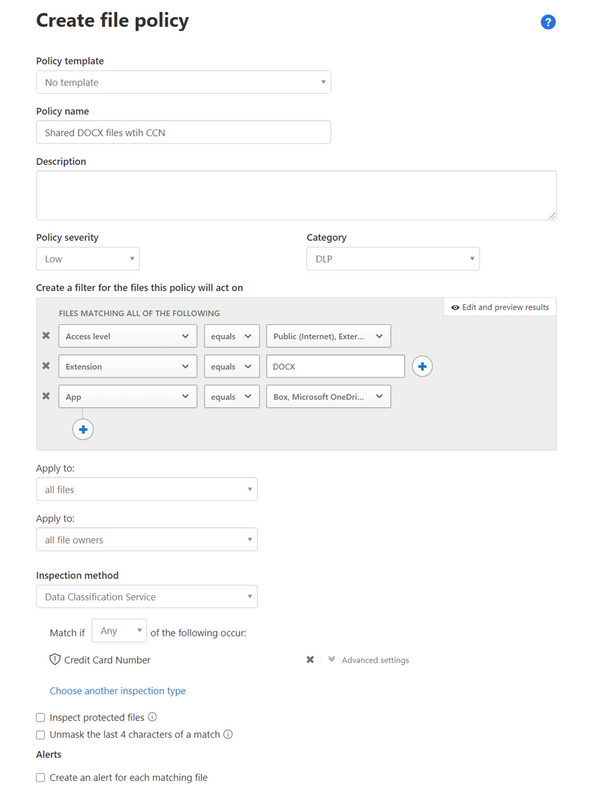

Governance Actions

The last part of the File Policy creation page is dedicated to governance actions. These let you define actions that are executed automatically when a policy is triggered. They can be different for each app, and for Box the list is quite extensive.

Let’s discuss a few of these.

| Box governance action | Description |
| --- | --- |
| Remove External User | Removes permissions on all matching files from any user who is not part of the organization. Users are recognized as part of the organization based on the domain name of their email address. |
| Remove Direct Shared link | Completely unshares any file that matches the policy. |
| Set an expiration date on a shared link | Forces a shared link to expire on a specific date. This is valuable for limiting cases where files are shared and then forgotten, even when they are no longer used. |
| Admin Quarantine | After you define a target folder, this action moves any file that matches the policy to that folder. There, the file can be reviewed, and the admin can decide whether it should be authorized or removed. |
| Trash | As clear as can be. This action can be useful when some data must never be found in cloud storage. |
| Notify the last file editor | The policy digest would notify the owner of the file. If a file is shared and multiple users can edit it, the last editor may be the one who added the non-compliant data, which is why this action notifies the last editor. |
| Apply Classification label | Automatically applies an AIP label to a file. It extends Box capabilities by adding automatic classification of supported documents. More info here. |
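The “Remove External User” action relies on the email domain to decide who belongs to the organization. A minimal Python sketch of that kind of domain check could look like the following; it is an assumption-laden illustration rather than the actual MCAS logic, and the function name and the `contoso.com` domain are hypothetical.

```python
def is_external_user(email, org_domains):
    """Return True when the email's domain is not one of the organization's domains.

    Hypothetical helper: it only compares the part after the last "@",
    case-insensitively, against the domains passed in.
    """
    domain = email.rsplit("@", 1)[-1].strip().lower()
    return domain not in {d.strip().lower() for d in org_domains}


org = {"contoso.com"}  # placeholder organization domain
print(is_external_user("alice@contoso.com", org))  # False
print(is_external_user("bob@gmail.com", org))      # True
```

The same comparison is the idea behind the “File shared with unauthorized domain” template, where the list would contain the domains you consider risky instead.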

Real time control

The policies and controls discussed above all rely on Box’s APIs to query activities and data. While this allows monitoring of activities that are very specific to Box, as well as data that is already stored, it is an out-of-band connection (cloud to cloud; users are never aware of it) and, as such, MCAS receives data in near real time.

For use cases where real-time controls are required, we can leverage another component of MCAS: Conditional Access App Control.

This feature allows MCAS to act as a reverse proxy in the cloud, and allows real-time control of several activities, for Box or any other cloud app:

- Control file downloads

- Control file Uploads (including malware detection)

- Control or prevent Cut/Copy/Paste/Print

Some of the most common scenarios for Conditional Access App Control with Box are:

- Block download of sensitive data to unmanaged devices

- Prevent upload of malware.

- Prevent copying or printing data from an unmanaged device.

- Prevent file sharing: clicking on the share button would be blocked.

- Read-only mode: prevent file editing or file creation/upload

More info on how to use Conditional Access App control is available here:

You can also learn about how to deploy Conditional Access App Control in the videos here:

Share your thoughts!

We hope this will help you get the best value out of MCAS and secure your environment.

Have you found a scenario that we haven’t covered here? Please share with our community and let us know in the comments below.

(By Idan Basre and @Yoann Mallet)

by Contributed | Jan 20, 2021 | Technology


There is a growing need for virtual meetings, consultations, and appointments, but also an increase in the time people spend trying to schedule them: back-and-forth phone calls, emails, and text messages. Bookings makes this easier, so you can spend more time talking to customers rather than trying to schedule them, and it does so in a secure way that is integrated with Microsoft 365.

Microsoft Bookings helps make scheduling and managing appointments easy and seamless. It does this through a web-based booking tool where people can see and book services when it’s convenient for them. It makes staff time easier to manage by integrating with Outlook’s calendar, and it keeps everyone updated with timely, automatic email confirmations and reminders to reduce no-shows. All of this also helps organizations reduce repetitive scheduling tasks.

Bookings is flexible, customizable, and secure (it uses a mailbox in Exchange Online), and it can be designed to fit the scenarios and needs of different parts of an organization.

We have worked with various industries to enable different scenarios: telehealth through virtual consultations with doctors in Microsoft Teams, educational classrooms, financial consulting, internal services such as legal, IT, and HR that organizations provide to their employees, candidate interviews, assisted shopping in retail, and government services. To read more on how customers are using Bookings for these scenarios, please click here.

These scenarios demand high scale. To help make sure Bookings works well for you and scales to your needs, we have prepared these best practices.

1. Planning for scale

Each Bookings calendar is currently designed to handle a maximum of 2,500 bookings per day across all services in that calendar, along with a creation limit of 10 booking requests per second. This works for common scenarios.

If your requirements exceed this, you should plan to distribute the load using the steps below.

- Create a Bookings calendar with just one service.

- Clone this calendar to multiple calendars.

- You can opt in to the Bookings preview to use the clone option, and opt out of the preview at any time.

- Limit each calendar to a specific audience, for example:

By buildings or operating group

By booking period (mornings only vs afternoons)

- Dividing the load across different Bookings calendars will help ensure none of them will reach the 2,500 limit.
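As a rough planning aid, the number of cloned calendars can be estimated by dividing the expected daily load by the 2,500 limit and rounding up. The Python sketch below assumes the load is spread evenly across calendars, which may not hold in practice; the function name and the 6,000 figure are hypothetical.

```python
import math

DAILY_LIMIT_PER_CALENDAR = 2500  # per-calendar daily limit quoted above


def calendars_needed(expected_daily_bookings, limit=DAILY_LIMIT_PER_CALENDAR):
    """Return the minimum number of calendars that keeps each one at or under the limit.

    Assumes bookings are distributed evenly across the cloned calendars.
    """
    if expected_daily_bookings < 0:
        raise ValueError("expected_daily_bookings must not be negative")
    return max(1, math.ceil(expected_daily_bookings / limit))


print(calendars_needed(6000))  # 3
```

If the split by building or booking period is uneven, size the plan on the busiest calendar rather than on the average.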

2. Set how far in advance your customers can book an appointment

Finding the optimal value for the maximum advance appointment time your customers can book can help prioritize the daily limits for appointments that are closer to “today”. We have attached a simple Excel spreadsheet where you can enter the values to help you estimate the number of appointments and forward-looking time you can have in your Bookings calendar.

Follow the instructions below to use the attached spreadsheet.

- Calculate the maximum advance appointment time in which those 2,500 appointments could be reached.

- Open the booking availability timeframe only for that period of time.

- Keep updating the timeframe once the slots are full or a day has passed, so people will always have the option to book up to the same timeframe in advance.

If you got confused (don’t worry, we did too), below is an example. This example is calculated at the calendar level, not at the service level.

- If the appointment duration is 10 minutes and the maximum number of working hours is 8, then the possible number of appointments per day is 8*60/10 = 48 per staff member, assuming you don’t need buffer time between appointments.

- If we consider 5 staff members per Bookings calendar, the maximum number of appointments you can have in a workday is 5*48 = 240.

- Considering that opening more than 2,500 slots will throttle the system, the ability to book a service shouldn’t be opened for more than 2500/240 = ~10.4 days.

- Assuming a 5-day week, do not let your customers book appointments more than 2 weeks ahead of the current date (i.e. today). This will ensure that your customers can always book an appointment.

- You can choose to move the booking timeframe every day to always have a 2-week pre-booking window, or do it when your bookings are filling up.
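If you prefer code to a spreadsheet, the same estimate can be sketched in a few lines of Python. This is only a restatement of the arithmetic in the example above (no buffer time, every staff member fully bookable); the function and parameter names are made up for illustration.

```python
def booking_window(appointment_minutes, working_hours, staff_count, slot_limit=2500):
    """Return (appointments per staff per day, appointments per day, window in working days).

    Mirrors the worked example: no buffer time between appointments and a
    calendar-level limit of slot_limit open slots.
    """
    per_staff = (working_hours * 60) // appointment_minutes
    per_day = per_staff * staff_count
    window_days = slot_limit / per_day
    return per_staff, per_day, window_days


per_staff, per_day, window_days = booking_window(10, 8, 5)
print(per_staff, per_day, round(window_days, 1))  # 48 240 10.4
```

Roughly 10.4 working days is about two 5-day weeks, which is where the 2-week guidance comes from.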

We are actively working to increase the scaling limits as you read this and we will post a new communication as soon as our systems are updated.

As always, please let us know if you have any feedback in our UserVoice channel.

Thank you!

Gabriel on behalf of the Bookings team

by Contributed | Jan 20, 2021 | Technology


Built-In Service Bus Trigger: batched message processing and session handling

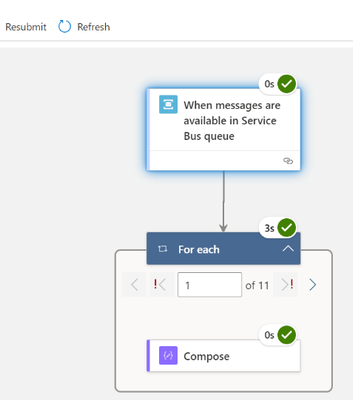

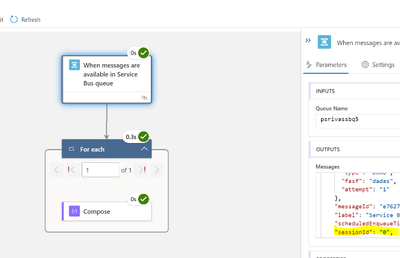

The Logic App Refresh preview lets us create a built-in Service Bus trigger to receive messages from a Service Bus topic or queue.

This blog post discusses two advanced Service Bus message-processing scenarios:

- Azure Service Bus batch processing and how to configure the maximum batch count of messages.

- Message processing in Logic Apps for session-aware Service Bus queues or subscriptions.

Service Bus queue batch processing:

To improve message retrieval performance for a Service Bus queue, it is highly recommended to receive messages in batches. By default, the Logic App Service Bus trigger for queues outputs an array of Service Bus messages.

The prefetchCount setting specifies how many messages should be retrieved in a “batch” to save round trips from Logic Apps to Azure Service Bus. Prefetching messages increases the overall throughput for a queue by reducing the overall number of message operations, or round trips.

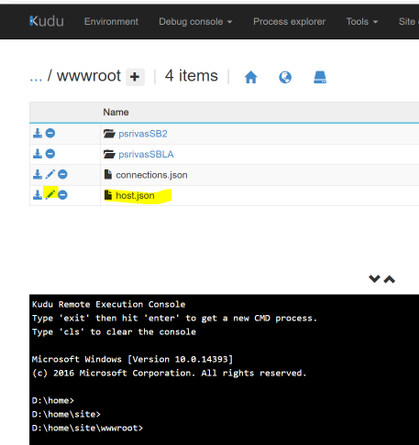

The prefetchCount can be configured in the global configuration settings in host.json; the underlying Azure Functions trigger requests this many messages at a time.

    {
      "version": "2.0",
      "extensions": {
        "serviceBus": {
          "prefetchCount": 20,
          "messageHandlerOptions": {
            "autoComplete": true,
            "maxConcurrentCalls": 32,
            "maxAutoRenewDuration": "00:05:00"
          }
        }
      }
    }

The host.json file can be edited using the Kudu Advanced Tools (if the Logic App was created in the Azure portal).
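If you manage host.json from a script instead of editing it by hand, the change amounts to setting one key under extensions.serviceBus. The Python sketch below works on the file content as a string, so it makes no assumption about where the file lives; the function name and the value 100 are hypothetical, and only the key names come from the sample above.

```python
import json


def set_prefetch_count(host_json_text, prefetch_count):
    """Return host.json content with extensions.serviceBus.prefetchCount updated.

    Other settings are preserved; missing sections are created.
    """
    if prefetch_count < 0:
        raise ValueError("prefetch_count must not be negative")
    config = json.loads(host_json_text)
    service_bus = config.setdefault("extensions", {}).setdefault("serviceBus", {})
    service_bus["prefetchCount"] = prefetch_count
    return json.dumps(config, indent=2)


original = '{"version": "2.0", "extensions": {"serviceBus": {"prefetchCount": 20}}}'
updated = json.loads(set_prefetch_count(original, 100))
print(updated["extensions"]["serviceBus"]["prefetchCount"])  # 100
```

Pick the value based on your own throughput testing; a larger prefetch is not automatically better if messages are slow to process.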

Message processing for session-aware service bus queue/subscriptions:

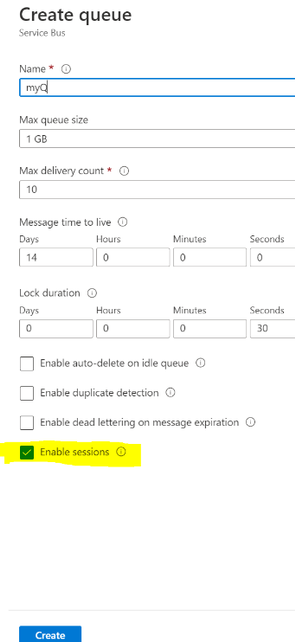

You can enable sessions by selecting the Enable sessions checkbox when creating the Service Bus queue in the UI:

By default, sessions are not enabled for the built-in Service Bus trigger, so it cannot receive messages from a session-aware Service Bus queue.

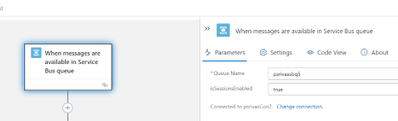

The Azure Service Bus trigger is based on the Azure Functions ServiceBusTrigger binding, so the isSessionsEnabled setting needs to be set to enable session processing. This option can be set in the Logic App code definition as shown below:

    "triggers": {
      "When_messages_are_available_in_Service_Bus_queue": {
        "inputs": {
          "parameters": {
            "isSessionsEnabled": true,
            "queueName": "psrivassbq5"
          }
        }
      }
    }

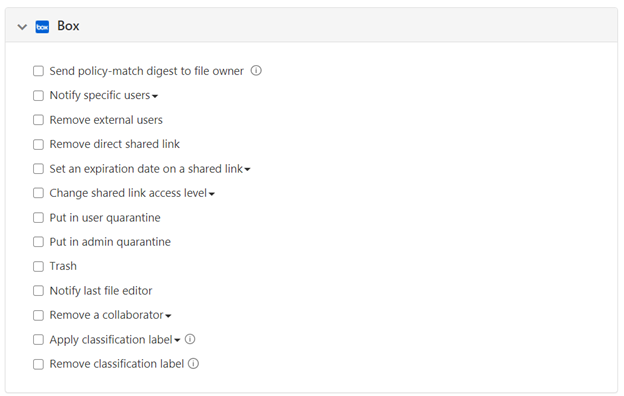

Once you update the Logic App definition, you can view the trigger in the designer. However, the isSessionsEnabled trigger input cannot be configured on the designer surface; a UI option for it will be provided in a future Logic App Refresh release.
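Since the designer cannot set this input yet, one option is to patch the workflow definition with a small script before deploying it. The Python sketch below assumes the definition has already been loaded into a dictionary and that the trigger has the shape shown in the snippet above; the function name is hypothetical.

```python
import copy


def enable_sessions(definition, trigger_name):
    """Return a copy of the workflow definition with isSessionsEnabled set to True.

    Only the keys visible in the snippet above are assumed; anything else in
    the definition is left untouched.
    """
    updated = copy.deepcopy(definition)
    inputs = updated["triggers"][trigger_name]["inputs"]
    inputs.setdefault("parameters", {})["isSessionsEnabled"] = True
    return updated


definition = {
    "triggers": {
        "When_messages_are_available_in_Service_Bus_queue": {
            "inputs": {"parameters": {"queueName": "psrivassbq5"}}
        }
    }
}
patched = enable_sessions(definition, "When_messages_are_available_in_Service_Bus_queue")
print(patched["triggers"]["When_messages_are_available_in_Service_Bus_queue"]["inputs"]["parameters"])
```

The original dictionary is not modified, which makes it easy to compare the definition before and after the change.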

When messages are queued in a session-enabled Service Bus queue, the Service Bus trigger fires based on the sessions in the queue. You can use the SessionId property from the Service Bus trigger output.

by Contributed | Jan 20, 2021 | Technology


Today, we received a new service request from a customer who wants to connect from Oracle to Azure SQL Managed Instance or Azure SQL Database using Oracle Database Gateway for ODBC on a Windows operating system.

Below, I would like to share the steps that we followed:

First Step: Installation and Configuration of the different components:

1) Oracle Database Gateway for ODBC

- Install it, defining a new listener on a port, for example, 1528.

- I modified the listener.ora file, adding the following text:

    SID_LIST_LISTENER_ODBC =
      (SID_LIST =
        (SID_DESC=
          (SID_NAME=dg4odbc)
          (ORACLE_HOME=C:apptgusernameproduct19.0.0tghome_2)
          (PROGRAM=dg4odbc)
        )
      )

- The final result of listener.ora file looks like:

    # listener.ora Network Configuration File: C:apptgusernameproduct19.0.0tghome_2NETWORKADMINlistener.ora
    # Generated by Oracle configuration tools.

    LISTENER_ODBC =
      (DESCRIPTION_LIST =
        (DESCRIPTION =
          (ADDRESS = (PROTOCOL = TCP)(HOST = localhost)(PORT = 1528))
          (ADDRESS = (PROTOCOL = IPC)(KEY = EXTPROC1528))
        )
      )

    SID_LIST_LISTENER_ODBC =
      (SID_LIST =
        (SID_DESC=
          (SID_NAME=dg4odbc)
          (ORACLE_HOME=C:apptgusernameproduct19.0.0tghome_2)
          (PROGRAM=dg4odbc)
        )
      )

- I restarted the listener for this specific Oracle Instance.

2) I modified the tnsnames.ora adding the following text:

    dg4odbc =
      (DESCRIPTION=
        (ADDRESS=(PROTOCOL=tcp)(HOST=localhost)(PORT=1528))
        (CONNECT_DATA=(SID=dg4odbc))
        (HS=OK)
      )

3) I re-started the listener.

4) I modified the file located in the <oracle_home_folder>admin subfolder of the Oracle Home installation, setting the following parameters:

    # This is a sample agent init file that contains the HS parameters that are
    # needed for the Database Gateway for ODBC
    #
    # HS init parameters
    #
    HS_FDS_CONNECT_INFO = dg4odbc
    HS_FDS_TRACE_LEVEL = OFF
    #
    # Environment variables required for the non-Oracle system
    #
    #set <envvar>=<value>

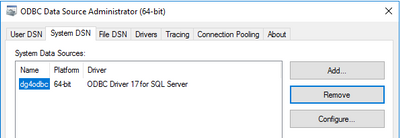

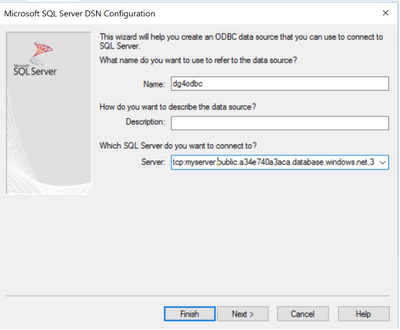

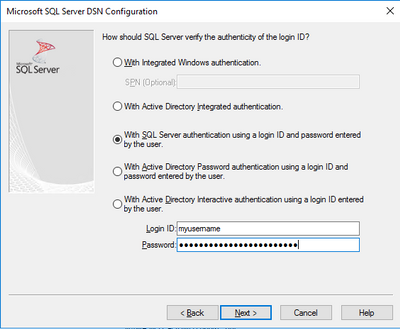

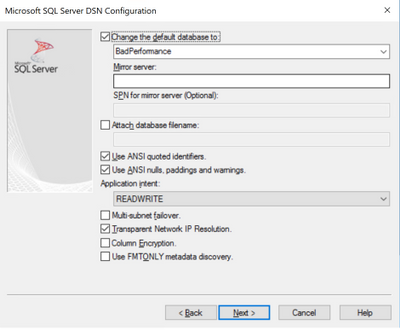

5) The HS_FDS_CONNECT_INFO parameter in the previous file contains the name of the data source (DSN) that we are going to use to connect. So, using ODBC Data Source Administrator (64-bit), I defined the following data source parameters:

- Name: dg4odbc

- Server: the public name of my instance

- SQL Server Authentication.

- Database: the name of the database that I want to connect to.
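Because the DSN name has to match the HS_FDS_CONNECT_INFO value, a quick consistency check can save some troubleshooting. The Python sketch below parses the init file content shown in step 4 and compares the value with the DSN name; it is a hypothetical helper, not an Oracle tool, and it works on the file text so it makes no assumption about the file location.

```python
def read_hs_parameters(init_text):
    """Parse NAME = value lines from a gateway init file, skipping comments and 'set' lines."""
    params = {}
    for raw_line in init_text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        if line.lower().startswith("set "):
            continue  # environment variable assignments are not HS parameters
        name, separator, value = line.partition("=")
        if separator:
            params[name.strip().upper()] = value.strip()
    return params


sample_init = """
# HS init parameters
HS_FDS_CONNECT_INFO = dg4odbc
HS_FDS_TRACE_LEVEL = OFF
#set <envvar>=<value>
"""

dsn_name = "dg4odbc"  # the name entered in ODBC Data Source Administrator
params = read_hs_parameters(sample_init)
print(params["HS_FDS_CONNECT_INFO"] == dsn_name)  # True
```

If the comparison prints False, fix either the DSN name or the init file before testing the database link.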

Second Step: Test the connectivity and run a sample query.

- In a new Windows Command Prompt, I ran sqlplus to connect to one of my Oracle instances: sqlplus system/MyPassword!@OracleInstance as sysdba

- I created a database link to connect through ODBC to my Azure SQL Managed Instance:

create database link my4 connect to "myuserName" identified by "MyPassword!" using 'dg4odbc';

- Finally, I executed the following query to retrieve data from the customers table, referencing this database link:

select * from customers@my4;

As I mentioned before, this configuration process also works when connecting to Azure SQL Database.

Enjoy!

by Contributed | Jan 20, 2021 | Technology


As part of our recent Azure Security Center (ASC) Blog Series, we are diving into the different controls within ASC’s Secure Score. In this post we will discuss the Enable auditing and logging control.

Log collection is a relevant input when analyzing a security incident, business concern or even a suspicious security event. It can be helpful to create baselines and to better understand behaviors, tendencies, and more.

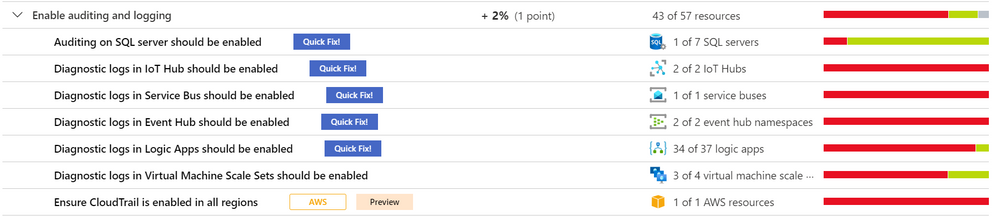

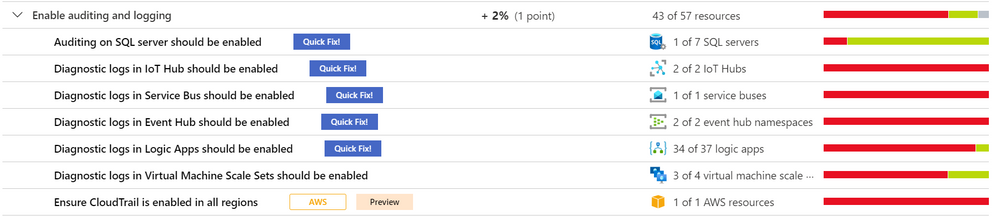

The Enable auditing and logging security control contains recommendations that remind you to enable logging for all Azure services supported by Azure Security Center and for resources in other cloud providers, such as AWS and GCP (currently in preview). Once you remediate all of these recommendations, you will gain a 1% increase in your Secure Score.

Recommendations

The number of recommendations will vary according to the available resources in your subscription. This blog post will focus on some recommendations for SQL Server, IoT Hub, Service Bus, Event Hub, Logic App, VM Scale Set, Key Vault, AWS and GCP.

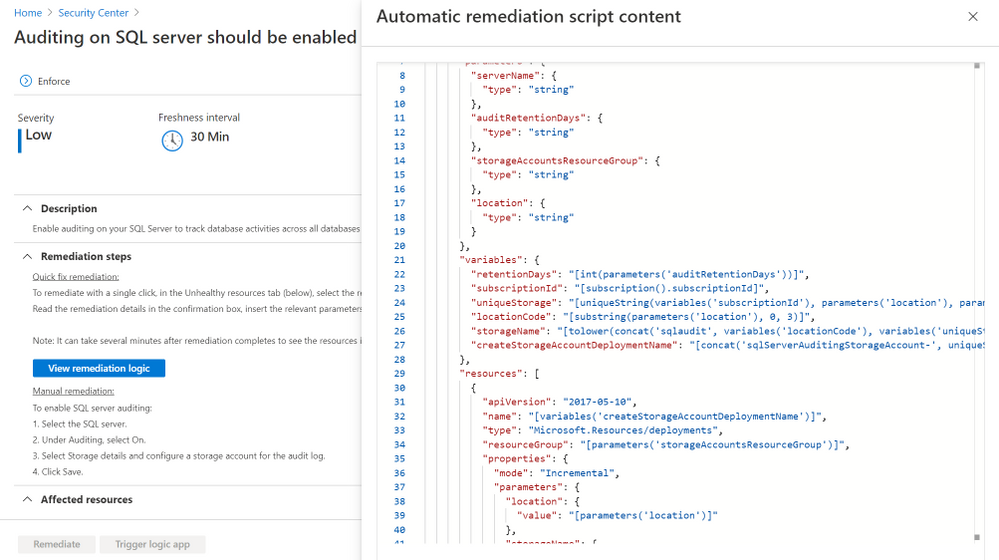

Auditing on SQL Server should be enabled

Enabling auditing is recommended to track database activities. To remediate, ASC has a Quick Fix button that changes the Microsoft.Sql/servers/auditingSettings property state to Enabled. The Logic App requests the retention days and the storage account where the audit logs will be saved. The storage account can be created during that process; the template is in this article. There is also a manual remediation described in the Remediation Steps. The recommendation can be Enforced, so that Azure Policy’s DeployIfNotExists effect automatically remediates non-compliant resources upon creation. More information about Enforce/Deny can be found here. To learn more about auditing capabilities in SQL, read this article.

Diagnostic logs in IoT Hub should be enabled

This enables you to recreate activity trails for investigation purposes when a security incident occurs or your IoT Hub is compromised. The recommendation can be Enforced, and it also comes with a Quick Fix where a Logic App modifies Microsoft.Devices/IotHubs/providers/diagnosticSettings, setting the AllMetrics metric and the log categories Connections, DeviceTelemetry, C2DCommands, DeviceIdentityOperations, FileUploadOperations, Routes, D2CTwinOperations, C2DTwinOperations, TwinQueries, JobsOperations, DirectMethods, DistributedTracing, Configurations, and DeviceStreams to “enabled”: true. To learn more about monitoring Azure IoT Hub, visit this article.

Diagnostic logs in Service Bus should be enabled

This recommendation can be Enforced, and it has a Quick Fix that remediates the selected resources by setting “AllMetrics” and “OperationalLogs” to “enabled”: true in Microsoft.ServiceBus/namespaces/providers/diagnosticSettings. You must enter the retention days to deploy the Logic App. To remediate manually, follow this article. To learn more about the Service Bus security baseline, read this article.

Diagnostic logs in Event Hub should be enabled

The Quick Fix uses a Logic App that, for the selected resources, sets the AllMetrics metric and the ArchiveLogs, OperationalLogs, and AutoScaleLogs logs to “enabled”: true in Microsoft.EventHub/namespaces/providers/diagnosticSettings, using the retention days you enter. This recommendation can be Enforced. For manual remediation steps, visit this article. To learn more about the Event Hub security baseline, read this article.

Diagnostic logs in Logic Apps should be enabled

The recommendation can be Enforced, and it comes with a Quick Fix where a Logic App sets the “AllMetrics” metric and the “WorkflowRuntime” log to “enabled”: true in Microsoft.Logic/workflows/providers/diagnosticSettings. The retention days must be entered at the beginning of the remediation. For manual remediation steps, visit this article. To learn more about Logic Apps monitoring in ASC, read this article.

Diagnostic logs in Virtual Machine Scale Sets should be enabled

This specific recommendation comes with neither the Enforce feature nor a Quick Fix. To configure the Azure Virtual Machine Scale Set diagnostics extension, follow this document. The command az vmss diagnostics set enables diagnostics on a VMSS. To learn more about the Azure security baseline for Virtual Machine Scale Sets, read this article.

Diagnostic logs in Key Vault should be enabled

The recommendation can be Enforced and it also comes with a Quick Fix where the Logic App goes to the resource Microsoft.KeyVault/vaults/providers/diagnosticSettings and sets the metrics AllMetrics and logs AuditEvent to “enabled”: true including the retention days input. For manual remediation steps, read this article. To learn more about monitoring and alerting in Azure Key Vault, visit this article.
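The Quick Fix descriptions above all follow the same pattern: a set of metric and log categories is switched to “enabled”: true with a retention period. As a rough illustration, the Python sketch below builds the metrics and logs portion of such a diagnostic settings body. The category names come from the recommendations above, but the exact payload shape (in particular the `retentionPolicy` field) is an assumption based on the general diagnostic settings schema, not a copy of what the ASC Logic App sends, and a real setting also needs a destination such as a storage account or workspace.

```python
import json


def diagnostic_categories(log_categories, retention_days,
                          metric_categories=("AllMetrics",)):
    """Build the metrics/logs portion of a diagnostic settings body.

    Hypothetical helper: every category is enabled and given the same
    retention policy; a destination must still be added separately.
    """
    if retention_days < 0:
        raise ValueError("retention_days must not be negative")

    def entry(category):
        return {
            "category": category,
            "enabled": True,
            "retentionPolicy": {"enabled": retention_days > 0, "days": retention_days},
        }

    return {
        "metrics": [entry(name) for name in metric_categories],
        "logs": [entry(name) for name in log_categories],
    }


# Key Vault example: AllMetrics plus the AuditEvent log, kept for 90 days.
key_vault = diagnostic_categories(["AuditEvent"], retention_days=90)
print(json.dumps(key_vault, indent=2))
```

For Service Bus, for example, the same helper would be called with `["OperationalLogs"]`, and for Event Hub with `["ArchiveLogs", "OperationalLogs", "AutoScaleLogs"]`.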

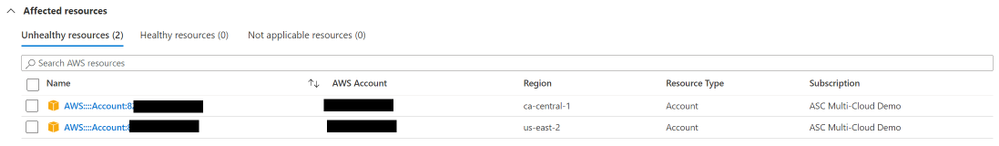

Ensure a log metric filter and alarm exist for security group changes – AWS Preview

By directing CloudTrail logs to CloudWatch Logs, real-time monitoring of API calls can be achieved. A metric filter and alarm should be established for changes to Security Groups. Recommendations for AWS resources do not have the Enforce feature, the Quick Fix button, or the Trigger Logic App option. To remediate them, follow the AWS Security Hub documentation.

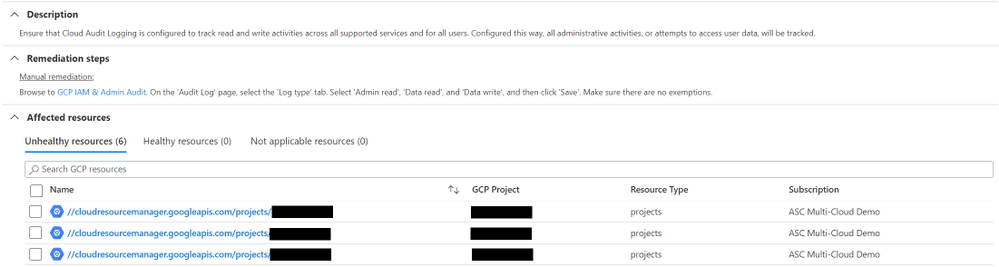

Ensure that Cloud Audit Logging is configured properly across all services and all users from a project – GCP Preview

Ensure that Cloud Audit Logging is configured to track read and write activities across all supported services and for all users. Configured this way, all administrative activities, and any attempts to access user data, will be tracked. Recommendations for GCP resources do not have the Enforce feature, the Quick Fix button, or the Trigger Logic App option. To remediate them, follow the Manual Remediation Steps. For more information, visit the GCP documentation.

Reviewer

Yuri Diogenes, Principal Program Manager (@Yuri Diogenes)
