by Contributed | Jan 21, 2021 | Technology

This article is contributed. See the original author and article here.

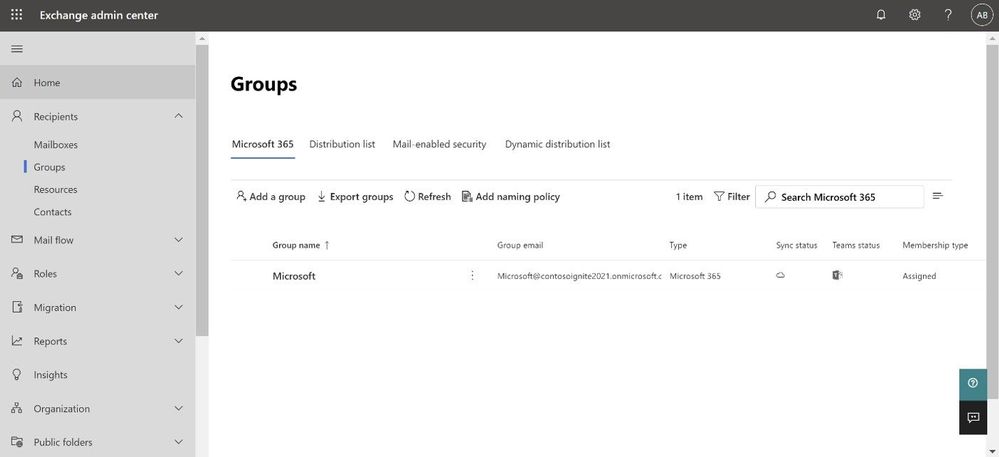

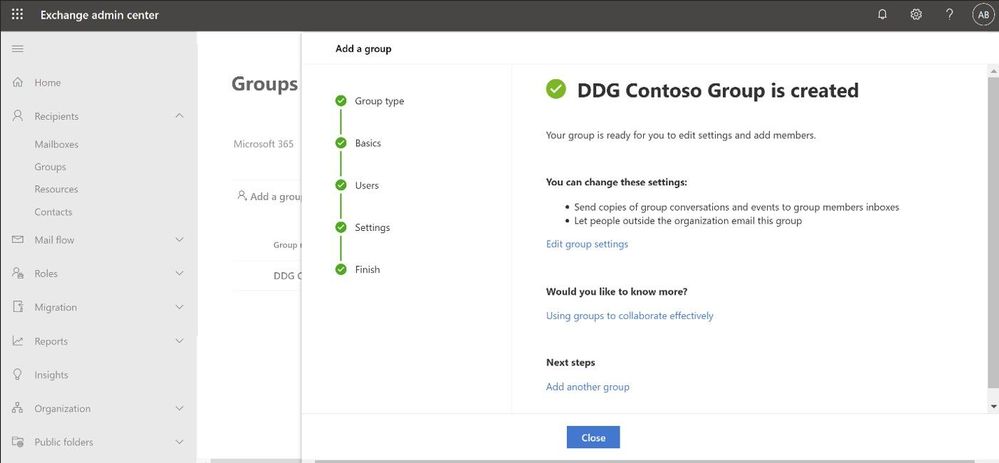

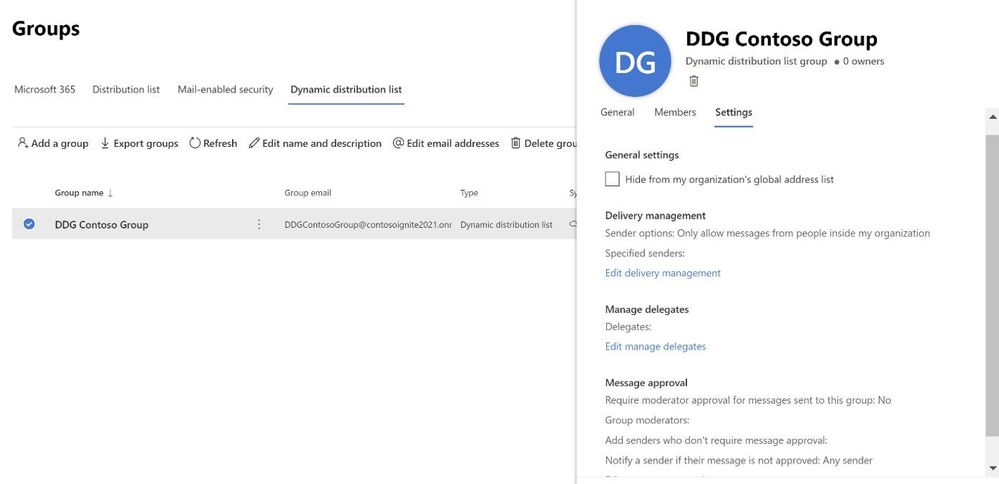

Continuing on our path to release the new Exchange admin center (EAC), we are happy to tell you that the Groups feature is now available. The experience is modern, improved, and fast. Administrators can now create and manage all four types of groups (Microsoft 365 groups, distribution lists, mail-enabled security groups, and dynamic distribution lists) from the new portal.

Here are some of the improvements to the Groups experience in the new EAC compared with the classic EAC.

Ease of discoverability: With a new tabbed design, groups are now separated into four pivots. Admins no longer need to filter or sort to find a particular group type. Click the pivot for the group type you want to manage, and all groups of that type appear in that view.

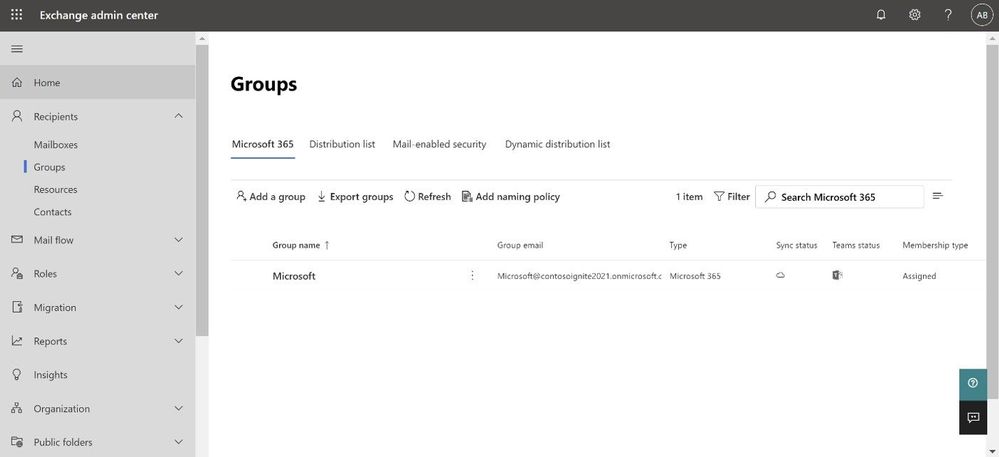

More controls during group creation: The new group creation wizard (the flyout panel that opens from the right) guides you through creation and is consistent with other parts of the portal. There are no more pop-ups, and you do not have to leave the page you are on. Simply click the Add a group button, and a guided flyout opens to help you create the group.

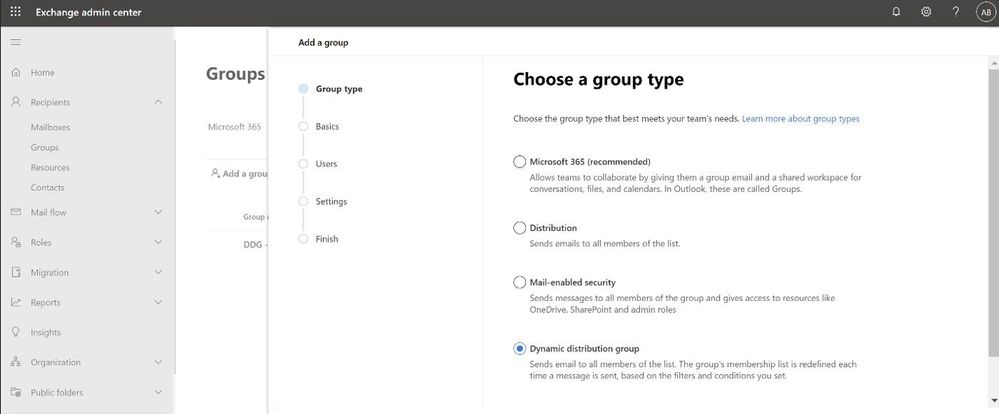

Performance: The new design is faster. It takes very little time to see the groups that have already been created. When you create a new group, you can also edit its settings directly from the completion page (see below).

Settings management

All group settings, including general settings, delivery management, delegates, and message approval, are now also available in the new EAC.

Happy groups management!

If you have feedback on the new EAC, please use the floating Give feedback button to let us know what you think.

If you share your email address with us when providing feedback, we will try to reach out to you for more information if needed.

Learn more

To learn more, check the updated documentation. To experience groups in the new EAC, click here (this will take you to the new EAC).

The Exchange Online Admin Team

I was recently troubleshooting an issue with using Bot Framework Composer 1.2 to create and publish a bot to the Azure Government cloud. Even after we performed all the steps correctly, we got a 401 Unauthorized error when testing the bot in Web Chat.

The following documentation describes what needs to be added to a bot to make it work in the Azure Government cloud:

https://docs.microsoft.com/en-us/azure/bot-service/bot-service-resources-faq-ecosystem?view=azure-bot-service-4.0#how-do-i-create-a-bot-that-uses-the-us-government-data-center

To isolate the problem further, we deployed the exact same bot from Composer to a regular (non-Government) App Service, and everything worked smoothly there. That told us the issue occurs only when the bot is deployed to the Azure Government cloud.

We eventually worked out the following steps to make it work properly in the Azure Government cloud.

Note: This fix requires a code change, and to make a code change you must eject the runtime; otherwise, Composer cannot incorporate the change.

Create the bot using Composer.

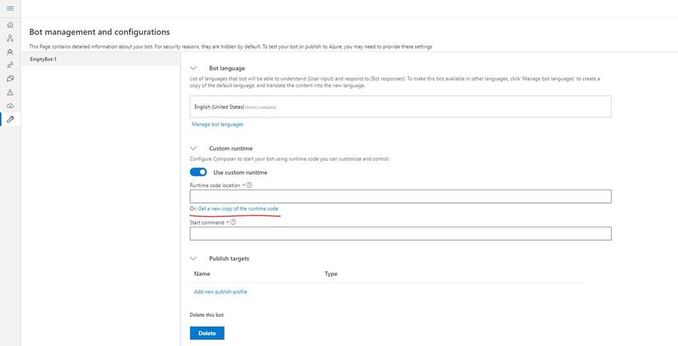

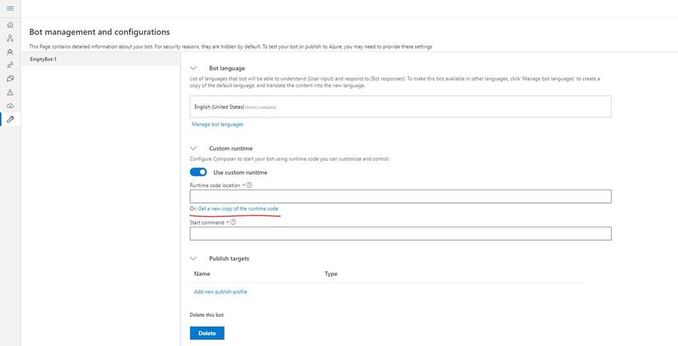

Click “Project Settings”, make sure you select “Custom Runtime”, and then click “get a new copy of runtime code”.

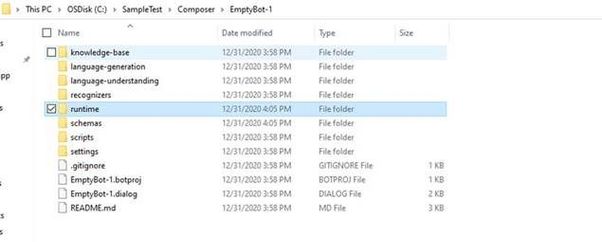

Once you do that, a folder named “runtime” is created in the project folder of this Composer bot.

In the runtime folder, go to the azurewebapp folder, open the .csproj file in Visual Studio, and make the following changes, which are required for the Azure Government cloud:

- Add the following line to Startup.cs:

services.AddSingleton<IChannelProvider, ConfigurationChannelProvider>();

- In Startup.cs, modify the BotFrameworkHttpAdapter creation so that it takes the channel provider:

    var adapter = IsSkill(settings)
        ? new BotFrameworkHttpAdapter(new ConfigurationCredentialProvider(this.Configuration), s.GetService<AuthenticationConfiguration>(), channelProvider: s.GetService<IChannelProvider>())
        : new BotFrameworkHttpAdapter(new ConfigurationCredentialProvider(this.Configuration), channelProvider: s.GetService<IChannelProvider>());

- Add the following setting to your appsettings.json (you can also add it from Composer):

"ChannelService": "https://botframework.azure.us"

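Build the ejected web app in the Release configuration, because the zip step later uses the bin/Release output:

C:\Sample\TestComposer\TestComposer1\runtime\azurewebapp>dotnet build --configuration Release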

Next, update the schema by running the provided script from PowerShell:

PS C:\Sample\TestComposer\TestComposer1\schemas> .\update-schema.ps1 -runtime azurewebapp

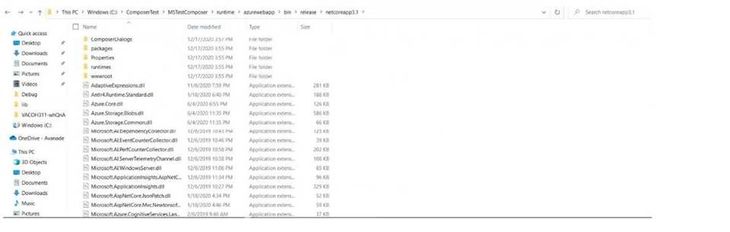

Next, publish the bot to Azure App Service by using the az webapp deployment command. First, create a zip file of the contents of the “netcoreapp3.1” folder (not the folder itself), which is inside runtime/azurewebapp/bin/Release.

Then run the following command:

az webapp deployment source config-zip --resource-group "<resource group name>" --name "<app service name>" --src "<path to the zip file you created>"

This publishes the bot correctly to Azure, and you can then test it in Web Chat.

In short, getting the bot working in the Azure Government cloud required a few code changes. To make those changes to a bot created in Composer, you need to eject the runtime, make the code changes, build, update the schema, and finally publish.

References:

Pre-requisites for Bot on Azure government cloud : Bot Framework Frequently Asked Questions Ecosystem – Bot Service | Microsoft Docs

Exporting runtime in Composer : Customize actions – Bot Composer | Microsoft Docs

As you’re probably aware, Microsoft is in the process of updating Azure services to use TLS certificates from a different set of root certificate authorities (root CAs). Azure TLS Certificate Changes provides details about these updates. Some of these changes affect Azure Sphere, but in most cases no action is required for Azure Sphere customers.

This post provides a primer on the Azure Sphere certificate “landscape”: the types of certificates that the various Azure Sphere components use, where they come from, where they’re stored, how they’re updated, and how to access them when necessary. It also describes how the Azure Sphere OS, SDK, and services make certificate management easier for you. We assume basic familiarity with certificate authorities and the chain of trust. If this is all new to you, we suggest starting with Certificate authority – Wikipedia or other internet sources.

Azure Sphere Devices

Every Azure Sphere device relies on the Trusted Root Store, which is part of the Azure Sphere OS. The Trusted Root Store contains a list of root certificates that are used to validate the identity of the Azure Sphere Security Service when the device connects for device authentication and attestation (DAA), over-the-air (OTA) update, or error reporting. These certificates are provided with the OS.

When daily attestation succeeds, the device receives two certificates: an update certificate and a customer certificate. The update certificate enables the device to connect to the Azure Sphere Update Service to get software updates and to upload error reports; it is not accessible to applications or through the command line. The customer certificate, sometimes called the DAA certificate, can be used by applications to connect, through libraries such as wolfSSL, to third-party services that use transport layer security (TLS). This certificate is valid for about 25 hours. Applications can retrieve it programmatically by calling the DeviceAuth_GetCertificatePath function.

Devices that connect to Azure-based services such as Azure IoT Hub, IoT Central, and IoT Edge must present their Azure Sphere tenant CA certificate to authenticate their Azure Sphere tenant. The azsphere ca-certificate download command in the CLI returns the tenant CA certificate for such uses.

EAP-TLS network connections

Devices that connect to an EAP-TLS network need certificates to authenticate with the network’s RADIUS server. To authenticate as a client, the device must pass a client certificate to the RADIUS server. To perform mutual authentication, the device must also have a root CA certificate for the RADIUS server so that it can authenticate the server. Microsoft does not supply either of these certificates; you or your network administrator are responsible for ascertaining the correct certificate authority for your network’s RADIUS server and then acquiring the necessary certificates from the issuer.

To obtain the certificates for the RADIUS server, you’ll need to authenticate to the certificate authority. You can use the DAA certificate, as previously mentioned, for this purpose. After acquiring the certificates for the RADIUS server, you should store them in the device certificate store. The device certificate store is available only for use in authenticating to a secured network with EAP-TLS. (The DAA certificate is not kept in the device certificate store; it is kept securely in the OS.) The azsphere device certificate command in the CLI lets you manage the certificate store from the command line. Azure Sphere applications can use the CertStore API to store, retrieve, and manage certificates in the device certificate store. The CertStore API also includes functions to return information about individual certificates, so that apps can prepare for certificate expiration and renewal.
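The CertStore functions that return certificate details are what let an app prepare for expiration. As a minimal sketch, assuming the app has already read a certificate’s expiry time into a struct tm expressed in UTC (for example with CertStore_GetCertificateNotAfter; confirm its exact signature and time zone in the CertStore reference), the C code below decides whether that certificate has expired or falls inside a renewal window. The helper names and the renewal window are hypothetical illustrations, not part of the Azure Sphere API, and the date conversion is done by hand because timegm() is not standard C.

```c
#include <stdbool.h>
#include <stdint.h>
#include <time.h>

/* Days since 1970-01-01 for a proleptic Gregorian date (month 1..12,
   day 1..31), using Howard Hinnant's days_from_civil algorithm. */
static int64_t days_from_civil(int64_t year, unsigned month, unsigned day)
{
    year -= month <= 2; /* treat Jan/Feb as months 13/14 of the previous year */
    const int64_t era = (year >= 0 ? year : year - 399) / 400;
    const unsigned yoe = (unsigned)(year - era * 400);                             /* [0, 399] */
    const unsigned doy = (153 * (month > 2 ? month - 3 : month + 9) + 2) / 5 + day - 1; /* [0, 365] */
    const unsigned doe = yoe * 365 + yoe / 4 - yoe / 100 + doy;                    /* [0, 146096] */
    return era * 146097 + (int64_t)doe - 719468;
}

/* Hypothetical helper: converts a broken-down time that is already in UTC
   to seconds since the Unix epoch, without consulting the local time zone. */
int64_t utc_tm_to_epoch(const struct tm *utc)
{
    int64_t days = days_from_civil((int64_t)utc->tm_year + 1900,
                                   (unsigned)(utc->tm_mon + 1),
                                   (unsigned)utc->tm_mday);
    return days * 86400 + (int64_t)utc->tm_hour * 3600 + utc->tm_min * 60 + utc->tm_sec;
}

/* Hypothetical helper: returns true if the certificate has already expired
   or expires within renew_window_days of now_epoch (UTC seconds). */
bool certificate_needs_renewal(const struct tm *not_after_utc, int64_t now_epoch,
                               int64_t renew_window_days)
{
    int64_t seconds_left = utc_tm_to_epoch(not_after_utc) - now_epoch;
    return seconds_left <= renew_window_days * 86400;
}
```

On a device, an app might call certificate_needs_renewal(&notAfter, (int64_t)time(NULL), 30) periodically and start its own renewal flow when it returns true. For example, a certificate that expires on March 1, 2021 has 39 days left on January 21, 2021, so it is outside a 30-day window but inside a 45-day one.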

See Use EAP-TLS in the online documentation for a full description of the certificates used in EAP-TLS networking, and see Secure enterprise Wi-Fi access: EAP-TLS on Azure Sphere on Microsoft Tech Community for additional information.

Azure Sphere Applications

Azure Sphere applications need certificates to authenticate to web services and some networks. Depending on the requirements of the service or endpoint, an app may use either the DAA certificate or a certificate from an external certificate authority.

Apps that connect to a third-party service using wolfSSL or a similar library can call the DeviceAuth_GetCertificatePath function to get the DAA certificate for authentication. This function was introduced in the deviceauth.h header in the 20.10 SDK.

The Azure IoT library that is built into Azure Sphere already trusts the necessary Root CA, so apps that use this library to access Azure IoT services (IoT Hub, IoT Central, DPS) do not require any additional certificates.

If your apps use other Azure services, check the documentation for those services to determine which certificates are required.

Azure Sphere Public API

The Azure Sphere Public API (PAPI) communicates with the Azure Sphere Security Service to request and retrieve information about deployed devices. The Security Service uses a TLS certificate to authenticate such connections. This means that any code or scripts that use the Public API, along with any other Security Service clients such as the Azure Sphere SDK (including both the v1 and v2 azsphere CLI), must trust this certificate to be able to connect to the Security Service. The SDK uses the certificates in the host machine’s system certificate store for Azure Sphere Security Service validation, as do many Public API applications.

On October 13, 2020 the Security Service updated its Public API TLS certificate to one issued from the DigiCert Global Root G2 certificate. Both Windows and Linux systems include the DigiCert Global Root G2 certificate, so the required certificate is readily available. However, as we described in an earlier blog post, only customer scenarios that involved subject, name, or issuer (SNI) pinning required changes to accommodate this update.

Azure Sphere Security Service

Azure Sphere cloud services in general, and the Security Service in particular, manage numerous certificates that are used in secure service-to-service communication. Most of these certificates are internal to the services and their clients, so Microsoft coordinates updates as required. For example, in addition to updating the Public API TLS certificate in October, the Azure Sphere Security Service also updated its TLS certificates for the DAA service and Update service. Before that update, devices received an OTA update to the Trusted Root Store that included the newly required root certificate. No customer action was necessary to maintain device communication with the Security Service.

How does Azure Sphere make certificate changes easier for customers?

Certificate expiration is a common cause of IoT device failures, and one that Azure Sphere can prevent.

Because the Azure Sphere product includes both the OS and the Security Service, the certificates used by both these components are managed by Microsoft. Devices receive updated certificates through the DAA process, OS and application updates, and error reporting without requiring changes in applications. When Microsoft added the DigiCert Global Root G2 certificate, no customer changes were required to continue DAA, updates, or error reporting. Devices that were offline at the time of the update received the update as soon as they reconnected to the internet.

The Azure Sphere OS also includes the Azure IoT library, so if Microsoft makes further changes to certificates that the Azure IoT libraries use, we will update the library in the OS so that your applications won’t need to be changed. We’ll also let you know through additional blog posts about any edge cases or special circumstances that might require modifications to your apps or scripts.

Both of these cases show how Azure Sphere simplifies application management by removing the need for maintenance updates of applications to handle certificate changes. Because every device receives an update certificate as part of its daily attestation, you can easily manage the update of any locally managed certificates your devices and applications use. For example, if your application validates the identity of your line-of-business server (as it should), you can deploy an updated application image package that includes updated certificates. The application update services provided by the Azure Sphere platform deliver those updates, removing the worry that the update service itself will incur a certificate expiry issue.

For more information

Overview

Each month in 2021, we will publish a blog post covering that month’s webinar for the Low-code application development (LCAD) on Azure solution. LCAD on Azure is a new solution that demonstrates the robust development capabilities of integrating low-code Microsoft Power Apps with the Azure products you may already be familiar with.

This month’s webinar is ‘Develop Application Lifecycle Management (ALM) processes with GitHub Actions and Power Apps.’ In this post, I will explain what LCAD on Azure is, highlight the three products most featured in the webinar and their use cases, and provide supporting documentation so you can learn more about the webinar’s content.

What is Low-code application development (LCAD) on Azure?

Low-code application development (LCAD) on Azure was created to help developers build business applications faster with less code by using the Power Platform, specifically Power Apps, while also helping them scale and extend their Power Apps with Azure services.

For example, a pro developer who works for a manufacturing company needs to build a line-of-business (LOB) application to help warehouse employees track incoming inventory. Such an application could take months to build, test, and deploy; with Power Apps, it can take hours to build, saving time and resources.

Now suppose the warehouse employees want the application to automatically place procurement orders for additional inventory when current inventory drops to a set threshold. In the past, that would have required another heavy lift from the development team to rework the previous version of the application. Thanks to the integration of Power Apps and Azure, a professional developer can build an API in Visual Studio Code, publish it to Azure, and export it to Power Apps, integrating it into the application as a custom connector. Afterward, the same API can be reused in Power Apps Studio with other applications, saving the company and its developers more time and resources.

This is just one scenario that highlights the capabilities of the LCAD on Azure solution. To learn more about the solution itself, see the link in the Support Documents section at the bottom of this post. This month’s webinar focuses on automating application lifecycle management for scenarios like the one above with GitHub Actions, further expediting and streamlining the development process.

Webinar Content

The webinar explains ‘Fusion Development’, an approach in which citizen developers build low-code applications themselves, reducing strain on development teams, while professional developers meet them halfway by extending those applications with custom code.

The webinar includes two demos: one on integrating Azure API Management with Power Apps, and one on creating a CI/CD pipeline with GitHub Actions.

The API Management and Power Apps demo will cover the no-cliff extensibility of Power Apps and Azure together, how to export APIs to Power Apps, and how to connect API Management with Power Apps via Microsoft Teams for free.

We introduced Azure API Management connectors to quickly publish Azure API Management backed APIs to the Power Platform for easy discovery and consumption, dramatically reducing the time it takes to create apps connecting to Azure services.

This means that enterprises can now truly benefit from existing assets hosted on Azure by making them available to citizen developers with just a few clicks in the Azure portal, eliminating the extra steps of creating custom connectors in the Power Apps or Power Automate maker experiences.

The GitHub Actions demo will cover how developers can build automated software development lifecycle workflows. With GitHub Actions for Microsoft Power Platform, developers can create workflows in their repository to build, test, package, release, and deploy apps; perform automation; and manage bots and other components built on Microsoft Power Platform.

Conclusion

The webinar is currently available on demand, and the February webinar will cover the integration of SAP on Azure and Power Apps.

Support Documents

Power Apps x Azure websites

Power Platform x Azure API Management Integration

Power Platform x GitHub Actions Automated SDLC workflows

We are excited to announce that Application Performance Monitoring (APM) is now fully integrated into Azure Spring Cloud, powered by Application Insights.

Azure Spring Cloud is jointly built, operated, and supported by Microsoft and VMware. It is a fully managed service for Spring Boot applications that lets you focus on building the applications that run your business without the hassle of managing infrastructure.

APM in Azure Spring Cloud offers in-depth performance monitoring for your Spring applications without requiring ANY code changes, recompiling, retesting, or redeployment. APM on Azure Spring Cloud is so seamless that you get insights into your applications out of the box. You do not have to do ANYTHING: just deploy your applications and the monitoring data starts flowing. The benefits of application monitoring are:

- Visibility into all your applications with distributed tracing, including paths of operation requests from origins to destinations and insights into applications that are operating correctly and those with bottlenecks.

- Logs, exceptions, and metrics in the context of call paths offer meaningful insights and actionable information to speed root cause analysis.

- Insights into application dependencies – SQL Database, MySQL, PostgreSQL, MariaDB, JDBC, MongoDB, Cassandra, Redis, JMS, Kafka, Netty / WebFlux, etc.

- Performance data for every call into operations exposed by applications, including data such as request counts, response times, CPU usage, and memory.

- Custom metrics conveniently auto-collected through Micrometer, allowing you to publish custom performance indicators or business-specific metrics and visualize deeper application and business insights.

- Ability to browse, query, and alert on application metrics and logs.

While both Azure Spring Cloud and the Application Insights Java agent are generally available, their integration for out-of-the-box monitoring is in preview.

“Improvements in Azure Spring Cloud are always welcome, especially when they seamlessly integrate products from across the Azure ecosystem. A great example is the introduction of the 3.0 version of the Application Insights Java agent – now we can make better horizontal autoscaling decisions by capturing requests sent to our Netty powered Spring Cloud Gateway and SQL statements issued by our applications are captured and show very nicely in the application map”. — Jonathan Jones, Lead Solutions Architect, Swiss Re Management Ltd. (Switzerland)

“Raley’s is very excited by the continued enhancements to Azure Spring Cloud. With the addition of a Java in-process agent for Application Insights, our developers have fewer integration points to worry about! This enhancement promises to increase our productivity by reducing the development effort and decreasing the effort required to support and troubleshoot issues!” — Arman Guzman, Principal Software Engineer, Unified Commerce, Raley’s (United States)

You can enable the Java in-process monitoring agent when you create or update Azure Spring Cloud:

az spring-cloud create --name ${SPRING_CLOUD_SERVICE} \
    --sku standard --enable-java-agent \
    --resource-group ${RESOURCE_GROUP} \
    --location ${REGION}

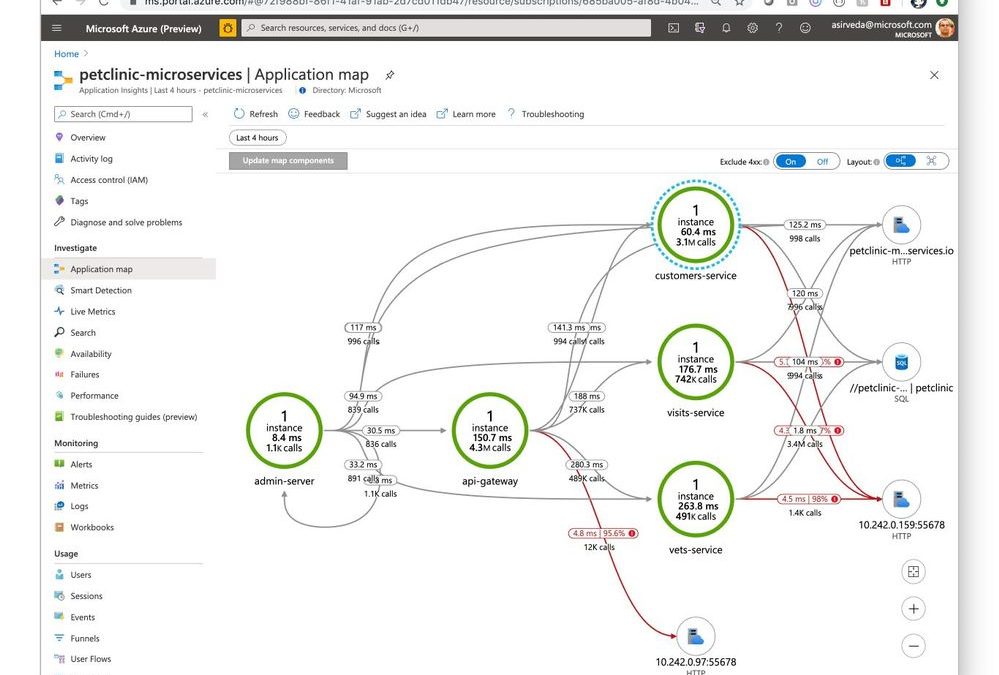

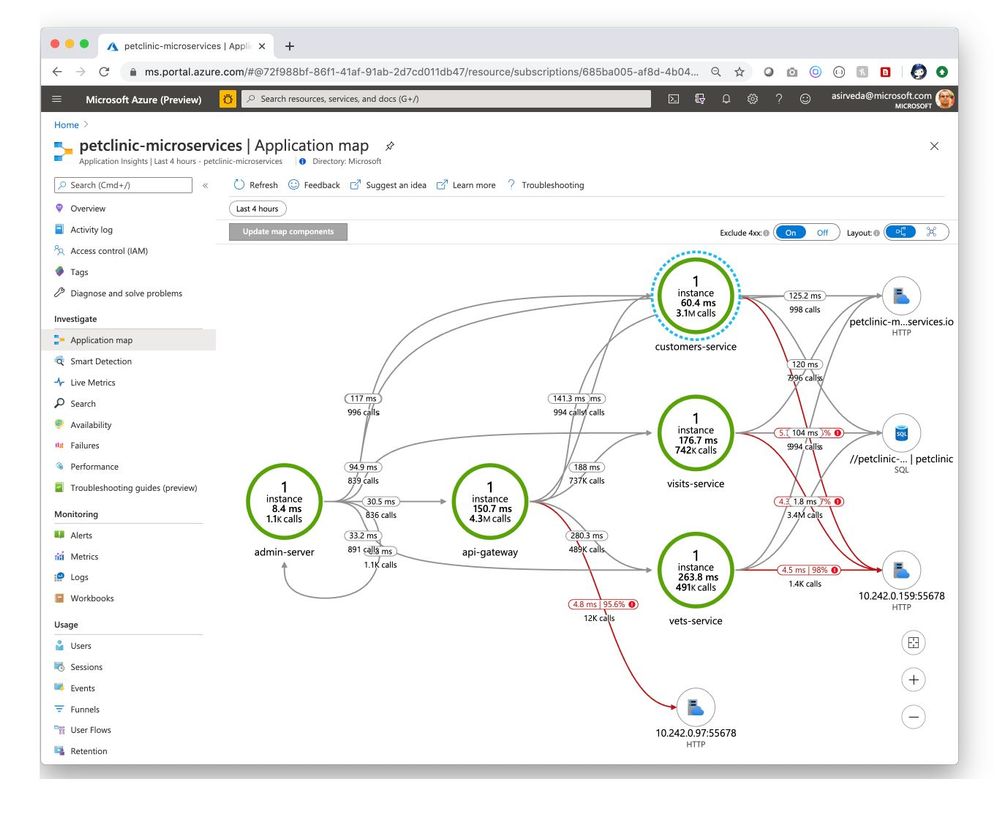

Then open the Application Insights resource created by Azure Spring Cloud and start monitoring applications and their dependencies. We will illustrate this using a distributed version of Spring Petclinic. Navigate to the Application Map blade, where you can see a holistic view of the microservices that shows applications that are operating correctly (green) and those with bottlenecks (red) [Figure 1]. Developers can easily identify issues in their applications and quickly troubleshoot and fix them.

Figure 1 – Microservice transactions in Application Insights

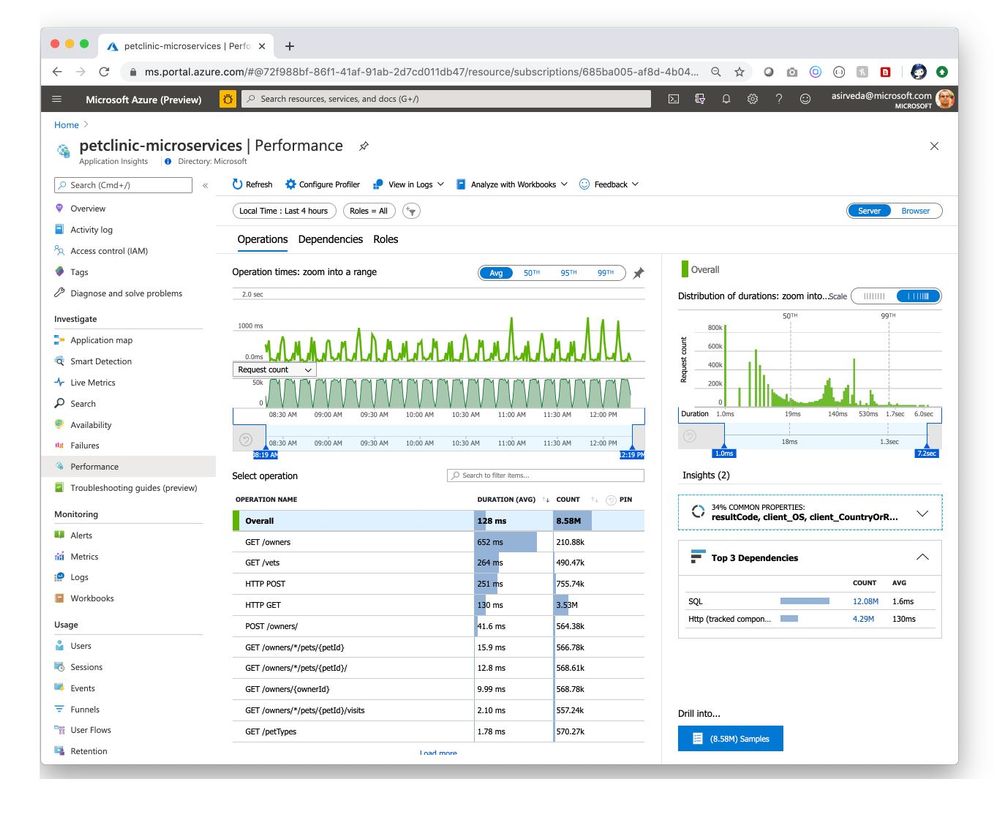

Navigate to the Performance blade where you can see response times and request counts for operations exposed by your applications [Figure 2].

Figure 2 – Performance of operations exposed by applications

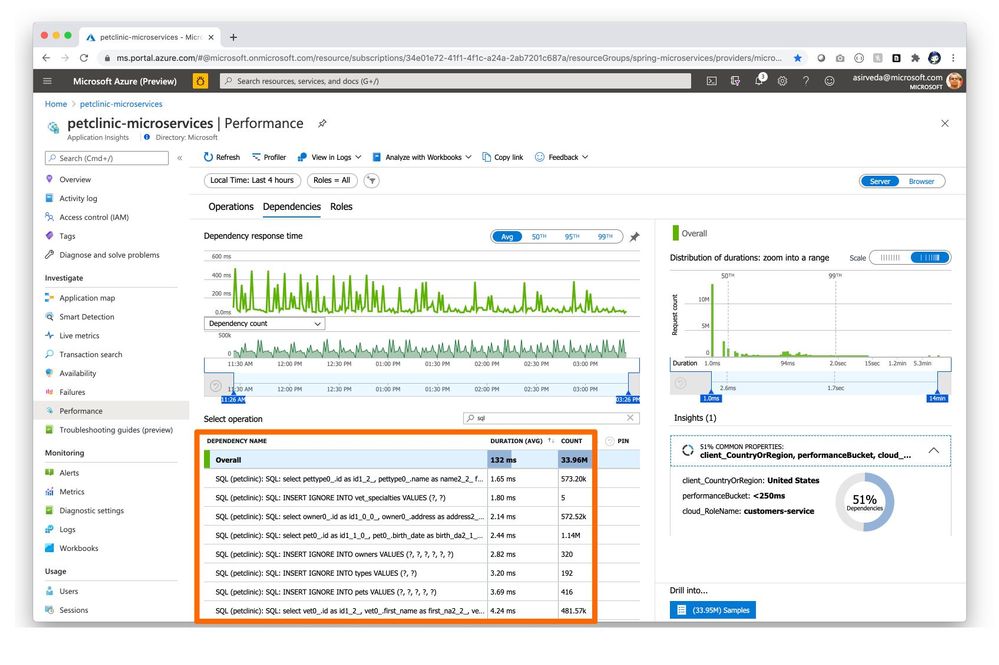

Navigate to the Dependencies tab in the Performance blade where you can see all your dependencies and their response times and request counts [Figure 3].

Figure 3 – Performance of application dependencies

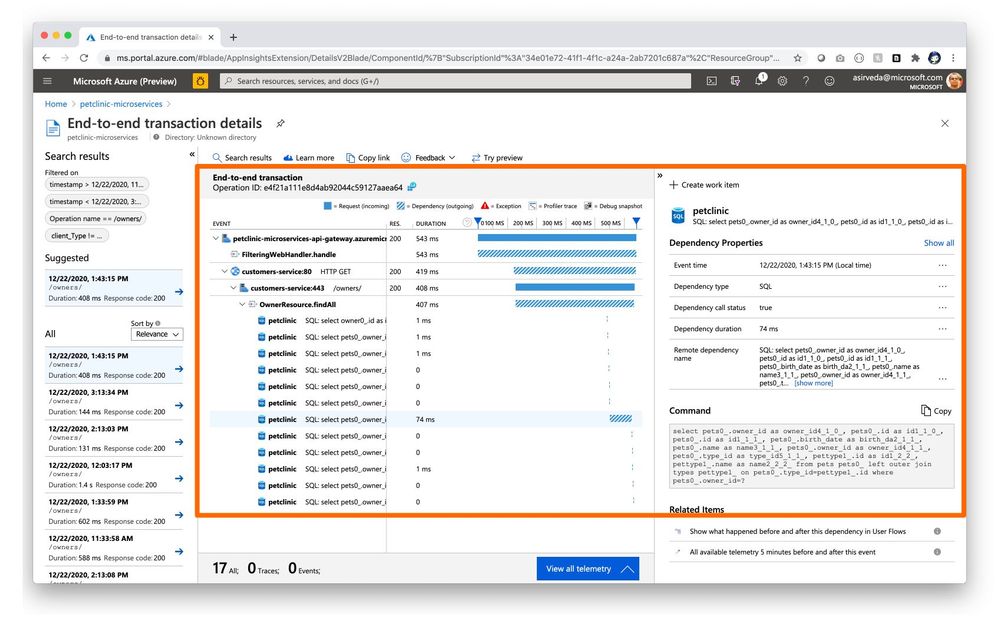

You can click a SQL call or a dependency to see the full end-to-end transaction in context [Figure 4].

Figure 4 – End-to-end application to SQL call transaction details

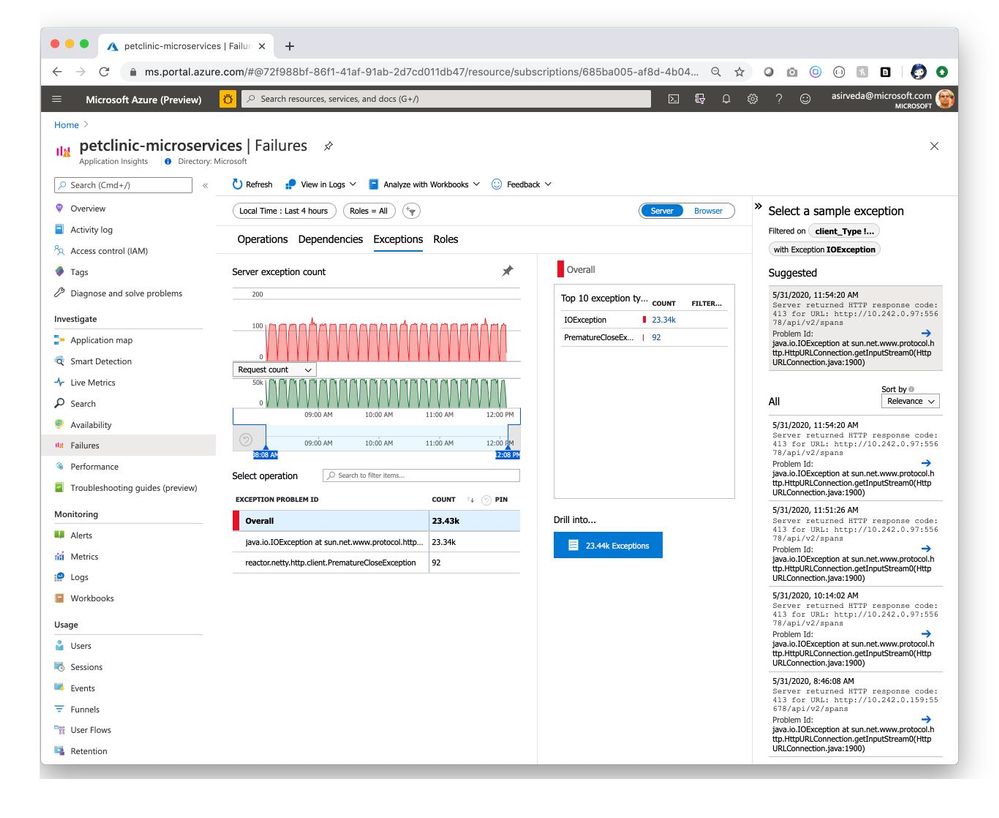

Navigate to the Exceptions tab in the Failures blade to see a collection of exceptions thrown by applications [Figure 5].

Figure 5 – Exceptions thrown by applications

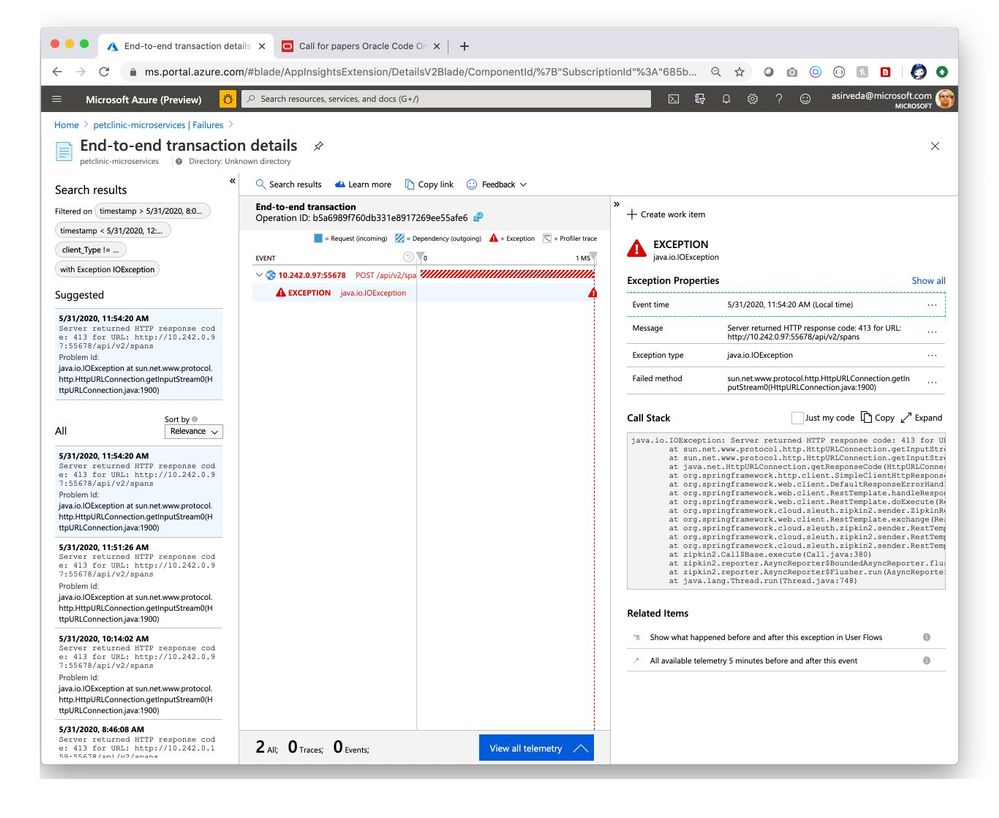

Simply select an exception and drill in for meaningful insights and an actionable stack trace [Figure 6].

Figure 6 – End-to-end transaction details for an application exception

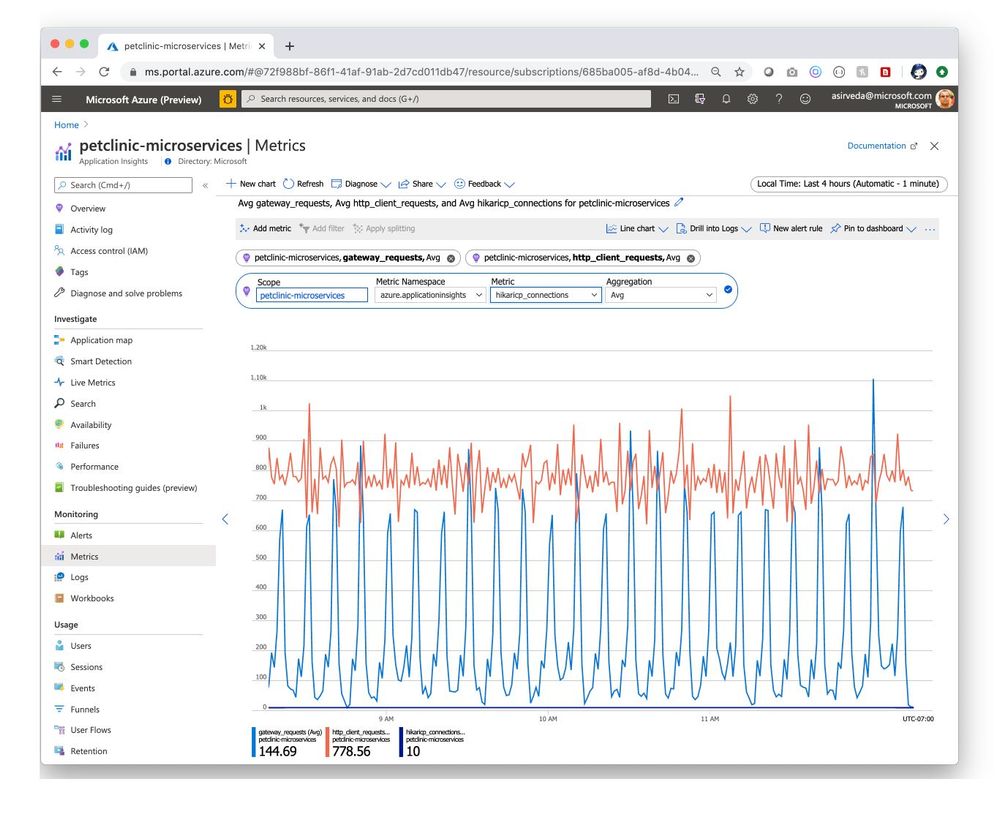

Navigate to the Metrics blade to see all the metrics contributed by Spring Boot applications, Spring Cloud modules, and their dependencies. The chart below showcases gateway-requests contributed by Spring Cloud Gateway and hikaricp_connections contributed by JDBC [Figure 7]. Similarly, you can aggregate Spring Cloud Resilience4J metrics and visualize them.

Figure 7 – Metrics contributed by Spring modules

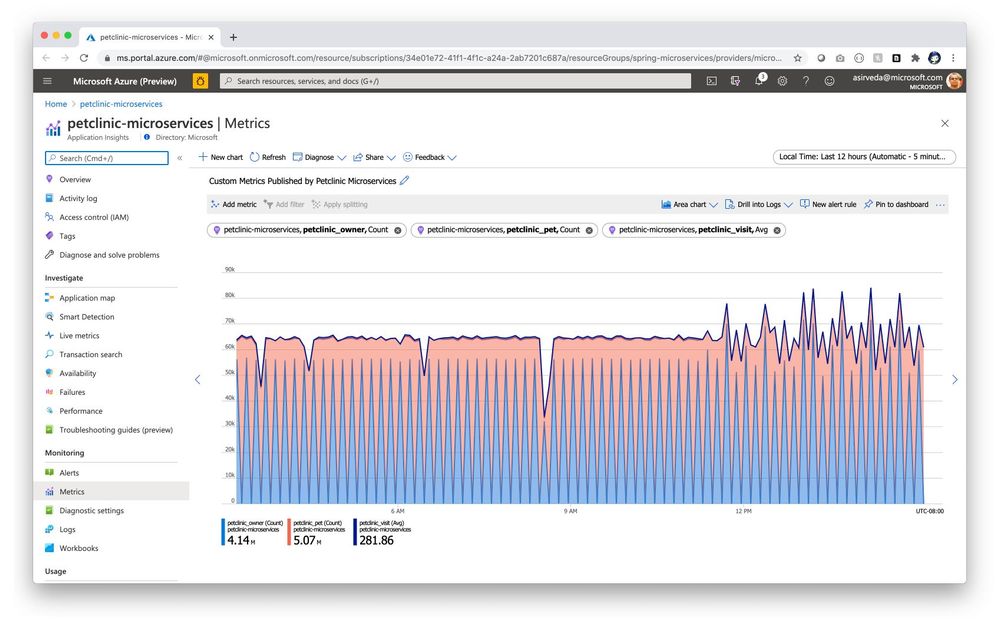

Spring Boot applications register many core metrics, such as JVM, CPU, Tomcat, and Logback metrics. You can use Micrometer to contribute your own custom metrics, for example by applying the @Timed Micrometer annotation at the class level. You can then visualize those custom metrics in Application Insights. As an example, the custom metrics below track pet owners, pets, and their clinic visits; you can also see how the pattern changes at 9 PM, when autoscaling kicked in as the applications drove higher utilization [Figure 8].

Figure 8 – Custom metrics published by user applications

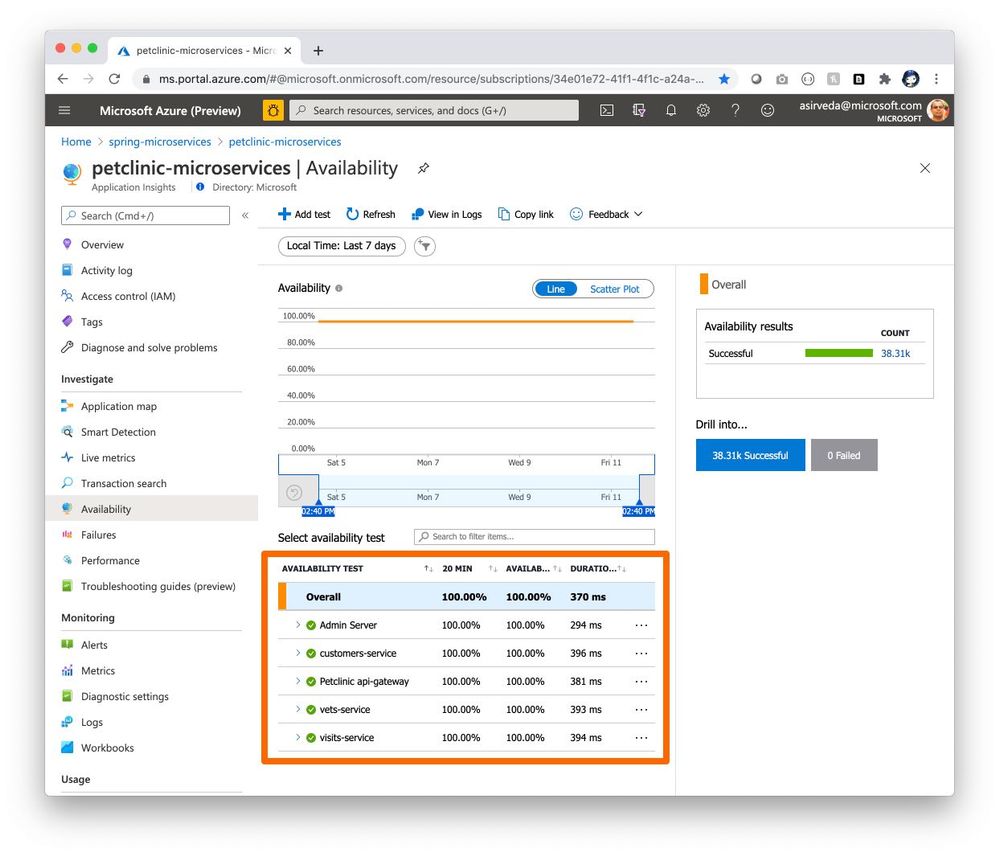

You can use the Availability Test feature in Application Insights to monitor the availability of applications in Azure Spring Cloud. This is a recurring test that checks the availability and responsiveness of applications at regular intervals from locations around the globe. It can proactively alert you if your applications are not responding or if they respond too slowly. The chart below shows availability tests from across North America: West US, South Central US, Central US, and East US [Figure 9].

Figure 9 – Availability of application endpoints across time

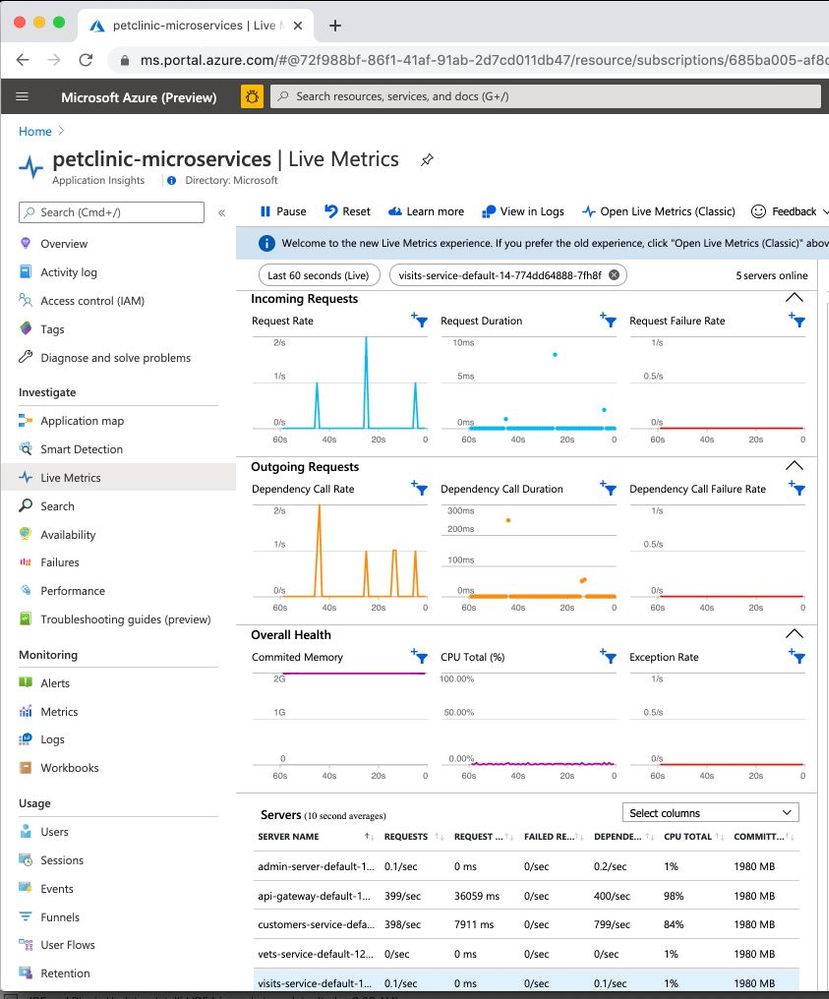

Navigate to the Live Metrics blade, where you can see metrics in near real time, with a latency of about one second [Figure 10].

Figure 10 – Real-time metrics

The Application Insights Java agent is based on the OpenTelemetry auto-instrumentation effort, in which Microsoft collaborates with some of the brightest minds in the APM space.

Build your solutions and monitor them today!

Azure Spring Cloud abstracts away the complexity of infrastructure management and Spring Cloud middleware management, so you can focus on building your business logic and let Azure take care of dynamic scaling, patches, security, compliance, and high availability. In a few steps, you can provision Azure Spring Cloud; create, deploy, and scale Spring Boot applications; and start monitoring in minutes. We will continue to bring more developer-friendly and enterprise-ready features to Azure Spring Cloud.

We would love to hear how you are building impactful solutions using Azure Spring Cloud. Get started today – deploy Spring applications to Azure Spring Cloud using quickstart!

Resources
