by Contributed | Jan 26, 2021 | Technology

This article is contributed. See the original author and article here.

In this installment of the weekly discussion revolving around the latest news and topics on Microsoft 365, hosts Vesa Juvonen (Microsoft) | @vesajuvonen and Waldek Mastykarz (Microsoft) | @waldekm are joined by MVP, “Stickerpreneur”, conference speaker, and engineering lead Elio Struyf (Valo Intranet) | @eliostruyf.

Their discussion focuses on building products on the Microsoft 365 platform from a partner perspective. Angles explored include platform control, product ownership, communications, a marriage, the importance of a roadmap, areas for improvement, and value. They also cover the considerations behind product development and distribution strategy, including on-premises, a SaaS offering, or delivery to customers to host in their own cloud with assistance from partners. Valo is effectively an ISV that delivers solutions like an SI.

This episode was recorded on Monday, January 25, 2021.

Did we miss your article? Please use the #PnPWeekly hashtag on Twitter to let us know about the content you have created.

As always, if you need help on an issue, want to share a discovery, or just want to say: “Job well done”, please reach out to Vesa, to Waldek or to your Microsoft 365 PnP Community.

Sharing is caring!

by Contributed | Jan 26, 2021 | Technology

Authors: Lu Han, Emil Almazov, Dr Dean Mohamedally, University College London (Lead Academic Supervisor) and Lee Stott, Microsoft (Mentor)

Lu Han and Emil Almazov are the current UCL student team working on the first version of the MotionInput supporting DirectX project, in partnership with UCL and the Microsoft UCL Industry Exchange Network (UCL IXN).

Examples of MotionInput

Running on the spot

Cycling on an exercise bike

Introduction

This is a work-in-progress preview. The intent is for this solution to become an open-source, community-based project.

During COVID-19 it has been increasingly important for the general population’s wellbeing to keep active at home, especially in regions with lockdowns such as in the UK. Over the years, we have all been adjusting to new ways of working and managing time, with tools like MS Teams. This is especially the case for presenters, such as teachers and clinicians, who have to give audiences instructions and need to do so with regular breaks.

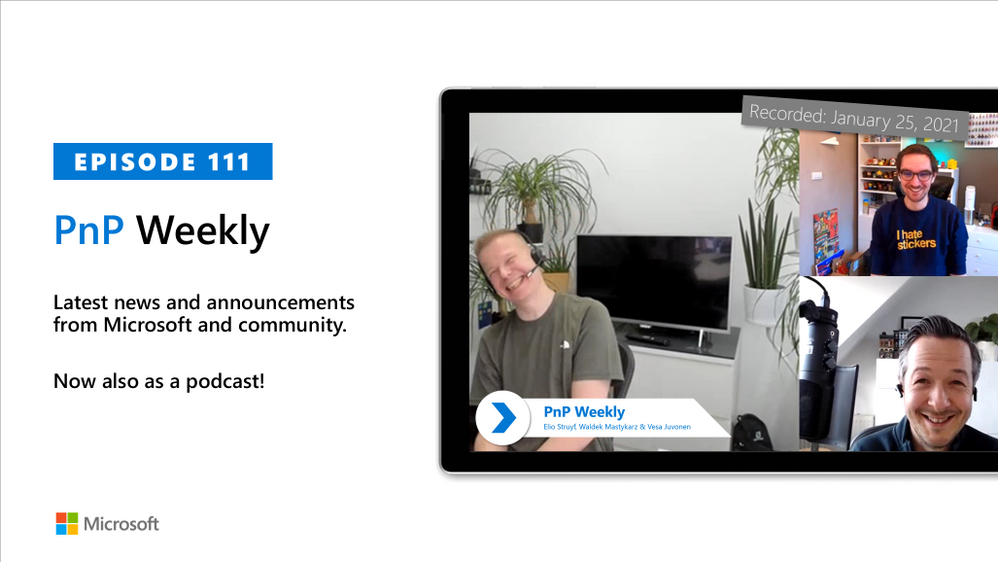

UCL’s MotionInput supporting DirectX is a modular framework to bring together catalogues of gesture inputs for Windows based interactions. This preview shows several Visual Studio based modules that use a regular webcam (e.g. on a laptop) and open-source computer vision libraries to deliver low-latency input on existing DirectX accelerated games and applications on Windows 10.

The current preview focuses on two MotionInput catalogues: gestures from at-home exercises, and desk-based gestures with in-air pen navigation. For desk-based gestures, in addition to making them operable with as many Windows-based games as possible, preliminary work has been done towards control in Windows apps such as PowerPoint, Teams and the Edge browser, focusing on the work-from-home era that users are currently in.

The key ideas behind the prototype projects are to “use the tech and tools you have already” and “keep active”, providing touchless interactive interfaces to existing Windows software with a webcam. Of course, Sony’s EyeToy and Microsoft Kinect for Xbox have done this before, and there are other dedicated applications that have gesture technologies embedded. However, many of these are no longer available or supported on the market, and they only worked with the dedicated software titles they were intended for. The general population’s fitness, the potential for physiotherapy and rehabilitation, and the use of motion gestures for teaching purposes are things we intend to explore with these works. We also hope the open-source community will revisit older software titles and make a selection of them more “actionable”, with further catalogue entries of gestures to control games and other software. Waving your outstretched arms in front of your laptop to fly in Microsoft Flight Simulator is now possible!

The key investigation is in the creation of catalogues of motion-based gesture styles for interfacing with Windows 10, and potentially catalogues for different games and interaction genres for use in industries like teaching, healthcare and manufacturing.

The team’s and project’s development roadmap includes trialing at Great Ormond Street’s DRIVE unit and with several clinical teams who have expressed interest in rehabilitation and healthcare systems interaction.

Key technical advantages

- Computer vision on RGB cameras on Windows 10 based laptops and tablets is now efficient enough to replace previous depth-camera only gestures for the specific user tasks we are examining.

- A library of gesture categories will allow much existing software to be controlled through gesture catalogue profiles.

- Bringing it as close as possible to the Windows 10 interfaces layer via DirectX and making it as efficient as possible on multi-threaded processes reduces the latency so that gestures are responsive replacements to their corresponding assigned interaction events.

Architecture

All modules are connected by a common Windows-based GUI configuration panel that exposes the parameters available to each gesture catalogue module. This allows a user to set the gesture types and customise the responses.

The Exercise module in this preview examines repetitious at-home based exercises, such as running on the spot, squatting, cycling on an exercise bike, rowing on a rowing machine etc. It uses the OpenCV library to decide whether the user is moving by calculating the pixel difference between two frames.

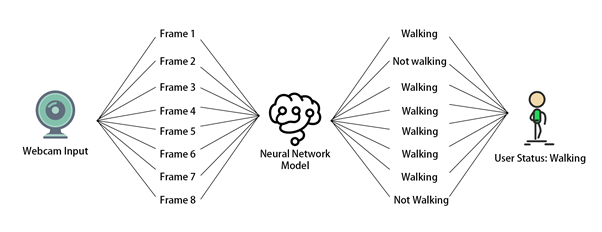

The PyTorch exercise recognition model is responsible for checking the status of the user every 8 frames. DirectX events (e.g. a keypress of “W”, which moves forward in many PC games) are triggered via PyDirectInput’s functions only when the module decides the user is moving and the exercise they are performing is recognized as the exercise chosen in the GUI.
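The gating rule described above can be sketched as a small state holder. This is a minimal illustration and not the project's code: the `KeyGate` class, its method names and the choice to hold the key down while the condition is true are all assumptions. The `key_down` and `key_up` callables are injected so the logic can run anywhere; on Windows they could be PyDirectInput's `keyDown` and `keyUp` functions.

```python
class KeyGate:
    """Hold a key while the user is moving AND doing the selected exercise.

    Hypothetical helper: key_down / key_up are injected callables, e.g.
    pydirectinput.keyDown and pydirectinput.keyUp on a Windows machine.
    """

    def __init__(self, key, key_down, key_up):
        self.key = key
        self.key_down = key_down
        self.key_up = key_up
        self.held = False

    def update(self, is_moving, predicted_exercise, selected_exercise):
        # Both conditions from the text must hold before any event fires.
        active = is_moving and predicted_exercise == selected_exercise
        if active and not self.held:
            self.key_down(self.key)   # start "moving forward"
            self.held = True
        elif not active and self.held:
            self.key_up(self.key)     # stop as soon as the condition fails
            self.held = False
        return self.held


# Usage with a plain list standing in for the real key functions.
log = []
gate = KeyGate("w", lambda k: log.append(("down", k)), lambda k: log.append(("up", k)))
gate.update(True, "running", "running")    # presses "w"
gate.update(True, "squatting", "running")  # wrong exercise: releases "w"
```

Tracking the `held` flag means each status change produces exactly one event, rather than one event per processed frame.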

The Desk Gestures module tracks the x and y coordinates of the pen each frame, using the parameters from the GUI. These coordinates are then mapped to the user’s screen resolution and fed into several PyDirectInput’s functions that trigger DirectX events, depending on whether we want to move the mouse, or press keys on the keyboard and click with the mouse.
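To make the coordinate mapping concrete, here is a minimal sketch of scaling a pen position from camera-frame pixels to screen pixels. The function name, the clamping and the optional `mirror` flag are assumptions made for illustration; the article does not say how the module performs this step.

```python
def map_to_screen(x, y, frame_size, screen_size, mirror=False):
    """Scale a point from camera-frame pixels to screen pixels.

    frame_size and screen_size are (width, height) tuples. `mirror` is an
    assumed convenience for webcams whose image is flipped horizontally.
    """
    frame_w, frame_h = frame_size
    screen_w, screen_h = screen_size
    if mirror:
        x = (frame_w - 1) - x
    # Scale proportionally so frame corners land on screen corners.
    sx = round(x * (screen_w - 1) / (frame_w - 1))
    sy = round(y * (screen_h - 1) / (frame_h - 1))
    # Clamp so a slightly out-of-range detection never leaves the screen.
    sx = min(max(sx, 0), screen_w - 1)
    sy = min(max(sy, 0), screen_h - 1)
    return sx, sy


# A pen at the bottom-right corner of a 640x480 frame on a 1920x1080 screen.
cursor = map_to_screen(639, 479, (640, 480), (1920, 1080))
```

On Windows, the resulting pair could then be handed to a call such as `pydirectinput.moveTo(sx, sy)` to move the mouse.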

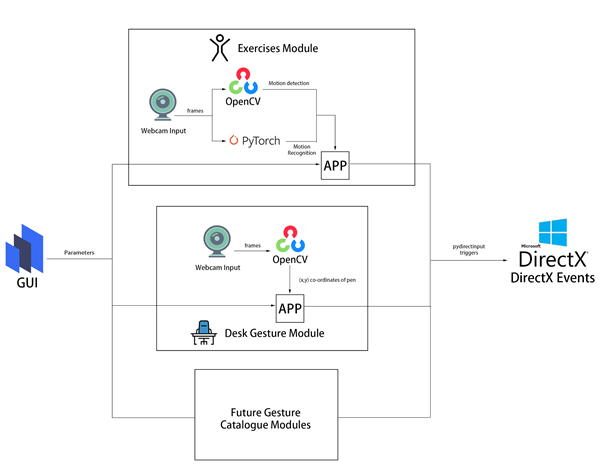

Fig 1 – HSV colour range values for the blue of the pen

The current challenge and limitation of colour-based pen tracking is having other objects with the same colour range in the camera frame. When this happens, the program detects the wrong objects and therefore produces inaccurate tracking results. The only viable solution is to make sure that no objects with a similar colour range are present in the camera view. This is usually easy to achieve and, if not, a simple green screen (or another screen of a single colour) can be used to replace the background.

In the exercises module, we use OpenCV to do motion detection. This involves subtracting the current frame from the last frame and taking the absolute value to get the pixel intensity difference. Regions of high pixel intensity difference indicate motion is taking place. We then do a contour detection to find the outlines of the region with motion detected. Fig 2 shows how it looks in the module.
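The frame-differencing arithmetic described above can be shown without any dependencies. The sketch below works on small grayscale frames stored as nested lists; the function names and the `threshold` and `min_pixels` values are illustrative assumptions. In OpenCV the same steps would typically use `cv2.absdiff` and `cv2.findContours`; here a simple bounding box stands in for contour detection.

```python
def motion_mask(prev_frame, curr_frame, threshold=25):
    """Mark pixels whose absolute intensity change exceeds the threshold."""
    return [
        [abs(curr - prev) > threshold for prev, curr in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev_frame, curr_frame)
    ]


def bounding_box(mask):
    """Return (left, top, right, bottom) around all motion pixels, or None."""
    points = [(x, y) for y, row in enumerate(mask) for x, on in enumerate(row) if on]
    if not points:
        return None
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return min(xs), min(ys), max(xs), max(ys)


def is_moving(prev_frame, curr_frame, threshold=25, min_pixels=2):
    """Decide that motion occurred if enough pixels changed."""
    mask = motion_mask(prev_frame, curr_frame, threshold)
    return sum(sum(row) for row in mask) >= min_pixels


# Two 4x4 grayscale frames: two pixels brighten between them.
previous = [[0] * 4 for _ in range(4)]
current = [[0] * 4 for _ in range(4)]
current[1][1] = 200
current[1][2] = 200
box = bounding_box(motion_mask(previous, current))
```

The `min_pixels` floor is there so that a single noisy pixel does not count as the user moving.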

Technical challenges

OpenCV

In the desk gestures module, to track the pen, we had to provide an HSV (Hue, Saturation, Value) colour range to OpenCV so that it only detected the blue part of the pen. We needed to find a way to calculate this range as accurately as possible.

The solution involved running a program in which the hue, saturation, and value channels of the image could be adjusted until only the blue of the pen was visible (see Fig 1). Those values were then stored in a .npy file and loaded into the main program.
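Once a lower and upper HSV bound are known, tracking reduces to keeping the pixels inside that range and locating them. The dependency-free sketch below illustrates the idea; the bounds `LOWER_BLUE` and `UPPER_BLUE` are made-up example values (using OpenCV's 0 to 179 hue scale), not the ones from the project's .npy file, and the centroid step is an assumption about how a position might be derived. In OpenCV the range test is what `cv2.inRange` does.

```python
# Illustrative bounds only; the real values come from the calibration step.
LOWER_BLUE = (100, 150, 50)
UPPER_BLUE = (130, 255, 255)


def in_hsv_range(pixel, lower, upper):
    """True if every channel of an (h, s, v) pixel lies within the bounds."""
    return all(lo <= channel <= hi for channel, lo, hi in zip(pixel, lower, upper))


def track_pen(hsv_frame, lower=LOWER_BLUE, upper=UPPER_BLUE):
    """Return the centroid (x, y) of in-range pixels, or None if none match."""
    xs, ys = [], []
    for y, row in enumerate(hsv_frame):
        for x, pixel in enumerate(row):
            if in_hsv_range(pixel, lower, upper):
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return sum(xs) / len(xs), sum(ys) / len(ys)


# A 3x3 frame with a vertical "pen" of blue pixels in the middle column.
black = (0, 0, 0)
blue = (115, 200, 200)
frame = [
    [black, blue, black],
    [black, black, black],
    [black, blue, black],
]
pen_position = track_pen(frame)
```

Because every in-range pixel contributes to the centroid, a second blue object in view would pull the result away from the pen.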

Fig 2 – Contour of the motion detected

Multithreading

Videos captured by the webcam can be seen as a collection of images. In the program, OpenCV keeps reading the input from the webcam frame by frame, then each frame is processed to get the data which is used to categorize the user into a status (exercising or not exercising in the exercise module, moving the pen to different directions in the desk gesture module). The status change will then trigger different DirectX events.

Initially, we tried to check the status of the user every time the data was ready. However, this is not practical: most webcams provide a frame rate of 30 frames per second, which means the data processing part is performed 30 times every second. If we check the status of the user and trigger DirectX events at this rate, the program runs slowly.

The solution to this problem is multithreading, which allows multiple tasks to be executed at the same time. In our program, the main thread handles the work of reading input from webcam and data processing, and the status check is executed every 0.1 seconds in another thread. This reduces the execution time of the program and ensures real-time motion tracking.
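The two-thread arrangement can be sketched with Python's standard `threading` module. This is an assumed structure for illustration, not the project's implementation: `SharedStatus`, `status_checker` and `run_demo` are hypothetical names, and a list of status strings stands in for webcam frames that have already been processed.

```python
import threading
import time


class SharedStatus:
    """Latest user status, written by the main thread and read by the checker."""

    def __init__(self, initial="idle"):
        self._lock = threading.Lock()
        self._value = initial

    def set(self, value):
        with self._lock:
            self._value = value

    def get(self):
        with self._lock:
            return self._value


def status_checker(shared, on_change, stop_event, interval, last):
    """Poll the status every `interval` seconds and report only changes."""
    while not stop_event.is_set():
        current = shared.get()
        if current != last:
            on_change(current)  # this is where a DirectX event would be sent
            last = current
        stop_event.wait(interval)


def run_demo(frame_statuses, interval=0.1):
    shared = SharedStatus()
    stop_event = threading.Event()
    events = []
    worker = threading.Thread(
        target=status_checker,
        args=(shared, events.append, stop_event, interval, shared.get()),
        daemon=True,
    )
    worker.start()
    for status in frame_statuses:   # main thread: read and process each frame
        shared.set(status)
        time.sleep(interval * 3)    # stand-in for per-frame processing time
    stop_event.set()
    worker.join()
    return events


events = run_demo(["exercising"] * 3 + ["idle"] * 3, interval=0.02)
```

The main loop never waits for event handling: it only writes the latest status, and the checker decides at its own pace whether anything changed.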

Human Activity Recognition

In the exercise module, DirectX events are only triggered if the module decides the user is doing a particular exercise, so our program needs to be able to classify the input video frames into an exercise category. This task belongs to a broader field of study called Human Activity Recognition, or HAR for short.

Recognizing human activities from video frames is a challenging task because input videos differ widely in aspects like viewpoint, lighting and background. Machine learning is the most widely used solution to this task because it is an effective technique for extracting and learning knowledge from given activity datasets. Transfer learning also makes it easy to increase the number of recognized activity types starting from a pre-trained model. Because the input video can be viewed as a sequence of images, we used deep learning, specifically convolutional neural networks in PyTorch, to train a Human Activity Recognition model that outputs the action category for a given input image. Fig 3 shows the change in loss and accuracy during training. In the end, prediction accuracy reached over 90% on the validation dataset.

Fig 3 – Loss and accuracy diagram of the training

Besides training the model, we used additional methods to increase the accuracy of exercise classification. For example, rather than changing the user status right after the model gives a prediction for the current frame, the status is decided based on 8 frames. This ensures the overall recognition accuracy won’t be influenced by one or two incorrect model predictions [Fig 4].
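One simple way to decide a status from 8 frames is a majority vote over each block of predictions. The article does not say which rule the module uses, so the voting rule and the `StatusSmoother` name below are assumptions; the sketch only shows how a window of 8 can absorb a couple of wrong predictions.

```python
from collections import Counter, deque


class StatusSmoother:
    """Update the user status once per block of `window` frame predictions."""

    def __init__(self, window=8):
        self.window = window
        self.predictions = deque(maxlen=window)
        self.status = None  # unknown until the first full block arrives

    def update(self, prediction):
        self.predictions.append(prediction)
        if len(self.predictions) == self.window:
            # Assumed rule: the most frequent label in the block wins.
            label, _count = Counter(self.predictions).most_common(1)[0]
            self.status = label
            self.predictions.clear()
        return self.status


# Six correct predictions and two wrong ones still yield "running".
smoother = StatusSmoother(window=8)
block = ["running", "running", "squatting", "running",
         "running", "squatting", "running", "running"]
statuses = [smoother.update(label) for label in block]
```

The status stays at its previous value until a full block has been seen, which is also why the first seven results here are `None`.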

Fig 4 – Exercise recognition process

Another method we use to improve accuracy is to ensure the shot size is similar in each input image. An image is a matrix of pixels: the closer the subject is to the webcam, the greater the number of pixels representing the user. That is why recognition is sensitive to how much of the subject is displayed within the frame.

To resolve this problem, in the exercise module we ask the user to select the region of interest in advance, and the images are then cropped to fit the selection [Fig 5]. The selection is stored in a config file and can be reused in the future.
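Cropping to a stored region of interest can be sketched as follows. The JSON file layout, the function names and the `(x, y, width, height)` tuple order are assumptions for illustration (that order matches what OpenCV's `cv2.selectROI` returns); the article does not describe the config format.

```python
import json


def crop(frame, roi):
    """Crop a frame (a list of pixel rows) to roi = (x, y, width, height)."""
    x, y, w, h = roi
    return [row[x:x + w] for row in frame[y:y + h]]


def save_roi(path, roi):
    """Persist the selection so the user does not have to redo it."""
    with open(path, "w", encoding="utf-8") as fh:
        json.dump({"roi": list(roi)}, fh)


def load_roi(path):
    """Read a previously saved selection back as a tuple."""
    with open(path, encoding="utf-8") as fh:
        return tuple(json.load(fh)["roi"])


# A 4-row, 5-column frame whose pixel values encode their position.
frame = [[10 * y + x for x in range(5)] for y in range(4)]
cropped = crop(frame, (1, 1, 2, 2))
```

Every frame passed through `crop` with the same region has the same size, which keeps the subject at a similar scale for the classifier.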

Fig 5 – Region of interest selection

DirectX

The open-source libraries used for computer vision are all in Python so the library ‘PyDirectInput’ was found to be most suitable for passing the data stream. PyDirectInput is highly efficient at translating to DirectX.

Our Future Plan

For the future, we plan to add a way for the user to record gestures to a profile and store it in a catalogue. From the configuration panel, they will then be able to map mouse clicks, keyboard button presses and sequences of button presses to their specific gesture. These mappings will be saved as gesture catalogue files and can be reused on different devices.

We are also benchmarking and testing the latency between gestures performed and DirectX events triggered, to further evaluate efficiency markers and hardware limits and to expose timing figures in the user’s configuration panel.

We will be posting more videos on our progress of this work on our YouTube channels (so stay tuned!), and we look forward to submitting our final year dissertation project work at which point we will have our open-source release candidate published for users to try out.

We would like to build a community interest group around this. If you would like to know more and join our MotionInput supporting DirectX community effort, please get in touch – d.mohamedally@ucl.ac.uk

Bonus clip for fun – Goat Simulator

by Contributed | Jan 26, 2021 | Technology

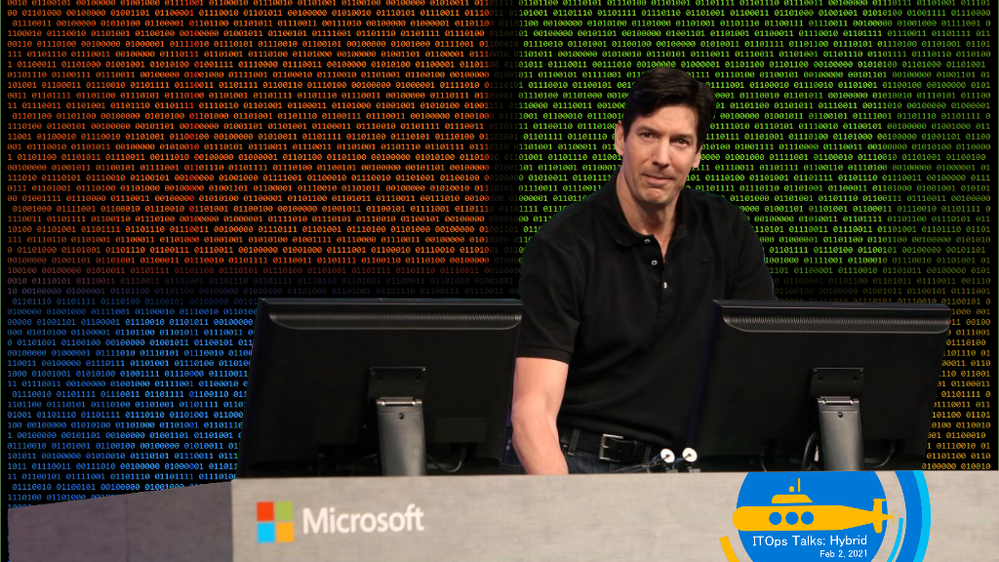

Targets are locked. We’re flooding the torpedo tubes so we’re ready to dive into battle for this Feb 2nd event. I believe it’s time to share some freshly declassified details about things we have planned, so let’s kick this off with the event flow and info about our Keynote Speaker for the event.

On Feb 2nd, 7:30 AM PST ITOps Talks: All Things Hybrid will be LIVE on Microsoft Learn TV to kickstart the festivities. I’ll be there chatting with folks from my team about the logistics of the event, why we’re doing this, how you can get involved in hallway conversations and how to participate throughout the coming days. After we’ve gotten the logistical stuff out of the way – we’ll be introducing our featured keynote speaker.

Who is that you ask? (drum roll please)

It’s none other than Chief Technology Officer for Microsoft Azure – Mark Russinovich! After debating with the team about possible candidates – Mark was an obvious choice to ask. As a Technical Fellow and CTO of Azure paired with his deep technical expertise in the Microsoft ecosystem – Mark brings a unique perspective to the table. He’s put together this exclusive session about Microsoft Hybrid Solutions and has agreed to join us for a brief interview and live Q&A following the keynote. I’ve had a quick peek at what he has in store for us and I’m happy to report: it’s really cool.

After the Keynote and Live Q&A on February 2nd, we will be releasing the full breadth of content I introduced to you in my previous blogpost. All sessions will be live for your on-demand viewing at a time and cadence that meets your schedule. You can binge watch them all in a row or pick and choose selective ones to watch when you have time in your busy life / work schedule – It’s YOUR choice. We will be publishing one blog post for each session on the team’s ITOpsTalk.com blog with the embedded video so they will be easy to find, along with all supporting reference documentation, links to additional resources and optional Microsoft Learn modules to learn even more about their related technologies.

What about the connectedness you would feel during a real event? Where are the hallway conversations? We’re trying something out using our community Discord server. After the keynote and the release of the session content, you will want to log in to Discord (have you agreed to the Code of Conduct / Server Rules?) where you will see a category of channels that looks something like this:

The first channel is just a placeholder with descriptions of each talk and a link to that “chat” channel. It’s really just for logistics and announcements. The second channel, “itops-talks-main-channel”, is our broad chat area with no real topic focus, other than supporting the event and connecting with you. The rest of the channels (this graphic is just a sample) identify the session code and title of each session. THIS is where you can post questions, share your observations, answer other folks’ questions and otherwise engage with the speakers / local experts at ANY point of the day or night. The responses may not be in real-time if the speaker is asleep or if the team is not available at the time you ask – but don’t worry – we’ll be there to connect once we’re up and functioning.

Oh Yeah! Remember – there is no need for registration to attend this event. You may want to block the time in your calendar though… just in case. Here’s a quick and handy landing page where you can conveniently download an iCal reminder for the Europe/Eastern North America livestream OR the Asia Pacific/Western North America livestream.

Anything else you’d like to know? Hit me up here in the comments or ask us in the Discord server. Heck – you can even ping us with a tweet using the #AzOps hashtag on Twitter.

by Contributed | Jan 26, 2021 | Technology

Hello everybody, Simone here to tell you about a situation that has come up many times with my customers: understanding how syslog ingestion works.

To make the subject clear, make sure you have the references below clear in mind:

Most of the time, nobody knows what needs to be collected and how. With this article, I just want to clarify what happens behind the scenes.

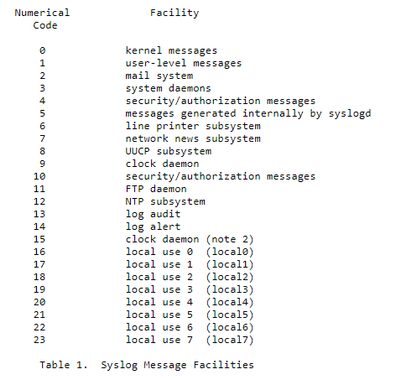

Starting from the RFC, we have a list of “Facility” values, as in the screenshot below:

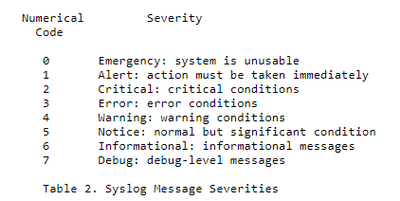

And for each of them we could have a specific “Severity” (see the corresponding picture below):

Back to the situation, the natural question that comes up is: how can we clearly understand which product is using which facility and severity if we have no such information about the products we are using?

To find the information we need, we must capture some TCP/UDP packets on the syslog server, rebuild the packets in Wireshark and then analyze the results.

Let’s start with the first step: packet capture. Below are the macro steps to follow:

- On the syslog server (in this case a Linux server), use the tcpdump command. If it is not available, follow this link for how to set it up:

https://opensource.com/article/18/10/introduction-tcpdump

- The command could be, for example:

tcpdump -i any -c50 -nn src xxx.xxx.xxx.xxx (replace xxx.xxx.xxx.xxx with the source IP address under analysis)

- After rebuilding the capture in Wireshark, the results should look similar to the following image:

The header of every row contains exactly the information that we are looking for. How do we deal with this piece of information? Easy: use the formula in the following passage, taken directly from the RFC:

“The Priority value is calculated by first multiplying the Facility number by 8 and then adding the numerical value of the Severity. For example, a kernel message (Facility=0) with a Severity of Emergency (Severity=0) would have a Priority value of 0. Also, a “local use 4” message (Facility=20) with a Severity of Notice (Severity=5) would have a Priority value of 165. In the PRI of a syslog message, these values would be placed between the angle brackets as <0> and <165> respectively.

The only time a value of “0” follows the “<” is for the Priority value of “0”. Otherwise, leading “0”s MUST NOT be used.”

In the example above, we have the value of <46>. According to the above-mentioned RFC, the formula used to translate that number into something human readable is the following:

8 x facility + severity

We must now look up the result of the formula in the following matrix:

| Facility | Emergency | Alert | Critical | Error | Warning | Notice | Informational | Debug |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Kernel | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| user-level | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 |
| mail | 16 | 17 | 18 | 19 | 20 | 21 | 22 | 23 |
| system | 24 | 25 | 26 | 27 | 28 | 29 | 30 | 31 |
| security/auth | 32 | 33 | 34 | 35 | 36 | 37 | 38 | 39 |
| message | 40 | 41 | 42 | 43 | 44 | 45 | 46 | 47 |
| printer | 48 | 49 | 50 | 51 | 52 | 53 | 54 | 55 |
| network news | 56 | 57 | 58 | 59 | 60 | 61 | 62 | 63 |
| UUCP | 64 | 65 | 66 | 67 | 68 | 69 | 70 | 71 |
| clock | 72 | 73 | 74 | 75 | 76 | 77 | 78 | 79 |
| security/auth | 80 | 81 | 82 | 83 | 84 | 85 | 86 | 87 |
| FTP daemon | 88 | 89 | 90 | 91 | 92 | 93 | 94 | 95 |
| NTP | 96 | 97 | 98 | 99 | 100 | 101 | 102 | 103 |
| Log Audit | 104 | 105 | 106 | 107 | 108 | 109 | 110 | 111 |
| Log Alert | 112 | 113 | 114 | 115 | 116 | 117 | 118 | 119 |
| Clock | 120 | 121 | 122 | 123 | 124 | 125 | 126 | 127 |
| local0 | 128 | 129 | 130 | 131 | 132 | 133 | 134 | 135 |
| local1 | 136 | 137 | 138 | 139 | 140 | 141 | 142 | 143 |
| local2 | 144 | 145 | 146 | 147 | 148 | 149 | 150 | 151 |
| local3 | 152 | 153 | 154 | 155 | 156 | 157 | 158 | 159 |
| local4 | 160 | 161 | 162 | 163 | 164 | 165 | 166 | 167 |
| local5 | 168 | 169 | 170 | 171 | 172 | 173 | 174 | 175 |
| local6 | 176 | 177 | 178 | 179 | 180 | 181 | 182 | 183 |
| local7 | 184 | 185 | 186 | 187 | 188 | 189 | 190 | 191 |

So now, let’s take one step back to the customer’s question and “guess” what the “Facility” and the “Severity” are in the provided example.

Since the header value was <46>, the result was:

- Facility = message

- Severity = Informational
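The same lookup can be done arithmetically by inverting the formula: the facility is the integer quotient of the priority divided by 8, and the severity is the remainder. The sketch below is only an illustration; the function names are made up, the facility labels are copied from the matrix in this article, and the sample line passed to `parse_pri` is invented.

```python
import re

# Facility labels in numeric order (0 to 23), as named in the matrix above.
FACILITIES = [
    "Kernel", "user-level", "mail", "system", "security/auth", "message",
    "printer", "network news", "UUCP", "clock", "security/auth", "FTP daemon",
    "NTP", "Log Audit", "Log Alert", "Clock",
    "local0", "local1", "local2", "local3",
    "local4", "local5", "local6", "local7",
]
SEVERITIES = [
    "Emergency", "Alert", "Critical", "Error",
    "Warning", "Notice", "Informational", "Debug",
]


def decode_pri(pri):
    """Split a priority value into (facility name, severity name)."""
    if not 0 <= pri <= 191:
        raise ValueError(f"priority out of range: {pri}")
    facility, severity = divmod(pri, 8)  # inverse of 8 x facility + severity
    return FACILITIES[facility], SEVERITIES[severity]


def parse_pri(line):
    """Read the <PRI> value at the start of a raw syslog line."""
    match = re.match(r"<(\d{1,3})>", line)
    if match is None:
        raise ValueError("line does not start with a <PRI> header")
    return int(match.group(1))


# The value from the example capture, and the RFC's "local use 4" example.
from_capture = decode_pri(parse_pri("<46>invented sample message"))
from_rfc = decode_pri(165)
```

Here 46 divided by 8 gives 5 with remainder 6, which is row “message”, column “Informational” in the matrix.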

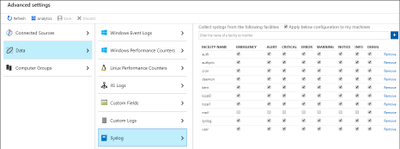

Once we understand what we are dealing with, it’s time to configure Log Analytics / Sentinel by enabling the Syslog data sources in Azure Monitor.

All we have to do is to:

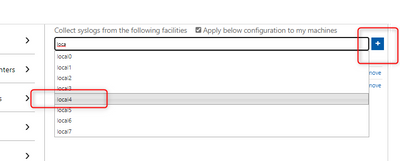

- add each facility to the workspace (by entering its name and leveraging IntelliSense).

- select which severities to import.

- click Save.

To use some real-life examples: if we want to collect the logs for FTP, the corresponding facility to enter is “ftp” and the following logs will be imported:

| Syslog file | Log Path |
| --- | --- |
| ftp.info; ftp.notice | /log/ftplog/ftplog.info |
| ftp.warning | /log/ftplog/ftplog.warning |
| ftp.debug | /log/ftplog/ftplog.debug |
| ftp.err; ftp.crit; ftp.emerg | /log/ftplog/ftplog.err |

For users, on the other hand, the facility is “user” and the imported logs will be:

| Syslog file | Log Path |
| --- | --- |
| user.info; user.notice | /log/user/user.info |
| user.warning | /log/user/user.warning |
| user.debug | /log/user/user.debug |
| user.err; user.crit; user.emerg | /log/user/user.err |

One more example: for Apache, the facility is “local0” and the logs will be:

| Syslog file | Log Path |
| --- | --- |
| local0.info; local0.notice | /log/httpd/httpd. |
| local0.warning | /log/httpd/httpd.warning |
| local0.debug | /log/httpd/httpd.debug |
| local0.err; local0.crit; local0.emerg | /log/httpd/httpd.err |

We have everything in place, but are we really sure that info is produced?

What if you would like to effectively test that data is flowing in the corresponding facility?

We can use the following sample commands for CEF and Syslog, based on the built-in logger utility:

logger -p auth.notice "Some message for the auth.log file"

logger -p local0.info "Some message for the local0.log file"

logger "CEF:0|Microsoft|MOCK|1.9.0.0|SuspiciousActivity|Demo suspicious activity|5|start=2020-12-12T18:52:58.0000000Z app=mock suser=simo msg=Demo suspicious activity externalId=2024 cs1Label=tag cs1=my test"
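To know which priority value to look for in a capture after running these commands, the `facility.severity` selector passed to `-p` can be converted with the same formula. The sketch below is an illustration that borrows the name-to-number tables from Python's standard `logging.handlers.SysLogHandler`; the `selector_to_pri` function is a made-up helper. It assumes the common `logger` default of `user.notice` when `-p` is omitted, as in the CEF command.

```python
from logging.handlers import SysLogHandler


def selector_to_pri(selector="user.notice"):
    """Convert a 'facility.severity' selector into its priority value."""
    facility_name, severity_name = selector.split(".")
    facility = SysLogHandler.facility_names[facility_name]
    severity = SysLogHandler.priority_names[severity_name]
    return facility * 8 + severity  # 8 x facility + severity


# Priority values expected for the three sample commands above.
auth_notice = selector_to_pri("auth.notice")
local0_info = selector_to_pri("local0.info")
cef_default = selector_to_pri()  # assumed default when -p is not given
```

For example, `auth.notice` is facility 4 and severity 5, so the captured header should read <37>.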

Note: pay attention to the time range when you query for this result! ;)

That’s it from my side, thank you for reading my article till the end.

Special thanks go to Bruno Gabrielli for the review.

Simone
