by Contributed | Jan 27, 2021 | Technology

This article is contributed. See the original author and article here.

Azure’s AI portfolio has options for every developer and data scientist, and we’re committed to empowering you to develop applications and machine learning models on your terms. Azure enables you to develop in your preferred language, environment, and machine learning framework, and allows you to deploy anywhere – to the cloud, on-premises, or the edge. We help improve your productivity regardless of your skill level, with code-first and low-code/no-code options that can help you accelerate the development process. We’re also devoted to empowering you with resources to help you get started with Azure AI and machine learning, grow your skills, and start building impactful solutions.

Announcing new AI & ML resource pages for developers and data scientists

Today we’re excited to announce new resource pages on Azure.com, with a rich set of content for data scientists and developers. Whether you’re new to AI and ML, or new to Azure, the videos, tutorials, and other content on these pages will help you get started.

- Learn how your peers around the world are using Azure AI to develop AI and machine learning solutions on their terms to solve business challenges.

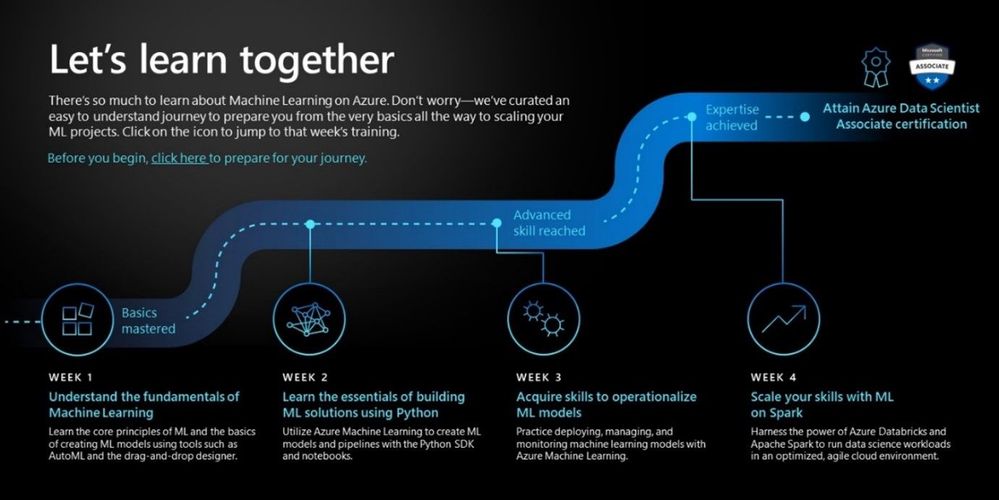

- Grow your skills with curated learning journeys to help you skill up on Azure AI and Machine Learning in 30 days. Each learning journey has videos, tutorials, and hands-on exercises to help prepare you to pass a Microsoft certification in just 4 weeks. Upon completing the learning journey, you’ll be eligible to receive 50% off a Microsoft Certification exam.

- Engage with our engineering teams and stay up to date with the latest innovations on our AI Tech Community, where you’ll find blogs, discussion forums, and more.

Pictured above: ML learning journey for developers and data scientists.

Register for the Azure AI Hackathon

Finally, put your skills to the test by entering the Azure AI Hackathon, which starts today and will run through March 22nd, 2021. Winners will be announced in early April. The most innovative and impactful projects will win prizes up to $10,000 USD. We look forward to seeing what you build with Azure AI.

Get started today

Check out the pages to get started with your 30-day learning journey, and register for the hackathon:


In this video, we look at how to use the Cloud Partner Portal (CPP) API for managing “Virtual Machine” offers in Azure Marketplace.

The document called “Cloud Partner Portal API Reference” describes a seemingly older API that existed with the previous version of the publisher portal, called Cloud Partner Portal (CPP).

If you have used CPP in the past, you know that in 2019–2020 all offers were migrated to the new Microsoft Partner Center. Therefore, we might expect that all offer types should now be manageable via some new API exposed by Partner Center. However, as the note in the doc above says, “Cloud Partner Portal APIs are integrated with and will continue working with Partner Center” for many of the offer types, including Virtual Machines. Meanwhile, the newer Partner Center Ingestion API supports only “Azure Application” (i.e., solution template and managed app) offer types (see this related article).

In this video walkthrough, we look at how to use Postman to invoke a few REST methods of the Cloud Partner Portal (CPP) API to view and update a Virtual Machine offer and see the changes reflected in the Partner Center UI.
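To make the Postman calls concrete, here is a minimal Python sketch that only builds the GET and PUT requests for a Virtual Machine offer. It does not send them.

It rests on several assumptions, all of which should be verified against the Cloud Partner Portal API Reference:

- the URL pattern `https://cloudpartner.azure.com/api/publishers/{publisherId}/offers/{offerId}`
- `api-version=2017-10-31`
- a bearer token obtained from Azure AD beforehand
- an `If-Match` header on updates

The publisher ID, offer ID, and token values are hypothetical.

```python
import json
import urllib.request

# Assumptions: base URL, api-version, and header names should be checked
# against the "Cloud Partner Portal API Reference" before use.
CPP_BASE = "https://cloudpartner.azure.com/api"
API_VERSION = "2017-10-31"


def offer_url(publisher_id: str, offer_id: str) -> str:
    """URL of a single offer resource."""
    return f"{CPP_BASE}/publishers/{publisher_id}/offers/{offer_id}?api-version={API_VERSION}"


def get_offer_request(publisher_id: str, offer_id: str, token: str) -> urllib.request.Request:
    """Build (but do not send) a request that reads the offer definition."""
    return urllib.request.Request(
        offer_url(publisher_id, offer_id),
        method="GET",
        headers={"Authorization": f"Bearer {token}"},
    )


def update_offer_request(publisher_id: str, offer_id: str, token: str,
                         offer: dict, etag: str = "*") -> urllib.request.Request:
    """Build (but do not send) a PUT that replaces the offer with the full, edited JSON body."""
    return urllib.request.Request(
        offer_url(publisher_id, offer_id),
        data=json.dumps(offer).encode("utf-8"),
        method="PUT",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
            "If-Match": etag,  # assumed: "*" skips the optimistic-concurrency check
        },
    )
```

A typical flow mirrors the video:

1. Send the GET with `urllib.request.urlopen`.
2. Edit the returned JSON.
3. Send it back with the PUT.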

You can download my Postman collection here.

Note: This video is specifically about the “Virtual Machine” offer type. If you are looking at how to manage “Azure Application” offers, please see “Using Partner Center Ingestion API for managing Azure Application offers in Azure Marketplace”.

Video Walkthrough

Tip: Play the video full screen to see all of the details.

So, should we use the Partner Center API or the Cloud Partner Portal (CPP) API?

Answer: It depends on the “offer type”

- VM Offers (and a few other types): cloudpartner.azure.com (described in this article)

- Azure Application Offers: api.partner.microsoft.com/ingestion/v1 (described in the related article)
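If you automate across both kinds of offers, the rule of thumb above can be encoded as a lookup. This Python sketch is hypothetical. It knows only the two offer types named here, so anything else raises an error; that includes the "few other types" that also use CPP, because the article does not list them. It also reflects the situation described in September 2020, which may have changed since.

```python
# Hypothetical lookup encoding the rule of thumb above (state as of September 2020).
API_BASE_BY_OFFER_TYPE = {
    "Virtual Machine": "https://cloudpartner.azure.com",
    "Azure Application": "https://api.partner.microsoft.com/ingestion/v1",
}


def api_base_for(offer_type: str) -> str:
    """Return the API base URL to use for an offer type, or raise if unknown."""
    try:
        return API_BASE_BY_OFFER_TYPE[offer_type]
    except KeyError:
        raise ValueError(
            f"No rule recorded for offer type {offer_type!r}; check the current docs"
        ) from None
```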

Originally published at https://arsenvlad.medium.com/using-cloud-partner-portal-cpp-api-for-managing-virtual-machine-offers-in-azure-marketplace-90c3787c21c4 on September 10, 2020.


First published on MSDN on Jan 01, 2013

The Memory Consumer with Many Names

Have you ever wondered what Memory grants are? What about QE Reservations? And Query Execution Memory? Workspace memory? How about Memory Reservations?

As with most things in life, complex concepts often reduce to a simple one: all these names refer to the same memory consumer in SQL Server: memory allocated during query execution for Sort and Hash operations (bulk copy and index creation fall into the same category but are a lot less common).

Allow me to provide some larger context: during its lifetime a query may request memory from different “buckets” or clerks, depending on what it needs to do. For example, when a query is parsed and compiled initially, it will consume compile or optimizer memory. Once the query is compiled, that memory is released and the resulting query plan needs to be stored in cache. For that, the plan will consume procedure cache memory and will stay in that cache until the server is restarted or memory pressure occurs. At that point, the query is ready for execution. If the query happens to be doing any sort operations or hash match (join or aggregates), then it will first reserve and later use part or all of the reserved memory to store sorted results or hash buckets. These memory operations during the execution of a query are what all these many names refer to.

Terminology and Troubleshooting Tools

Let’s review the different terms that you may encounter referring to this memory consumer. Again, all these describe concepts that relate to the same memory allocations:

Query Execution Memory (QE Memory): This term is used to highlight the fact that sort/hash memory is used during the execution of a query and is the largest memory consumption that may come from a query during execution.

Update (9/17): QE Memory is exactly the type of memory that Resource Governor limits, when Resource Governor is used. See Resource Pools Max and Min Memory percent.

Query Execution (QE) Reservations or Memory Reservations: When a query needs memory for sort/hash operations, during execution it will make a reservation request based on the original query plan, which contained a sort or a hash operator. Then, as the query executes, it requests the memory and SQL Server will grant that request partially or fully depending on memory availability. There is a memory clerk (accountant) named ‘MEMORYCLERK_SQLQERESERVATIONS’ that keeps track of these memory allocations (check out DBCC MEMORYSTATUS or sys.dm_os_memory_clerks).

Memory Grants: When SQL Server grants the requested memory to an executing query, it is said that a memory grant has occurred.

Two Perfmon counters track grants:

- Memory Grants Outstanding shows how many queries have been granted the requested memory.
- Memory Grants Pending shows how many queries have requested sort/hash memory and have to wait for it because the Query Execution memory has run out (the QE Reservation memory clerk has given all of it away).

These two counters only display the count of memory grants and do not account for size. That is, one query alone could have consumed, say, 4 GB of memory to perform a sort, but that will not be reflected in either of these.

To view individual requests, and the memory size they have requested and have been granted, you can query the sys.dm_exec_query_memory_grants DMV. This shows information about currently executing queries only, not historical ones.

In addition, you can capture the actual query execution plan and find an XML element called <QueryPlan>, which contains an attribute showing the size of the memory grant (in KB), as in the following example:

<QueryPlan DegreeOfParallelism="8" MemoryGrant="2009216"

Another DMV, sys.dm_exec_requests, contains a column, granted_query_memory, that reports the size in 8-KB pages. For example, a value of 1000 means 1000 × 8 KB, or 8,000 KB of memory granted.
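The two numbers above are easy to confuse, because the plan attribute is already in KB while the DMV column counts 8-KB pages. The Python sketch below is a hypothetical helper with two jobs: it pulls every MemoryGrant value out of a saved showplan XML, and it converts granted_query_memory pages to KB.

The sample plan is a trimmed, made-up fragment that reuses only the attribute values shown above. Newer SQL Server versions may report grant details in a MemoryGrantInfo child element instead, which this sketch does not read.

```python
import xml.etree.ElementTree as ET

PAGE_SIZE_KB = 8  # granted_query_memory is reported in 8-KB pages


def plan_memory_grants_kb(showplan_xml: str) -> list:
    """Collect MemoryGrant (KB) attributes from every QueryPlan element in a showplan."""
    grants = []
    for elem in ET.fromstring(showplan_xml).iter():
        # Showplan tags are namespaced, e.g. "{...showplan}QueryPlan"; compare the local name only.
        if elem.tag.rsplit("}", 1)[-1] == "QueryPlan" and "MemoryGrant" in elem.attrib:
            grants.append(int(elem.attrib["MemoryGrant"]))
    return grants


def granted_pages_to_kb(pages: int) -> int:
    """Convert sys.dm_exec_requests.granted_query_memory (8-KB pages) to KB."""
    return pages * PAGE_SIZE_KB


# Hypothetical, heavily trimmed plan fragment.
SAMPLE_PLAN = (
    '<ShowPlanXML xmlns="http://schemas.microsoft.com/sqlserver/2004/07/showplan">'
    "<BatchSequence><Batch><Statements><StmtSimple>"
    '<QueryPlan DegreeOfParallelism="8" MemoryGrant="2009216"/>'
    "</StmtSimple></Statements></Batch></BatchSequence></ShowPlanXML>"
)

print(plan_memory_grants_kb(SAMPLE_PLAN))  # [2009216]
print(granted_pages_to_kb(1000))           # 8000
```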

Workspace Memory: This is yet another term that describes the same memory. Often you will see it in the Perfmon counter Granted Workspace Memory (KB), which reflects the overall amount of memory currently used for sort/hash operations, in KB. The Maximum Workspace Memory (KB) counter reports the maximum amount of workspace memory available to grant to executing queries. In my opinion, the term Workspace Memory is a legacy one used to describe this memory allocator in SQL Server 7.0 and 2000, and it was later superseded by the memory clerk terminology in SQL Server 2005.

Resource Semaphore: To add more complications to this concept, SQL Server uses a thread synchronization object called a semaphore to keep track of how much memory has been granted. The idea is this: if SQL Server runs out of workspace memory/QE memory, then instead of failing the query execution with an out-of-memory error, it will cause the query to wait for memory.

In this context, the Memory Grants Pending Perfmon counter makes sense. So do the wait_time_ms, granted_memory_kb = NULL, and timeout_sec columns in sys.dm_exec_query_memory_grants.

BTW, this and compile memory are the only places in SQL Server where a query will actually be made to wait for memory if it is not available. In all other cases, the query will fail outright with error 701 (out of memory).
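As a mental model only, the following Python toy mimics the behavior described above. A request either receives its memory immediately or joins a first-in, first-out wait queue, and it never fails for lack of memory.

The class and its two counters are hypothetical names that loosely mirror Memory Grants Outstanding and Memory Grants Pending. SQL Server's real resource semaphores are considerably more involved. For example, the timeout_sec column above shows that real waits can time out, while this toy waits forever.

```python
from collections import deque


class ToyResourceSemaphore:
    """Toy model: a memory request is granted at once or queued, never failed."""

    def __init__(self, total_kb: int):
        self.available_kb = total_kb
        self.pending = deque()  # (query_id, kb) requests waiting, oldest first
        self.granted = {}       # query_id -> kb currently granted

    def request(self, query_id: str, kb: int) -> bool:
        """Grant now and return True, or enqueue the request and return False."""
        if not self.pending and kb <= self.available_kb:
            self._grant(query_id, kb)
            return True
        self.pending.append((query_id, kb))
        return False

    def release(self, query_id: str) -> None:
        """Return a finished query's memory, then wake waiters in arrival order."""
        self.available_kb += self.granted.pop(query_id)
        while self.pending and self.pending[0][1] <= self.available_kb:
            waiting_id, kb = self.pending.popleft()
            self._grant(waiting_id, kb)

    def _grant(self, query_id: str, kb: int) -> None:
        self.available_kb -= kb
        self.granted[query_id] = kb

    @property
    def grants_outstanding(self) -> int:
        return len(self.granted)

    @property
    def grants_pending(self) -> int:
        return len(self.pending)
```

For example, with 100 KB of workspace, a 60 KB request is granted and a following 50 KB request waits. When the first query releases its memory, the waiting request is granted.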

There is a wait type in SQL Server that shows that a query is waiting for a memory grant: RESOURCE_SEMAPHORE. As the documentation states, this “occurs when a query memory request cannot be granted immediately due to other concurrent queries. High waits and wait times may indicate excessive number of concurrent queries, or excessive memory request amounts.” You will observe this wait type in sys.dm_exec_requests for individual sessions. Here is a KB article, written primarily for SQL Server 2000, that describes how to troubleshoot this issue and also what happens when a query finally “gets tired” of waiting for a memory grant.

Why do you Care About Memory Grants or Workspace Memory or Query Execution Memory, or whatever you call it?

Over the years of troubleshooting performance problems, I have seen this to be one of the most common memory-related issues. Applications often execute seemingly simple queries that end up wreaking tons of performance havoc on the SQL Server side because of huge sort or hash operations. These not only end up consuming a lot of SQL Server memory during execution, but also cause other queries to have to wait for memory to become available – thus the performance bottleneck.

Using the tools I have outlined above (DMVs, Perfmon counters and actual query plan), you can investigate which queries are large-grant consumers and can have those tuned/re-written where possible.

What Can a Developer Actually Do about Sort/Hash Operations?

Speaking of re-writing queries, here are some things to look for in a query that may lead to large memory grants.

Reasons why a query would use a SORT operator (not an all-inclusive list):

ORDER BY (T-SQL)

GROUP BY (T-SQL)

DISTINCT (T-SQL)

Merge Join operator selected by the optimizer, where one of the Merge Join inputs has to be sorted because no clustered index is available on the join column.

Reasons why a query would use a Hash Match operator (not an all-inclusive list):

JOIN (T-SQL) – if SQL Server ends up performing a Hash Join. Typically, a lack of good indexes may lead to the most expensive of the join operators, the Hash Join. Look at the query plan.

DISTINCT (T-SQL) – a Hash Aggregate could be used to perform the distinct. Look at the query plan.

SUM/AVG/MAX/MIN (T-SQL) – any aggregate operation could potentially be performed as a Hash Aggregate. Look at the query plan.

UNION – a Hash Aggregate could be used to remove the duplicates.
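If you want a quick first pass over query text before opening the plans, a crude keyword scan can flag the constructs listed above. The following Python sketch is a hypothetical helper, not a Microsoft tool, and it has clear limits:

- It does not skip comments or string literals.
- It flags every JOIN, even ones the optimizer runs as Nested Loops, which typically need no grant.
- It says nothing about grant size.

The actual query plan remains the real test.

```python
import re

# Rough keyword patterns for constructs that may produce a Sort or Hash Match
# operator. Only the actual query plan is authoritative.
SUSPECT_PATTERNS = {
    "ORDER BY": r"\border\s+by\b",
    "GROUP BY": r"\bgroup\s+by\b",
    "DISTINCT": r"\bdistinct\b",
    "UNION": r"\bunion\b(?!\s+all\b)",  # UNION ALL does not remove duplicates
    "JOIN": r"\bjoin\b",
    "SUM/AVG/MAX/MIN": r"\b(?:sum|avg|max|min)\s*\(",
}


def memory_grant_suspects(sql: str) -> list:
    """Return the names of constructs found in `sql` that may need a memory grant."""
    text = sql.lower()
    return [name for name, pattern in SUSPECT_PATTERNS.items() if re.search(pattern, text)]


print(memory_grant_suspects("SELECT DISTINCT a FROM t JOIN u ON t.id = u.id ORDER BY a"))
# ['ORDER BY', 'DISTINCT', 'JOIN']
```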

Knowing these common reasons can help an application developer eliminate, as much as possible, the large memory grant requests coming to SQL Server.

As always, basic query tuning starts with checking whether your queries have appropriate indexes to help them reduce reads and minimize or eliminate large sorts where possible.

Update: Since SQL Server 2012 SP3, there are query hints that allow you to control the size of your memory grant. You can read about them in New query memory grant options are available (min_grant_percent and max_grant_percent) in SQL Server 2012. Here is an example:

-- Grant this query at least 3% and at most 5% of the maximum memory grant limit
SELECT * FROM Table1 ORDER BY Column1 OPTION (MIN_GRANT_PERCENT = 3, MAX_GRANT_PERCENT = 5);

Memory Grant Internals:

Here is a great blog post on Memory Grant Internals

Summary of Ways to Deal with Large Grants:

- Re-write queries

- Use Resource Governor

- Find appropriate indexes for the query which may reduce the large number of rows processed and thus change the JOIN algorithms (see Database Engine Tuning Advisor and Missing Indexes DMVs)

- Use OPTION (min_grant_percent = XX, max_grant_percent = XX ) hint

- SQL Server 2017 and 2019 introduced adaptive query processing, which includes a memory grant feedback mechanism that adjusts the memory grant size of a query based on how much memory its previous executions actually used.

Namaste!

Joseph


People who are looking for jobs often ask me whether it makes sense to get certified. While certification is neither a requirement nor a guarantee, it can definitely help you land a job in a team doing cloud computing. I also often use certifications to make sure I learn the right things. So getting certified is a great thing. Now the question is: how do I prepare for a Microsoft Azure certification exam?

Since I have passed a couple of the Azure exams, I would like to share how I prepared for and passed them. Hopefully, this will make it easier for you to pass them as well.

Passing exams is all about having the right strategy and preparation. If you are looking for tips and tricks on how to take a Microsoft exam, check out my blog post on that topic.

Choose the right Azure exam and certification

To begin with, make sure you choose the certification path and exam that are right for you. There are a lot of different exams and industry certifications out there. Microsoft’s role-based certifications are aligned to relevant market and industry job roles, which makes it easier to find the right one. It makes a lot of sense to pick the one that fits where you are in your career and where you’re going. I wrote a blog post that gives you an overview of the different Azure certification paths to help you pick one.

Identify the certification of your interest to find the required exams. To browse all the Microsoft Certification exams, check out the official website.

Start Small

If you are not 100% sure where and with which exam to start, I recommend that you start small by taking the AZ-900 Azure Fundamentals exam. This will help you understand how Microsoft exams work without going too deep into the technology. Having experience taking Microsoft exams helps you focus on the actual topics rather than on the testing process. Also, make sure that you look at the special offers; you can find more information on them further down.

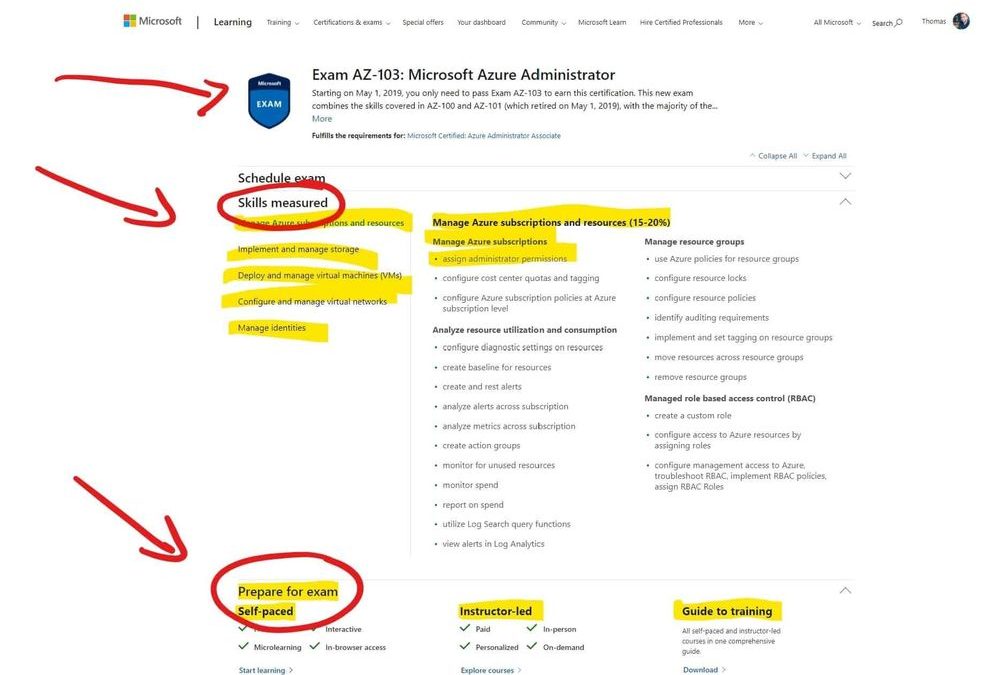

Know the exam content, read what is measured

The first thing to do, while picking the exam and right after, is to see what the exam asks. Every Microsoft exam page lists the “skills measured” in the exam. This list is usually very accurate and helps you focus on and study the right content. The page even lists available training and courses to prepare for the exam.

Microsoft Azure Exam Page – Skills measured and prepare for the exam

Understand the question types

Understanding the exam formats and question types before taking the exam can help you a lot. Microsoft does not specify exactly which question types and exam formats appear in each exam, but you can find a list of exam and question samples in this YouTube playlist. Knowing which question types to expect in your exam will make it easier for you to answer them and get the most points per question.

Take free hands-on learning courses on Microsoft Learn

Microsoft Learn was introduced at Ignite 2018 as a free learning platform for a lot of different Microsoft technologies, not just Azure. Microsoft Learn provides you with various learning paths depending on your job role or the skills you are looking for. Most of the learning paths give you a hands-on learning opportunity so that you can develop practical skills through interactive training. And it is free! You get instant in-browser access to Microsoft tools and modules, no credit card required.

Microsoft Learn – Up your game with a module or learning path tailored to today’s IT Pro, developer, and technology masterminds and designed to prepare you for industry-recognized Microsoft certifications.

Hands-on experience

The best way to learn and pass the Microsoft Azure exams, or basically to learn anything, is most of the time through real hands-on experience with the technology. While Microsoft Learn gives you some free hands-on learning modules, there is also an Azure free account. The Azure free account will provide you with 12 months of free Azure services. You can find out more here. Make sure you dive into the skills measured and try the tutorials in Microsoft Docs.

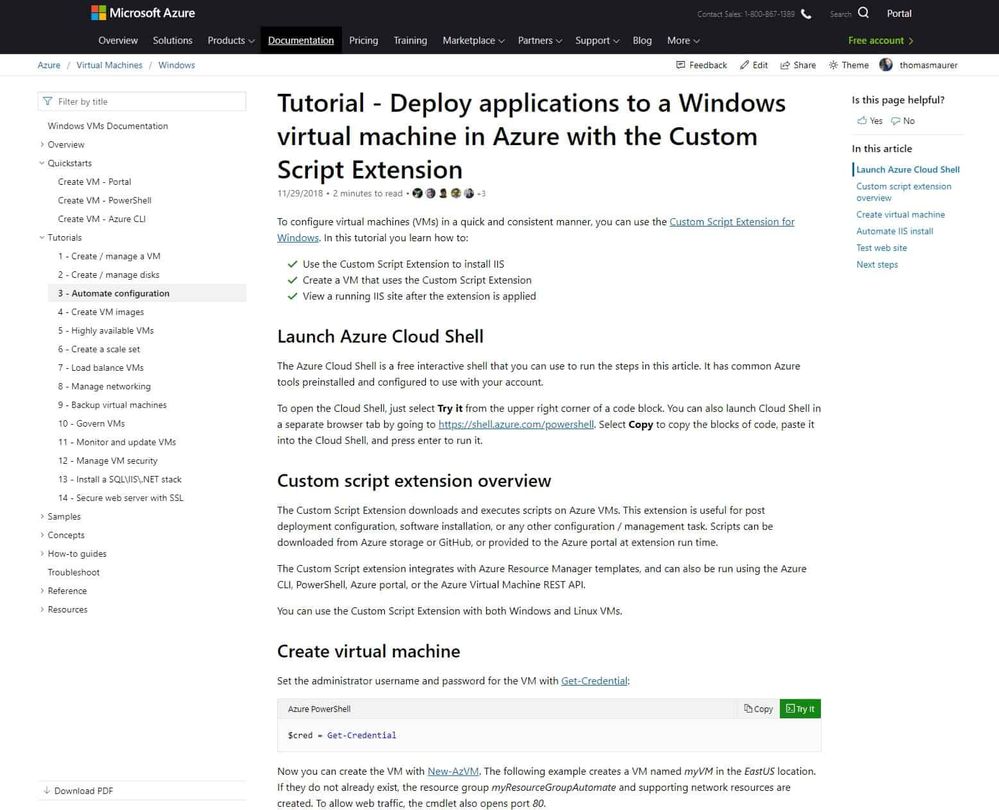

Read the Microsoft Docs

Next to Microsoft Learn and hands-on experience, this is one of my main recommendations to prepare for a Microsoft Azure exam: read the Microsoft Azure documentation. Trust me on this, Azure and the topics that come up in the exams are very well documented. As mentioned, read the skills measured on the exam page, look up the specific Microsoft Docs pages, read through them, and try out the tutorials.

And have a look at my Azure Certification Exam Study Guides, which will help you find the right documentation.

Video courses and training

There are a lot of different video training courses out there that allow you to do video-based Azure exam preparation. To mention a few: LinkedIn Learning, Pluralsight, Whizlabs, ITPro.TV, Udemy, A Cloud Guru, CloudSkills.io, and many more! Just browse through the different offers and read the reviews to find the best match for you. There are also a lot of Microsoft Learning Partners that offer online courses.

Choose instructor-led courses and learning partners

As you can see, there are a lot of self-study learning materials out there to prepare for and pass the Azure exams. However, the classroom experience can be super beneficial and efficient, especially with the right trainer. You can find a list of official Microsoft Learning Partners with Microsoft Certified Trainers for your country here. Many of them offer courses for different technologies, with in-person or online training.

Books

If you prefer to learn and prepare for an exam using books, Microsoft offers books written by the experts at Microsoft Press. There are some excellent books that will help you learn more and prepare and pass the Microsoft Azure exams. However, if you get a hard copy of the book, it won’t be updated in the future, to reflect changes in technology or in the exams.

Take a practice exam

Some of the exams also have official practice exams available. These are great for seeing where you stand in the learning process and which topics you need to spend a little more time on. I highly recommend that you only use the official practice exams and don’t use brain dumps. Besides being a way of cheating on the exam and on yourself, brain dumps are often simply wrong and contain a lot of mistakes. You can find Microsoft’s official practice tests here.

Study groups

If you have a couple of colleagues, friends, or people you met at an Azure User group meetup, it can help to build a study group. Study groups don’t just help you to get more structure in your learning. They also help you to gain a new perspective on the study material and reduce procrastination.

Conclusion

If you want to know more about how you can learn and get started with Microsoft Azure, check out my blog: How to learn Microsoft Azure in 2021.

I hope this gives you an overview of how you can prepare for a Microsoft Azure Certification exam. If you have any questions, please let me know in the comments.


We are strong supporters of automation, and fully acknowledge the value of automating repetitive actions and being able to adjust technology to the specific security practices and processes used by our customers and partners. This is what motivates us in developing and enriching our API layer. But as we all know, with great scale comes great responsibility, and here efficiency is the name of the game.

This blog series will provide you with best practices and recommendations on how to use the different Microsoft 365 Defender features and APIs most efficiently, to power your automation and achieve the outcome you desire.

In this first blog we will focus on two aspects:

- Don’t automatically default to the Advanced hunting API

- If you do need to use the Advanced hunting API for your scenario, how to use it in the most efficient way.

When to use the Advanced hunting API and when to use other APIs / features?

The Advanced hunting API is a very robust capability that enables retrieving raw data from all Microsoft 365 Defender products (covering endpoints, identities, applications, docs, and email). It can also be leveraged to generate statistics on entities and to translate identifiers, e.g. to find which machine IP X.X.X.X belongs to. While this is a great feature with broad reach across your data, it can also be challenging to maintain, because:

- More team members need to know the internals of KQL to leverage it, and

- It consumes the hunting resource pool where there is no real need for that.

Below are a few examples of how we have developed a dedicated API to provide you with the intended answer in a single API call:

1. You have a 3rd party alert on an IP address. You would like to see which device this IP was assigned to at that time and get more information on this device. Easy! You can do it as follows:

a. First, use the Find devices by internal IP API, which finds devices seen with the requested internal IP in the time range of 15 minutes before and after a given timestamp.

b. Once you have the device ID, you can use the Get machine by ID API to get more details on the device, including its OS platform, MDE groups, tags, and exposure level.
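The two steps chain naturally. The Python sketch below only builds the URLs and extracts device IDs from a parsed response. Authentication (an Azure AD bearer token) and the HTTP calls are omitted.

It assumes the following, all of which should be checked against the Microsoft Defender for Endpoint API documentation:

- the public host https://api.securitycenter.microsoft.com
- the OData-style path `machines/findbyip(ip='<ip>',timestamp=<UTC time>)`
- a response whose `value` array holds objects with an `id` field

```python
from datetime import datetime, timezone

# Assumed public Defender for Endpoint API base; verify in the API documentation.
MDE_BASE = "https://api.securitycenter.microsoft.com/api"


def find_devices_by_ip_url(ip: str, timestamp: datetime) -> str:
    """URL for 'Find devices by internal IP' around a timezone-aware timestamp."""
    if timestamp.tzinfo is None:
        raise ValueError("timestamp must be timezone-aware")
    ts = timestamp.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
    return f"{MDE_BASE}/machines/findbyip(ip='{ip}',timestamp={ts})"


def machine_ids(find_response: dict) -> list:
    """Device IDs from a parsed JSON response shaped like {"value": [{"id": ...}, ...]}."""
    return [device["id"] for device in find_response.get("value", [])]


def get_machine_url(machine_id: str) -> str:
    """URL for 'Get machine by ID'."""
    return f"{MDE_BASE}/machines/{machine_id}"
```

For each ID returned by step a, a request to `get_machine_url(id)` would fetch the OS platform, tags, and exposure level described in step b.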

2. You have a malicious domain IOC and you would like to see its prevalence in your organization.

Easy! You can use the Get domain statistics API for that. It retrieves the organization statistics on the given domain for the lookback time you configure (by default, the last 30 days), based on Microsoft Defender for Endpoint (MDE), including:

a. Prevalence

b. First seen

c. Last seen

For example: https://wdatpapi-eus-stg.cloudapp.net/api/domains/microsoft.com/stats?lookBackHours=24
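If you call this API from a script, the URL can be assembled from the domain and the optional lookback. This Python sketch assumes the public API host https://api.securitycenter.microsoft.com rather than the host shown in the example above. Check the Get domain statistics documentation for the host that applies to your tenant.

```python
from typing import Optional
from urllib.parse import quote, urlencode

# Assumed public Defender for Endpoint API base; verify in the API documentation.
MDE_BASE = "https://api.securitycenter.microsoft.com/api"


def domain_stats_url(domain: str, lookback_hours: Optional[int] = None) -> str:
    """URL for 'Get domain statistics'; omit lookback_hours to use the default window."""
    url = f"{MDE_BASE}/domains/{quote(domain, safe='')}/stats"
    if lookback_hours is not None:
        url += "?" + urlencode({"lookBackHours": lookback_hours})
    return url
```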

3. You have a list of IOCs, and you would like to make sure you are alerted if there is any activity associated with them in your organization.

To implement this scenario you can use Indicators:

a. Add IOCs to MDE indicators via Indicators API and set the required action (“Alert” or “Alert and Block”).

b. To check whether any of the IOCs were observed in the organization in the last 30 days, you can run a single Advanced hunting query:

// See if any process created a file matching a hash on the list
let covidIndicators = (externaldata(TimeGenerated:datetime, FileHashValue:string, FileHashType:string)
    [@"https://raw.githubusercontent.com/Azure/Azure-Sentinel/master/Sample%20Data/Feeds/Microsoft.Covid19.Indicators.csv"]
    with (format="csv"))
    | where FileHashType == 'sha256'; //and TimeGenerated > ago(1d);
covidIndicators
| join (DeviceFileEvents
    | where Timestamp > ago(30d)      // limit to the 30-day lookback
    | where ActionType == 'FileCreated'
    ) on $left.FileHashValue == $right.SHA256
| take 100                           // cap the output after matching, not before
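For step a above, the request body for adding a file-hash indicator might look like the following. This Python sketch only builds the JSON body; authentication and sending are left out.

It assumes the following, and accepted values have changed over time, so check the current Indicators API documentation:

- the Submit Indicator endpoint `POST https://api.securitycenter.microsoft.com/api/indicators`
- the field names `indicatorValue`, `indicatorType`, `action`, `title`, and `description`
- `AlertAndBlock` as the API spelling of “Alert and Block”

```python
import json

# Assumed endpoint and field names; verify against the Indicators API docs.
INDICATORS_URL = "https://api.securitycenter.microsoft.com/api/indicators"
SUPPORTED_ACTIONS = {"Alert", "AlertAndBlock"}  # the two actions named in the text


def sha256_indicator_body(sha256: str, title: str, description: str,
                          action: str = "Alert") -> str:
    """Build the JSON body for a POST to INDICATORS_URL for one SHA-256 IOC."""
    if action not in SUPPORTED_ACTIONS:
        raise ValueError(f"unsupported action: {action}")
    if len(sha256) != 64:
        raise ValueError("expected a 64-character SHA-256 value")
    return json.dumps({
        "indicatorValue": sha256.lower(),
        "indicatorType": "FileSha256",
        "action": action,
        "title": title,
        "description": description,
    })
```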

How to optimize your Advanced hunting queries

Once you determine that the only way to resolve your scenario is to use Advanced hunting queries, you should write efficient, optimized queries so that they execute faster and consume fewer resources. Queries may be throttled or limited based on how they’re written, to limit the impact on other sessions. You can read all our best-practice recommendations, and also watch this webcast to learn more. In this section we will highlight a few recommendations to improve query performance.

1. Always use time filters as your first query condition. Most of the time you will use Advanced hunting to get more information on an entity following an incident, so make sure to insert the time of the incident and narrow your lookback time. The shorter the lookback time, the faster the query will execute.

There are multiple ways to insert time filters into your query.

Scenario example: get all logon activities of the Finance department’s users in Office 365.

// Filter timestamp in the query using "ago"
IdentityInfo
| where Department == "Finance"
| distinct AccountObjectId
| join (IdentityLogonEvents | where Timestamp > ago(10d)) on AccountObjectId
| where Application == "Office 365"

// Filter timestamp in the query using "between"
let selectedTimestamp = datetime(2020-11-12T19:35:03.9859771Z);
IdentityInfo
| where Department == "Finance"
| distinct AccountObjectId
| join (IdentityLogonEvents | where Timestamp between ((selectedTimestamp - 2h) .. (selectedTimestamp + 2h))) on AccountObjectId
| where Application == "Office 365"

In general, always filter your query by adding where conditions, so that it is accurate and queries only the exact data you are looking for.
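If your automation builds these queries from an incident timestamp, a small helper can generate the between filter. This Python sketch is hypothetical. It assumes the incident time is a timezone-aware datetime, and it formats whole seconds in UTC, dropping the fractional seconds shown in the example above.

```python
from datetime import datetime, timedelta, timezone

KQL_DATETIME_FMT = "%Y-%m-%dT%H:%M:%SZ"


def kql_time_window(center: datetime, hours: float = 2) -> str:
    """Return a KQL 'between' filter covering +/- `hours` around `center` (UTC)."""
    if center.tzinfo is None:
        raise ValueError("center must be timezone-aware")
    delta = timedelta(hours=hours)
    start = (center - delta).astimezone(timezone.utc).strftime(KQL_DATETIME_FMT)
    end = (center + delta).astimezone(timezone.utc).strftime(KQL_DATETIME_FMT)
    return f"Timestamp between (datetime({start}) .. datetime({end}))"


incident = datetime(2020, 11, 12, 19, 35, 3, tzinfo=timezone.utc)
query = f"IdentityLogonEvents | where {kql_time_window(incident)}"
```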

2. Only use “join” when it is necessary for your scenario.

a. If you are using a join, try to reduce the dataset before joining to limit the join size. Filter the table on the left side, to reduce its size as much as you can.

b. Use an accurate key for the join.

c. Choose the join flavor (kind) according to your scenario.

In the following example we want to see all details of emails and their attachments.

The following example is an inefficient query, because:

a. The EmailEvents table is the largest table; it should never be on the left side of the join without substantial filtering.

b. Join kind=leftouter returns all emails, including ones without attachments, which makes the result set very large. We don’t need to see emails without attachments, so this kind of join is not the right one for this scenario.

c. The join key, NetworkMessageId, is not accurate. It is an email identifier, but the same email can be sent to multiple recipients.

EmailEvents
| project NetworkMessageId, Subject, Timestamp, SenderFromAddress, SenderIPv4, RecipientEmailAddress, AttachmentCount
| join kind=leftouter (EmailAttachmentInfo
    | project NetworkMessageId, FileName, FileType, MalwareFilterVerdict, SHA256, RecipientEmailAddress)
    on NetworkMessageId

This query should be changed and improved to the following query by:

a. Putting the smaller table, EmailAttachmentInfo, on the left.

b. Increasing join accuracy using join kind=inner

c. Using an accurate key for the join (NetworkMessageId, RecipientEmailAddress)

d. Filtering the EmailEvents table to only include emails with attachments before the join.

// Smaller table on the left side, with kind=inner: the default join (innerunique)
// removes left-side duplicates, so if a single email has more than one attachment we would miss some of them
EmailAttachmentInfo
| project NetworkMessageId, FileName, FileType, MalwareFilterVerdict, SHA256, RecipientEmailAddress
| join kind=inner
    (EmailEvents
    | where AttachmentCount > 0
    | project NetworkMessageId, Subject, Timestamp, SenderFromAddress, SenderIPv4, RecipientEmailAddress, AttachmentCount)
    on NetworkMessageId, RecipientEmailAddress
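The comment in the query about the default innerunique flavor is easy to gloss over, so here is a small Python model of the two flavors on hypothetical rows. It is a simplified sketch of the semantics, not how Kusto executes joins. In particular, this model keeps the first left row per key, whereas Kusto may keep any one.

```python
def inner_join(left, right, keys):
    """kind=inner: every matching (left, right) pair is kept."""
    return [
        {**l_row, **r_row}
        for l_row in left
        for r_row in right
        if all(l_row[k] == r_row[k] for k in keys)
    ]


def innerunique_join(left, right, keys):
    """kind=innerunique (the default): keep one left row per key, then inner-join."""
    seen, deduped = set(), []
    for row in left:
        key = tuple(row[k] for k in keys)
        if key not in seen:  # first row wins here; Kusto may keep any one
            seen.add(key)
            deduped.append(row)
    return inner_join(deduped, right, keys)


# Hypothetical rows: one email to one recipient carrying two attachments.
attachments = [
    {"NetworkMessageId": "m1", "RecipientEmailAddress": "a@contoso.com", "FileName": "invoice.pdf"},
    {"NetworkMessageId": "m1", "RecipientEmailAddress": "a@contoso.com", "FileName": "macro.docm"},
]
emails = [{"NetworkMessageId": "m1", "RecipientEmailAddress": "a@contoso.com", "Subject": "Invoice"}]
KEYS = ("NetworkMessageId", "RecipientEmailAddress")

print(len(inner_join(attachments, emails, KEYS)))        # 2
print(len(innerunique_join(attachments, emails, KEYS)))  # 1
```

With kind=inner both attachments survive the join. With innerunique, one of them silently disappears.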

3. When you want to search for an attribute/entity in multiple tables, use the search in operator instead of using union. For example, if you want to search for a list of URLs, use the following query:

let ListOfIoc = dynamic(["t20saudiarabia@outlook.sa", "t20saudiarabia@hotmail.com", "t20saudiarabia@gmail.com", "munichconference@outlook.com",
    "munichconference@outlook.de", "munichconference1962@gmail.com", "ctldl.windowsupdate.com"]);
search in (DeviceNetworkEvents, DeviceFileEvents, DeviceEvents, EmailUrlInfo)
    Timestamp > ago(1d) and
    (RemoteUrl in (ListOfIoc) or FileOriginUrl in (ListOfIoc) or FileOriginReferrerUrl in (ListOfIoc))

4. Using “has” is better than “contains”: when looking for full tokens, “has” is more efficient, since it doesn’t look for substrings.

Instead of using “contains”:

DeviceNetworkEvents
| where RemoteUrl contains "microsoft.com"
| take 50

Use “has”:

DeviceNetworkEvents
| where RemoteUrl has "microsoft.com"
| take 50

If possible, use case-sensitive operators:

DeviceNetworkEvents
| where RemoteUrl has_cs "microsoft.com"
| take 50
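To see why the operators above can return different rows, here is a simplified Python model of their matching semantics. It is an assumption-laden sketch, not Kusto's implementation:

- It treats runs of letters and digits as terms.
- It ignores Kusto's finer tokenization rules.
- It says nothing about the term index that actually makes “has” faster.

```python
import re


def _terms(text: str) -> list:
    # Simplified tokenizer: runs of ASCII letters and digits are terms.
    return re.findall(r"[0-9A-Za-z]+", text)


def contains(haystack: str, needle: str) -> bool:
    """Case-insensitive substring match, like KQL 'contains'."""
    return needle.lower() in haystack.lower()


def has(haystack: str, needle: str, case_sensitive: bool = False) -> bool:
    """Whole-term match, like KQL 'has' (or 'has_cs' when case_sensitive=True)."""
    hay, need = _terms(haystack), _terms(needle)
    if not case_sensitive:
        hay, need = [t.lower() for t in hay], [t.lower() for t in need]
    n = len(need)
    return n > 0 and any(hay[i:i + n] == need for i in range(len(hay) - n + 1))


url = "https://notmicrosoft.com/login"
print(contains(url, "microsoft.com"), has(url, "microsoft.com"))  # True False
```

The substring match fires on a look-alike domain, while the term match does not.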

For more information about Advanced hunting and the features discussed in this article, read:

As always, we’d love to know what you think. Leave us feedback directly on Microsoft 365 security center or start a discussion in Microsoft 365 Defender community.
