by Contributed | Nov 18, 2020 | Azure, Microsoft, Technology

This article is contributed. See the original author and article here.

Data format mappings (for example, Parquet, JSON, and Avro) in Azure Data Explorer now support simple and useful ingest-time transformations. For scenarios that need more complex processing at ingest time, use an update policy, which lets you define lightweight processing with a KQL expression.

In addition, the 1-click ingestion experience now lets you pick transformation logic from a list of supported transformations and apply it to one or more columns.

To learn more, read about mapping transformations.
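As an illustration, here is a minimal sketch of a JSON ingestion mapping that applies the DateTimeFromUnixSeconds transformation to one column while copying another column unchanged. The table name Events, the mapping name EventsMapping, the column names, and the JSON paths are hypothetical; the script only builds the control-command text, which you would then run against your database (for example, in the Azure Data Explorer web UI).

```powershell
# Hypothetical table, mapping, column names, and JSON paths; DateTimeFromUnixSeconds is
# one of the transformations Azure Data Explorer supports in data format mappings.
$mapping = @(
    [ordered]@{
        column     = 'Timestamp'
        Properties = [ordered]@{ Path = '$.ts'; Transform = 'DateTimeFromUnixSeconds' }
    }
    [ordered]@{
        column     = 'Message'
        Properties = [ordered]@{ Path = '$.msg' }   # no transformation: value is copied as-is
    }
)

# -Depth keeps the nested Properties objects from being truncated; -Compress gives one line.
$mappingJson = ConvertTo-Json -InputObject $mapping -Depth 5 -Compress

# Control command to run against the database; "" inside the string yields a literal double quote.
$command = ".create table Events ingestion json mapping ""EventsMapping"" '$mappingJson'"
$command
```

The transformation is declared per column inside the mapping, so no separate processing step is needed for this kind of simple conversion; anything more involved belongs in an update policy.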

by Contributed | Nov 18, 2020 | Technology

This article is contributed. See the original author and article here.

Before accepting a Git pull request, we normally need to test the complete set of changes. In this article, we look at a practical approach to handling pull requests and testing them locally.

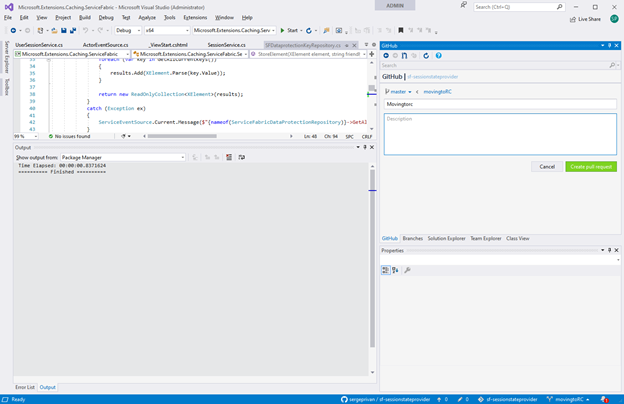

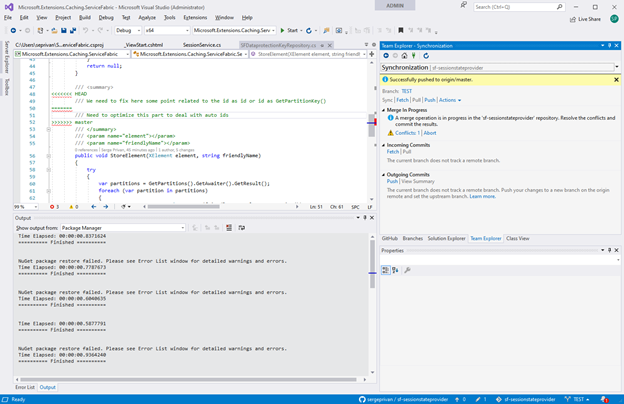

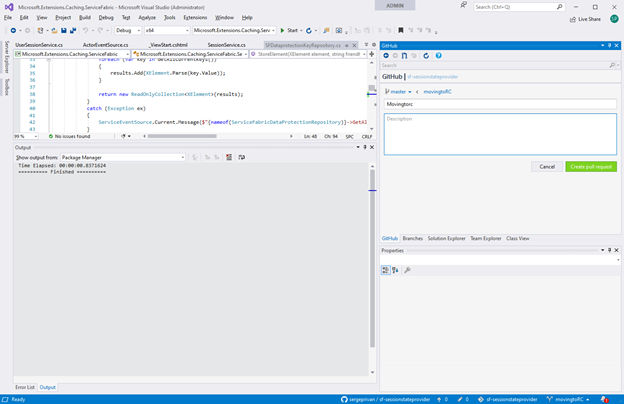

First, install and set up the GitHub Extension for Visual Studio (https://visualstudio.github.com/). We won't go through the setup here, because the process is well documented; instead, we start by creating a pull request in Visual Studio:

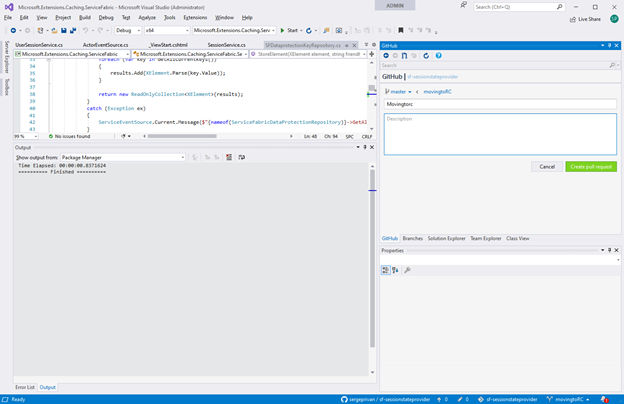

The pull request is created from a branch. In this case I used the movingtoRC branch, because I want someone to review my changes and confirm that I can merge them into the main branch:

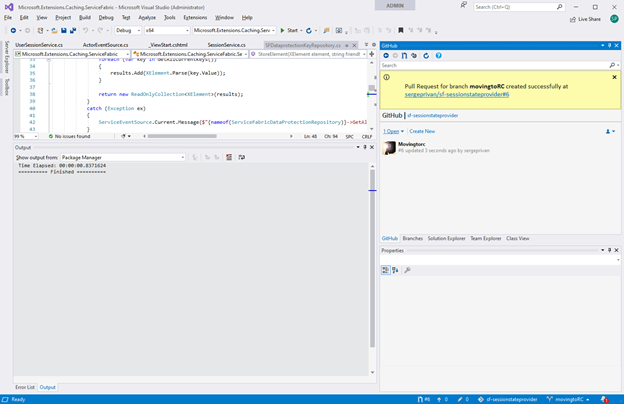

Now that the pull request is ready, we can see that it has been assigned the identifier #6:

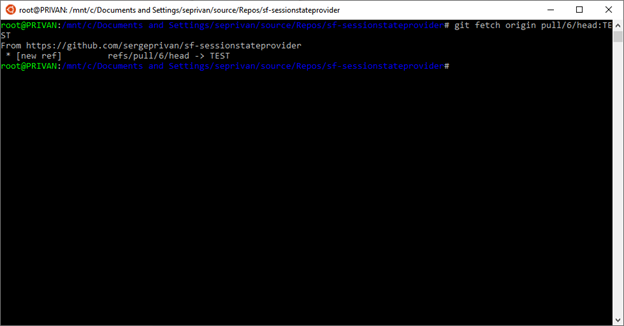

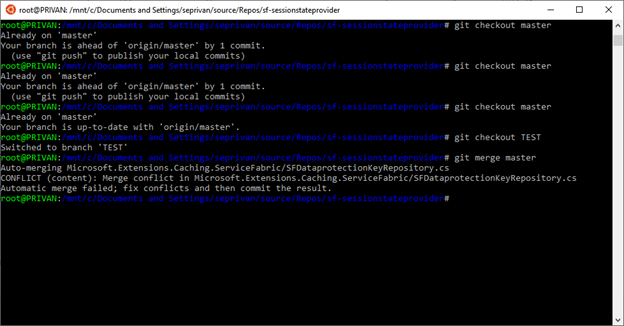

Let's get this pull request locally as a branch, starting with:

git fetch origin

Then check it out locally:

git checkout TEST

The active branch is now TEST:

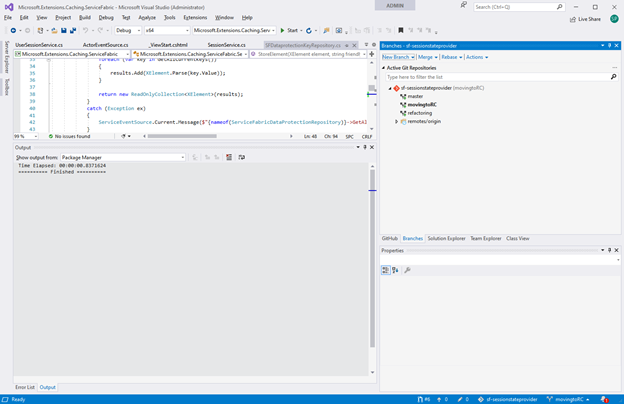

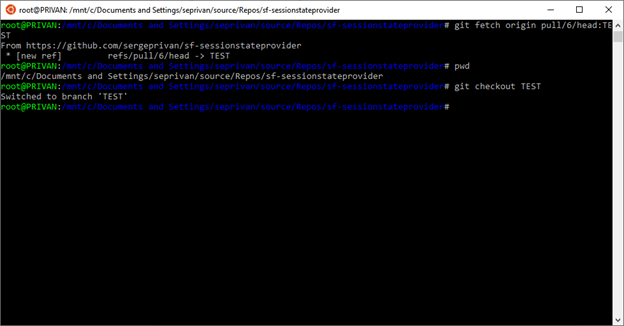

Next, we merge master (the main branch) into the pull request branch for testing. The main thing is to make sure master is up to date before merging:

1. Switch to master with git checkout master and check whether the branch is up to date.

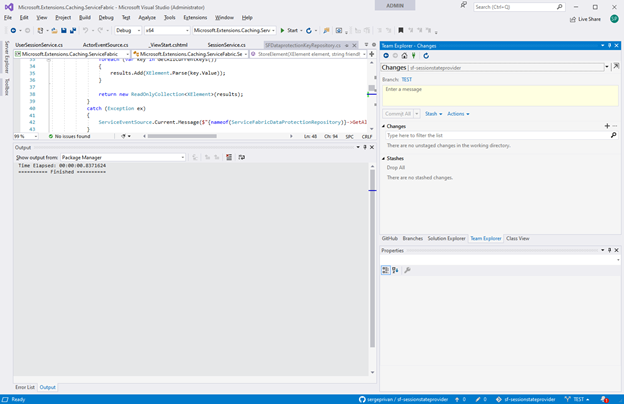

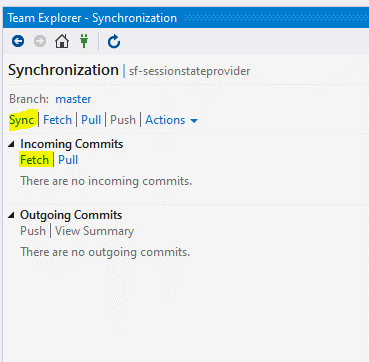

2. In my case, as you can see, it was not up to date, because one commit had not yet been synced with master. In that case, use Sync/Update in the Visual Studio extension to get the latest version:

3. Once master is up to date, switch back to TEST:

git checkout TEST

4. Finally, merge master into TEST:

git merge master

The git output already shows the conflict, as intended. More importantly, the conflicts and changes are now in a local branch that is ready to be tested directly in Visual Studio, so we can switch to Visual Studio and edit, build, and test the conflicting files.
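For readers who prefer the command line, the same steps can be sketched as a small PowerShell helper. This is a hypothetical function, not part of the GitHub extension. It assumes the repository is hosted on GitHub, which exposes every pull request as the ref pull/<id>/head on the remote, so a single fetch can create the local TEST branch; it also uses git pull as a command-line stand-in for the Sync step in Visual Studio. With -DryRun it only returns the commands instead of running them.

```powershell
function Invoke-PullRequestTestMerge {
    # Hypothetical helper: fetch a GitHub pull request into a local branch and merge the
    # up-to-date base branch into it, so conflicts can be resolved and tested locally.
    param(
        [Parameter(Mandatory)][int]$PullRequestId,
        [string]$LocalBranch = 'TEST',
        [string]$BaseBranch = 'master',
        [string]$Remote = 'origin',
        [switch]$DryRun    # return the git commands without running them
    )

    $commands = @(
        # GitHub publishes every pull request as refs/pull/<id>/head on the remote.
        "git fetch $Remote pull/$PullRequestId/head:$LocalBranch"
        "git checkout $BaseBranch"
        "git pull $Remote $BaseBranch"    # bring the base branch up to date
        "git checkout $LocalBranch"
        "git merge $BaseBranch"           # conflicts, if any, show up here
    )

    if ($DryRun) { return $commands }

    foreach ($command in $commands) {
        Write-Host "> $command"
        $parts = $command -split ' '
        # Run git with the remaining words passed as separate arguments.
        & $parts[0] $parts[1..($parts.Count - 1)]
        if ($LASTEXITCODE -ne 0) {
            # A merge with conflicts exits non-zero: stop here and resolve them in Visual Studio.
            Write-Warning "'$command' exited with code $LASTEXITCODE"
            break
        }
    }
}
```

Running Invoke-PullRequestTestMerge -PullRequestId 6 from the repository root would replay this walkthrough; when it stops on the merge conflict, open the solution in Visual Studio to resolve, build, and test.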

by Contributed | Nov 18, 2020 | Technology

This article is contributed. See the original author and article here.

In this week’s edition of Reconnect, we are joined by none other than 15-time MVP titleholder Dave Noderer!

The South Florida software developer and community activist has been developing custom business automation software, services and applications for clients in his region since founding Computer Ways, Inc. in 1994.

Windows-based application development has been one of Dave's main areas of focus ever since, and he has earned the Microsoft Certified Systems Engineer + Internet, Microsoft Certified Solutions Developer, and Microsoft Certified Database Administrator certifications.

Always willing to expand his expertise and share knowledge with others, Dave is an active member of many technical groups and meetups in South Florida. For starters, Dave co-founded FlaDotNet in 2001 to organize in-person and online meetings for the community.

Later, Dave helped establish the annual South Florida Code Camp. In recent years, more than 1,500 developers have attended the February event, which focuses on technologies such as .NET, SQL, cloud, the Internet of Things, and machine learning.

Last year, Dave also co-founded the Microsoft Cloud South Florida User Group.

“My passion is community of any kind, especially the developer community,” Dave says.

This passion is certainly evident in Dave’s extensive list of community engagements, which also includes work with Florida Azure Association, Azure Global Boot Camp, SQL Saturday and ITPalooza.

When he’s not lending a hand to the developer community, Dave is involved with the wider community as well. Dave works with Kiwanis, Deerfield Beach Historical Society, Hillsboro Lighthouse Society and the Deerfield Chamber.

For more on Dave and his dedicated support of all things community, check out his blog and follow him on Twitter @davenoderer.

by Contributed | Nov 18, 2020 | Technology

This article is contributed. See the original author and article here.

The new release of the DPM SCOM management pack is now available for download! You can get the MP here:

Download Link: https://www.microsoft.com/en-us/download/details.aspx?id=56560

This management pack fixes some of the key issues found in earlier versions of the DPM SCOM MP.

We have also announced the release of System Center Data Protection Manager Update Rollup 10 (UR10)! See the KB article for the list of fixed issues and new features:

DPM 2016 UR10 KB article:

https://support.microsoft.com/en-us/help/4578608/update-rollup-10-for-system-center-2016-data-protection-manager

SCDPM 2016 UR10 adds a new, optimized capability for migrating backup data. For details on the new feature, refer to the documentation.

Follow us on https://twitter.com/SCDPM for the latest updates on DPM!

by Contributed | Nov 18, 2020 | Technology

This article is contributed. See the original author and article here.

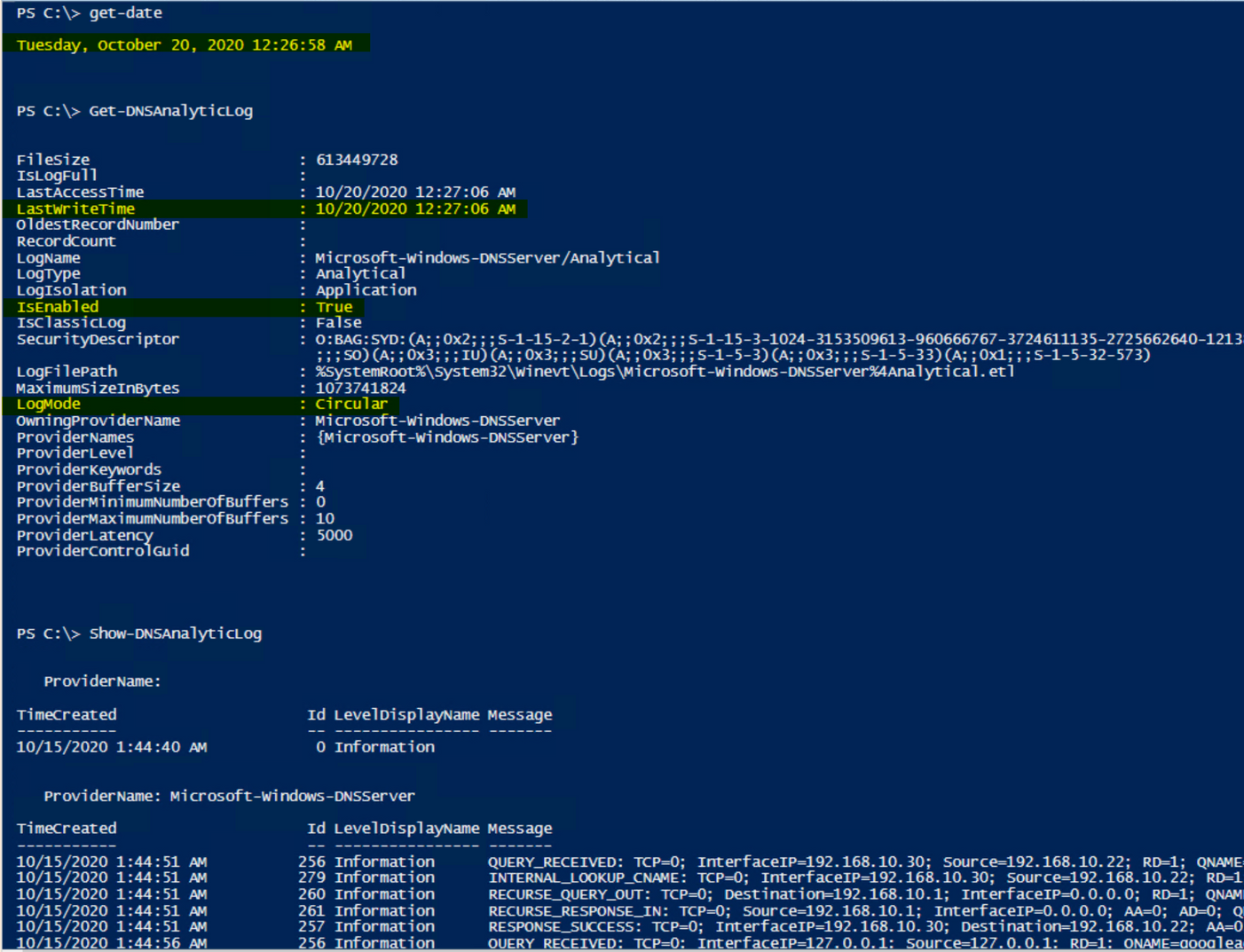

Hi Team, Eric Jansen here again, this time to add on to Joel Vickery's previous post discussing how to view the DNS Analytic Logs without having to disable them. It's a great read if you haven't already seen it. However, there's been a very unfortunate death in the family since that post…

It was a sad day when the news broke that Microsoft Message Analyzer was no more, because it was an extremely feature-rich tool that I and many others still use today (albeit the last release, which can no longer be downloaded). In situations like this, though, we must adapt and overcome and find new ways to accomplish the same goals. This is where I thought I might be able to help empower you to do more, using the power of PowerShell. Reading through online forums, I've seen numerous posts where folks say that it's not possible to view the DNS Analytical log while it's running. I'm here to tell you that you absolutely can, and not just with the methods that Joel outlined in his blog.

If you tried to follow along with part one of the series, you may have noticed that several of the cmdlets I used wouldn't have worked for you. That's because they're all custom functions I've written to make my life a bit easier, since I spend a fair amount of time digging around in these logs. I've written a series of functions (seven, to be exact) to help with DNS Analytic Log configuration. As we move along through the series, I'll likely share more of them, and at some point I'll see if I can muster up the motivation to set up a code repository on GitHub, if the interest is there.

For today's topic, though, I thought I'd share one of my shiny new functions. I call her… Show-DNSAnalyticLog. I even decided to reveal the magic behind the curtain (OK, it's not that magical, but it does what I want it to). In reality, only one line does the real work in this wrapper of a function: the line that calls Get-WinEvent with the -Oldest parameter. That's the key, because Get-WinEvent requires -Oldest to read .etl files and analytic or debug logs.

So, let’s take a look at this sample code and a few notes that I’ve added in there:

```powershell
<#
.Synopsis
    Shows what's currently in the DNS Analytic log regardless of whether the log is enabled or disabled.
.DESCRIPTION
    See Synopsis.
.EXAMPLE
    Show-DNSAnalyticLog
.NOTES
    Alpha Version - 13 Oct 2020

    Disclaimer:
    This is a sample script. Sample scripts are not supported under any Microsoft standard support program or service. The sample scripts are provided AS IS without warranty of any kind. Microsoft further disclaims all implied warranties including, without limitation, any implied warranties of merchantability or of fitness for a particular purpose. The entire risk arising out of the use or performance of the sample scripts and documentation remains with you. In no event shall Microsoft, its authors, or anyone else involved in the creation, production, or delivery of the scripts be liable for any damages whatsoever (including, without limitation, damages for loss of business profits, business interruption, loss of business information, or other pecuniary loss) arising out of the use of or inability to use the sample scripts or documentation, even if Microsoft has been advised of the possibility of such damages.

    AUTHOR: Eric Jansen, MSFT
#>
function Show-DNSAnalyticLog
{
    [CmdletBinding()]
    [Alias('SDAL')]
    Param
    (
        #Parameter to define the server.
        [Parameter(Mandatory=$false,
                   ValueFromPipelineByPropertyName=$true,
                   Position=0)]
        $Server = $env:COMPUTERNAME
    )

    #Define the DNS Analytical Log name.
    $EventLogName = 'Microsoft-Windows-DNSServer/Analytical'

    Try{
        #Step 1 for Show-DNSAnalyticLog.....does the Analytical log even exist on the computer to be enumerated?
        $DNSAnalyticalLogData = Get-WinEvent -ListLog $EventLogName -ErrorAction SilentlyContinue
        If($DNSAnalyticalLogData){
            #LogFilePath typically starts with %SystemRoot%; expand environment variables to get a usable path.
            $DNSAnalyticalLogPath = [Environment]::ExpandEnvironmentVariables($DNSAnalyticalLogData.LogFilePath)
        }
        Else{
            Write-Host "The Microsoft-Windows-DNSServer/Analytical log couldn't be found to be enumerated.`n" -ForegroundColor Red
            Write-Host "Ensure that this function is being run on a DNS Server that has the Microsoft-Windows-DNSServer/Analytical log."
            Return
        }

        #Check to make sure that the log can be found to be read, because if it's cleared, via the GUI, or wevtutil, or Clear-DNSAnalyticLog, the .etl is not just cleared, but deleted.
        If(Test-Path $DNSAnalyticalLogPath){
            #-Oldest is required when Get-WinEvent reads an .etl file.
            Get-WinEvent -Path $DNSAnalyticalLogPath -Oldest
        }
        Else{
            Write-Warning "The $($EventLogName) log doesn't exist at the expected path:"
            Write-Host "`n$($DNSAnalyticalLogPath)"
            Return
        }
    }
    Catch{
        $_.Exception.Message
    }
}
```

So, once you load that guy up, all you need to do is type Show-DNSAnalyticLog, and you're off to the races. It'll dump an unparsed / unfiltered list of events that'll scroll down the screen until everything's dumped out for your viewing pleasure. One thing to keep in mind, though: when dumping ALL events (and there could be millions of them), it could take a while before the scroll fest begins. Once it does, it'll look something like this… again, starting from the oldest event and racing to catch up with the newest one:

Note: The output below shows that the log is in fact enabled and still being written to. I displayed the current time and then ran the function, and events written after that time appear in the log.

Anyhow, nothing crazy, but if it can help someone else, then my job is done (for the time being).

But who wants unparsed / unfiltered logs when you’re on a hunt? Gross. Maybe we can talk about that in a future post. ;)
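In the meantime, because Show-DNSAnalyticLog writes the EventLogRecord objects from Get-WinEvent to the pipeline, they can be narrowed with ordinary PowerShell. Here is a minimal sketch of a hypothetical helper (not one of the seven functions mentioned above) that keeps only records with given event IDs created after a given time. Note that the filtering happens after the whole log has been read, so it doesn't shorten the initial wait.

```powershell
function Select-DnsAnalyticEvent {
    # Hypothetical helper: keeps only the records that match the given event IDs
    # and were created after the given time. Works on any object with Id and
    # TimeCreated properties, such as the EventLogRecord objects Get-WinEvent returns.
    [CmdletBinding()]
    param(
        [Parameter(ValueFromPipeline)]$Record,
        [int[]]$Id,
        [datetime]$After = [datetime]::MinValue
    )
    process {
        # When -Id isn't supplied, every event ID passes.
        $idMatches = (-not $PSBoundParameters.ContainsKey('Id')) -or ($Id -contains $Record.Id)
        if ($idMatches -and $Record.TimeCreated -gt $After) { $Record }
    }
}
```

For example, assuming event ID 256 corresponds to QUERY_RECEIVED in the DNS Analytical log, Show-DNSAnalyticLog | Select-DnsAnalyticEvent -Id 256 -After (Get-Date).AddMinutes(-15) would show only the queries received in the last 15 minutes.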

Until next time…
