by Scott Muniz | Sep 8, 2020 | Uncategorized

This article is contributed. See the original author and article here.

We have identified key trends that define how customers are using meeting and calling solutions and rethinking how your communications infrastructure can support long term resilience and productivity.

The post New discounts on meeting and calling experiences in Microsoft Teams appeared first on Microsoft 365 Blog.

Brought to you by Dr. Ware, Microsoft Office 365 Silver Partner, Charleston SC.

by Scott Muniz | Sep 8, 2020 | Uncategorized

This article is contributed. See the original author and article here.

Like a rabbit out of the hat. An ace up their sleeve. Disappearing right before your eyes … OK, I think you get it. There are people out there that wield magic – technical magic – power at their fingertips. They take the ordinary and go beyond. They make Houdini look like he takes PowerNaps. The real difference is their willingness to share their secrets. Listen closely to the makers and the magic can be yours.

In this episode, Chris and I talk with Shane Young (Power Apps guru from PowerApps911) and Chris Kent (Office 365 Practice Lead at DMI), maker magicians both: Shane a Power Apps sorcerer and Chris a wizard of lists. Throughout the discussion, we learn how makers make: their approach to making, which tools and techniques they use, and how you, too, can be a maker.

Listen to the podcast below (and then start making magic of your own).

Subscribe to The Intrazone podcast! And listen to episode 56 now + show links and more below.

Intrazone guests – clockwise, Shane Young (Power Apps guru | PowerApps911) and Chris Kent (Office 365 Practice Lead | DMI), with co-host, Mark Kashman (senior product manager | Microsoft).


Links to important on-demand recordings and articles mentioned in this episode:

- Articles and sites

- Events

- Hosts and guests

Subscribe today!

Listen to the show! If you like what you hear, we’d love for you to Subscribe, Rate and Review it on iTunes or wherever you get your podcasts.

Be sure to visit our show page to hear all the episodes, access the show notes, and get bonus content. And stay connected to the SharePoint community blog, where we’ll share more information per episode and guest insights, and take any questions from our listeners and SharePoint users (TheIntrazone@microsoft.com). We also welcome your ideas for future episode topics and segments. Keep the discussion going in the comments below; we’re here to listen and grow.

Subscribe to The Intrazone podcast! And listen to episode 56 now.

Thanks for listening

The SharePoint and Power Platform teams want you to unleash your magic, creativity, and productivity. And we will do this, together, one poof of magic at a time.

The Intrazone links

+ Listen to other Microsoft podcasts at aka.ms/microsoft/podcasts.

![The Intrazone co-hosts, Chris McNulty and Mark Kashman](https://www.drware.com/wp-content/uploads/2020/09/large-259)

Left to right [The Intrazone co-hosts]: Chris McNulty, director PMM (SharePoint, #ProjectCortex – Microsoft) and Mark Kashman, senior product manager (SharePoint – Microsoft).

The Intrazone – a show about the Microsoft 365 intelligent intranet (https://aka.ms/TheIntrazone)


by Scott Muniz | Sep 8, 2020 | Uncategorized

This article is contributed. See the original author and article here.

Azure Synapse Analytics is an analytics platform that provides productive developer experiences. For example, the Synapse Studio bulk load wizard helps data engineers ingest data and onboard datasets quickly through a code-free experience. The platform also offers other low-code authoring experiences for data integration, which make it easy to take the next step and orchestrate and operationalize loads in just a few clicks. The built-in data pipelines are flexible, and you can customize them to your requirements entirely within Synapse Studio.

This how-to guide walks you through setting up a continuous data pipeline that automatically loads data into your SQL pool as files arrive in your storage account.

1. Generate your COPY statement within a stored procedure by using the Synapse Studio bulk load wizard.

2. Use familiar dynamic SQL syntax to parameterize the storage account location in the COPY statement. You can also record the time of ingestion by supplying a default value for a column in the COPY statement's column list. Sample code:

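```sql
-- nvarchar(400): full blob URLs easily exceed 100 characters.
CREATE PROC [dbo].[loadSales] @storagelocation nvarchar(400) AS
BEGIN
    DECLARE @loadtime nvarchar(30);
    DECLARE @COPY_statement nvarchar(4000);

    -- Record the ingestion time explicitly as 'yyyy-mm-dd hh:mi:ss' (style 120)
    -- instead of relying on an implicit datetime-to-string conversion.
    SET @loadtime = CONVERT(nvarchar(30), GETDATE(), 120);

    -- Double any single quotes in the path so it stays inside its string literal.
    SET @storagelocation = REPLACE(@storagelocation, '''', '''''');

    -- Each column maps to a field number in the source file; [loadTime] takes
    -- the default value captured above. Doubled quotes ('') inside the string
    -- become single quotes in the generated statement.
    SET @COPY_statement = N'COPY INTO [dbo].[Trip]
    (
        [DateID] 1,
        [MedallionID] 2,
        [HackneyLicenseID] 3,
        [PickupTimeID] 4,
        [DropoffTimeID] 5,
        [PickupGeographyID] 6,
        [DropoffGeographyID] 7,
        [PickupLatitude] 8,
        [PickupLongitude] 9,
        [PickupLatLong] 10,
        [DropoffLatitude] 11,
        [DropoffLongitude] 12,
        [DropoffLatLong] 13,
        [PassengerCount] 14,
        [TripDurationSeconds] 15,
        [TripDistanceMiles] 16,
        [PaymentType] 17,
        [FareAmount] 18,
        [SurchargeAmount] 19,
        [TaxAmount] 20,
        [TipAmount] 21,
        [TollsAmount] 22,
        [TotalAmount] 23,
        [loadTime] default ''' + @loadtime + ''' 24
    )
    FROM ''' + @storagelocation + '''
    WITH (
        FIELDTERMINATOR = ''|'',
        ROWTERMINATOR = ''0x0A''
    ) OPTION (LABEL = ''loadTime: ' + @loadtime + ''');';

    EXEC sp_executesql @COPY_statement;
END;
```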

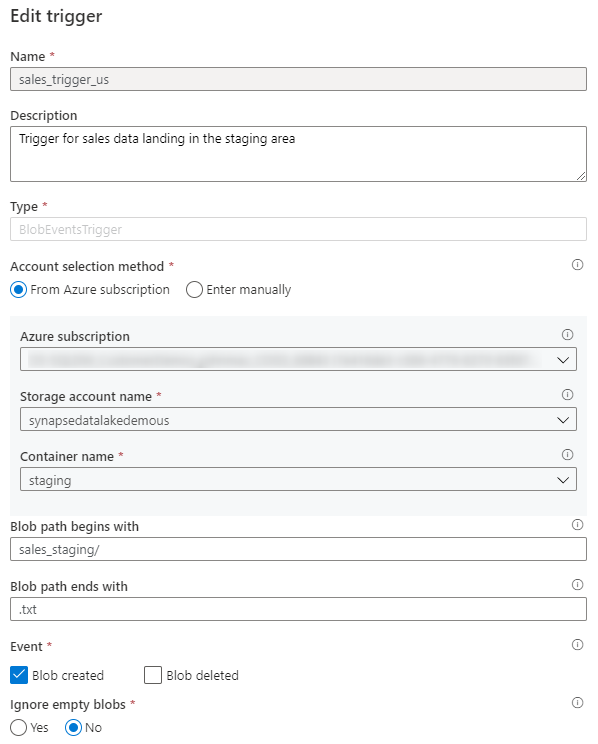

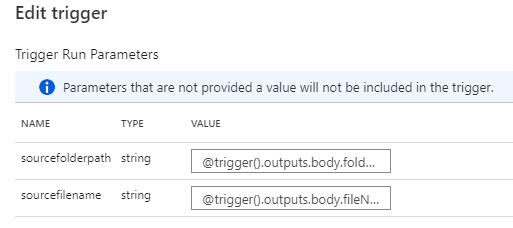

3. Create a Synapse pipeline in Synapse Studio with an event-based trigger that fires when a blob is created in your storage container, and parameterize the blob path (folder path and file name) as part of the pipeline. Additional documentation on pipeline triggers is here.

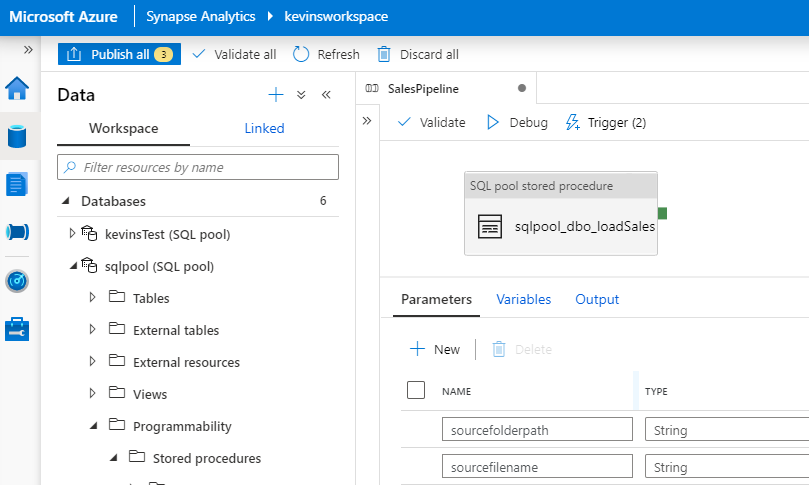

Parameterized pipeline:

Event-based trigger:

Trigger parameters:

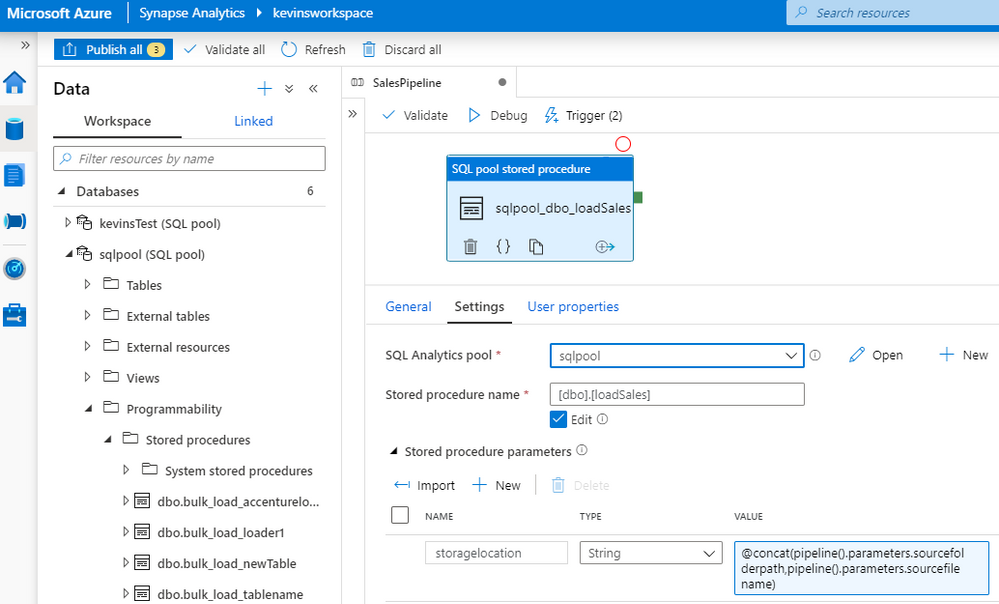

4. Add the stored procedure to a stored procedure activity in your pipeline, pass the blob path pipeline parameter as the stored procedure's parameter, and publish the pipeline to your workspace. Additional documentation on the stored procedure activity is here.
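The following Python sketch illustrates two things from steps 2 to 4: how the trigger's folder path and file name combine into the full URL passed as `@storagelocation`, and how quote doubling keeps that value inside its string literal in the dynamic COPY statement. It is an illustration only, not part of the pipeline. It assumes the event trigger's folder path begins with the container name. The storage account name `mystorageaccount` and the helper names `blob_url`, `sql_literal`, and `copy_statement` are hypothetical. The generated statement is simplified and omits the column list.

```python
def blob_url(account: str, folder_path: str, file_name: str) -> str:
    """Join event-trigger outputs into a full blob URL.

    Assumes folder_path starts with the container name, e.g. "staging/trips".
    """
    return f"https://{account}.blob.core.windows.net/{folder_path.strip('/')}/{file_name}"


def sql_literal(value: str) -> str:
    """Quote a value as a T-SQL string literal, doubling embedded single quotes."""
    return "'" + value.replace("'", "''") + "'"


def copy_statement(table: str, location: str, load_time: str) -> str:
    """Build a simplified COPY INTO statement (column list omitted for brevity)."""
    return (
        f"COPY INTO {table} FROM {sql_literal(location)} "
        "WITH (FIELDTERMINATOR = '|', ROWTERMINATOR = '0x0A') "
        f"OPTION (LABEL = {sql_literal('loadTime: ' + load_time)});"
    )


if __name__ == "__main__":
    # "mystorageaccount" and the folder/file names are hypothetical examples.
    url = blob_url("mystorageaccount", "staging/trips/2020/09", "trips_08.csv")
    print(url)  # https://mystorageaccount.blob.core.windows.net/staging/trips/2020/09/trips_08.csv
    print(copy_statement("[dbo].[Trip]", url, "2020-09-08 15:45:00"))
```

In the pipeline itself, the same join would typically be written as dynamic content. One example is `@concat('https://mystorageaccount.blob.core.windows.net/', pipeline().parameters.folderPath, '/', pipeline().parameters.fileName)`. Here `folderPath` and `fileName` are hypothetical pipeline parameter names mapped from the trigger's `@triggerBody().folderPath` and `@triggerBody().fileName`.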

In just four steps, you have created a data pipeline that automatically and continuously loads files as they land in your staging storage location, using the COPY statement in a stored procedure. You did not need to provision or integrate any event notification services such as Azure Event Grid or Azure Queue Storage to support this auto-ingestion workflow, and the code changes were minimal.

Synapse pipelines are flexible and offer a range of configuration and customization options to address your scenarios. Here are some other considerations when operationalizing your data pipelines:

- Add transformation logic for further processing within your stored procedure, or create additional stored procedures (activities) in your pipeline.

- Instead of creating a stored procedure, you can use the pipeline Copy activity with the COPY statement. This makes your pipeline authoring experience code-free. You can also configure the Copy activity to ingest data based on the blobs' last modified date.

- Use data flows, which provide predefined templates for common ETL patterns such as SCD Type 1 and SCD Type 2, for code-free dimension and fact processing in your pipeline.

- Alternatively, you can batch files and use a schedule-based trigger, with a static storage location as the staging area for uploads. If you follow this pattern, you may need to move or clean up files at the end of your pipeline to prevent duplicate loads. Synapse pipelines have this capability built in through the Delete activity or the move template.
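The batch-and-clean-up pattern in the last consideration can be sketched as a local simulation in Python, under loud assumptions. Two local directories stand in for the staging container and an archive location. The `load` callback stands in for the stored procedure or Copy activity, and the move stands in for the Delete activity or move template. `process_batch` is a hypothetical name, not a Synapse API. The design choice that matters is ordering: each file is moved only after its load returns, so rerunning the batch does not reload files that were already archived.

```python
import shutil
import tempfile
from pathlib import Path
from typing import Callable, List


def process_batch(staging: Path, archive: Path, load: Callable[[Path], None]) -> List[str]:
    """Load every file currently in staging, then move it to archive.

    Hypothetical local stand-in for a scheduled pipeline run: `load` plays the
    role of the COPY step, and the move plays the role of the clean-up step.
    """
    archive.mkdir(parents=True, exist_ok=True)
    loaded: List[str] = []
    for path in sorted(p for p in staging.iterdir() if p.is_file()):
        load(path)  # if this raises, the file stays in staging for the next run
        shutil.move(str(path), str(archive / path.name))
        loaded.append(path.name)
    return loaded


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        staging, archive = Path(root, "staging"), Path(root, "archive")
        staging.mkdir()
        for name in ("b.csv", "a.csv"):
            (staging / name).write_text("1|2|3\n")
        print(process_batch(staging, archive, lambda p: None))  # ['a.csv', 'b.csv']
        print(process_batch(staging, archive, lambda p: None))  # []
```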

For more information on Synapse data pipelines and getting started with data integration, visit the following documentation:

by Scott Muniz | Sep 8, 2020 | Azure, Technology, Uncategorized

This article is contributed. See the original author and article here.

Azure provides several mechanisms for securing the Azure platform.

The most popular approach is Azure Security Center (ASC).

ASC is a unified infrastructure security management system that strengthens the security posture of your data centers and provides advanced threat protection across your hybrid workloads in the cloud (whether they’re in Azure or not) as well as on premises.

https://docs.microsoft.com/en-us/azure/security-center/security-center-intro

I’d also like to highlight another framework that I see in use with other customers: the Secure DevOps Kit for Azure (AzSK).

https://azsk.azurewebsites.net/

The Secure DevOps Kit for Azure (AzSK) was created by the Core Services Engineering & Operations (CSEO) division at Microsoft to help accelerate Microsoft IT’s adoption of Azure. AzSK and its documentation have been shared with the community to provide guidance for rapidly scanning, deploying, and operationalizing cloud resources across the different stages of DevOps, while maintaining security and governance controls.

by Scott Muniz | Sep 8, 2020 | Azure, Technology, Uncategorized

This article is contributed. See the original author and article here.

This is a framework that customers have been waiting for. The Azure Well-Architected Framework guides architects through the five pillars of architectural excellence: Cost Optimization, Operational Excellence, Performance Efficiency, Reliability, and Security.

https://docs.microsoft.com/en-us/azure/architecture/framework/

See also Design Patterns: https://docs.microsoft.com/en-us/azure/architecture/patterns/

And Azure Architecture Center: https://docs.microsoft.com/en-us/azure/architecture/
