by Scott Muniz | Sep 18, 2020 | Uncategorized

This article is contributed. See the original author and article here.

We are just a few days away from our first-ever digital Microsoft Ignite. Microsoft 365 Government will be there with in-depth content to pair with the all-digital event experience. Specifically, for our government customers, we’re diving into two unique conversations about security and compliance, and providing hands-on ways you can reduce risk as you accelerate your government’s digital transformation.

Today, more than ever, government organizations are facing increasing challenges associated with remote delivery of public services. We have added enhanced regulatory, legal, and forensic investigation capabilities in the government clouds to help address these challenges. We are excited to announce Advanced eDiscovery capabilities in Microsoft 365 Government. Advanced eDiscovery provides a broad set of intelligent capabilities to help customers reduce large volumes of data to a relevant set. For example, an automatically generated query helps filter only for unique content by identifying near duplicates, which can help dramatically reduce data. You can learn more about this and other Advanced eDiscovery capabilities in this demo at aka.ms/GovAdvancedeDiscoveryVideo.

Sessions

A full list of Ignite sessions can be found here.

Government Sessions

As part of the learning experience, we’ve added two on-demand sessions specifically targeted at security and compliance professionals and anyone who is looking to learn more about how Microsoft 365 Government can help protect and navigate the modern digital world. The sessions below will be posted in the Microsoft Tech Community Video Hub, launching on Sept 22nd.

- Increase security, reduce risk, and maximize government resources – Steve Faehl, US Security CTO; Dean Iacovelli, SLG Director; Bryan Hunt, SLG Director

- Streamline your mission with Microsoft 365 Compliance – Matthew Littleton, Microsoft 365 Advanced Compliance Specialist

- Improving the Customer Experience for Government Services – Bobby Chang, Technology Specialist; Brian Duncan, Senior Solutions Architect

Resources

Below is a list of resources you can take a look at now to learn more and bookmark to reference later:

- Register for Microsoft Ignite and start building your event schedule with sessions.

- Access the Virtual Hub for deep-dive technical training for IT pros, developers, and community champions available on September 22nd and get access to interactive guides, community resources and more.

- Check out the Microsoft Tech Community Video Hub launching on September 22nd to view videos and join the conversation.

- Follow @Microsoft365 and @MS_Ignite on Twitter to stay up to date with the latest Microsoft 365 and Ignite news.


This installment is part of a broader series to keep you up to date with the latest features in Azure Sentinel. The installments will be bite-sized to enable you to easily digest the new content.

A file hash is a value computed from the content of a file using a specified hash algorithm. Using hashes, you can determine whether two different files have exactly the same content: with a cryptographic hash algorithm such as SHA-256, files with identical hash values can be treated as having identical contents. You can also use hashes to verify whether file data has been modified, tampered with, or corrupted. In cybersecurity, one of the most common uses of file hashes is sharing Indicators of Compromise (IoCs), a valuable resource for SOC analysts, security researchers, and threat hunters.
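To make the idea concrete, here is a minimal Python sketch of hashing a file and comparing two files by hash. It is not part of Azure Sentinel; the function names `file_sha256` and `same_content` are hypothetical, and SHA-256 is simply one common choice of algorithm.

```python
import hashlib


def file_sha256(path, chunk_size=65536):
    """Return the SHA-256 hex digest of the file at `path`.

    The file is read in chunks so large files do not need to fit in memory.
    """
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        # iter() with a sentinel keeps reading until read() returns b"".
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def same_content(path_a, path_b):
    """Treat two files as identical when their SHA-256 digests match."""
    return file_sha256(path_a) == file_sha256(path_b)
```

Comparing the digest of a suspicious file against a digest published as an Indicator of Compromise works the same way: compute `file_sha256(path)` and check it against the shared value.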

Up until now, entity types such as IP, Account, Host, and URL have been available in different areas of Azure Sentinel. We are delighted to announce that the FileHash entity has been added to Analytics and is now generally available! Let us look at an example of where and how you can start leveraging this great addition today.

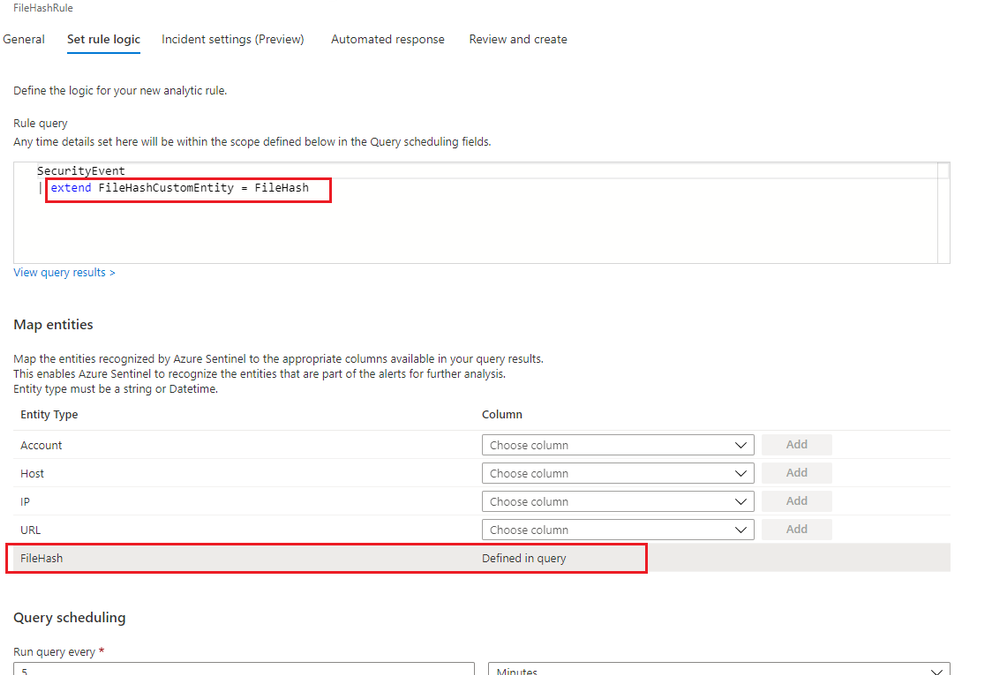

Analytic Rule

A file hash can be used for entity mapping in a scheduled analytic rule. In the Set Rule Logic view of the scheduled analytic rule wizard, you can select any relevant field of a table defined in the rule query and map it to the FileHash entity. A new column called FileHashCustomEntity will then be created automatically in the query; this column name can be customized. If you identify a malicious file on a machine and want to scan your entire environment for that file, you can assign that specific hash value to the FileHashCustomEntity field. This enables Azure Sentinel to recognize the entity as part of the alerts for further analysis.

Figure 1. FileHash in Analytic Rule

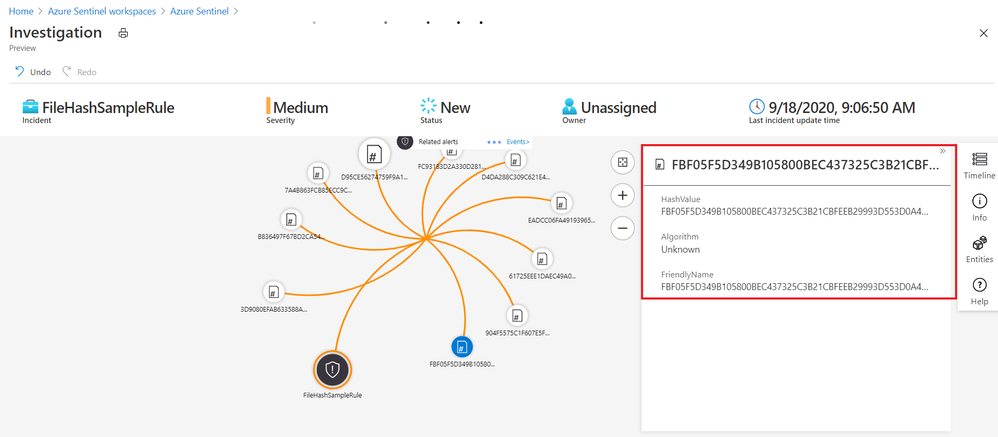

Investigation

If Azure Sentinel detects any matches for a known hash value from a detection rule you have already set up, incidents with an entity type of FileHash will be created. You can then leverage the full incident view and Investigation graph to analyze the potentially anomalous activity based on this hash information. The Investigation view provides the relevant information, including the hash value, the hash algorithm (such as SHA256 or MD5), and its friendly name.

Figure 2. FileHash sample in Investigation Graph

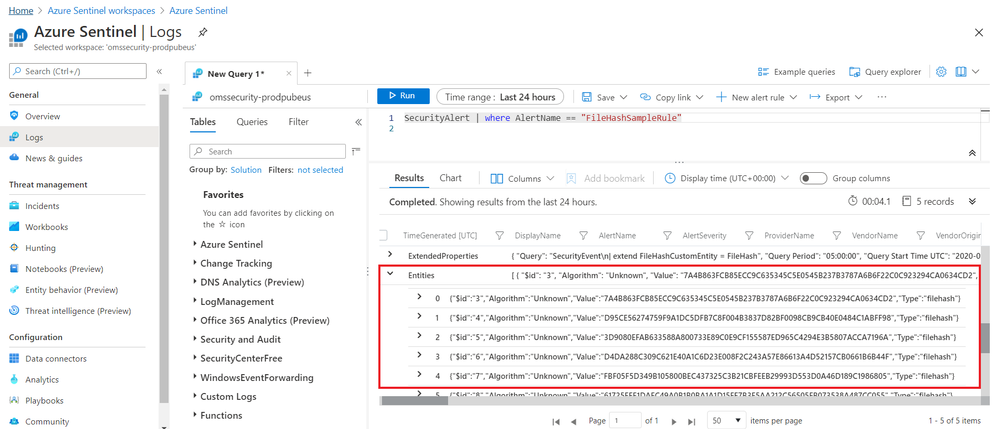

Leveraging the power of KQL in Log Analytics, you can also query the matches across your environment to perform further analysis.

Figure 3. FileHash sample in query results

Get started today!

We encourage you to explore the FileHash entity in Azure Sentinel for threat detection and investigation in your environment.

Try it out, and let us know what you think!

You can also contribute new connectors, workbooks, analytics and more in Azure Sentinel. Get started now by joining the Azure Sentinel Threat Hunters GitHub community.


Another day, another case.

It took me a while to work through this step by step using the new Synapse APIs, so this post is intended to make it easier by walking through an example.

You will need:

- A Synapse workspace

- An ADF pipeline that you want to start from the REST API.

Doc references:

https://docs.microsoft.com/en-us/azure/data-factory/quickstart-create-data-factory-rest-api

https://docs.microsoft.com/en-us/azure/azure-monitor/platform/rest-api-walkthrough

https://docs.microsoft.com/en-us/rest/api/synapse/data-plane/pipeline/createpipelinerun

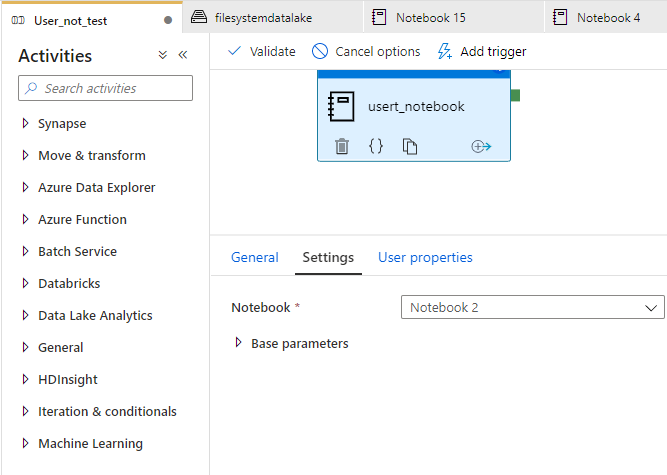

This is my pipeline code. It is a simple one: it just creates a Spark database using a notebook.

%%spark

spark.sql("CREATE DATABASE IF NOT EXISTS DB_example")

Figure 1 shows my pipeline:

Figure 1 Pipeline

The name of my pipeline is User_not_test.

I can run this pipeline successfully from Synapse Studio, but I want to run it from the REST API. That is the whole idea of this post.

Step by Step

The first step is to follow this documentation to register my pipeline/workspace as an application:

(https://docs.microsoft.com/en-us/azure/azure-monitor/platform/rest-api-walkthrough)

$subscriptionId = "{azure-subscription-id}"

$resourceGroupName = "{resource-group-name}"

# Authenticate to a specific Azure subscription.

Connect-AzAccount -SubscriptionId $subscriptionId

# Password for the service principal

$pwd = "{service-principal-password}"

$secureStringPassword = ConvertTo-SecureString -String $pwd -AsPlainText -Force

# Create a new Azure AD application

$azureAdApplication = New-AzADApplication `
    -DisplayName "My Azure Monitor" `
    -HomePage "https://localhost/azure-monitor" `
    -IdentifierUris "https://localhost/azure-monitor" `
    -Password $secureStringPassword

# Create a new service principal associated with the designated application

New-AzADServicePrincipal -ApplicationId $azureAdApplication.ApplicationId

# Assign Reader role to the newly created service principal

New-AzRoleAssignment -RoleDefinitionName Reader `
    -ServicePrincipalName $azureAdApplication.ApplicationId.Guid

Fill in the gaps. For example, suppose my workspace is named synapseworkspace_Demo. In this part of the script, use your own workspace URL in place of the placeholder, as in my example: https://synapseworkspace_Demo.dev.azuresynapse.net

Take note of the password that you define here: $pwd = "{service-principal-password}"

# Create a new Azure AD application

$azureAdApplication = New-AzADApplication `
    -DisplayName "APP_synapseworkspace" `
    -HomePage "https://synapseworkspace_Demo.dev.azuresynapse.net" `
    -IdentifierUris "https://synapseworkspace_Demo.dev.azuresynapse.net" `
    -Password $secureStringPassword

After that, you will execute some steps to actually invoke the API. The example is described here:

https://docs.microsoft.com/en-us/azure/data-factory/quickstart-create-data-factory-rest-api

But the Synapse APIs are here:

https://docs.microsoft.com/en-us/rest/api/synapse/data-plane/pipeline/createpipelinerun

My script will call the following endpoint:

https://synapseworkspace_Demo.dev.azuresynapse.net/pipelines/User_not_test/createRun?api-version=2018-06-01
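The endpoint above is built from three parts: the workspace name, the pipeline name, and the API version. As a rough illustration only, here is a short Python sketch that composes the same URL. The helper name `create_run_url` is hypothetical and is not part of any Synapse SDK; it only does string handling and makes no network call.

```python
from urllib.parse import quote, urlencode


def create_run_url(workspace_name, pipeline_name, api_version="2018-06-01"):
    """Compose the createRun endpoint for a pipeline in a Synapse workspace.

    Hypothetical helper for illustration: it mirrors the URL pattern used in
    this post and does not contact the service.
    """
    base = f"https://{workspace_name}.dev.azuresynapse.net"
    # Percent-encode the pipeline name so characters such as spaces are safe in the path.
    path = f"/pipelines/{quote(pipeline_name, safe='')}/createRun"
    return f"{base}{path}?{urlencode({'api-version': api_version})}"


print(create_run_url("synapseworkspace_Demo", "User_not_test"))
```

With the workspace and pipeline names from this post, the function returns the same URL shown above.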

Do not forget to set the password that you defined in the previous step:

$pwd = "Password that you defined on the previous step"

$azureAdApplication = Get-AzADApplication -IdentifierUri "https://YourWorkspaceName.dev.azuresynapse.net"

$subscription = Get-AzSubscription -SubscriptionId $subscriptionId

$clientId = $azureAdApplication.ApplicationId.Guid

$tenantId1 = $subscription.TenantId

$authUrl = "https://login.microsoftonline.com/${tenantId1}/oauth2/token"

$cred = New-Object -TypeName Microsoft.IdentityModel.Clients.ActiveDirectory.ClientCredential -ArgumentList ($clientId, $pwd)

$AuthContext = [Microsoft.IdentityModel.Clients.ActiveDirectory.AuthenticationContext]$authUrl

$result = $AuthContext.AcquireTokenAsync("https://dev.azuresynapse.net", $cred).GetAwaiter().GetResult()

# Build an array of HTTP header values

$authHeader = @{

'Content-Type'='application/json'

'Accept'='application/json'

'Authorization'=$result.CreateAuthorizationHeader()

}

$request = "https://YourWorkspaceName.dev.azuresynapse.net/pipelines/YourPipelineName/createRun?api-version=2018-06-01"

$body = @"

{

"name": "YourWorkspaceName",

"location": "Region",

"properties": {},

"identity": {

"type": "SystemAssigned"

}

}

"@

$response = Invoke-RestMethod -Method POST -Uri $request -Header $authHeader -Body $body

$response | ConvertTo-Json

$runId = $response.runId

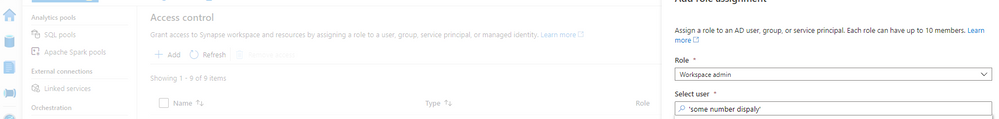

If you face this error:

Invoke-RestMethod: {"error":{"code":"Unauthorized","message":"The principal 'some number display' does not have the necessary permissions to perform this operation. "}}

Go back to Synapse Studio, open Monitoring -> Access control, and check two things:

1) The user who calls the REST API needs the Workspace admin permission.

2) The app that you registered also needs the Workspace admin permission. To grant it, copy the number displayed in the error and add the permission as shown in Figure 2:

Figure 2 Permission

Note: Use the service principal ID (the number shown in the error), not the app name, when adding this permission.

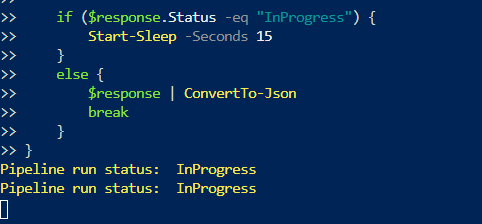

You can also monitor the pipeline execution by adding this piece of code:

while ($True) {

$response = Invoke-RestMethod -Method GET -Uri "https://YourWorkspaceName.dev.azuresynapse.net/pipelineruns/${runId}?api-version=2018-06-01" -Header $authHeader

Write-Host "Pipeline run status: " $response.Status -foregroundcolor "Yellow"

if ($response.Status -eq "Queued" -or $response.Status -eq "InProgress") {

Start-Sleep -Seconds 15

}

else {

$response | ConvertTo-Json

break

}

}
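The polling pattern in the loop above is independent of PowerShell. Here is a minimal Python sketch of the same idea, written so it can be tried without calling the service: `fetch_status` is a hypothetical callable that you would implement to issue the GET request and return the run's status string. Treating "Queued" as an in-flight status alongside "InProgress" is an assumption on my part, not something taken from this post's script.

```python
import time

# Statuses that mean the run has not finished yet.
# "InProgress" comes from the script above; "Queued" is an assumption.
IN_FLIGHT = {"Queued", "InProgress"}


def wait_for_run(fetch_status, poll_seconds=15, sleep=time.sleep):
    """Poll `fetch_status()` until the run leaves an in-flight state.

    `fetch_status` is a hypothetical callable returning the current status
    string; `sleep` is injectable so the loop can be exercised without waiting.
    Returns the final status (for example "Succeeded" or "Failed").
    """
    while True:
        status = fetch_status()
        if status not in IN_FLIGHT:
            return status
        sleep(poll_seconds)
```

Passing the status fetcher in as a parameter keeps the waiting logic separate from authentication and HTTP details, which also makes it easy to add a timeout later if needed.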

Here is the execution – Figure 4:

Figure 4 Execution

Thanks to Dan Rosales for the help during this process.

That is it!

Liliam

UK Engineer


This week, MVPs Mynda Treacy, Tony de Jonker, and Alan Murray share their Excel knowledge with us

Excel PivotTable Profit and Loss

Mynda Treacy shows how to create an easy-to-read interactive profit and loss statement in Excel using PivotTable, slicers, and conditional formatting

Excel: Interactieve grafieken (Interactive Charts)

Tony de Jonker walks through using multiple graphs from the same dataset to reveal hidden insights in your data

Excel VLOOKUP Trick – No More Counting Columns

Alan Murray shares a neat VLOOKUP trick for selecting the column to return instead of specifying a column index number, in case you’re not already using XLOOKUP

Find this useful and/or have an Excel question? Click the Like button and/or leave a comment below


We hope all of you are taking care of yourselves and staying safe. Here are the latest features that rolled out to production in August 2020.

- Tasks in Word and Excel

- Updates to comment notifications

- Mute comment notifications

- OneDrive app for Surface Duo

Tasks in Word and Excel

Roadmap IDs: 61110; 61111

We are always invested in making the collaboration experience reliable, intuitive, and secure. Office is more than just a canvas for document creation: it supports native collaboration with real-time coauthoring and helps you stay up to date with your file activities through features like @mentions, comments, and now tasks. Across Word and Excel, tasks help you assign responsibilities to your peers, which can be resolved once the job is complete. In addition to assigning a task, you can also reassign it and track any changes made to it within the comment thread via the inline history.

Updates to comment notifications

Roadmap ID: 66192

Users who create or upload Word, Excel, PowerPoint or any other type of file to OneDrive or SharePoint will now get email notifications whenever someone else leaves a comment on their file.

Mute comment notifications

Roadmap ID: 65912

We all want to stay on top of our document tasks, but too many reminders can inhibit our productivity on other pressing work. To balance these needs, we’re enabling users to turn off comment notifications for individual files in the details pane. This feature is also incorporated in sharing emails as an actionable message action.

OneDrive app for Surface Duo

Last week we announced the general availability of Surface Duo.

The OneDrive app for Surface Duo is a critical component of, and is fully integrated into, the Surface Duo operating system. It keeps you connected to your files and photos and enables on-the-go collaboration while making the most of the dual-screen and spanning capabilities. Surface Duo and OneDrive enhance multitasking with drag-and-drop functionality as well as a built-in PDF editor for a book-like reading and markup experience.

Learn more and stay engaged

As you can see, we continue to evolve OneDrive as a place to access, share, and collaborate on all your files in Microsoft 365, keeping them protected and readily accessible on all your devices, anywhere.

You can stay up to date on all things OneDrive via the OneDrive Blog and the OneDrive release notes.

Check out the new and updated OneDrive documentation.

Take advantage of end-user training resources on our Office support center.

Thank you again for your support of OneDrive. We look forward to your continued feedback on UserVoice and hope to connect with you at Ignite or another upcoming Microsoft or community-led event.

Thanks for your time reading all about OneDrive,

Ankita Kirti – Product Manager – OneDrive | Microsoft
