by Scott Muniz | Aug 14, 2020 | Uncategorized

This article is contributed. See the original author and article here.

Claire: You’re watching the Microsoft US Health and Life Sciences Confessions of Health Geeks podcast, a show that offers industry insight from the health geeks and data freaks of the US Health and Life Sciences industry team. I’m your host, Claire Bonaci. On today’s episode, I talk with our HLS industry team summer intern Param about how he spent his three months at Microsoft and what surprised him most.

Claire Bonaci: So, welcome to the podcast, Param. You are our summer intern on the HLS industry team and it’s been really great working with you. So I’d love to get your perspective on the last few months here.

Param Mahajan: Thank you. Thank you for having me. A quick background about me: I’m a rising senior at Cornell, double majoring in computer science and economics, and my experience over here has been pretty great so far. I mean, after COVID hit, my internship went virtual and I’m definitely missing out on being in the cool NYC office of Microsoft. But I think the transition to a virtual internship was pretty efficient and effective. My team members were very reachable. The people I was working with always took the time to meet me and help me out with my project. So people have been very accessible and have always tried to help me out, so it’s been a phenomenal experience.

CB: That’s great. Yeah, you have a very impressive background. I know you worked at a digital services startup where you built an enterprise health cloud, and last summer you worked at PwC’s cyber division, assessing a market for a cyber threat simulator. So would you mind telling us a little bit about your experience as an intern at Microsoft, what your project was and what you worked on for the last three months?

PM: Yeah, absolutely. So my project for this summer was to make a global point of view for the space of manufacturing in pharmaceutical and life sciences, internally for the Healthcare and Life Sciences team. This was an area which was lacking focus initially and it’s a focus area for FY21, which is why I was asked to focus on it. In my project I made a global POV deck with an inventory of current assets, resources, solution areas, and partner and user success stories. I worked with the healthcare and manufacturing teams from across the US, Europe and APAC. And I also worked with the partner development teams at Microsoft to make sure that all the information is up to date. In the latter half of my project, I made a selling strategy document. The thought behind making this document was that in-field sellers for Healthcare and Life Sciences are more used to the health care domain, so this selling strategy document was made to bridge the gap between the health care domain and the intersection of the healthcare and manufacturing domains. This selling strategy document was divided into six solution areas with summarized links to either a user success story or a partnership story. And one key aspect of the selling strategy document was that a lot of our manufacturing success stories are just that: they’re only in the manufacturing domain. And we want to emphasize that, OK, we’re going to replicate that success as it pertains to the healthcare and life sciences sector as well. So there’s that distinction in the document as well. So yeah, that’s a brief summary of my project, and we’re waiting to do a final review and some field tests, and then it will go into the team’s sales pipeline.

CB: That’s great. Yeah, I’ve been a little bit a part of what you’ve been doing the last three months and it has been very impactful and very helpful. I’m really looking forward to using these documents in FY21 since I do think they’re going to be really helpful for the team overall. So, given your extensive background, why did you actually choose to work at Microsoft? Why did you choose to intern here rather than at some other company?

PM: Right. So as I said before, my background is in computer science and economics and I have always wanted to do a role which had elements of both. I like a role with client-facing and business development elements. But I also like roles which have some technical elements, like coding or technical analysis. So I think Microsoft is one of the few companies that has a breadth of roles which helps you choose and combine the elements of your background that you like and make a role that is very suitable for you. So that was a primary reason. And secondly, I think the team’s culture and the team’s values really resonated with me. I didn’t think of this when I was choosing, but I think, especially now in an era of great income inequalities and great disparities in social justice, it’s good to be working for a company which is not tone deaf, which takes these matters seriously, and which actually reflects my values. So both of those combined, I think, helped me make my decision.

CB: Those are all really great points and definitely one of the reasons that I came to Microsoft as well. So during your three months here, what surprised you the most? I know you’re fresh, coming from college, and obviously you have had that experience working at a large company. So what surprised you most here?

PM: Yeah, so I think two things stood out for me. The first one was that every company that I’ve worked at before, and every company that tries to advertise, says: oh, bring your passion into work, speak about your passion, and let us know what your passion is. But I think your passions usually stay just that, your passions outside of work. The one thing that pleasantly surprised me at Microsoft, especially in this internship, was that I spoke to my mentor and my manager about exploring some other roles which surround the industry team for Healthcare and Life Sciences. And they were like, OK, if that’s your passion, let’s include that as one of your deliverables or one of your core priorities. So I think my passions were really heard, and they were not just encouraged, but they were included in what I was supposed to do in this internship, so it’s quantifiable. I actually had an opportunity to pursue them, so that was a pleasant surprise. And it was great actually pursuing your passions as part of your job. And the second one, I think I touched on this before, was the breadth of roles. I’ve spoken to three to four people in the company that have come in with a multidisciplinary background in computer science, in health care, in business and, you know, client relationship development. And these people have picked and chosen whatever aspects of their background they like and they have made their respective roles tailor-made for themselves, and they have been doing that for the past nine years. And I think that was a big takeaway, because listening to a company say, oh, you can adjust your role, you have flexibility, that all sounds great. But having some of these people on my team, interacting with them and seeing how they actually made sometimes the seemingly most weird roles, which are now very sought after and valued and an essential part of the team, I think that was a great surprise for me and it’s very encouraging to me as well.

CB: I think you really hit on the best parts of Microsoft for sure. And you have made a huge impact already, just in the three months you’ve been here. I’m really looking forward to hopefully working with you in the future. So again, thank you so much, Param, for talking to us, explaining your internship and giving us a little bit of your insight, and we look forward to seeing you in the future.

PM: Absolutely, it was a pleasure.

CB: Thank you all for watching. Please feel free to leave us questions or comments below and check back soon for more content from the HLS industry team.

by Scott Muniz | Aug 14, 2020 | Azure, Microsoft, Technology, Uncategorized


CSS live reloading on Blazor

Jun-ichi Sakamoto is a Japanese MVP for Developer Technologies. The 10-time MVP title holder specializes in C#, ASP.NET, Blazor, Azure Web Apps, and TypeScript. Jun-ichi’s published NuGet packages – like Entity Framework Core, helper, testing tools, Blazor components, and more – have been downloaded more than 18 million times. For more on Jun-ichi, check out his Twitter @jsakamoto.

Helping the busy BDM or manager: create ToDo tasks automatically when you are mentioned in a team channel

Vesku Nopanen is a Principal Consultant in Office 365 and Modern Work and is passionate about Microsoft Teams. He helps and coaches customers to find benefits and value when adopting new tools, methods, ways of working and practices into the daily work-life equation. He focuses especially on Microsoft Teams and how it can change organizations’ work. He lives in Turku, Finland. Follow him on Twitter: @Vesanopanen

6 useful Xamarin Forms Snippets

Damien Doumer is a software developer and Microsoft MVP in development technologies, who is from Cameroon and currently based in France. He plays most often with ASP.Net Core and Xamarin, and builds mobile apps and back-ends. He often blogs, and he likes sharing content on his blog at https://doumer.me. Though he’s had to deal with other programming languages and several frameworks, he prefers developing in C# with the .Net framework. Damien’s credo is “Learn, Build, Share and Innovate”. Follow him on Twitter @Damien_Doumer.

#Microsoft Windows Admin Center and Azure Backup Management #WAC #Azure

James van den Berg has been working in ICT with Microsoft Technology since 1987. He works for the largest educational institution in the Netherlands as an ICT Specialist, managing datacenters for students. He’s proud to have been a Cloud and Datacenter Management MVP since 2011, and a Microsoft Azure Advisor for the community since February this year. In July 2013, James started his own ICT consultancy firm called HybridCloud4You, which is all about transforming datacenters with Microsoft Hybrid Cloud, Azure, AzureStack, Containers, and Analytics like Microsoft OMS Hybrid IT Management. Follow him on Twitter @JamesvandenBerg and on his blog here.

Step by Step Azure NAT Gateway – Static Outbound Public IP address

Robert Smit is an EMEA Cloud Solution Architect at Insight.de and has been a Microsoft MVP for Cloud and Datacenter since 2009. Robert has over 20 years of experience in IT, including the educational, health-care and finance industries. Robert’s past experience in the trenches of IT gives him the knowledge and insight that allows him to communicate effectively with IT professionals. Follow him on Twitter at @clusterMVP.

by Scott Muniz | Aug 14, 2020 | Azure, Microsoft, Technology, Uncategorized


The Super User feature of the Azure Rights Management service from Azure Information Protection ensures that authorized people and services can always read and inspect the data that Azure Rights Management protects for your organization. You can learn more about the super user feature and how to enable and manage it here.

One of the concerns we have heard from our customers regarding the super user management was that to be able to add a super user, one needs to be assigned the Global Administrator role and that the super user assignment is permanent until manually removed. All this adds complexity to the roles management workflow and raises security, compliance and governance questions especially at large companies with distributed IT organizations.

Azure Active Directory (Azure AD) Privileged Identity Management (PIM) is a service that enables you to manage, control, and monitor access to important resources in your organization. These resources include resources in Azure AD, Azure, and other Microsoft Online Services like Office 365 or Microsoft Intune. You can learn more about Azure PIM here.

One of the most anticipated PIM features has been the ability to manage membership of privileged Azure AD groups. You can now assign eligibility for membership or ownership of privileged access groups. You can learn more about this new capability here.

Note: As of this writing (August 2020) this feature is in preview, so it is subject to change.

So, how can this new feature help us with the problem outlined above? Let’s find out.

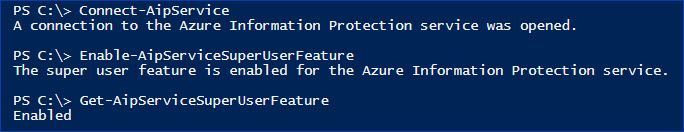

Enable the AIP Super User feature

If you have not enabled the Super User feature yet, you need to connect to the AIP service as a Global Administrator and run the following command: Enable-AipServiceSuperUserFeature

Figure 1: Enabling the AIP Super User feature


Note: Please take a moment to review our security best practices for the Super User feature.

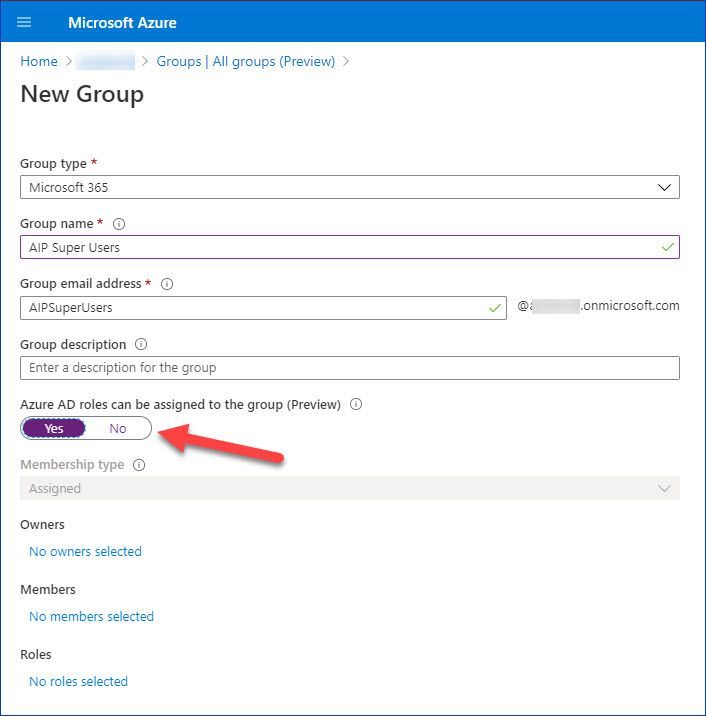

Create an Azure AD group

Before you go ahead and create a new group, you need to consider:

- AIP only works with identities which have an email address (proxyAddress attribute in Azure AD)

- As of this writing (August 2020), only new Microsoft 365 and Security groups can be created with the “isAssignableToRole” property. You can’t set or change it for existing groups.

- This new switch is only visible to Privileged Role Administrators and Global Administrators because these are the only two roles that can set the switch.

Since AIP needs a group with an email address and the group has to be newly created, this leaves us with only one option – a new Microsoft 365 group.

Figure 2: Creating a new Microsoft 365 group in the Azure Portal


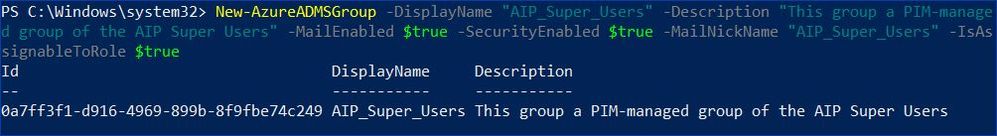

If you prefer PowerShell, you can use it too:

Figure 3: Creating a new Microsoft 365 group using PowerShell


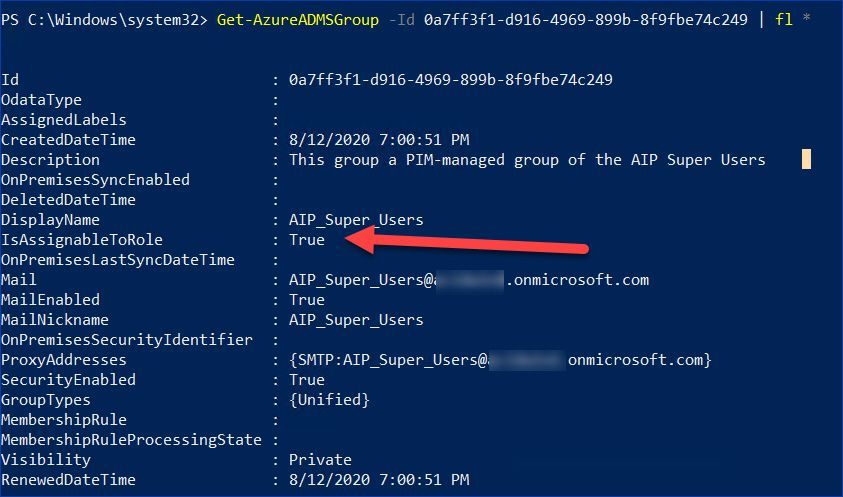

Figure 4: Reviewing properties of the new Microsoft 365 group using PowerShell


Enable PIM support for the new group

Our next step is to enable privileged access management for the group we have just created:

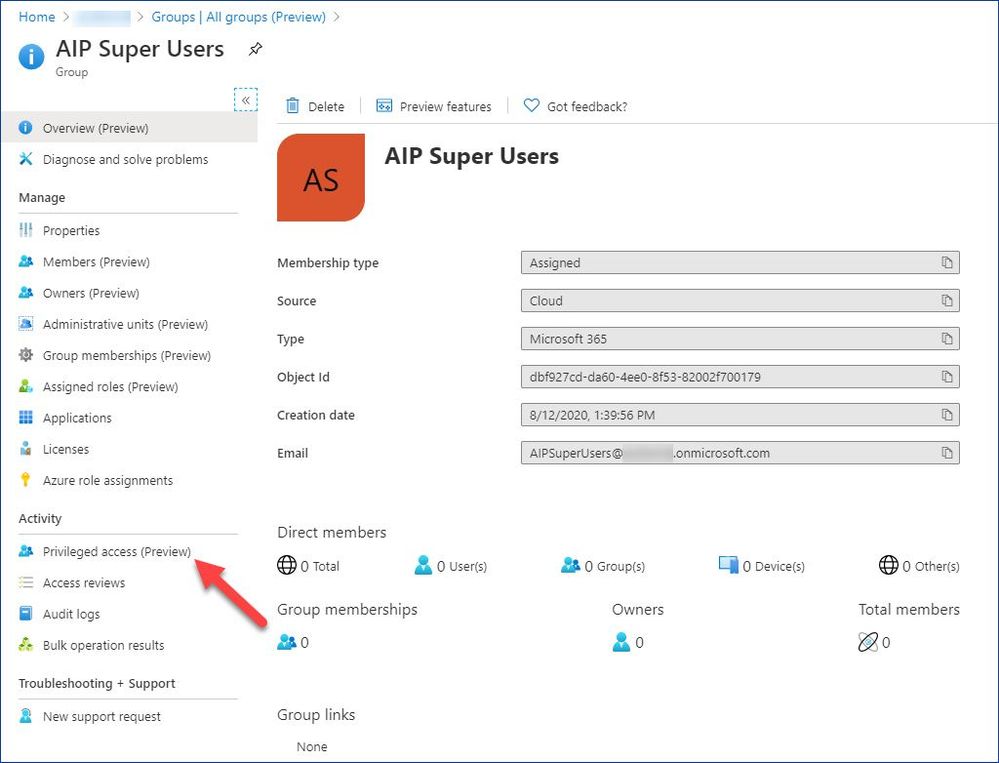

Figure 5: Accessing Privileged access configuration from the group management


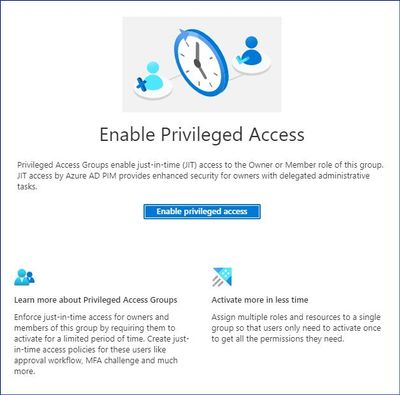

Figure 6: Enabling Privileged Access for the new group


Add eligible members to the group

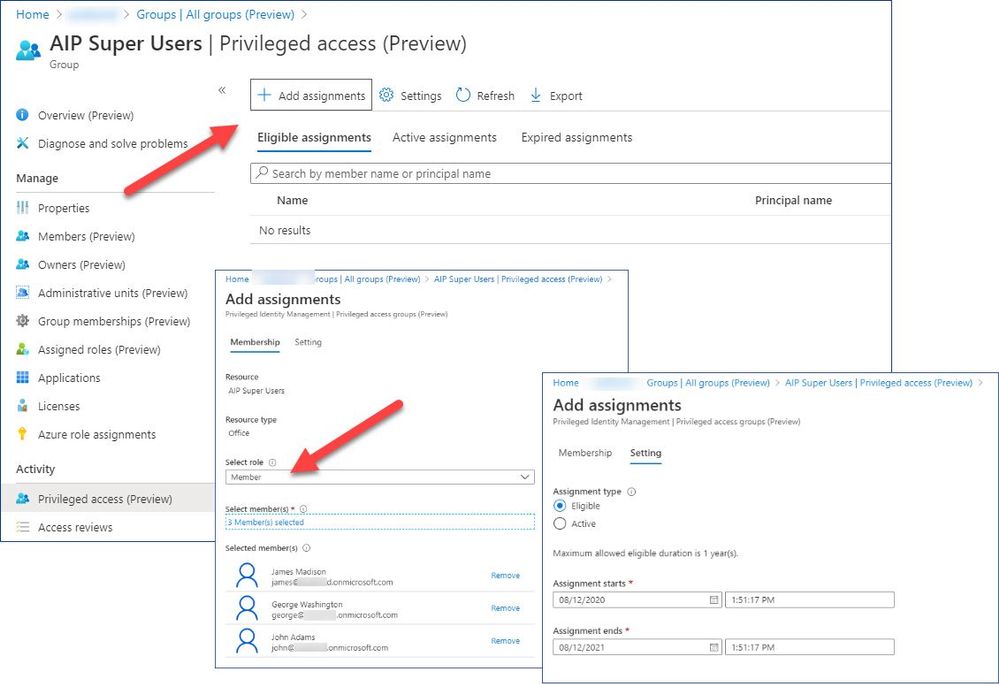

Now we can add assignments and decide who should be active or eligible members of our new group.

Figure 7: Adding assignments


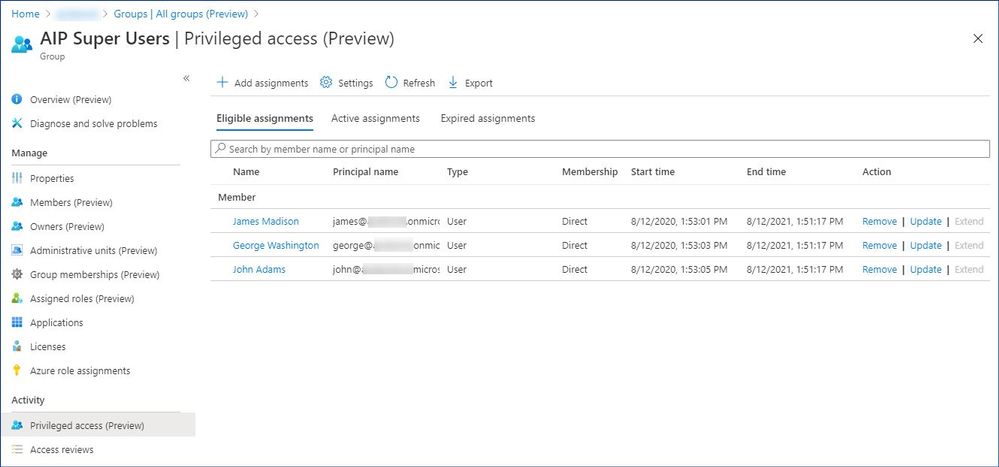

Figure 8: Reviewing a list of the eligible members


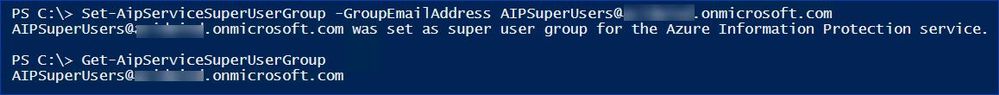

Set the new group to use as the super user group for AIP

The Set-AipServiceSuperUserGroup cmdlet specifies a group to use as the super user group for Azure Information Protection. Members of this group are then super users, which means they become an owner for all content that is protected by your organization. These super users can decrypt this protected content and remove protection from it, even if an expiration date has been set and expired. Typically, this level of access is required for legal eDiscovery and by auditing teams.

You can specify any group that has an email address, but be aware that for performance reasons, group membership is cached. For information about group requirements, see Preparing users and groups for Azure Information Protection.

Figure 9: Adding the new PIM-managed group as the super user group


Using the super user feature

Now that we have everything set up, let’s see what the end user (JIT administrator) experience is going to be.

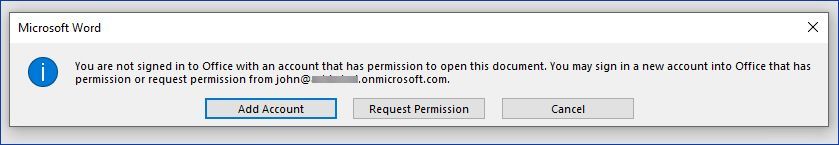

First, for the sake of testing we are going to make sure that the test user can’t open a protected document he does not normally have access to.

Figure 10: Error indicating that the user does not have access to the protected document


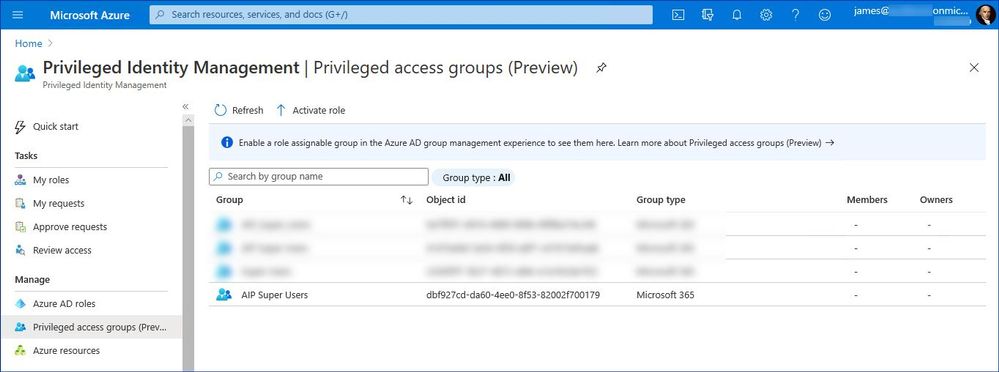

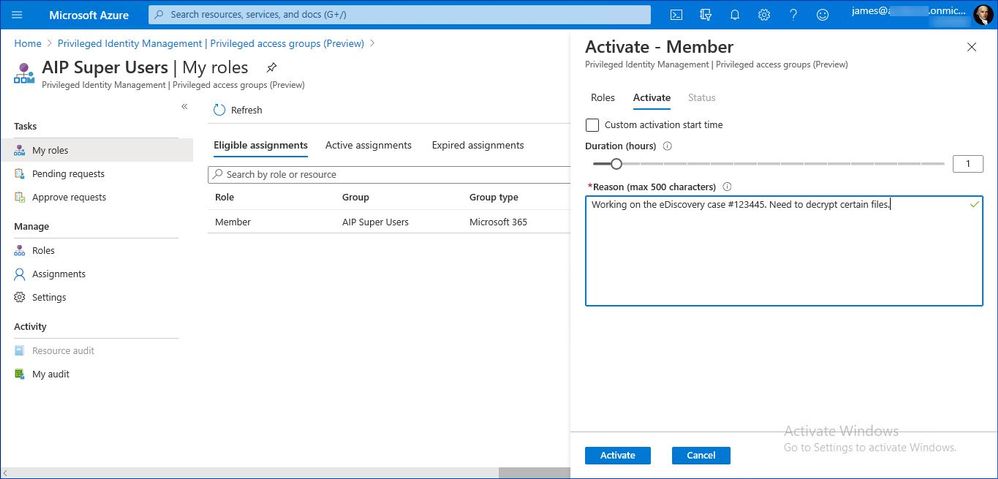

It’s time to elevate our access using Azure PIM:

Figure 11: List of the PIM-managed privileged access groups


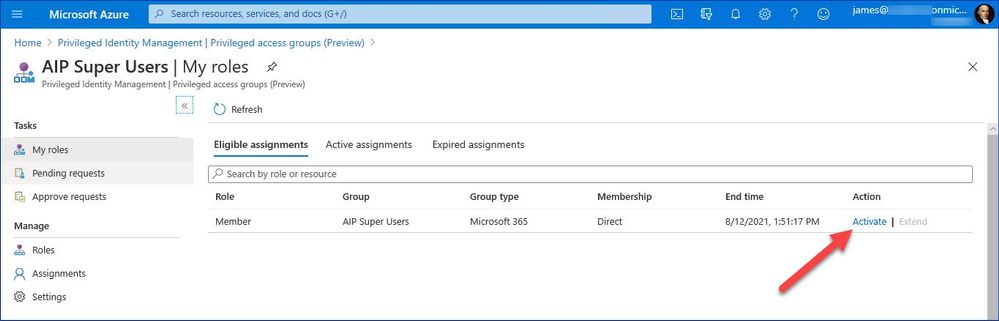

Figure 12: List of privileged groups the user is eligible for


Figure 13: Privileged group activation dialog


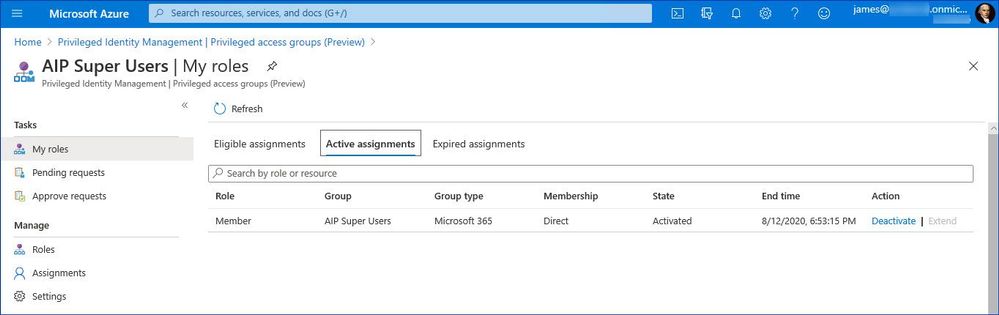

Figure 14: Verifying that the user has the privileged group activated


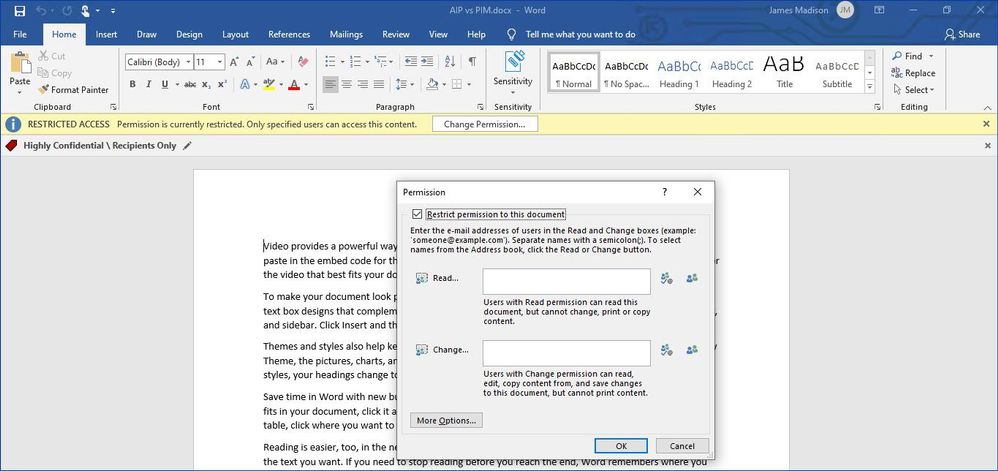

After that, the user is able to access the protected document and remove or change protection settings if needed.

Figure 15: Accessing a protected document as a super user


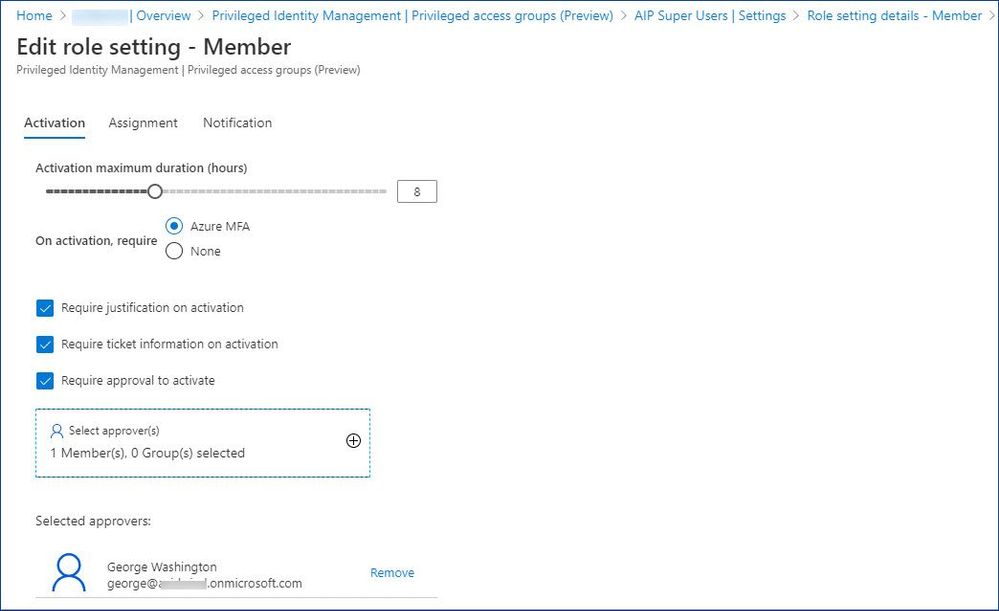

If required by your company’s policy, you can secure this elevation process even further by enforcing MFA and approval.

Figure 16: Customizing role activation options


For more information about role-assignable groups in Azure AD, see Use cloud groups to manage role assignments in Azure Active Directory.

Please also take a moment to review current limitations and known issues here.

by Scott Muniz | Aug 14, 2020 | Azure, Microsoft, Technology, Uncategorized


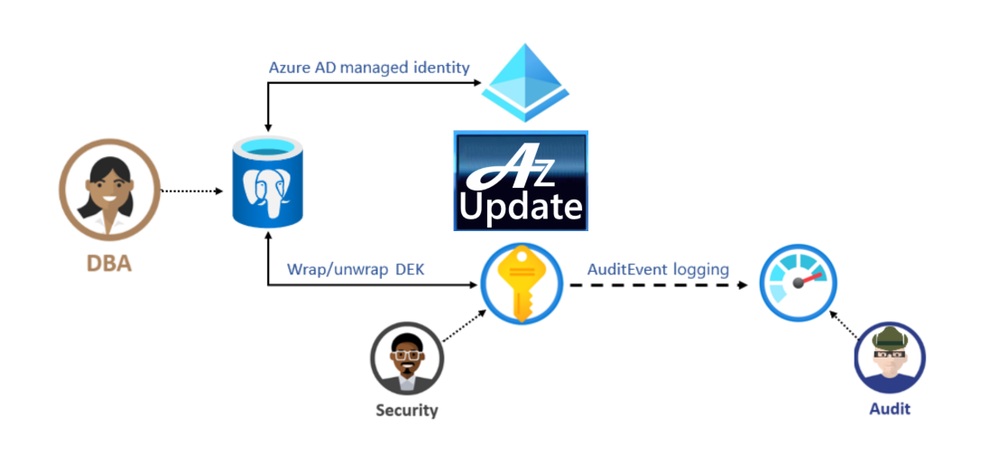

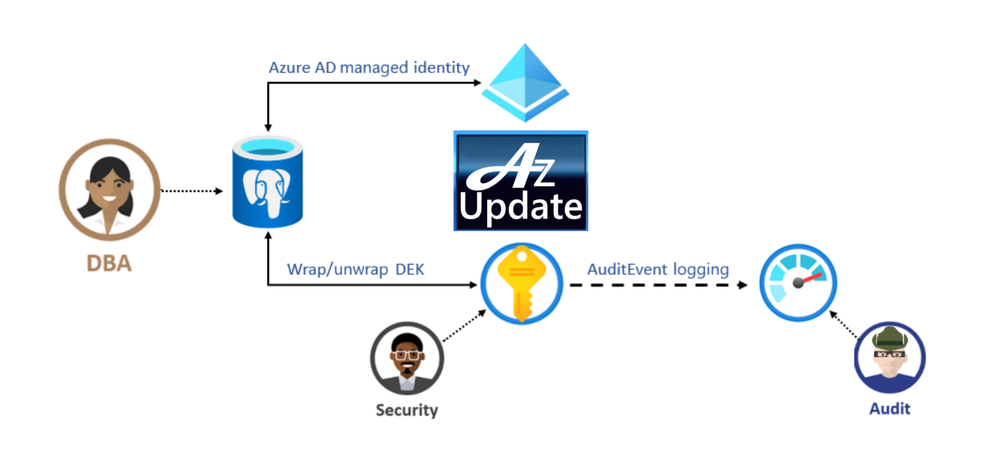

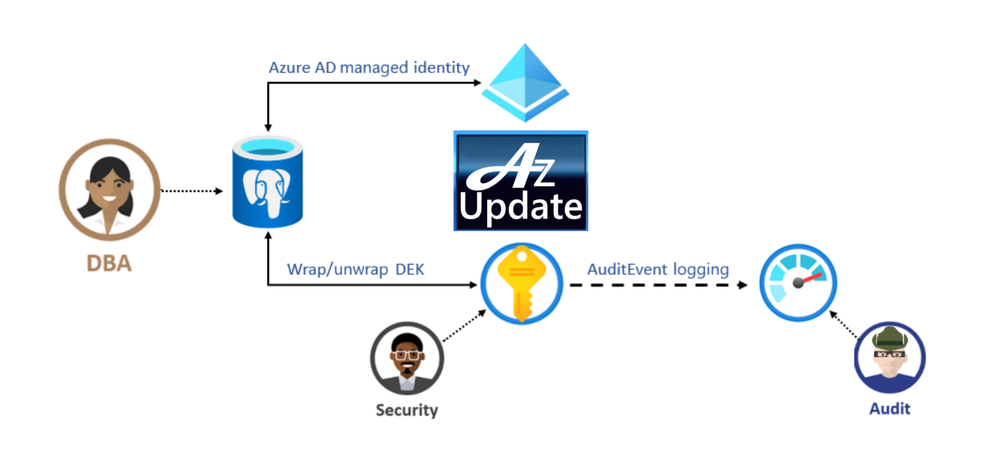

Lots of news surrounding Azure security and hybrid offerings this week. Here are a couple of the news items we are covering this week: Azure Database for PostgreSQL Data encryption enhancements announced, New Microsoft Learn Modules for Azure and Windows Server IT Pros, Exchange Server 2016 End of Mainstream Support and Azure Cloud Shell running in an isolated virtual network and tools image open sourced.

Azure Database for PostgreSQL Data encryption enhancements announced

Azure Database for PostgreSQL Infrastructure double encryption (preview)

The preview of infrastructure double encryption for Azure Database for PostgreSQL-single server is now available. Infrastructure double encryption adds a second layer of encryption using a FIPS 140-2 validated cryptographic module and a different encryption algorithm, which gives an additional layer of protection for your data at rest. The key used in infrastructure double encryption is also managed by this service. This feature is not turned on by default, as the additional layer of encryption has a performance impact.

Data encryption with customer managed keys for Azure DB for PostgreSQL-single server

Data encryption with customer-managed keys for Azure Database for PostgreSQL-single server enables you to bring your own key (BYOK) for data protection at rest. It also allows organizations to implement separation of duties in the management of keys and data. With customer-managed encryption, you are responsible for, and in full control of, a key’s lifecycle, key usage permissions, and auditing of operations on keys.

New Microsoft Learn Modules for Azure and Windows Server IT Pros

Whether you’re just starting or an experienced professional, the hands-on approach helps you arrive at your goals faster, with more confidence and at your own pace. In the last couple of days, the team at Microsoft published a couple of new Microsoft Learn modules around Azure, Hybrid Cloud, and Windows Server for IT Pros. These modules help you to learn how you can leverage Microsoft Azure in a hybrid cloud environment to manage Windows Server.

Exchange Server 2016 and the End of Mainstream Support

Microsoft has announced that Exchange Server 2016 enters the Extended Support phase of its product lifecycle on October 14th, 2020. During Extended Support, products receive only updates defined as Critical consistent with the Security Update Guide. For Exchange Server 2016, critical updates will also include any required product updates due to time zone definition changes. With the transition of Exchange Server 2016 to Extended Support, the quarterly release schedule of cumulative updates (CU) will end. The last planned CU for Exchange Server 2016, CU19, will be released in December 2020.

Azure Cloud Shell enhancements announced

Azure Cloud Shell can now run in an isolated virtual network (public preview)

Microsoft announced the public preview of Azure Cloud Shell running inside a private virtual network. This widely requested and optional feature allows you to deploy a Cloud Shell container into an Azure virtual network that you control. Once you connect to Cloud Shell, you can interact with resources within the virtual network you selected. This allows you to connect to virtual machines that only have a private IP, use kubectl to connect to a Kubernetes cluster which has locked down access, or connect to other resources that are secured inside a virtual network.

The Azure Cloud Shell tools image is now open sourced

The Cloud Shell experience contains common command line tools to manage resources across both Azure and M365 and can now be found on GitHub. You can now file issues or pull requests directly to the tools image, and any changes that occur there will be reflected in the next release of Cloud Shell. You can use this container image in other management scenarios, with many tools already installed and updated regularly, removing the concern about updating your cloud management tools.

MS Learn Module of the Week

These hands-on labs share details on how to configure an Azure environment so that Windows IaaS workloads requiring Active Directory are supported. You’ll also learn to integrate an on-premises Active Directory Domain Services (AD DS) environment into Azure.

Let us know in the comments below if there are any news items you would like to see covered in next week’s show. Az Update streams live every Friday, so be sure to catch the next episode and join us in the live chat.

by Scott Muniz | Aug 14, 2020 | Uncategorized


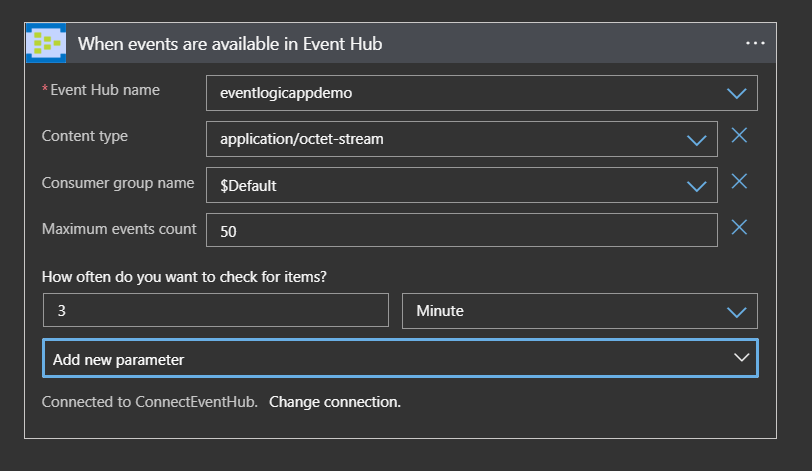

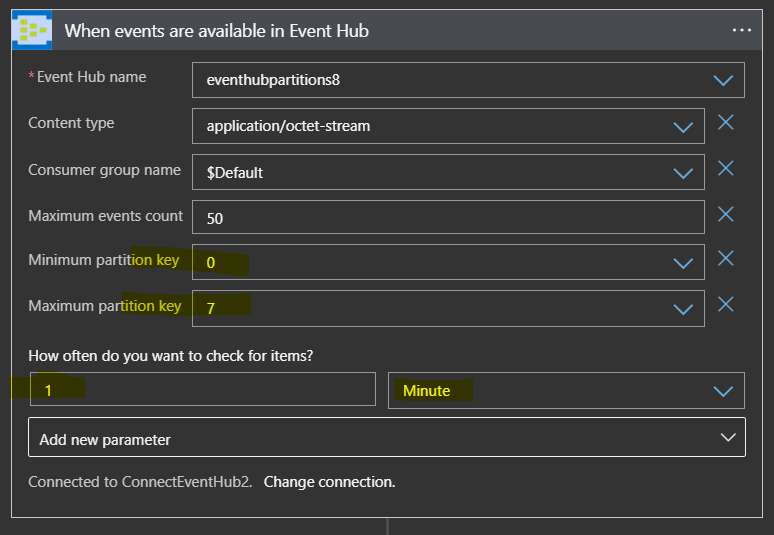

This article shows how to configure the Event Hub trigger of a Logic App and then automate workflows for the events pulled from the Event Hub.

A brief introduction to the Event Hub trigger:

All Event Hub triggers are long-polling triggers, which means that the trigger processes all the events and then waits 30 seconds per partition for more events to appear in your Event Hub. So, if the trigger is set up with four partitions, this delay might take up to two minutes before the trigger finishes polling all the partitions. If no events are received within this delay, the trigger run is skipped. Otherwise, the trigger continues reading events until your Event Hub is empty. The next trigger poll happens based on the recurrence interval that you specify in the trigger’s properties.
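As a rough illustration of the delay described above, the following Python sketch computes the longest time one poll can spend waiting when no partition has any events. The function name and its parameters are hypothetical and only model the arithmetic in the paragraph; they are not part of any Logic Apps API.

```python
def max_long_poll_wait_seconds(partition_count: int, wait_per_partition_s: int = 30) -> int:
    """Upper bound on the waiting time of one poll when every partition is empty.

    Assumes the behavior described in the text: the trigger waits
    `wait_per_partition_s` seconds on each partition in turn.
    """
    if partition_count < 1:
        raise ValueError("partition_count must be at least 1")
    return partition_count * wait_per_partition_s


# Four partitions at 30 seconds each is the two-minute delay mentioned above.
print(max_long_poll_wait_seconds(4))
```

Under the same assumption, an Event Hub with 8 partitions could wait up to 240 seconds before a poll is reported as skipped.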

- The Event Hub trigger pulls events from one partition at a time until the number reaches the “Maximum events count”.

- The poll for the next partition happens after a 30-second gap.

- If the next partition doesn’t have any data, the trigger run counts as skipped and there is another 30-second delay.

- As a whole, we cannot know in advance how long the Event Hub trigger will take to poll all the partitions. It depends on the previous poll time.

Hence, a single Logic App trigger that targets all the partitions cannot poll all the partitions of the Event Hub at the same time, as shown below.

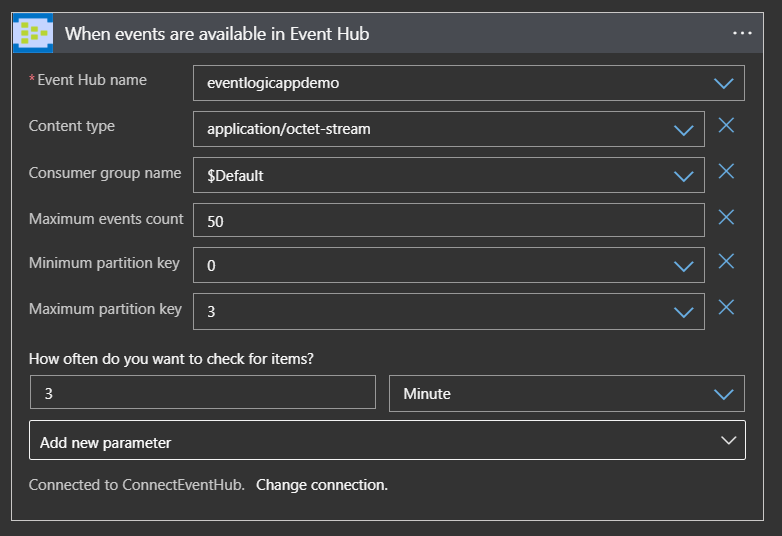

If we configure the Event Hub trigger without “Minimum Partition key” and “Maximum Partition key”, all partitions are polled by default.

If we configure the Event Hub trigger with “Minimum Partition key” and “Maximum Partition key” (taking the number of partitions into account), only the specified partitions are polled. In this case, partitions 0, 1, 2 and 3 are polled.

In either of the above cases, a single Logic App trigger polls all the partitions with a polling interval of 3 minutes.

Suppose all the event source data is pushed into a single partition (say partition 3). It can then take more than 12 minutes to pull the data, because the trigger has to work through the other partitions as well.

Note: If data is pushed into the Event Hub without targeting a partition, we cannot assume that it is distributed equally across all the partitions.

In a real-time scenario, we may need to process the events from all the partitions of the Event Hub at the same time.

For this example, we again use an Event Hub with only 4 partitions.

We know that a single Logic App Event Hub trigger polls the partitions in round-robin fashion, one partition per trigger run, so it cannot satisfy this use case. Hence, we use 4 different Logic Apps, each pointing to one Event Hub partition.

Each Logic App can point to a different partition, as shown in the image below.

If the logic for processing the events is common, we can put it in a common Logic App that does the actual processing. The 4 Logic Apps then pull the data and call the common Logic App for processing. This helps with Logic App definition management and also reduces complexity.

The common Logic App can be called from all the other Logic Apps.

The above design can be scaled in various ways based on the number of events and Event Hub partitions.

For example:

Consider a scenario where more events are published and you have to add more partitions to the Event Hub. For now, we use 8 partitions.

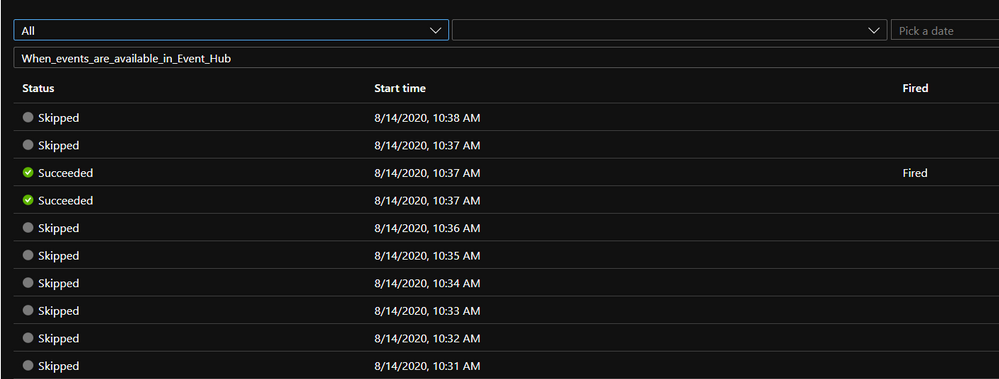

If we have a single Logic App polling all the partitions from 0 to 7, and it is configured to poll the Event Hub every 3 minutes, it takes 24 minutes and a total of 8 trigger runs to poll all the data.

Similarly, if the polling frequency is set to 1 minute, it takes at least 8 minutes to poll all the partitions.
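The figures quoted in this article (12, 24 and 8 minutes) all follow from the same simple model: one partition is read per trigger run, so a full pass takes the partition count times the recurrence interval. The Python sketch below only reproduces that arithmetic; the function name is hypothetical and the model is the article's simplification, not a guarantee about actual trigger timing.

```python
def full_cycle_minutes(partition_count: int, recurrence_minutes: float) -> float:
    """Time for a single trigger to visit every partition once.

    Assumes the simplified model used in the text: one partition is
    polled per trigger run, and runs are `recurrence_minutes` apart.
    """
    if partition_count < 1 or recurrence_minutes <= 0:
        raise ValueError("partition_count and recurrence_minutes must be positive")
    return partition_count * recurrence_minutes


# The three configurations discussed in the article.
for partitions, interval in [(4, 3), (8, 3), (8, 1)]:
    minutes = full_cycle_minutes(partitions, interval)
    print(f"{partitions} partitions, polled every {interval} min: {minutes} min per full pass")
```

Doubling the partition count without changing the recurrence doubles the time between two visits to the same partition, which is why the design needs more Logic Apps as partitions are added.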

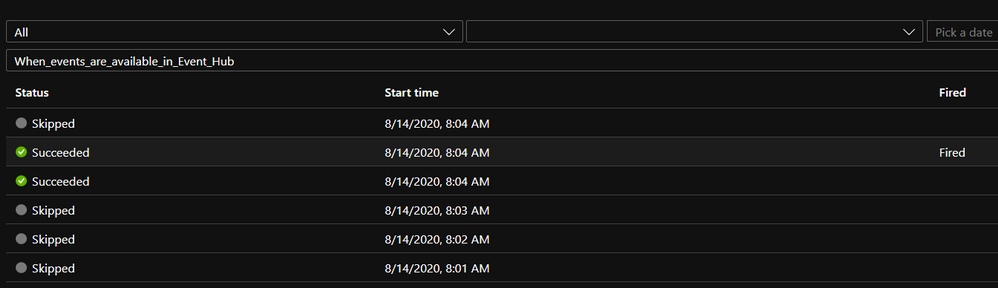

Trigger history for reference:

By the time the trigger polls the partition that holds the most data, the chance of overloading the Event Hub is even higher, because events are not pulled from the Event Hub at the rate at which they arrive in the partition.

In such scenarios, we can add a few more Logic Apps, each polling fewer partitions, which in turn increases the polling frequency per partition.

For example, we can clone 3 more Logic Apps, keeping the main logic for processing/automation of the events in a child Logic App.

Choose the Clone option under Overview of the Logic App and create 3 more Logic Apps.

Then change the properties of each Logic App so that it polls 2 partitions. For example, Logic App-1 polls partitions 0 and 1, Logic App-2 polls partitions 2 and 3, Logic App-3 polls partitions 4 and 5, and Logic App-4 polls partitions 6 and 7.
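The partition assignment above can be worked out for any number of partitions and Logic Apps. The following Python sketch is one way to compute the minimum and maximum partition values for each Logic App; the helper name is hypothetical, and the contiguous, evenly sized split is an assumption made for illustration rather than a requirement of the trigger.

```python
from typing import List, Tuple


def partition_ranges(partition_count: int, app_count: int) -> List[Tuple[int, int]]:
    """Split partitions 0..partition_count-1 into contiguous (min, max) ranges,
    one range per Logic App, keeping the range sizes as even as possible."""
    if app_count < 1 or partition_count < app_count:
        raise ValueError("need at least one partition per Logic App")
    base, extra = divmod(partition_count, app_count)
    ranges = []
    start = 0
    for index in range(app_count):
        # The first `extra` apps take one additional partition each.
        size = base + (1 if index < extra else 0)
        ranges.append((start, start + size - 1))
        start += size
    return ranges


# 8 partitions across 4 Logic Apps, as in the example above.
for app_number, (low, high) in enumerate(partition_ranges(8, 4), start=1):
    print(f"Logic App-{app_number}: Minimum Partition key = {low}, Maximum Partition key = {high}")
```

With 4 partitions and 4 Logic Apps the same helper yields one partition per Logic App, which matches the earlier 4-partition design.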

Since the major logic goes into the child (common) Logic App, maintenance work is drastically reduced. Any business-related changes can be made in the child Logic App.

Reference : https://docs.microsoft.com/en-us/azure/connectors/connectors-create-api-azure-event-hubs
