by Scott Muniz | Jul 28, 2020 | Uncategorized

This article is contributed. See the original author and article here.

Azure Cognitive Services provides a suite of AI services and APIs that let developers work with AI technologies without deep expertise in machine learning. This post covers how to use two of these services together, Custom Vision and the Bing Image Search APIs, along with a .NET Core console application, to rapidly prototype Custom Vision models.

Custom Vision is a service that lets users build and deploy customized computer vision models using their own image datasets. Training is simplified because Azure manages the machine learning under the hood; the user only needs to supply the image data for the model. A separate user interface is also provided as part of the Custom Vision service, which makes it simple to understand and use.

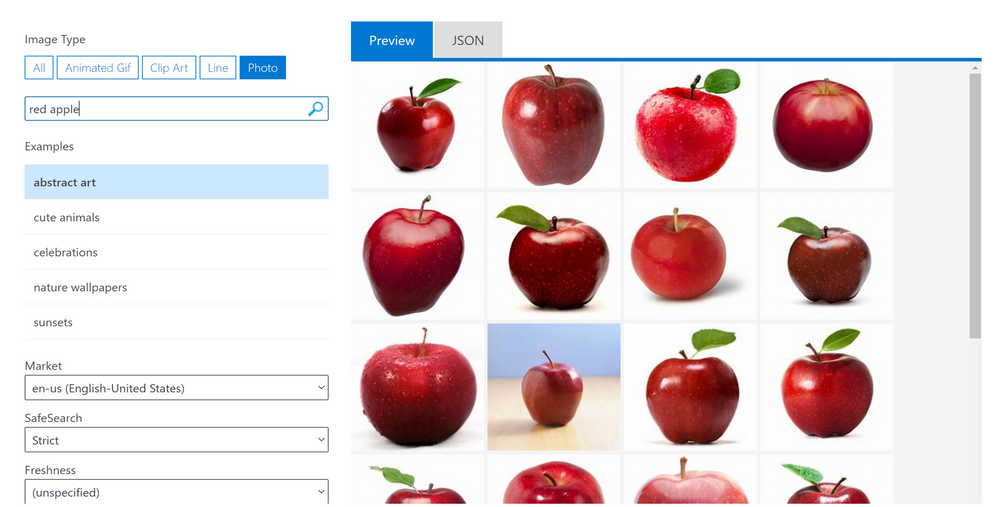

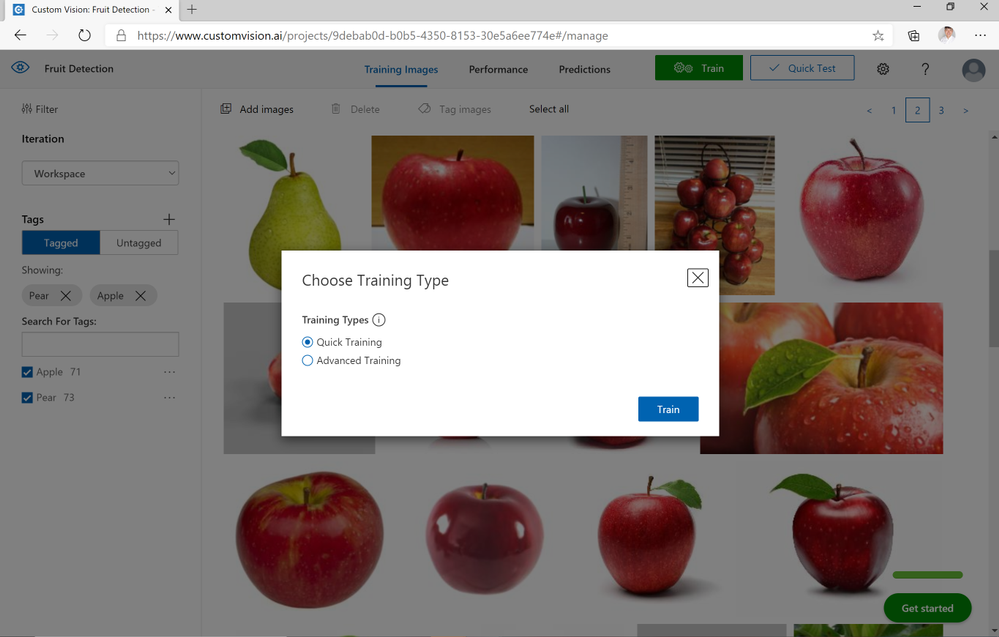

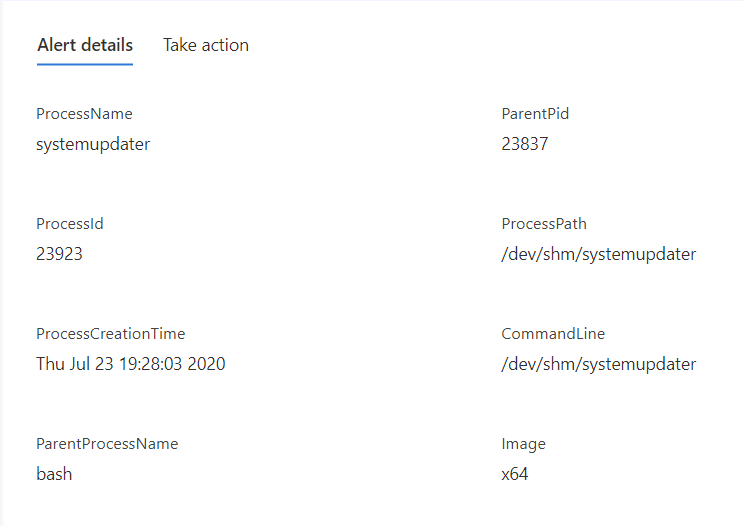

The Bing Image Search APIs are a service that executes a search query and returns images, much like an image search on the web version of Bing Image Search. Query filters can be applied to refine the results, for example filtering for specific colours or selecting an image type (photograph, clipart, GIF). The image below shows the Bing Image Search APIs through a visual interface where users can try their own search terms and apply query filters such as image type and content freshness.

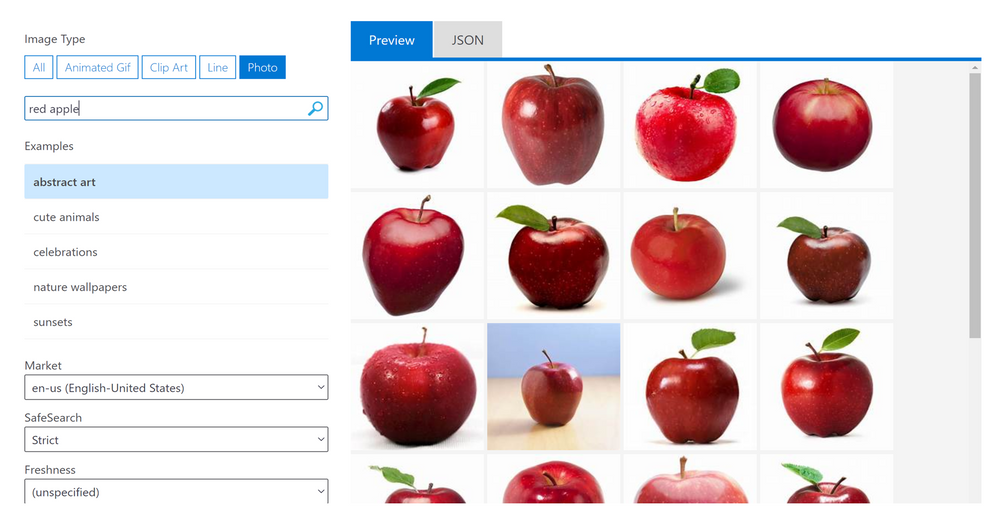
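As a rough illustration of how such a filtered query might be assembled in code, the sketch below builds the request URL and headers without sending anything. The endpoint value, the function name `build_image_search_request`, and the placeholder key are assumptions made for illustration; the filter names (`imageType`, `color`, `freshness`) and the `Ocp-Apim-Subscription-Key` header follow the Bing Image Search v7 reference, but check them against the documentation for your own resource.

```python
from urllib.parse import urlencode

# Assumed endpoint for Bing Image Search v7; use the value shown for your own resource.
BING_IMAGES_ENDPOINT = "https://api.bing.microsoft.com/v7.0/images/search"


def build_image_search_request(key, term, count=50, offset=0,
                               image_type=None, color=None, freshness=None):
    """Return (url, headers) for an image search. No network call is made here."""
    params = {"q": term, "count": count, "offset": offset}
    # Optional query filters are added only when the caller sets them.
    if image_type:
        params["imageType"] = image_type  # e.g. "Photo", "Clipart", "AnimatedGif"
    if color:
        params["color"] = color           # e.g. "Red", "Monochrome"
    if freshness:
        params["freshness"] = freshness   # e.g. "Day", "Week", "Month"
    url = f"{BING_IMAGES_ENDPOINT}?{urlencode(params)}"
    headers = {"Ocp-Apim-Subscription-Key": key}
    return url, headers


# Hypothetical usage: photographs of red apples, first page of 50 results.
url, headers = build_image_search_request(
    "<your-key>", "Red Apple", image_type="Photo", color="Red"
)
print(url)
```

The returned pair can be passed to any HTTP client. Keeping request construction separate from the network call makes it easy to experiment with different filters and inspect exactly what would be sent.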

To create a Custom Vision model, it is recommended to have at least 50 images for each label before beginning to train. Collecting them can be time-consuming, especially when you have no pre-existing datasets and want to prototype multiple models. By combining the Bing Image Search APIs with the Custom Vision REST APIs, populating a Custom Vision project with tagged images can be accelerated, and once all the images are in the project and tagged, a model can be trained immediately. The flow is captured in a .NET Core console application that can easily be altered to test different Bing Image Search terms and query filters, to understand what results are returned and how to further improve the model. The diagram below shows the flow between the components of this application.

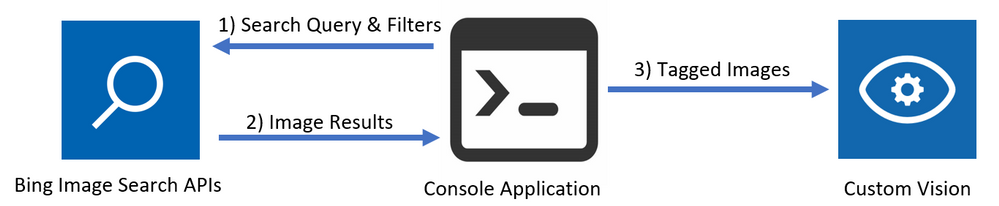

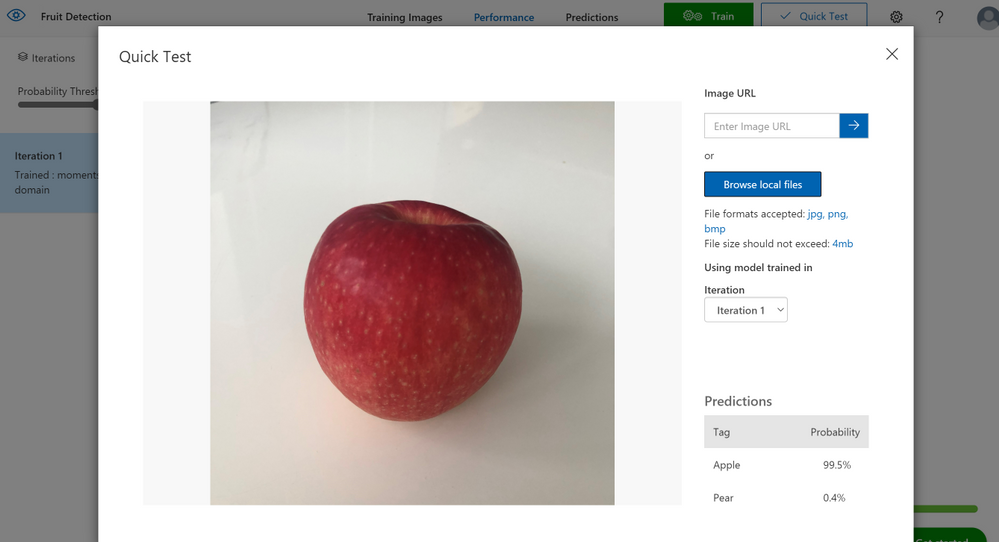
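The second half of that flow, sending image URLs to Custom Vision with a tag attached, could look roughly like the sketch below. It is written in Python for illustration only and is not the console application's code. The helper names `build_upload_batches` and `upload_url`, the API version in the route, and the sample URLs are assumptions; the request body shape and the 64-image batch limit follow the Custom Vision training API's create-images-from-URLs operation as I understand it, so verify them against the current reference.

```python
MAX_IMAGES_PER_BATCH = 64  # Documented per-call limit for creating images from URLs.


def build_upload_batches(image_urls, tag_id, batch_size=MAX_IMAGES_PER_BATCH):
    """Split image URLs into request bodies, each tagging its images with tag_id."""
    batches = []
    for start in range(0, len(image_urls), batch_size):
        chunk = image_urls[start:start + batch_size]
        batches.append({
            "images": [{"url": u} for u in chunk],
            "tagIds": [tag_id],
        })
    return batches


def upload_url(endpoint, project_id):
    """Assumed route for the create-images-from-URLs call (version may differ)."""
    base = endpoint.rstrip("/")
    return f"{base}/customvision/v3.3/training/projects/{project_id}/images/urls"


# Hypothetical usage: 100 search results tagged as "Apple".
urls = [f"https://example.com/apple-{i}.jpg" for i in range(100)]
batches = build_upload_batches(urls, "<apple-tag-id>")
print([len(b["images"]) for b in batches])  # [64, 36]
```

Each body would be sent as JSON in a POST to `upload_url(...)` with the training key in a `Training-Key` header. Batching matters because a single search can return more images than one call accepts.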

After creating the necessary resources on Azure, open the console application of this solution to specify the tag name and the search term to be queried in Bing Image Search. In this example, two subjects are set: the first with a tag name of “Apple” and a search term of “Red Apple”, and the second with a tag name of “Pear” and a search term of “Green Pear”. The console application is then run and the user supplies the required values, such as the resource keys. The application then carries out each search query and populates the specified Custom Vision project with the results. Once the application has finished running, the Custom Vision project should be populated with tagged images of red apples and green pears. To train the model, the user can select between two options: quick training and advanced training.

- Quick training trains the model in a few minutes, which is good for quick testing of simpler models.

- Advanced training lets the user allocate virtual machines for a selected amount of time to train a more in-depth model.

In this example, within 2 minutes of selecting the quick training option, a model for distinguishing between apples and pears had been trained. To test the model, I used a photo of an apple taken at home, which was correctly identified as an apple. To expand the model to include more fruit, only very minor changes to the code are needed. Alternatively, changing the count and offset values when running the console application adds more images of apples and pears to the project, so that an updated model can be retrained.
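To make the count and offset idea concrete, here is a minimal sketch of how successive pages of results could be planned. The function name `page_offsets` is hypothetical, and the sketch assumes the simple scheme of advancing the offset by the page size; the Bing response also carries a `nextOffset` field that is generally the safer value to use for the next page, since it helps avoid duplicate results.

```python
def page_offsets(total_images, count):
    """Yield (offset, count) pairs that together cover total_images results."""
    if count <= 0:
        raise ValueError("count must be positive")
    offset = 0
    while offset < total_images:
        # The final page may be smaller than the requested page size.
        yield offset, min(count, total_images - offset)
        offset += count


# Hypothetical usage: plan requests for 120 images, 50 per request.
pages = list(page_offsets(120, 50))
print(pages)  # [(0, 50), (50, 50), (100, 20)]
```

Running the application a second time with an offset past the images already collected is what lets the project grow without re-adding the same pictures.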

More detailed steps on running this solution are available in the Readme of the GitHub repository. The solution can be used to classify not just apples and pears but any other examples you have in mind; I have also used it to create a Custom Vision model that classifies five or more different car models. At the time of writing, this solution can be run on the free tiers of both Custom Vision and the Bing Image Search APIs, so please feel free to try it in your own environment.

Link to GitHub Repository

by Scott Muniz | Jul 28, 2020 | Uncategorized


To support evolving industry security standards, and continue to keep you protected and productive, Microsoft will retire content that is Windows-signed for Secure Hash Algorithm 1 (SHA-1) from the Microsoft Download Center on August 3, 2020. This is the next step in our continued efforts to adopt Secure Hash Algorithm 2 (SHA-2), which better meets modern security requirements and offers added protections from common attack vectors.

SHA-1 is a legacy cryptographic hash that many in the security community believe is no longer secure. Using the SHA-1 hashing algorithm in digital certificates could allow an attacker to spoof content, perform phishing attacks, or perform man-in-the-middle attacks.

Microsoft no longer uses SHA-1 to authenticate Windows operating system updates due to security concerns associated with the algorithm, and has provided the appropriate updates to move customers to SHA-2 as previously announced. Accordingly, beginning in August 2019, devices without SHA-2 support have not received Windows updates. If you are still reliant upon SHA-1, we recommend that you move to a currently supported version of Windows and to stronger alternatives, such as SHA-2.
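For readers who want to see the two hash families side by side, the sketch below computes a SHA-1 and a SHA-256 (a member of the SHA-2 family) digest with Python's standard `hashlib` module. It only illustrates the difference in digest size; it is not how Windows verifies signed content, and the helper name `digest_hex` and the sample input are illustrative assumptions.

```python
import hashlib


def digest_hex(data: bytes, algorithm: str = "sha256") -> str:
    """Return the hex digest of data using the named hashlib algorithm."""
    return hashlib.new(algorithm, data).hexdigest()


sample = b"abc"
sha1_hex = digest_hex(sample, "sha1")      # legacy, 160-bit digest
sha256_hex = digest_hex(sample, "sha256")  # SHA-2 family, 256-bit digest

# Each hex character encodes 4 bits.
print(len(sha1_hex) * 4, len(sha256_hex) * 4)  # 160 256
```

The longer digest is only part of the story: SHA-2 is preferred mainly because practical collision attacks against SHA-1 have been demonstrated, which is why SHA-256 is the usual choice when checking that a downloaded file matches a published hash.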

by Scott Muniz | Jul 28, 2020 | Uncategorized


At SWOOP Analytics, a Microsoft Partner, we’ve benchmarked hundreds of organizations using Yammer and Microsoft Teams, and millions of interactions world-wide, to know exactly what “good” looks like. We have the data to see what makes a highly productive team on Teams and a collaborative organization on Yammer.

Our Teams benchmarking found that the best-performing, highly productive teams use Microsoft Teams for their day-to-day work: collaborating within their own team, with people they already know.

But what happens when you need to reach outside your team? When you need answers, knowledge or inspiration from a wider group?

Chances are, someone in your organization has the answer to your issue because they have encountered the same thing. If you can find that person and tap into their knowledge, your job could be done in a fraction of the time, probably at a fraction of the cost.

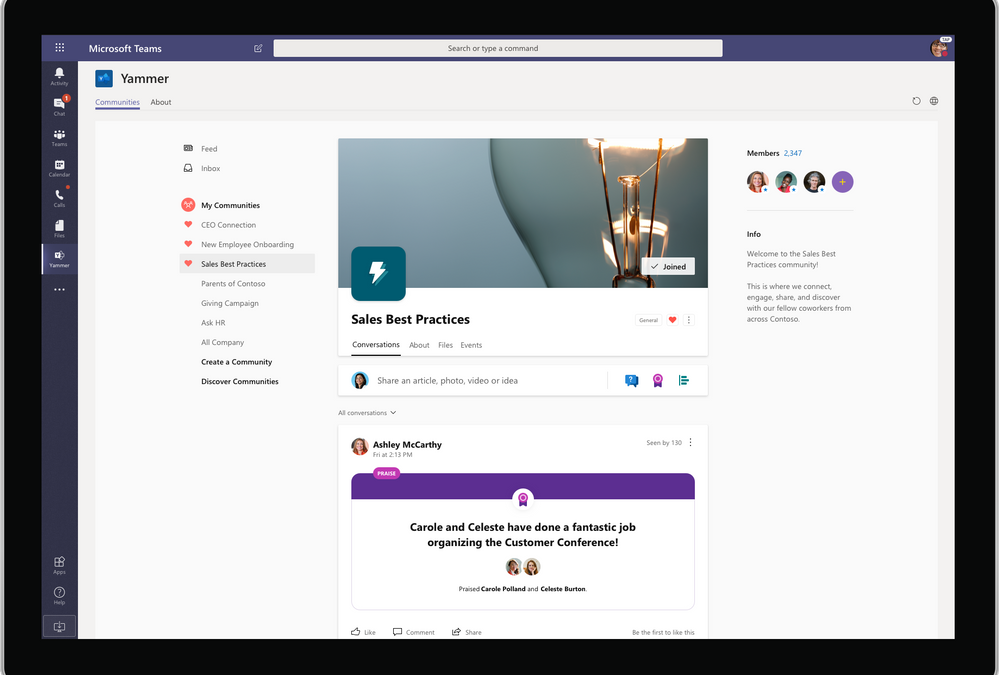

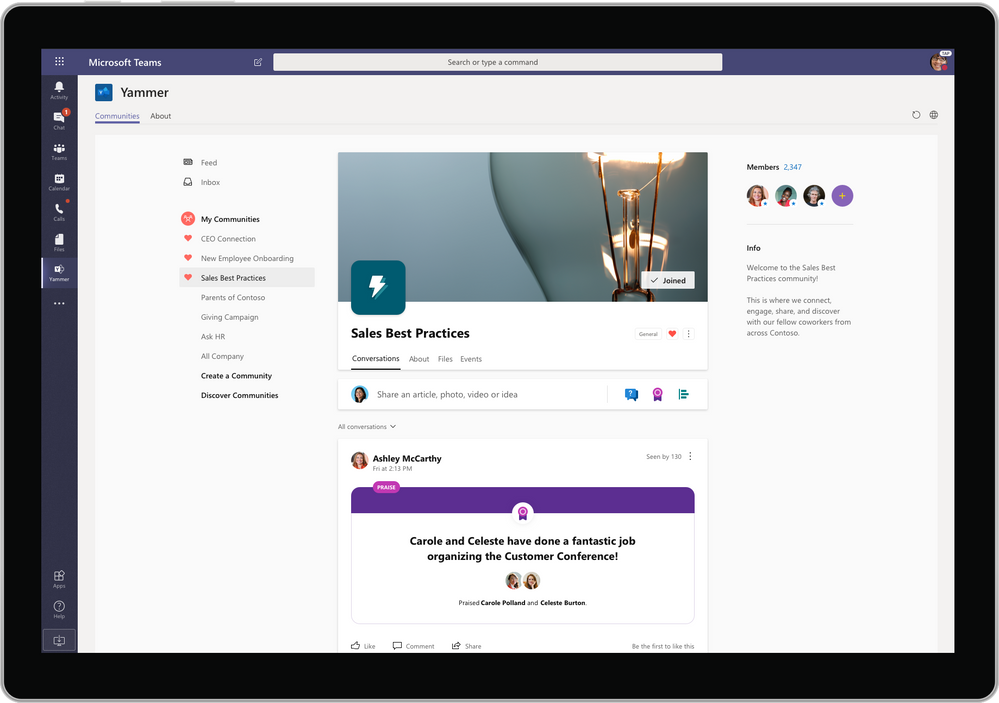

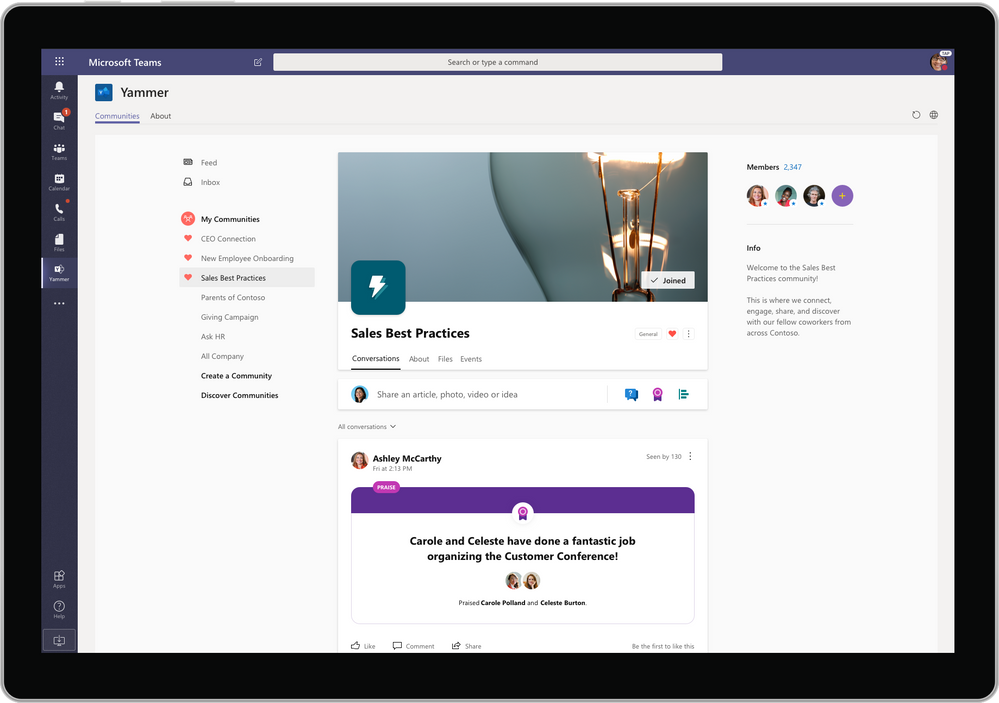

Microsoft has just made it so much easier to find the people and the knowledge you’re looking for, without interrupting your day-to-day work, by introducing the Communities app to Microsoft Teams.

When the Communities app is installed on Teams, it delivers Yammer inside Teams. You can navigate to all the Communities in your Yammer network and participate in conversations, join communities, watch live events, share announcements, pin conversations and mark best answers just as you would in Yammer.

Yammer and Teams – better together

Yammer is for open knowledge sharing. A place to ask questions and find answers, to access knowledge across the entire organization, not just within your team.

Combining Microsoft Teams and Yammer with the Communities app means there is no more toggling between apps or confusion about where to post. Just add the Communities app to your Teams page by searching for “Communities” in Apps on the left-hand menu bar. You’ll start discovering communities, knowledge and conversations alongside your projects, chat and meetings.

“Collaboration always involves interacting with people you know, like the people in your team,” said SWOOP Analytics CEO Cai Kjaer.

“This is the core value proposition for Teams. But often you need to reach outside your core team to find answers. In this case, it’s all about collaborating with people you don’t yet know and this is one of Yammer’s superpowers.

“Together, Teams and Yammer are a formidable ally for business performance, and since most of our daily work is happening within a team, it makes a lot of sense to make Yammer available in the Teams mobile app.”

Communities app in Teams allows instant all-company communication

If teams are to truly perform online, their size should be limited to fewer than 10 members, says SWOOP’s Chief Scientist Dr Laurence Lock Lee. Following this rule, which is backed by decades of research, Microsoft Teams becomes the primary place where staff log in when they come to work. Adding the Communities app to Teams allows teams to operate while remaining connected to the entire organization, something that has become even more important during times of crisis, when leaders need quick and clear communication with all staff.

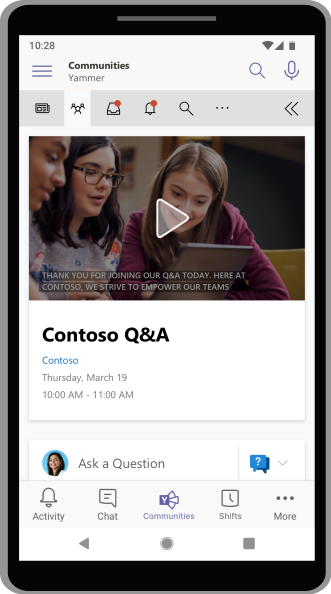

And now, the Communities app in the Teams mobile app allows employees to put out a call for assistance in Yammer during their day-to-day work, without fear of losing their place in their regular workflow.

Do you behave differently on Teams and Yammer?

SWOOP Analytics conducted research using real-time data to explore whether the increased use of Teams resulted in a drop in Yammer usage.

In terms of activity, we found a high correlation between individual activity levels on Yammer and Teams: if you are highly active on Yammer, you are likely to be highly active on Teams too, and vice versa. Overall, our research found that more collaboration happens when both tools are utilised.

With data showing that using Teams and Yammer together increases collaboration, making the Yammer Communities app easily accessible in Teams seems the most logical step. SWOOP is also following Microsoft’s lead by integrating SWOOP for Yammer and SWOOP for Teams into a single navigable dashboard, which can also be accessed as an app within Microsoft Teams.

The new-look Yammer has also changed the name of “Groups” to “Communities”, something Kjaer says is a better reflection of the purpose of Yammer.

“Yammer was always about connecting people from across the enterprise, and re-framing groups as communities now makes that purpose even more clear,” he said.

The new-look Yammer clearly demonstrates Microsoft’s commitment to the Yammer platform and the importance of an enterprise social network to run alongside day-to-day team work in Teams.

The ability to embed the new-look Yammer in Teams and respond to a post via Outlook on your phone or your tablet shows Microsoft’s commitment to make collaboration adaptable, flexible and focused by breaking down the barriers between apps.

About SWOOP

SWOOP provides collaboration analytics for Microsoft Teams and Yammer to give you insights to measure and improve your digital workplace relationships. SWOOP analyses the content and relationships in Teams and Yammer to help you adapt behaviours to reach better business outcomes and make informed decisions about collaboration effectiveness.

by Scott Muniz | Jul 28, 2020 | Uncategorized


Today, we are excited to announce that the Microsoft Family Safety app, designed to help you protect your family’s digital and physical safety, is available to download on iOS and Android.

The post Now available: Microsoft Family Safety app—helping you protect what matters most appeared first on Microsoft 365 Blog.


by Scott Muniz | Jul 28, 2020 | Uncategorized


This blog post was co-authored by Aditya Joshi, Senior Software Engineer, Enterprise Protection and Detection.

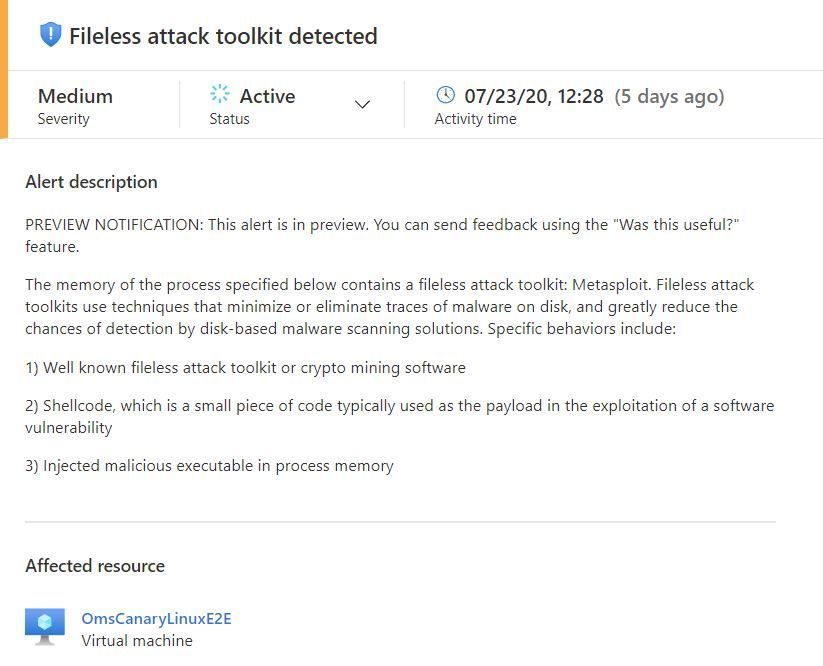

The Security Center team is excited to share that the Fileless Attack Detection for Linux Preview, which we announced earlier this year, is expanding to include all Azure VMs and non-Azure machines enrolled in Azure Security Center Standard and Standard Trial pricing tiers. This solution periodically scans your machine and extracts insights directly from the memory of processes. Automated memory forensic techniques identify fileless attack toolkits, techniques, and behaviors. This detection capability identifies attacker payloads that persist within the memory of compromised processes and perform malicious activities.

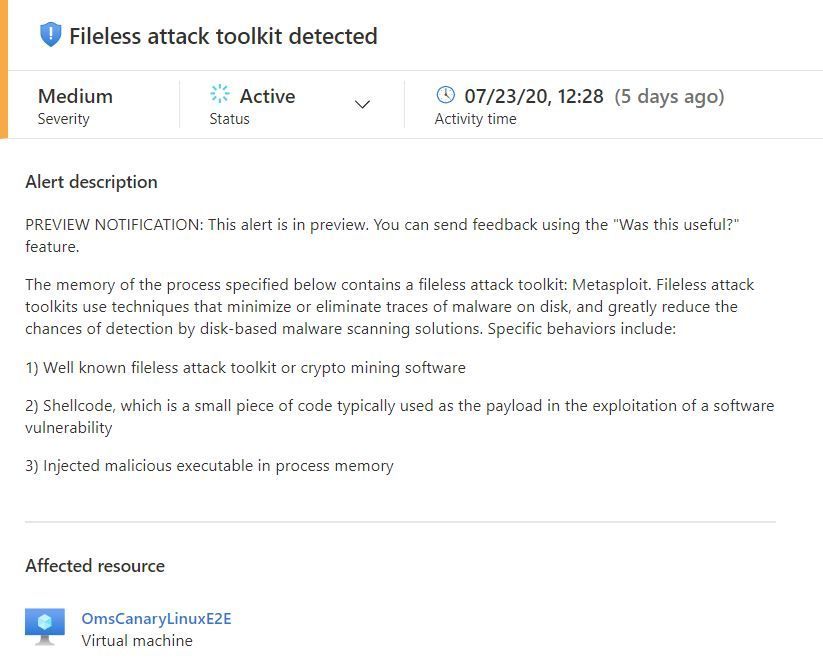

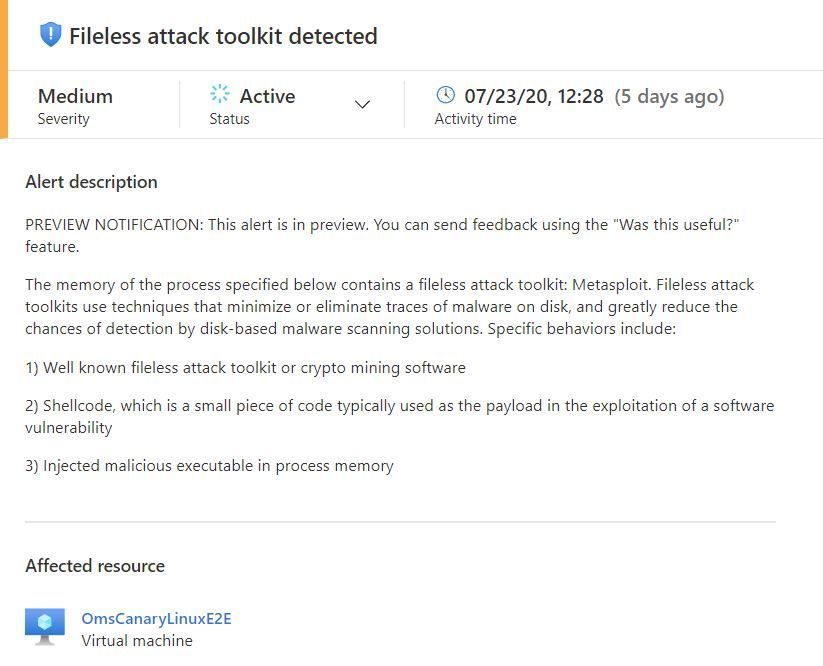

See below for an example fileless attack from our preview program, a description of detection capabilities, and an overview of the onboarding process.

Real-world attack pattern from our preview program

We continue to see the exploitation of vulnerabilities and multi-staged attack payloads with shellcode and dynamic code visible only in memory. In this example, a customer’s VM is running shellcode-based malware and a cryptominer as root within a compromised docker container.

Here are the steps of the attack:

- The attacker uses an unauthenticated, network-facing service running inside a docker container to achieve code execution as root inside the container.

- The attacker downloads and executes a file and deletes the file to deter disk-based detection, leaving only the in-memory payload.

- The attacker achieves persistence by adding a crontab task to run a bash shell script to download a 2nd stage payload. The 2nd stage payload is a packed file containing the XMRIG cryptocurrency miner.

- The attacker unpacks and runs XMRIG within the container. XMRIG persists in memory and connects to a miner pool to start crypto mining.

- The attacker deletes the on-disk packed file so that the crypto mining activity is only observable in-memory.

Detecting the attack

In the attack above, fileless attack detection, running on the docker host, uncovers the compromise via in-memory analysis. It starts by identifying dynamically allocated code segments, then scans each code segment for specific behaviors and indicators.

The first payload’s code segment contains shellcode with references to syscalls used for creating new tasks, getting process information, and process control. The detected syscalls include fork, getpid, gettid and rt_sigaction.

The second payload’s code segment contains an injected executable consisting of a well-known crypto mining toolkit: XMRIG. Additionally, fileless attack detection identifies the active network connection to the crypto mining pool.
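The first step described above, finding dynamically allocated code segments, can be loosely approximated on Linux by looking for executable memory mappings that have no backing file. The sketch below parses text in the format of `/proc/<pid>/maps`. It is an illustration of the general idea only, not Security Center's implementation; the function name and the sample mapping lines are made up for the example.

```python
def anonymous_executable_regions(maps_text):
    """Return (start, end, perms) for executable mappings with no backing file."""
    regions = []
    for line in maps_text.splitlines():
        # Format: address-range perms offset dev inode [pathname]
        fields = line.split(None, 5)
        if len(fields) < 5:
            continue
        addr_range, perms, _offset, _dev, inode = fields[:5]
        path = fields[5].strip() if len(fields) > 5 else ""
        if "x" not in perms:
            continue
        # File-backed code and named kernel regions such as [vdso] are expected.
        if inode != "0" or path:
            continue
        start, end = (int(part, 16) for part in addr_range.split("-"))
        regions.append((start, end, perms))
    return regions


# Made-up sample in /proc/<pid>/maps format.
SAMPLE_MAPS = """\
00400000-00452000 r-xp 00000000 08:02 173521 /usr/bin/dbus-daemon
7f3c1a000000-7f3c1a021000 rwxp 00000000 00:00 0
7ffd4b5f2000-7ffd4b5f4000 r-xp 00000000 00:00 0 [vdso]
"""

suspicious = anonymous_executable_regions(SAMPLE_MAPS)
print(len(suspicious))  # 1
```

To inspect a live process, the same function could be given the contents of `/proc/<pid>/maps`, subject to permissions. Regions found this way are only candidates: JIT-compiling runtimes legitimately create anonymous executable memory, so a real detector has to go on to examine the contents, as the post describes.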

Fileless attack detection preview capabilities

For the preview program, fileless attack detection scans the memory of all processes for shellcode, malicious injected ELF executables, and well-known toolkits. Toolkits include crypto mining software such as the one mentioned above.

At the start of the preview program, we will emit alerts for well-known toolkits:

The alerts contain information to assist with triaging and correlation activities, which include process metadata:

Alert details also include the toolkit name, capabilities of the detected payload, and network endpoints.

We plan to add and refine alert capabilities over time. Additional alert types will be documented here.

Process memory scanning is non-invasive and does not affect the other processes on the system. Most scans run in less than five seconds. The privacy of your data is protected throughout this procedure as all memory analysis is performed on the host itself. Scan results contain only security-relevant metadata and details of suspicious payloads.

Onboarding details

We will be onboarding customer machines in phases to ensure the smoothest possible customer experience. Deployment begins on July 28th and completes by September 3rd. This capability is automatically deployed to your Linux machines as an extension to the Log Analytics Agent for Linux (also known as the OMS Agent). This agent supports the Linux OS distributions described in this document. Azure VMs and non-Azure machines must be enrolled in Standard or Standard Trial pricing tier to benefit from this detection capability.

To learn more about Azure Security Center, visit the Azure Security Center page.
