by Scott Muniz | Jun 23, 2020 | Uncategorized

This article is contributed. See the original author and article here.

Chris and I grabbed time with Jeff Teper (CVP SharePoint, OneDrive and Teams – Microsoft) to get his take on “how things are going” with him and his family, Microsoft Teams and the Microsoft 365 service, and his recent connections with the tech community.

We asked Jeff about the recent response to the COVID-19 crisis, progress on #ProjectCortex, the coming Microsoft Lists, service performance and more. Plus, Jeff reveals a big education metric number about a new feature in Teams; I’ll raise a hand to hear that one!

OK, I know, fewer words, more Jeff! On to the show…

Subscribe to The Intrazone podcast! And listen to episode #51 now + show links and more below.

![Jeff Teper.jpg Jeff Teper (CVP/SharePoint, OneDrive, and Teams – Microsoft) [guest].](https://gxcuf89792.i.lithium.com/t5/image/serverpage/image-id/200361i2F8FF629F329D706/image-size/medium?v=1.0&px=400) Jeff Teper (CVP/SharePoint, OneDrive, and Teams – Microsoft) [guest].


Link to articles mentioned in the show:

- Hosts and guests

- Articles and sites

- Events

Subscribe today!

Listen to the show! If you like what you hear, we’d love for you to Subscribe, Rate and Review it on iTunes or wherever you get your podcasts.

Be sure to visit our show page to hear all the episodes, access the show notes, and get bonus content. And stay connected to the SharePoint community blog where we’ll share more information per episode, guest insights, and take any questions from our listeners and SharePoint users (TheIntrazone@microsoft.com). We, too, welcome your ideas for future episode topics and segments. Keep the discussion going in comments below; we’re here to listen and grow.

Subscribe to The Intrazone podcast! And listen to episode #51 now.

Thanks for listening!

The SharePoint team wants you to unleash your creativity and productivity. And we will do this, together, one Captain Jeff episode at a time.

The Intrazone links

![Mark Kashman_0-1585068611977.jpeg Left to right [The Intrazone co-hosts]: Chris McNulty, senior product manager (SharePoint, #ProjectCortex – Microsoft) and Mark Kashman, senior product manager (SharePoint – Microsoft).](https://www.drware.com/wp-content/uploads/2020/06/large-147) Left to right [The Intrazone co-hosts]: Chris McNulty, senior product manager (SharePoint, #ProjectCortex – Microsoft) and Mark Kashman, senior product manager (SharePoint – Microsoft).


The Intrazone, a show about the Microsoft 365 intelligent intranet (aka.ms/TheIntrazone)


by Scott Muniz | Jun 23, 2020 | Uncategorized

This article is contributed. See the original author and article here.

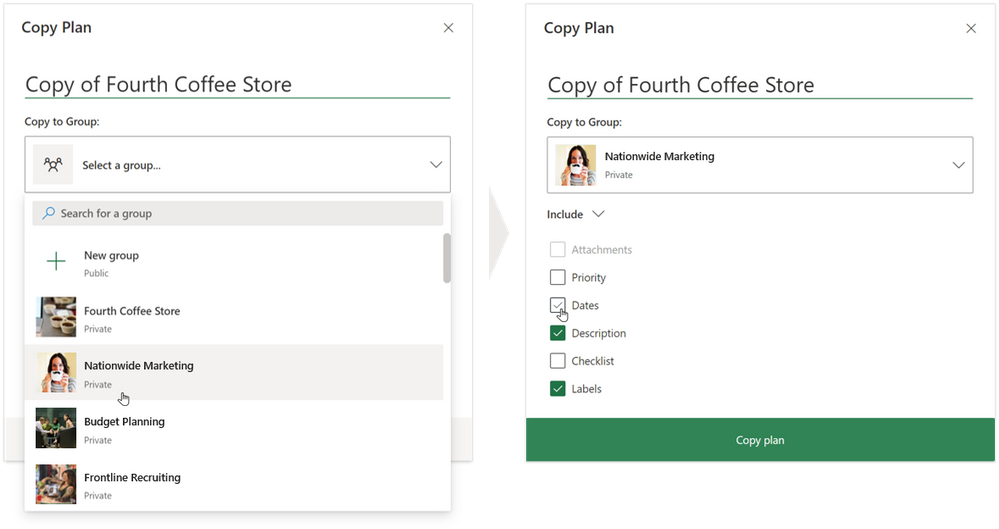

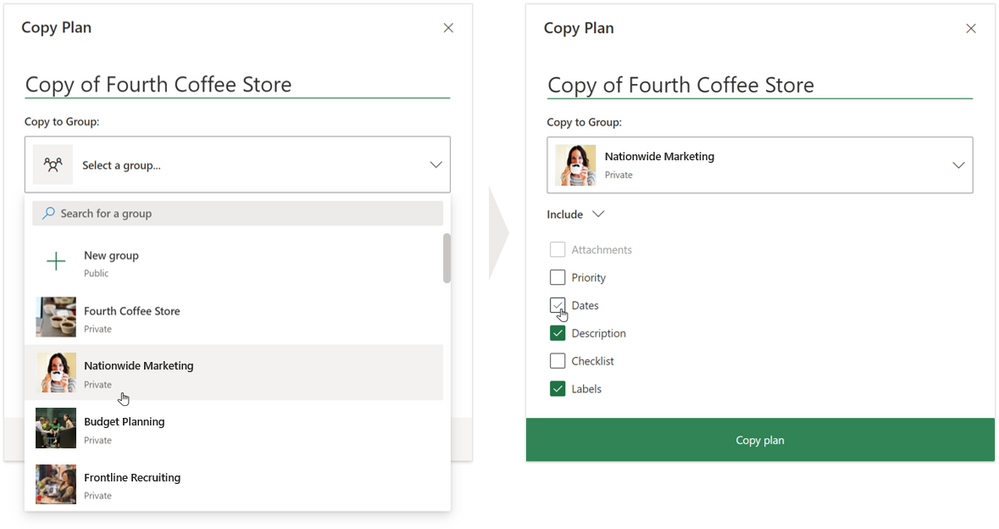

Coming hot off our last Planner roundup at the end of May, we’re excited to announce two new features that rolled out in June: copy plan to an existing group or team and task filtering for iOS. Both have been frequent asks on Planner UserVoice, and both are now generally available to all Planner users.

Copy plan to an existing group or team

Office 365 Groups and Microsoft Teams are fantastic hubs for collaborating with your team. But in the words of one UserVoice commenter, “Group sprawl” is real. The more you use Groups and Teams, the more groups and teams you need to manage. Before today, copying a Planner plan created a new group, adding to that sprawl. But now, you can copy your plans to an existing group or team to streamline your workflow. This release also lets you choose which parts of your plan to copy over.

(Quick side note: Office 365 Groups is being renamed to Microsoft 365 Groups. You can read about this change, which is happening over time, here.)

To copy a plan, start in the Planner hub and select the ellipsis “…” in a plan box. Then, select Copy plan. You can also copy a plan from within the plan itself by selecting the ellipsis near the top of the window. This brings you to a redesigned pop-out for choosing the destination group or team and the elements of the plan you’d like to duplicate: priority, dates, description, checklist, and labels. You can only copy over attachments to the group or team in which the current plan exists. There’s still the option to copy a plan to a new group, too.

Your copied plan will automatically appear under Recent plans and be associated with the group or team you selected.

One known issue with this feature is that users who don’t have permissions to create groups can’t copy plans, even if they are just copying them to an existing group. We’ve since resolved the issue internally and will be rolling out the fix soon.

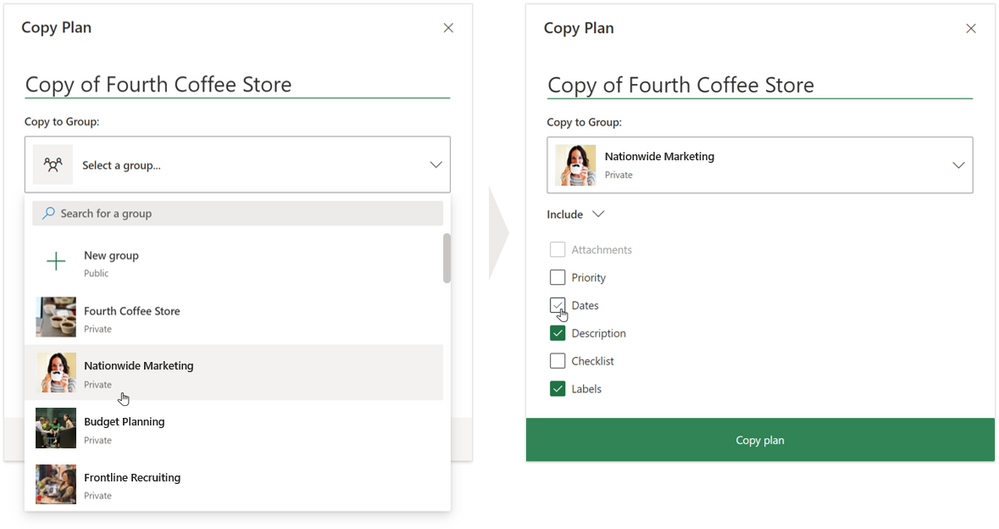

Task filtering for iOS

Filtering in the Planner web experience makes it easy to quickly find tasks using keywords or premade filters, like Priority and Assignment. To bring greater parity to the mobile experience, we’ve added keyword filtering for task titles and assignees to the Planner app on iOS. Using the new keyword filter bar (i.e., the magnifying glass icon at the top of the Board view), you can surface relevant tasks from the Board view and My Tasks view.

We developed both features in response to your feedback on UserVoice, so please continue to drop us suggestions for improving your Planner experience. As always, keep checking our Tech Community for the latest Planner news.

by Scott Muniz | Jun 23, 2020 | Uncategorized

This article is contributed. See the original author and article here.

I’ve had entire projects and my career pivot around who I’ve bumped into at the office at any given time. Whether it was the time a thoughtful co-worker shared why they were leaving the company or the time an Account Executive revealed a resource who could solve my customer’s exact problem, chance 1:1 encounters play a big part in our success.

With so many of us working from home, these chance encounters have been relegated to the small talk that happens in the first few minutes of our virtual meetings. This idle chit-chat does little to provide what those ad hoc meetings did:

- An outsider’s perspective into our challenges

- Empathy and emotional well-being

Of course, not every encounter was a panacea. There were occasions when it was a waste of time, but on the whole, I’d wager that I got more benefit out of them in the long run.

This scenario became top of mind recently when I heard a Development Manager reflect on her staff lamenting the loss of these interactions. The team was looking into how to safely reopen their office as a result, perhaps in shifts or via some other means.

As an Industry Executive in Capital Markets at Microsoft and someone who doesn’t code, I found it daunting at first to take this on. I thought about this challenge and how I could solve it. First, I outlined the basic requirements:

- The tool had to facilitate both random meetings and connecting across common interests

- People had to opt in to the service since not everyone wants to meet

- The scheduling of the meeting needed to be on the recipient’s terms (meaning they got to pick the time)

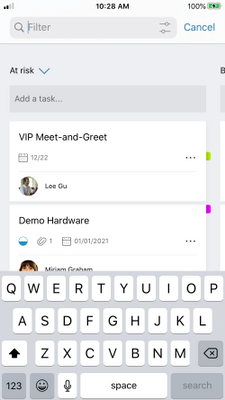

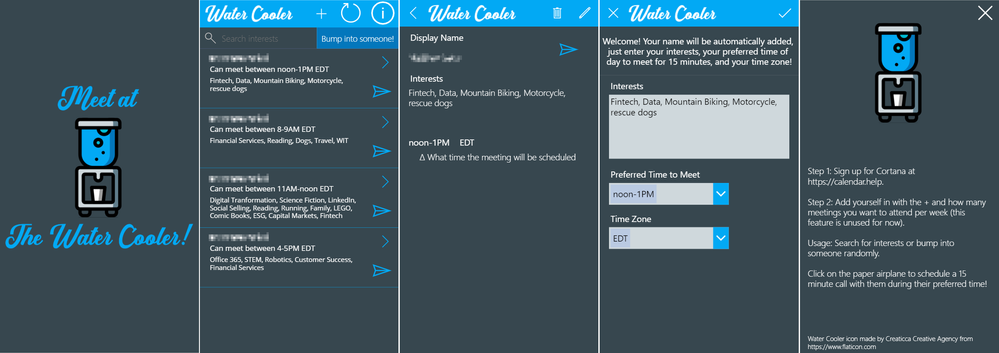

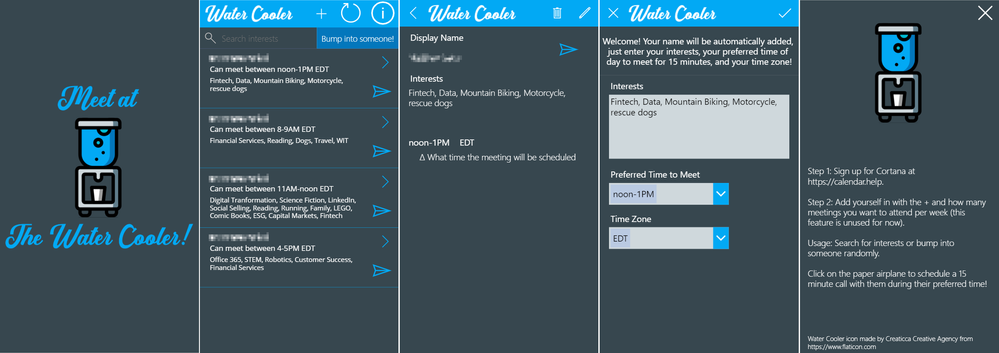

Next, I dusted off my low-code/no-code skills and decided on Microsoft 365 and the Power Platform to solve the problem. I leveraged a simple SharePoint list with 4 columns and a PowerApp with 5 basic screens to meet the requirements, starting with the PowerApp. I named it “Water Cooler.”

The app starts with a Splash Screen on a timer. From there, employees enter their information, view a list of potential co-workers to meet with, and schedule the meeting. The functionality seemed pretty basic, but powerful.

Screens of the Water Cooler App


The next challenge was to determine how to schedule the meetings. Since I wanted the recipient to dictate the time window they could meet in, I needed an automated mechanism to schedule the meetings based on that variable. Microsoft has an AI assistant that does this exact thing – Cortana.

If you aren’t familiar with Cortana’s scheduling capabilities, I recommend checking out a previous article I wrote about her over on LinkedIn.

The app captured the preferred time and time zone for the recipient to meet, which are fields Cortana can understand. I created a Power Automate button that looked up the email address for the recipient and then executed a pre-scripted email, cc’ing Cortana to schedule during the preferred time.

At this point, the app worked well, but I still didn’t provide a way for people to randomly connect. I felt surfacing a random co-worker was a key component to recreate since it reflected the randomness of bumping into someone at the office. For this, I had to stretch my low-code/no-code expertise.

To do this, I cheated. I took the second screen (above) and shuffled the list. When someone clicked the “Bump into someone!” button, the list would shuffle and an image would appear to block the remaining results – instant random person!
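The shuffle trick is easy to picture outside Power Apps as well. The snippet below is a minimal Python illustration, not the app's actual formula: the `Coworker` type, the `bump_into_someone` helper, and the sample names are all hypothetical. It shuffles the opted-in list and surfaces only the first entry, which mirrors hiding the remaining results behind an image.

```python
import random
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Coworker:
    """One opted-in row of the (hypothetical) sign-up list."""
    name: str
    interest: str


def bump_into_someone(people: List[Coworker], me: str,
                      rng: Optional[random.Random] = None) -> Optional[Coworker]:
    """Shuffle everyone except the requester and return the first result."""
    if rng is None:
        rng = random.Random()
    candidates = [p for p in people if p.name != me]
    if not candidates:
        return None  # nobody else has opted in yet
    rng.shuffle(candidates)  # same idea as shuffling the list on screen
    return candidates[0]     # the rest of the list stays "hidden"


if __name__ == "__main__":
    roster = [
        Coworker("Ana", "hiking"),
        Coworker("Ben", "chess"),
        Coworker("Cy", "jazz"),
    ]
    print(bump_into_someone(roster, me="Ana"))
```

Passing in a seeded `random.Random` makes the pick repeatable for testing, while the default gives a fresh shuffle on every click.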

The app makes for a compelling use case to solve a challenge we’re all facing right now, facilitating random connections to gain new perspectives. For technical notes on what is required to get the app up and running or to get the PowerApp and Power Automate code for yourself, check out the repo over on GitHub. Don’t forget that because it’s a PowerApp, it can be deployed right inside Microsoft Teams!

If you would like to showcase this app or see it in action, you can check out the video below!

Matthew Sekol | Industry Executive, Capital Markets

LinkedIn | Twitter

by Scott Muniz | Jun 23, 2020 | Uncategorized

This article is contributed. See the original author and article here.

Hello folks! If you have made it this far, know I think you’re amazing and one of a kind! If you are just tuning in now and would like to see the full blog series daisy chained together, take note of the URLs below, so you can take in the full spectrum of what it looks like to fully automate a replica domain controller build within Azure:

Introduction to Building a Replica Domain Controller ARM Template

Pre-Requisites to Build a Replica Domain Controller ARM Template

Design Considerations of Building a Replica

Digging into the Replica Domain Controller ARM Template Code

Desired State Configuration Extension and the Replica Domain Controller ARM Template

GitHub Repo – Sample Code

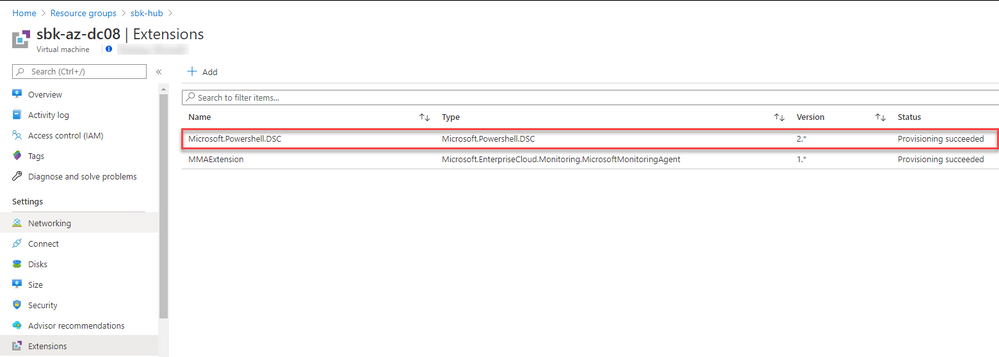

This last post covers the actual Desired State Configuration code and how to troubleshoot the extension. Since troubleshooting the extension is quick, let us step through that task first.

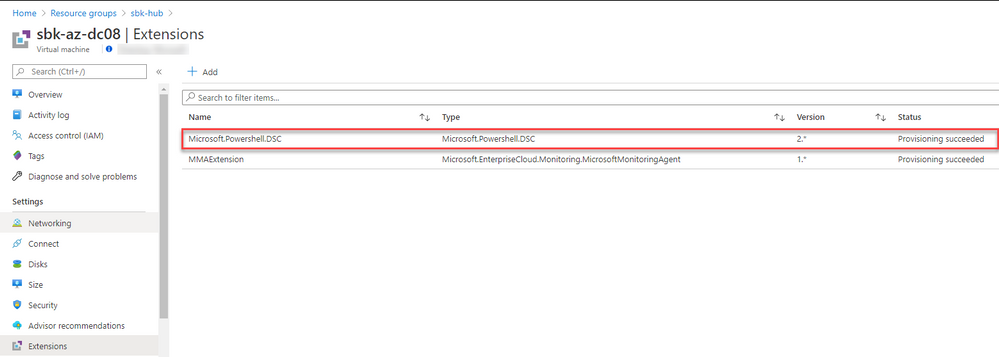

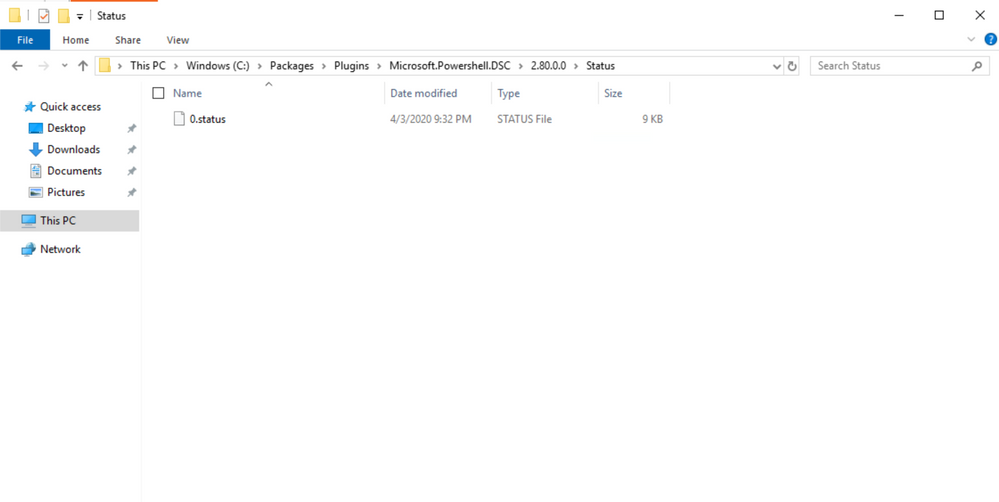

When you head over to the extensions setting underneath your VM blade, you always want to see something like this:

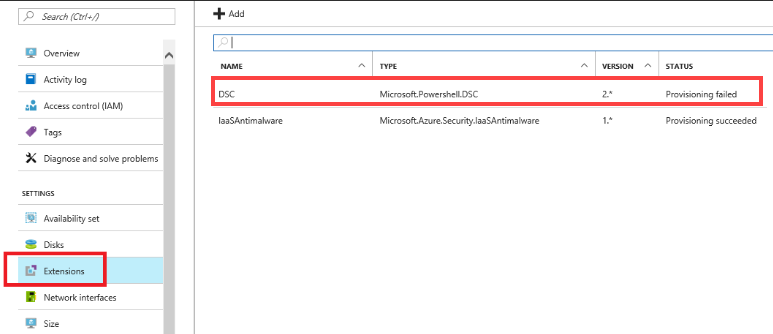

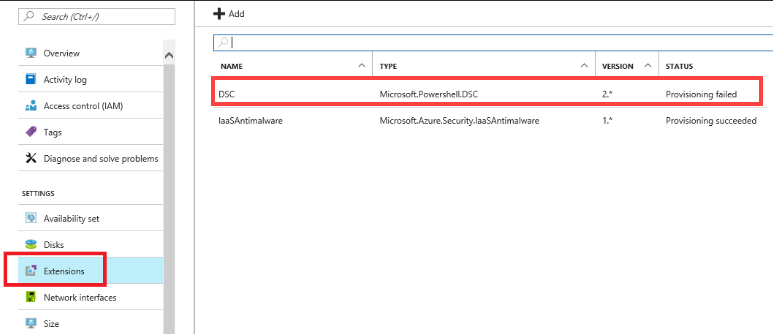

It might seem obvious, but “Provisioning succeeded” means the script ran and the desired state configuration extension has been applied to the VM object. Since some of you may be newer to DSC, the first handful of times this operation may not work properly. What happens if you see something like this instead?

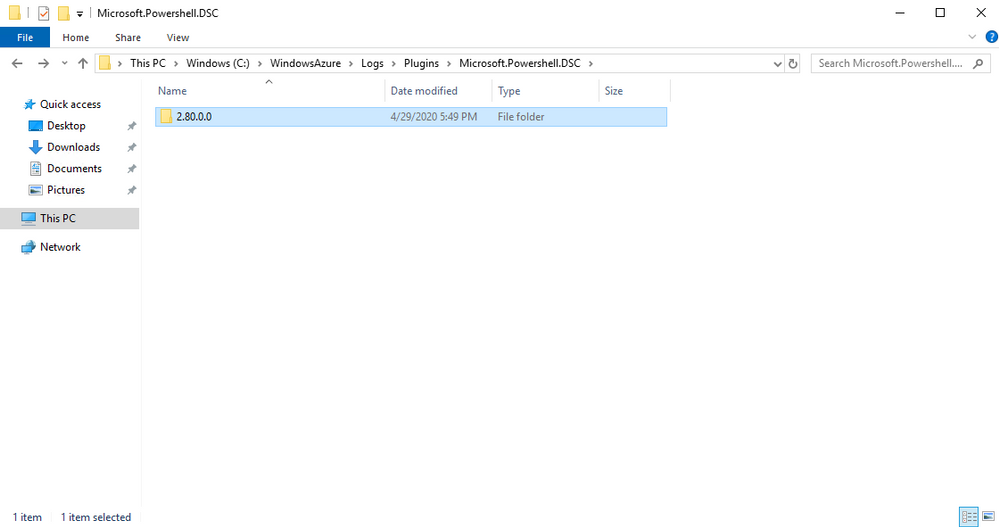

Unfortunately, that means you must dig into the VM itself. Since there is no pull server, all troubleshooting logs show up on the deployed VM. So long as the VM is up, it provisioned successfully, and is accessible, the log files will generate in the following location:

`C:\WindowsAzure\Logs\Plugins\Microsoft.Powershell.DSC`

What you will find here are the extension output logs. When you log into the VM in Azure, you should see a folder like this:

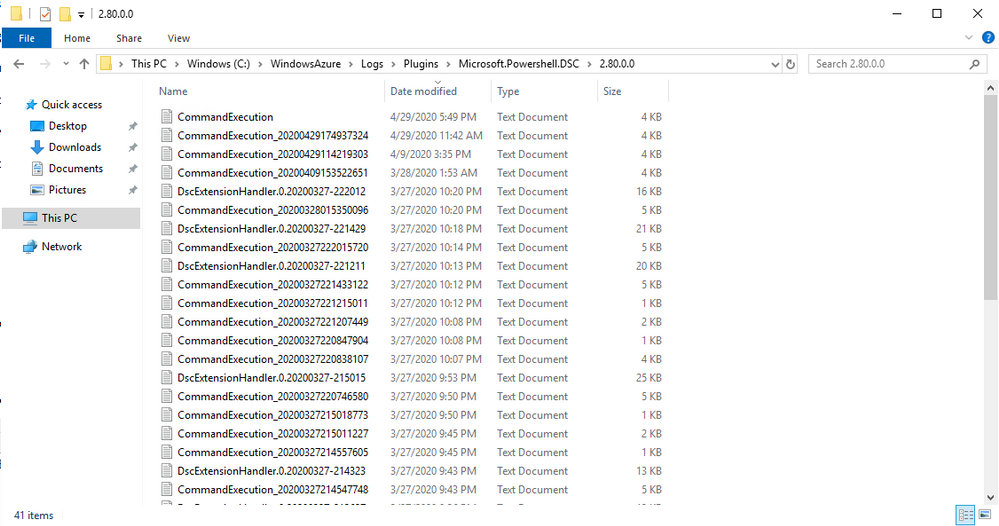

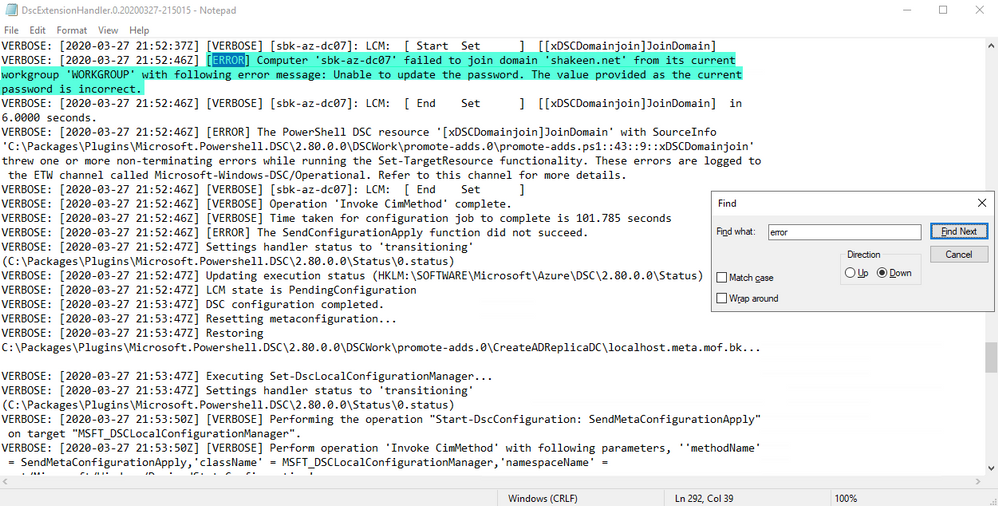

Drilling into that folder, you should see a series of text files with number GUIDs generated at the end. Ensure you see “CommandExecution” and “DscExtensionHandler” files:

Open the DscExtensionHandler file and filter on error. You should hopefully see some helpful clues show up, seeing as this extension throws verbose logging into these files. In my example below, I discovered that I had either mistyped the password or did not formulate the password correctly in the way DSC requires:
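If you would rather not filter by hand, the same "filter on error" step can be scripted. The following is a rough Python sketch under a few assumptions: the helper names `find_error_lines` and `scan_log_folder` are made up for illustration, the file name pattern simply matches the "DscExtensionHandler" prefix mentioned above, and the logs are assumed to be readable as UTF-8 text (undecodable bytes are replaced rather than raising).

```python
from pathlib import Path
from typing import Dict, Iterable, List, Tuple


def find_error_lines(lines: Iterable[str],
                     keyword: str = "error") -> List[Tuple[int, str]]:
    """Return (line_number, text) for each line containing the keyword,
    compared case-insensitively."""
    needle = keyword.lower()
    return [
        (number, line.rstrip())
        for number, line in enumerate(lines, start=1)
        if needle in line.lower()
    ]


def scan_log_folder(folder: Path,
                    pattern: str = "DscExtensionHandler*") -> Dict[str, List[Tuple[int, str]]]:
    """Search every matching log file under the folder for error lines."""
    hits: Dict[str, List[Tuple[int, str]]] = {}
    for log_file in sorted(folder.rglob(pattern)):
        if not log_file.is_file():
            continue
        # Assumption: the log is plain text; bad bytes are replaced, not fatal.
        with log_file.open(encoding="utf-8", errors="replace") as handle:
            matches = find_error_lines(handle)
        if matches:
            hits[log_file.name] = matches
    return hits


if __name__ == "__main__":
    log_root = Path(r"C:\WindowsAzure\Logs\Plugins\Microsoft.Powershell.DSC")
    if log_root.is_dir():
        for name, matches in scan_log_folder(log_root).items():
            for number, text in matches:
                print(f"{name}:{number}: {text}")
```

Run it on the VM itself, since that is where the extension writes its logs.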

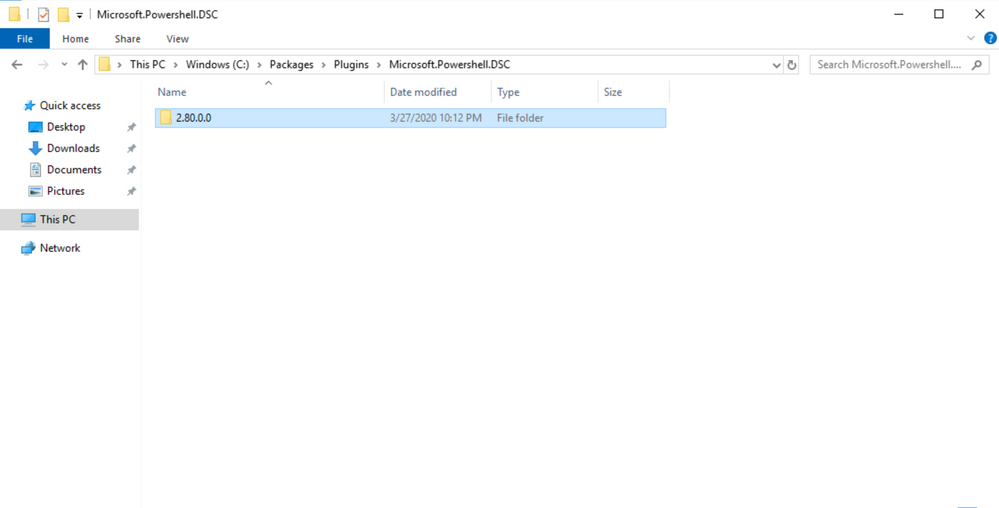

There is a specific workflow the extension follows for any Azure VM. The extension package is downloaded and deployed to this location on the Azure VM itself:

`C:\Packages\Plugins\{Extension_Name}\{Extension_Version}`

The extension status file contains the sub status and the status success/error codes along with the detailed error and description for each extension run:

`C:\Packages\Plugins\{Extension_Name}\{Extension_Version}\Status\{0}.Status` -> {0} being the sequence number
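To make the placeholders concrete, here is a small Python sketch that fills in the status file path for a given run. The `status_file` helper is hypothetical, and the extension name and version in the usage example are illustrative values only; check the folder on your own VM for the real ones.

```python
from pathlib import PureWindowsPath

# Root folder where extension packages are deployed on the VM.
PLUGIN_ROOT = PureWindowsPath(r"C:\Packages\Plugins")


def status_file(extension_name: str, extension_version: str,
                sequence_number: int) -> PureWindowsPath:
    """Build the status file path for one extension run."""
    if sequence_number < 0:
        raise ValueError("sequence number must be zero or greater")
    return (PLUGIN_ROOT / extension_name / extension_version
            / "Status" / f"{sequence_number}.status")


if __name__ == "__main__":
    # Illustrative name and version; substitute what you see on the VM.
    print(status_file("Microsoft.Powershell.DSC", "2.80.0.0", 0))
```

`PureWindowsPath` is used so the path is assembled with Windows separators even if you run the snippet on another operating system.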

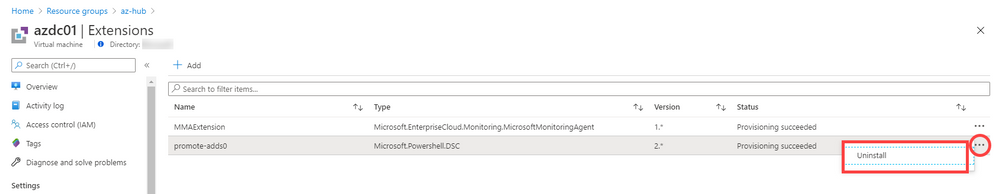

Now, in my screen shots, you will not see any “1.status” folders because one of the troubleshooting steps within the DSC Extension deployment is to remove any failed deployments. Remember this screen shot?

If the DSC extension deployment fails, you need to uninstall the extension using something like PowerShell, the Azure CLI, or in the portal by examining the screen shot below:

Now that the troubleshooting and caveats for re-running a DSC extension have been covered, let us move into the DSC code itself.

Many sysadmins have come to me over the years and asked how to manage systems at scale. With the cloud, many sysadmins are looking at ways to build and manage infrastructure without manual intervention. Desired State Configuration is one of the tools I talk about, and I personally feel it can be one of the unsung heroes of any Windows server environment. In a regular configuration management flow, DSC comprises a data file and a configuration file that are compiled into a text file following the Managed Object Format (MOF). The MOF file is then parsed and executed on the target server using DSC features that know how to configure the system. The Local Configuration Manager (LCM) is the engine of DSC. The LCM runs on every target node and is responsible for parsing and enacting configurations that are sent to the node. Fun fact: every Azure VM deployed within your subscriptions has an LCM, so DSC runs naturally, whether invoked from a pull server or through a DSC extension.

The Azure DSC extension uses the Azure VM Agent framework to configure and report on DSC configurations running on Azure VMs. When the DSC extension is processed the first time, it will install the right version of Windows Management Framework (WMF), unless the server OS is Windows Server 2016 or newer (Windows Server 2016 and newer OSes have the latest version of PowerShell installed). If WMF winds up installed because you are using an older Windows OS, it will require a reboot. When you use the DSC extension, the DSC extension is what creates the MOF file, so all you need to supply is the PowerShell script.

Seems pretty darn awesome, right? Right.

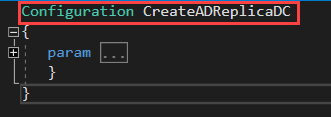

So, what does the actual DSC script look like in my GitHub repo? Let’s go through it section by section.

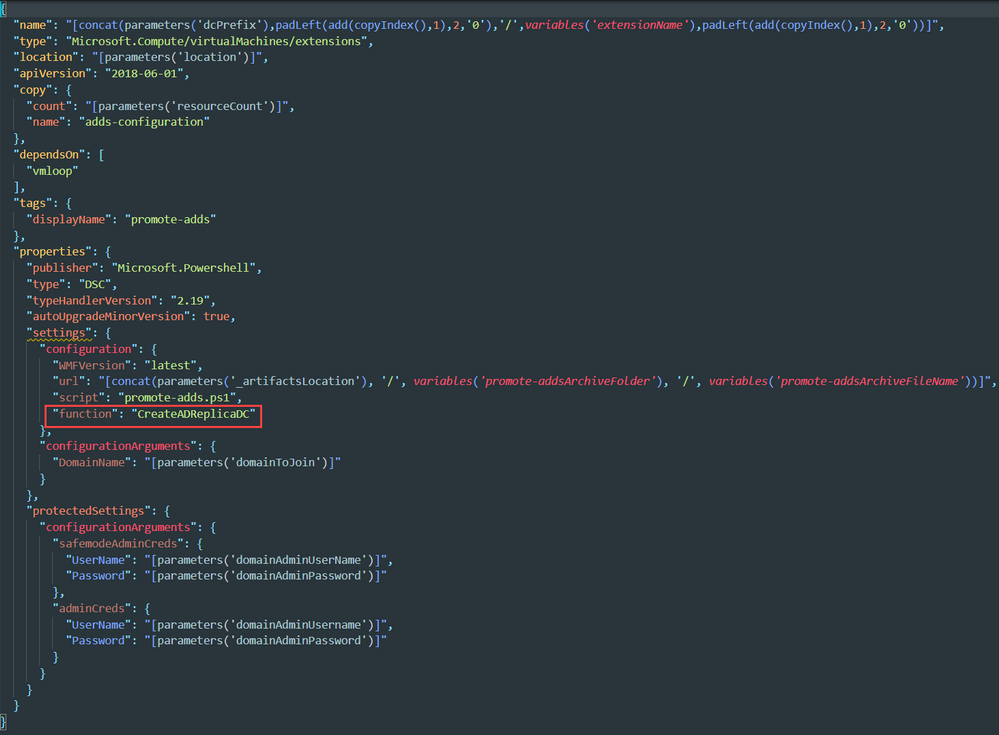

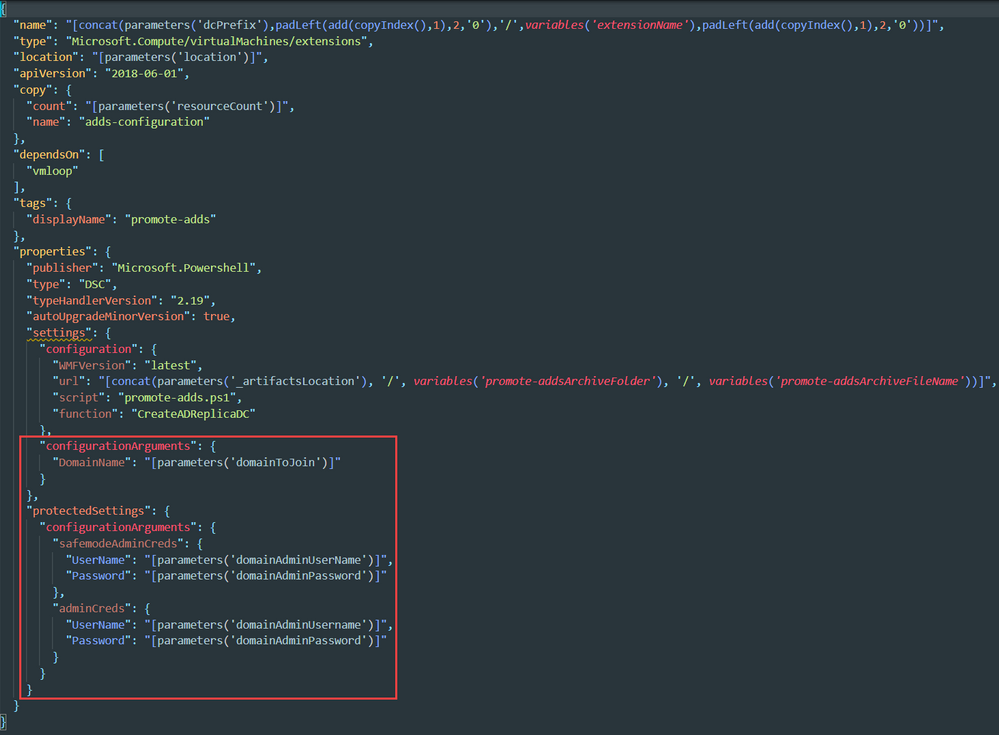

First, the configuration itself is given a title. This is called upon within the ARM Template.

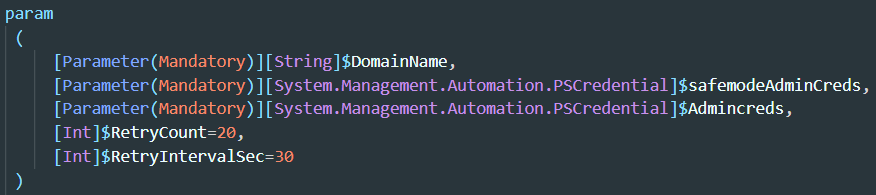

Let us expand the parameters next. These are declared parameters within your parameters JSON file from the ARM Template deployment.

Once the parameters are effectively declared, the DSC script imports some DSC modules:

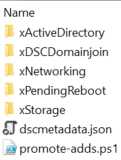

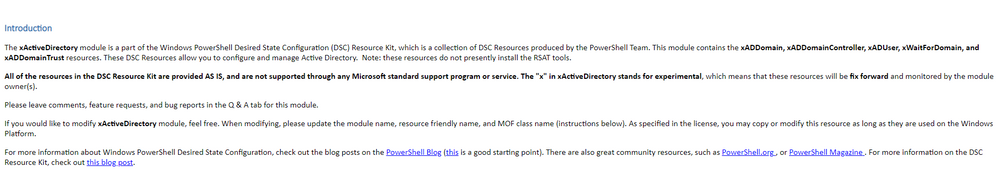

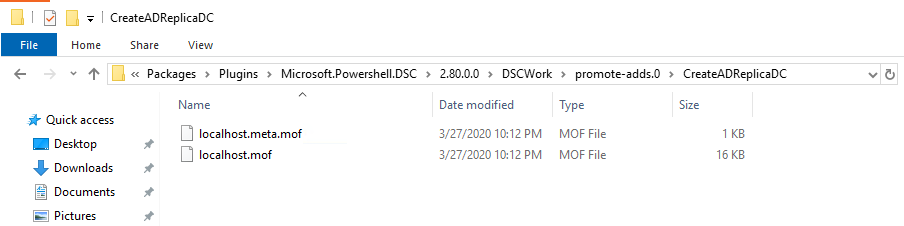

Within the template, the DSC ZIP file I reference (found here) contains the right manifests + DSC modules. If you download and open the ZIP file, you will see this in the folder:

xActiveDirectory, xDSCDomainjoin, xNetworking, xPendingReboot, and xStorage are all experimental DSC modules and can be downloaded here or you can search for these modules using your search engine of choice and download accordingly. The larger zip from the script center packages up all Microsoft documentation for each experimental module. Personally, I have found that helpful when trying to figure out how to make use of the modules to build a domain controller.

Packaging up the DSC modules and then importing them in the script you pass to the DSC extension is how the DSC extension can align the configuration on the target nodes. The DSC extension takes the ZIP file and extracts the modules to the following folder:

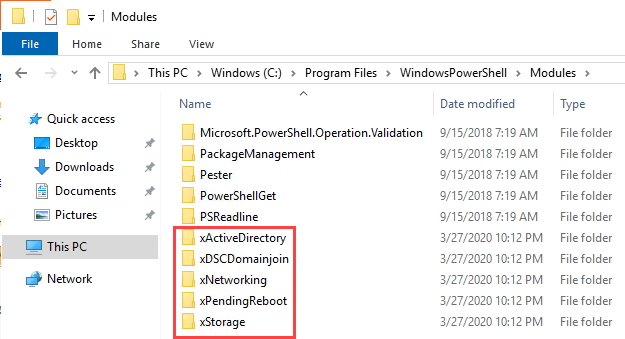

Additionally, the PowerShell script gets plugged into the following folder:

And if you double click into “CreateADReplicaDC,” you will see the MOF files:

By this point, it should be hopefully coming full circle in terms of how DSC and the DSC extension work to configure VMs during deployment using the Azure Resource Manager (ARM) model.

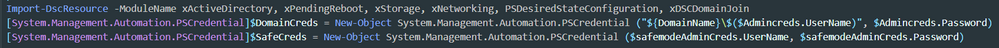

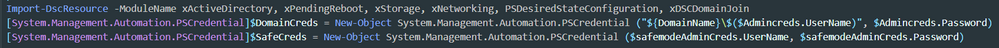

After the modules are imported, we need to lean on the PSCredential class in the System.Management.Automation namespace. This class offers a centralized way to manage usernames, passwords, and credentials. I incorrectly passed credentials in as strings (I know, I know – I should have been thinking about credentials being secure objects and placed my dev hat on, but I digress). I leaned on my pal Matt Canty once more and he helped me formulate the right credential object by using these two lines of code in the PowerShell script:

After all parameters and credential objects are declared at the beginning of the PowerShell script, the PowerShell script follows a specific flow. I will outline those steps next:

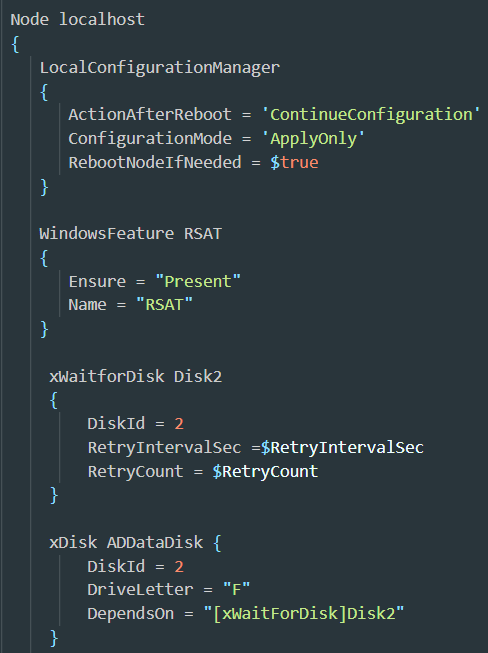

If we read this, the script declaratively states that on the localhost, the Local Configuration Manager (LCM) first gets called. The script then indicates that the configuration should continue after any reboots, the configuration mode should apply the configuration, and the server will reboot if needed. The script then installs RSAT, which are good tools to have on any Windows server. From there, the script calls upon the xDisk experimental module to add the non-cached drive for the SYSVOL folder (you may recall this was a design consideration in my 3rd blog post). The steps taken to initialize the extra drive and format happen sequentially after previous tasks run on the target node.

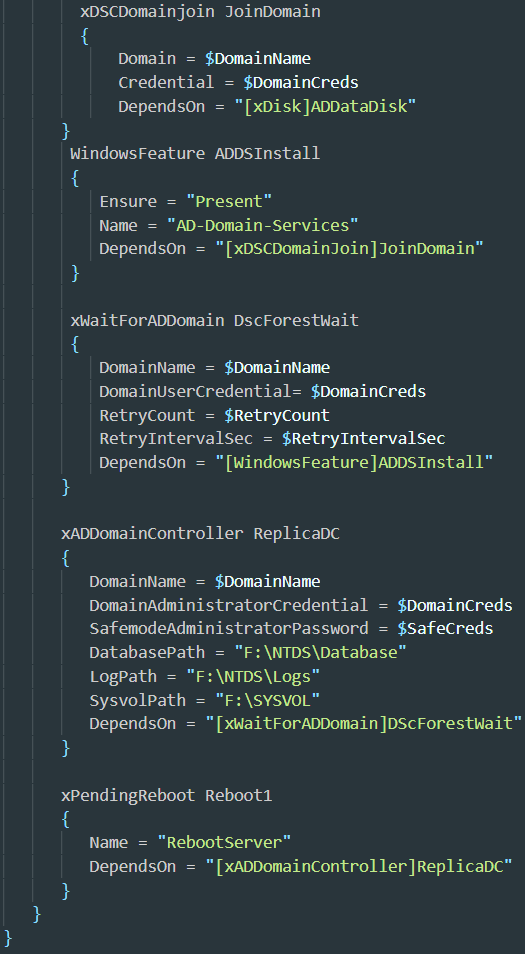

After the disk has been initialized and is reporting as ready to the LCM, the script moves on into joining the VM to the domain, adding the Active Directory Domain Services role, and building out the replica domain controller:

As you can see, DSC code is not too hard to read through and understand each step taken before deployment. Each step depends on the previous step, so you declare some parameters you want met related to the Log Path, SYSVOL Path, and Database Path, and let the LCM do the rest after the script is parsed and applied to the node.
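The "each step depends on the previous step" idea is worth seeing in miniature. In DSC, resources declare this with the `DependsOn` property; the Python sketch below only models the ordering logic and is not the script from the repo. The resource names in `STEPS` are hypothetical labels loosely following the steps described above, and the real configuration may wire its dependencies differently.

```python
from graphlib import TopologicalSorter  # Python 3.9+
from typing import Dict, List


def execution_order(depends_on: Dict[str, List[str]]) -> List[str]:
    """Return the resources in an order where every dependency runs first."""
    return list(TopologicalSorter(depends_on).static_order())


# Hypothetical resource names; each maps to the resources it depends on.
STEPS: Dict[str, List[str]] = {
    "LocalConfigurationManager": [],
    "RSATTools": ["LocalConfigurationManager"],
    "DataDisk": ["RSATTools"],
    "DomainJoin": ["DataDisk"],
    "ADDSRole": ["DomainJoin"],
    "ReplicaDomainController": ["ADDSRole"],
}


if __name__ == "__main__":
    for position, resource in enumerate(execution_order(STEPS), start=1):
        print(position, resource)
```

Because the chain is strictly linear here, only one valid order exists. With branching dependencies, several orders can be valid, and the sorter picks one of them.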

In closing, if you take on this code and run into problems, please do not hesitate to reach out. The steps involved to break this down so everyone understands took me many posts to unravel. The beauty here is you can reach out if you get stuck, but getting deeper with ARM Templates and understanding DSC scripting is going to take you from regular sysadmin status to HERO or HEROINE status in your current role. 😊

by Scott Muniz | Jun 22, 2020 | Uncategorized

This article is contributed. See the original author and article here.

Background:

I thought it would be helpful to put up an article on migrating Microsoft BizTalk Server integrations to Microsoft Azure using Logic Apps and other Azure resources. I have done a bit of due diligence to figure out which Azure resources could replace, or are suitable for migrating, BizTalk Server integrations. Of course, this is not an apples-to-apples comparison, but these are the best possible resources to replace current BizTalk Server components. There are also some limitations on design patterns and runtime execution; however, there are workarounds for the various challenges encountered while migrating.

The resource mapping below pairs BizTalk Server components with the corresponding Microsoft Azure resources.

Resource comparison:

| S.No | BizTalk Resource | Azure Resource | Comments |
| --- | --- | --- | --- |
| 1 | Orchestration | Logic Apps | Workflow engine similar to Orchestration. |
| 2 | BizTalk artefacts (schemas, maps (XSLT 1.0/2.0/3.0)) and EDI artefacts | Integration Account | Azure Integration Account is the place to store artefacts such as schemas, maps, agreements, partners, and batch configurations used in BizTalk integrations. EDI: As of now, Integration Account supports the X12, EDIFACT, AS2, and SWIFT protocols (SWIFT is in private preview). |
| 3 | .NET class libraries | Azure Functions / API Apps / Web Roles | Azure Functions: to replace the simple to medium-complexity functionality of .NET class libraries. For complex scenarios, you can look at Durable Functions. API Apps: for complex functionality, we could create API Apps and use them in Logic Apps or any other resource. |
| 4 | Host BizTalk schema/orchestration as a service | HTTP trigger / API Management | HTTP trigger: we can use an HTTP trigger to expose a Logic App endpoint to the external world. API Management: you can customize the Logic App endpoint URL with various security settings via API Management. |
| 5 | Adapters | Triggers | We have various connectors to connect to multiple endpoints for both Azure and on-premises resources (through the on-premises data gateway, etc.). |
| 6 | Decode/Encode/Enrich | Schema validation, Decode X12, AS2, flat file decode/encode, and similar connectors | Various actions are available to perform the specific tasks that BizTalk pipeline components handle. |
| 7 | Custom components | Logic App custom connector | Logic Apps has various connectors to talk to the respective LOB systems. However, if you need a component to implement your own custom code, use a Logic App custom connector. Supported: SOAP and REST, using a WSDL or OpenAPI definition respectively. |
| 8 | Config store (SSO, etc.) | Key Vault | Key Vault: the place to store secure configuration and certificates. These can be retrieved in a Logic App using the Key Vault connector or the REST API. |
| 9 | BRE | Azure Functions / Liquid templates (BRE) | Azure Functions: we could store the configuration in Azure Functions, though you might need to implement encryption/decryption for secured information. Liquid templates: this is not an exact comparison, but you could use them to evaluate conditions and use the output as a deciding factor in your design. |
| 10 | On-premises SQL database | Azure SQL Database | Could be used for creating custom databases. |
| 11 | Publish-subscribe / BizTalk design patterns | Azure Service Bus / Event Grid | Azure Service Bus (message based): provides the flexibility to have custom context properties. Queues: hold messages for a longer time and can be used to implement BizTalk patterns like Sequential Convoy. Topics: create a copy of each message per subscriber and support a filter mechanism, as in BizTalk. Event Grid (event based): provides a pub-sub architecture similar to BizTalk. |
| 12 | Enterprise Library logging | Logic Apps / Log Analytics / Storage Account / Event Hub | Logic Apps: by default, we can see the run history, which gives details about trigger or action inputs, outputs, and any errors. Log Analytics: if you need the logs or transactions stored somewhere for later analysis or extensive auditing, Log Analytics is the better place. |
| 13 | BAM | Log Analytics (B2B and Logic App API management) | Log Analytics is the place where you can log all metrics, transaction details, and custom properties that can be used to monitor your business. Log Analytics also supports tools for Logic Apps that show the status of transactions for each Logic App run graphically, which is friendly for business users who need to monitor without much experience in development or queries. |
| 14 | IIS | App Services and Azure API Management | We could expose services hosted in App Services or classic services through API Management, which acts as a layer for implementing security measures such as client certificate validation and mock responses, and for transforming requests and controlling throughput through policies. |
| 15 | Automate deployment | ARM templates with PowerShell script / DevOps / REST API with custom components / SDK to deploy Logic App code | You could use any of the options mentioned to deploy Logic Apps. |
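The Topics entry in the table above (one copy of each message per subscriber, with optional filters) can be pictured with a few lines of Python. This is an in-memory toy model to illustrate the pattern only; it does not use the Azure Service Bus SDK, and the `Topic` class, its methods, and the subscription names are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Message = Dict[str, str]
Rule = Callable[[Message], bool]


@dataclass
class Topic:
    """Toy pub-sub topic: each matching subscription gets its own copy."""
    rules: Dict[str, Rule] = field(default_factory=dict)
    inboxes: Dict[str, List[Message]] = field(default_factory=dict)

    def subscribe(self, name: str, rule: Rule = lambda message: True) -> None:
        """Register a subscription; the default rule accepts every message."""
        self.rules[name] = rule
        self.inboxes[name] = []

    def publish(self, message: Message) -> int:
        """Deliver a copy to every subscription whose rule matches.

        Returns the number of copies delivered."""
        delivered = 0
        for name, rule in self.rules.items():
            if rule(message):
                self.inboxes[name].append(dict(message))  # independent copy
                delivered += 1
        return delivered


if __name__ == "__main__":
    orders = Topic()
    orders.subscribe("all-orders")
    orders.subscribe("eu-only", lambda m: m.get("region") == "EU")
    orders.publish({"id": "1", "region": "EU"})
    orders.publish({"id": "2", "region": "US"})
    for name, inbox in orders.inboxes.items():
        print(name, [m["id"] for m in inbox])
```

The filter plays the role that subscription filters play in BizTalk: the publisher never needs to know who is listening or what they care about.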

Other On-Premise Resources:

- On-premises data gateway: connects to on-premises system resources such as FILE, SQL, Oracle, IBM MQ, and SAP.

- ISE (integration service environment): on-premises resources can be accessed from Azure virtual networks through VNet peering, ExpressRoute, and so on. We can host Logic Apps in an ISE deployed in the virtual network, with subnets enabled for access through routing tables or NSG rules. An ISE offers better limits than the shared environment, along with better performance and more control over security.

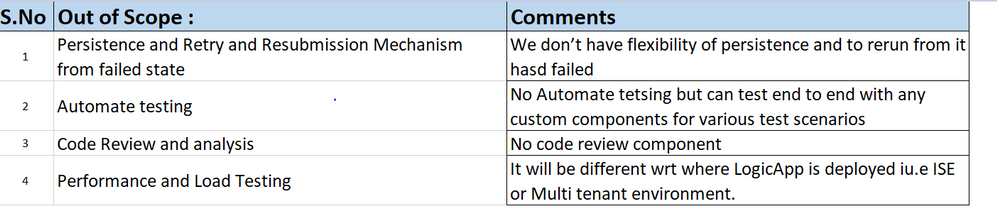

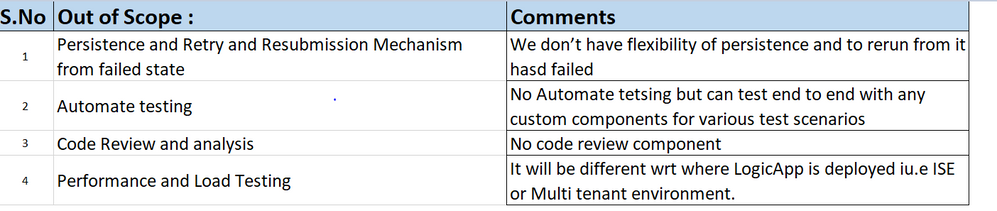

Out of Scope:

Other concepts: as iPaaS is evolving, with various new features imminent, the items below may not remain limitations, and they can be addressed with custom components.
