This article is contributed. See the original author and article here.

Hi all – Jeremy here with an interesting case where Windows Server 2016 systems in one of my customer’s enterprise environments couldn’t complete installation of the Latest Cumulative Update (LCU). As a Premier Field Engineer, it’s my responsibility to troubleshoot and diagnose issues related to Microsoft Platform technologies, and I’m often reminded to look at all factors in the environment that could influence the success or failure of ‘normal’ processes. No matter which steps we took from known KB articles, Microsoft Docs, Tech Community suggestions, or Microsoft Forums, the LCU would roll back at 99% completion upon system restart. So, when you’ve done everything Microsoft suggests to resolve a problem and the issue still occurs, Microsoft might not be the problem. Proving that theory can be difficult, and sometimes you need to eliminate all possibilities to discover a root cause. This article will take you through the troubleshooting steps that led me to the conclusion that third-party software was the culprit.

Environment Details:

- Windows Server 2016

- Operating system hardened with DoD-recommended and custom security baselines.

- Third-party AV/Firewall/Host Intrusion Prevention/DLP

- Patches deployed through System Center Configuration Manager (SCCM)

At first, I was like a “bull in a china shop” …haphazardly troubleshooting with some of the known ‘easy’ fixes when experiencing problems with updating Windows systems. Steps taken to fix:

- WSUS component reset – FAIL

- Sfc /scannow – FAIL

- Dism /online /cleanup-image /restorehealth – FAIL

- Attempt to apply the patch manually – FAIL

- Delete pending.xml and migration.xml (sure, deleting stuff always works) – FAIL

Hmmm…after each attempt I tried installing the LCU, but the rollbacks still occurred at 99% after restart. I wonder if there is something in the update package that’s not signed…or corrupt. Let’s try disabling some of the security controls:

- Disable Driver Signature Enforcement – FAIL

- Disable SmartScreen – FAIL

Then it hit me…of course!! It’s Group Policy…one of the System Admins made a change without telling anyone and it’s causing a problem with the Windows Server 2016 systems! I’ll just temporarily move the machine to an OU with no GPOs applied…aaannd FAIL.

You can begin to see a trend here with my troubleshooting attempts…

Okay, it was time for a sanity check, so I decided to “phone a friend” by reaching out to our internal community. What was the advice given? …The evidence is in the logs!! (oh, right…duh)

So, when I was done beating the system with a virtual hammer to get the installs working, I pulled out my ‘IT scalpel’, took the sound advice offered by my colleagues and began to analyze the logs (I know…should have started here first).

Logs analyzed:

- Setup Event Log

- Windows Update log

- setupAPI.dev.log

- SCCM logs

- CBS logs

Indicators

Here’s what I found:

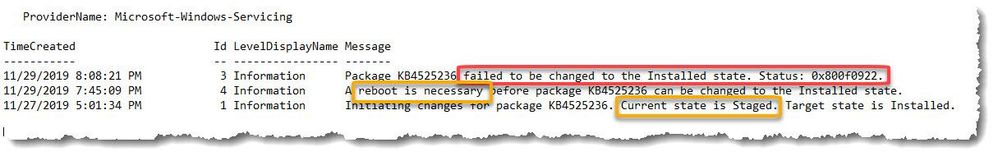

The Windows Setup Event Log confirmed that the patch was indeed staged with a target state of ‘installed’, but it then rolled back upon restart and reverted to a ‘failed’ state (Figure 1).

The Windows Update log was no help because all entries were ‘unknown’ with a system date of 1600/12/31. Because the system is disconnected from the Internet, the symbol files that Get-WindowsUpdateLog in PowerShell needs to merge and decode the new binary ‘.ETL’ Windows Update logs couldn’t be downloaded. There are steps available to create an offline manifest, referenced in this link, but I decided to press on.
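One plausible reading of that odd 1600/12/31 date: Windows timestamps (FILETIME) count 100-nanosecond ticks from 1601-01-01 UTC, so a timestamp that was never decoded and is left at zero lands on the last evening of 1600 in any time zone west of UTC. The sketch below only illustrates that arithmetic. The UTC-5 offset is an assumption chosen for illustration, and `filetime_to_local` is a hypothetical helper, not part of any Windows tooling.

```python
from datetime import datetime, timedelta, timezone

# FILETIME counts 100-nanosecond ticks since 1601-01-01 00:00:00 UTC.
FILETIME_EPOCH = datetime(1601, 1, 1, tzinfo=timezone.utc)


def filetime_to_local(ticks: int, utc_offset_hours: int) -> datetime:
    """Convert a FILETIME tick count to a datetime at a fixed UTC offset."""
    utc_time = FILETIME_EPOCH + timedelta(microseconds=ticks // 10)
    local_zone = timezone(timedelta(hours=utc_offset_hours))
    return utc_time.astimezone(local_zone)


# Assumption: the server's clock is set to UTC-5 (hypothetical).
zero_stamp = filetime_to_local(0, -5)
print(zero_stamp.isoformat())  # 1600-12-31T19:00:00-05:00
```

If that reading is right, the date is a symptom of the undecoded log rather than a clue about the update failure itself.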

SCCM logs were helpful because they showed that the system was targeted for the update, but after restart showed the update as failed/pending install.

The setupAPI.dev.log didn’t reveal any clues, so I moved on to the CBS logs located under C:\Windows\Logs\CBS.

The CBS.log (and CbsPersist_[timestamp].log) gave the most useful information.

- The Windows CBS (Component Based Servicing) log is a file that includes entries for components when they get installed or uninstalled during updates.

- The log contained ‘access denied’ errors (Figure 2), written at the time of the update rollback. These errors indicated that a component of the update was attempting to update the Windows boot files in the EFI System Partition (ESP).

- Because the Windows Server 2016 systems here are formatted with a GUID partition table (GPT), each must have a system partition (the ESP), usually on the primary hard drive, that the device boots from. This partition must be at least 100 MB and formatted as FAT32. It is managed by the operating system, as described in this link, and should not contain any other files.

Figure 2: CBS Log example.
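The search for access-denied entries in the CBS logs can be scripted so it is repeatable across servers. The following is a minimal sketch, not the method used in this case: `find_access_denied` and `scan_log_folder` are hypothetical helpers, the marker strings are common spellings of the Windows access-denied error (an assumption about what a given log contains), and the sample lines are invented to exercise the function rather than copied from a real CBS.log.

```python
import re
from pathlib import Path
from typing import Iterable, List, Tuple

# Common spellings of "access denied" in Windows logs (assumed, not exhaustive).
ACCESS_DENIED = re.compile(
    r"0x80070005|E_ACCESSDENIED|STATUS_ACCESS_DENIED|access is denied",
    re.IGNORECASE,
)


def find_access_denied(lines: Iterable[str]) -> List[Tuple[int, str]]:
    """Return (line_number, text) for every line that mentions access denied."""
    return [
        (number, line.rstrip())
        for number, line in enumerate(lines, start=1)
        if ACCESS_DENIED.search(line)
    ]


def scan_log_folder(folder: str) -> None:
    """Print hits from every .log file (CBS.log, CbsPersist_*.log) in folder."""
    for log_path in sorted(Path(folder).glob("*.log")):
        with log_path.open(encoding="utf-8", errors="replace") as handle:
            for number, text in find_access_denied(handle):
                print(f"{log_path.name}:{number}: {text}")


# Invented sample lines, only to show the function's behavior.
sample = [
    "2020-01-01 10:00:00, Info   CBS   Example informational entry",
    "2020-01-01 10:00:01, Error  CSI   Example failure, STATUS_ACCESS_DENIED",
    "2020-01-01 10:00:02, Error  CBS   Example rollback [HRESULT = 0x80070005 - E_ACCESSDENIED]",
]
hits = find_access_denied(sample)
print([number for number, _ in hits])  # [2, 3]
```

On an affected server the same function could be pointed at the CBS folder, for example `scan_log_folder(r"C:\Windows\Logs\CBS")`. Matching on the timestamps of the hits against the time of the rollback helps separate the relevant errors from unrelated noise.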

Something is blocking access to the ESP…what could it be? We’ve already checked the Windows logs and security controls with no indicators of anything blocking.

“The Lightbulb Moment”

I described the operational environment at the beginning of this article and mentioned a third-party AV/Firewall/Host Intrusion Prevention/DLP application. The application is managed by another group, so we opened a ticket to investigate the issue. Without going too far into the details, research of the third-party logs confirmed that the DLP (Data Loss Prevention) application had a rule blocking FAT32 partitions because they were deemed to be a security risk. Once we delivered documentation on the ESP and an explanation of its purpose, an exception was created.

When the exception took effect on our server, we attempted once again to apply the LCU…SUCCESS!

Conclusion

In this case I could have saved a lot of time and headaches if I had included the third-party application administrators from the start, but sometimes exercising due diligence to eliminate all possible sources is necessary to prevent ‘finger-pointing’ and accusations. Fortunately, after we provided evidence that Windows wasn’t the culprit, the problem was resolved.

Happy troubleshooting! Out for now…

