
Oxford’s AI Team 1 solution to Project 15’s Elephant Listening Project

Who are we?


Members of Microsoft’s Project 15 approached Oxford University to help analyse audio recordings from the Elephant Listening Project, with the aim of better monitoring elephant well-being and the threats elephants face. We use machine learning algorithms to help identify, classify, and respond to acoustic cues in the large audio files gathered by the Elephant Listening Project.

What are we trying to do?

Microsoft’s Project 15’s Elephant Listening Project Challenge document

The core of this project is to assist Project 15’s mission to save elephants and other precious wildlife in Africa from poaching and other threats. Project 15 has teamed up with the Elephant Listening Project (ELP), which records acoustic data in rural areas in order to listen for threats to elephants and monitor their health.

Baby elephant calling out for mama elephant.

How are we going to do it?

Documentation for video (here)

Data preview / feature engineering

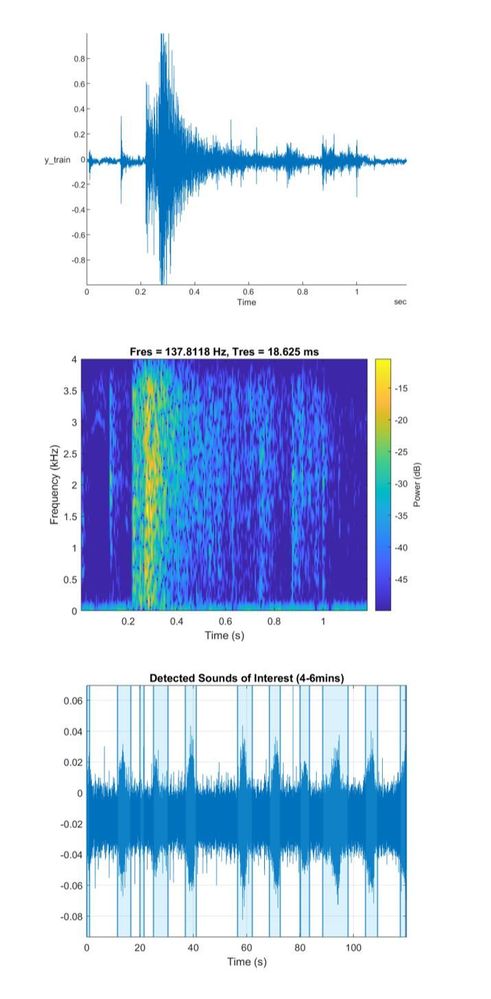

The key to many machine learning challenges boils down to the data: either there is not enough of it or there is too much. In this case, the rich audio files prove expensive and time-consuming to analyse in full. Hence, MATLAB 2020b and the Audio Toolbox were used to cut the audio files down into processable sizes.

Before we began, we conducted a brief literature search on what kind of audio snippets we needed to extract. One paper analysed gunshots in cultivated environments (Raponi et al.) and characterised two distinctive signals in the acoustic profile of most guns: a muzzle blast and a ballistic shockwave. This gave us a better idea of what kind of audio snippet profiles we should extract.

A variety of ELP audio recordings were obtained and placed into a directory for use within MATLAB 2020b, to ensure the audio snippets obtained reflected the diverse African environment. detectSpeech, a function in MATLAB’s Audio Toolbox, was used to extract audio of interest: snippets whose signal-to-noise ratio suggested speech because of their high-frequency signal. These extracted snippets were compared with a gunshot profile obtained from the ELP, with the spectrogram shown. This method allowed us to analyse only selected segments of the large 24-hour audio file.

This datastore of extracted snippets is used later to validate and test our models.
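To make the idea of pulling short events out of a long recording more concrete, here is a minimal Python sketch. It is not the MATLAB detectSpeech workflow described above: it swaps in a much simpler frame-energy threshold, and the function name `find_loud_segments`, the 50 ms frame length, and the 10 dB margin over the median energy are all assumptions made for illustration.

```python
import numpy as np


def find_loud_segments(signal, sample_rate, frame_seconds=0.05, threshold_db=10.0):
    """Return (start_s, end_s) pairs where frame energy rises above the noise floor.

    Hypothetical helper: the noise floor is taken as the median frame energy, and
    frames more than `threshold_db` above it are treated as events of interest.
    """
    frame_len = max(1, int(round(frame_seconds * sample_rate)))
    n_frames = len(signal) // frame_len
    if n_frames == 0:
        return []

    # Split the signal into non-overlapping frames and measure energy in dB.
    usable = np.asarray(signal[: n_frames * frame_len], dtype=float)
    frames = usable.reshape(n_frames, frame_len)
    energy_db = 10.0 * np.log10(np.mean(frames ** 2, axis=1) + 1e-12)
    active = energy_db > np.median(energy_db) + threshold_db

    # Merge runs of consecutive active frames into (start, end) times in seconds.
    segments = []
    start = None
    for i, is_active in enumerate(active):
        if is_active and start is None:
            start = i
        elif not is_active and start is not None:
            segments.append((start * frame_len / sample_rate, i * frame_len / sample_rate))
            start = None
    if start is not None:
        segments.append((start * frame_len / sample_rate, n_frames * frame_len / sample_rate))
    return segments
```

Each returned pair could then be used to slice the original recording into a snippet. A production detector would likely need smoothing and a minimum-duration rule, which this sketch leaves out.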

Modelling

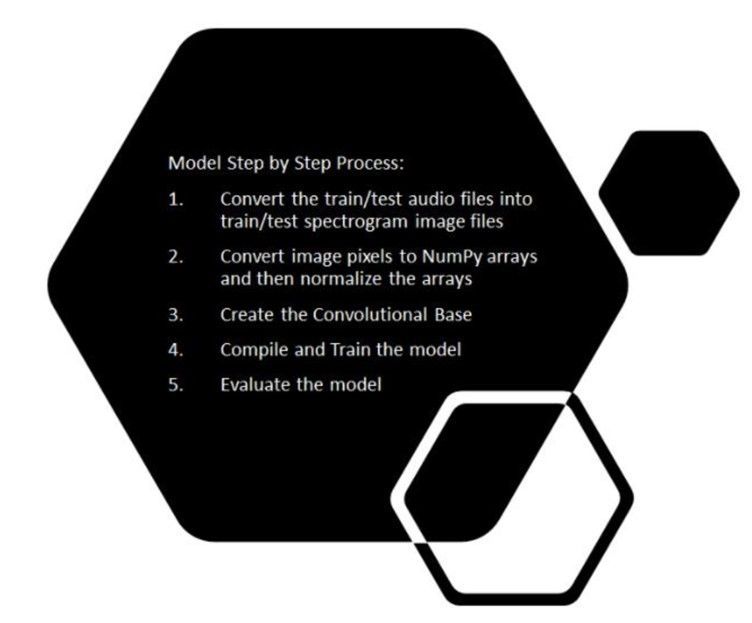

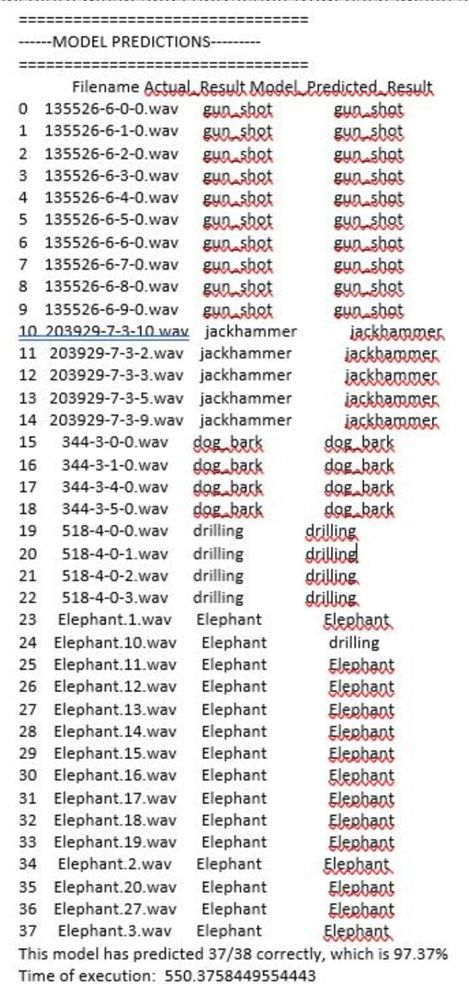

We designed and developed a deep learning model that takes in a dataset of audio files and classifies them as elephant sounds, gunshot sounds, or other urban sounds. Our training set contained approximately 8,000 audio files and our testing set approximately 2,000, spanning all three classes. Our procedure was to convert these audio files into spectrograms and then classify the spectrogram images. The step-by-step process is highlighted below:

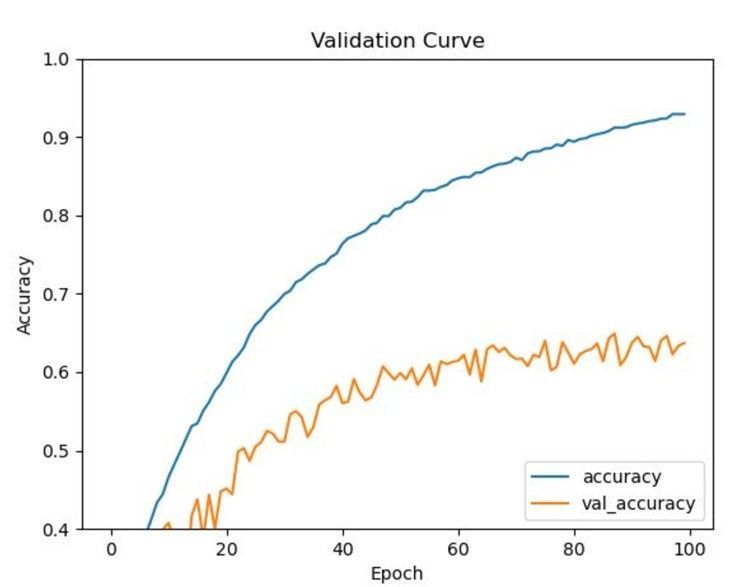
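The audio-to-spectrogram step can be sketched in a few lines of NumPy. This is an illustrative short-time Fourier transform, not necessarily the tooling we used; the function name `spectrogram_db`, the 512-sample Hann window, and the 256-sample hop are assumptions chosen for the example.

```python
import numpy as np


def spectrogram_db(signal, sample_rate, window_size=512, hop=256):
    """Return (freqs_hz, times_s, power_db) for a mono signal via a Hann-windowed STFT.

    Hypothetical helper: window and hop sizes are illustrative defaults.
    """
    signal = np.asarray(signal, dtype=float)
    if len(signal) < window_size:
        raise ValueError("signal is shorter than one analysis window")

    window = np.hanning(window_size)
    n_frames = 1 + (len(signal) - window_size) // hop

    # Slice overlapping frames, apply the window, and take the one-sided FFT.
    frames = np.stack(
        [signal[i * hop : i * hop + window_size] * window for i in range(n_frames)]
    )
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2

    # Convert to decibels and transpose so rows are frequency bins, columns are frames.
    power_db = 10.0 * np.log10(power + 1e-12).T
    freqs = np.fft.rfftfreq(window_size, d=1.0 / sample_rate)
    times = (np.arange(n_frames) * hop + window_size / 2) / sample_rate
    return freqs, times, power_db
```

The `power_db` array is what would be rendered as a spectrogram image: a gunshot shows up as a short broadband vertical stripe, while a tonal call concentrates its energy in a few frequency rows.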

After generating the spectrograms, we converted them into NumPy arrays, with the pixels of each image represented as array values. Spectrogram frequencies vary with the class of an audio file: the sound frequency pattern is different for elephants, gunshots, and so on. We want to classify each audio file based on the differences in sound frequencies captured in the spectrogram images. After converting the spectrograms into NumPy arrays, we normalized each array by subtracting the absolute mean from each image and then dividing by 256.

From this point, we applied convolutional layers and started training the model. We adjusted the hyperparameters to see which would yield better accuracy in classifying audio files. SGD tends to generalize better than Adam: it usually improves model performance more slowly but can achieve higher test performance. The figure below shows the results of the model:
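As a rough illustration of the normalization step described in this section, the sketch below reads "absolute mean" as the mean of the absolute pixel values of each image. That reading, and the helper names `normalize_spectrogram` and `normalize_batch`, are assumptions for the example rather than a copy of our training code.

```python
import numpy as np


def normalize_spectrogram(image):
    """Subtract the image's mean absolute pixel value, then divide by 256.

    Assumption: "absolute mean" is interpreted as mean(|pixel|) per image.
    """
    pixels = np.asarray(image, dtype=np.float32)
    return (pixels - np.mean(np.abs(pixels))) / 256.0


def normalize_batch(images):
    """Normalize each image independently and stack them into one array."""
    return np.stack([normalize_spectrogram(img) for img in images])
```

For 8-bit images this keeps every value within roughly plus or minus one, which is a range convolutional layers typically train well on.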

Deployment Strategy

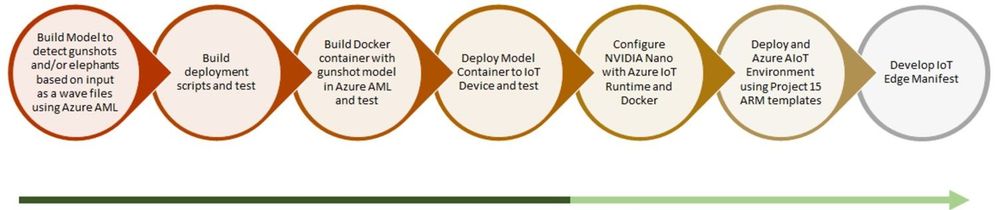

Our intended deployment strategy (as mentioned in our video presentation) is to deploy the trained model to an edge device, in our case an NVIDIA Jetson Nano connected to a microphone, which would sit in the field and perform real-time inferencing. The device would wake up whenever there is a sound and run inference to detect either gunshots or elephant noise.

For now, our model is deployed in the cloud using Azure ML Studio and hosted through a Docker endpoint on Azure Container Instances (ACI). Inferencing is currently performed in the cloud using this endpoint. To support this approach, all our data is hosted locally within our cloud workspace environment for the time being.

In parallel, we are testing the model deployment on our preferred edge device. Once this is done, we will be able to enable real-time inferencing on the edge. After that is successfully completed, we will also modify our data storage approach.

Conclusion and Future Directions

We currently have a working model that can detect gunshots as well as elephant sounds. Our model is deployed in the cloud and is ready for inferencing.

As part of our end-to-end workflow, we are testing our model container on our preferred IoT device. Once this test succeeds, we will configure our edge device with the Azure IoT Edge runtime and Docker, and then test real-time edge inferencing with gunshot and elephant sounds.

Once that succeeds, we will automate the entire deployment process using an ARM template. When we reach sufficient technical maturity and are ready to scale, we will develop an IoT Edge deployment manifest to replicate the desired configuration properties across a group of edge devices.

Our next steps, aimed solely at improving model performance:

- Classify elephant sounds based on demographics

- Combine gunshots with ambient sounds (Fourier transform)

- Eventually rework the way we infer so that a spectrogram file does not have to be created and ingested by the Keras model. This would be more of an in-memory operation.
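One way the gunshot-plus-ambient idea from the list above might look in practice is sketched below. It mixes the two clips in the time domain at a chosen signal-to-noise ratio, which is only one possible reading of "combine" (the list item mentions a Fourier transform, which this sketch does not use). The function name `mix_at_snr` and its parameters are hypothetical.

```python
import numpy as np


def mix_at_snr(event, ambient, snr_db, offset=0):
    """Overlay `event` on `ambient` starting at sample `offset`, scaled to `snr_db`.

    Hypothetical helper: SNR is measured between the scaled event and the
    ambient samples it overlaps, not the whole ambient clip.
    """
    event = np.asarray(event, dtype=float)
    mixed = np.array(ambient, dtype=float)  # copy so the input is left untouched
    if offset < 0 or offset + len(event) > len(mixed):
        raise ValueError("event does not fit inside the ambient clip at this offset")

    background = mixed[offset : offset + len(event)]
    event_power = np.mean(event ** 2)
    noise_power = np.mean(background ** 2)
    if event_power == 0 or noise_power == 0:
        raise ValueError("event and ambient segment must both be non-silent")

    # Choose the gain so that (gain^2 * event_power) / noise_power equals the target SNR.
    gain = np.sqrt(noise_power * 10.0 ** (snr_db / 10.0) / event_power)
    mixed[offset : offset + len(event)] = background + gain * event
    return mixed
```

Sweeping `snr_db` and `offset` over a range of values would give the model gunshots at different apparent distances and positions within realistic forest background noise.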

Contact us if you’d like to join us in helping Project 15

Although we are proud of our efforts, we recognise more brains out there can help inject creativity into this project to further assist Project 15 and ELP. Please visit our GitHub repo (here) to find more information on our work and where you can help!

https://github.com/Oxford-AI-Edge-2020-2021-Group-1/AI4GoodP15
