In December 2020, we introduced the Cluster API Provider for Azure (CAPZ) to enable users to operate self-managed Kubernetes clusters on Azure infrastructure. CAPZ empowers you to build and manage Kubernetes clusters on Azure using the same tools and methods you are already using to manage your Kubernetes workloads. Changing a configuration on the cluster becomes as easy as running a `kubectl patch` command.
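As a rough illustration of the `kubectl patch` workflow, the sketch below builds a JSON merge patch that changes the replica count of a Cluster API `MachineDeployment` and assembles the matching `kubectl patch` command line. The resource name `my-cluster-md-0`, the `default` namespace, and the helper function names are hypothetical; the script only prints the command and does not contact a cluster.

```python
import json
import shlex
from typing import List


def build_replica_patch(replicas: int) -> str:
    """Return a JSON merge patch that sets spec.replicas."""
    if replicas < 0:
        raise ValueError("replicas must be non-negative")
    return json.dumps({"spec": {"replicas": replicas}})


def build_patch_command(name: str, replicas: int, namespace: str = "default") -> List[str]:
    """Assemble a kubectl patch invocation for a MachineDeployment.

    The name and namespace are placeholders supplied by the caller.
    """
    return [
        "kubectl", "patch", "machinedeployment", name,
        "--namespace", namespace,
        "--type", "merge",
        "--patch", build_replica_patch(replicas),
    ]


if __name__ == "__main__":
    # Hypothetical MachineDeployment name; substitute one from your own cluster.
    command = build_patch_command("my-cluster-md-0", 5)
    # Print a shell-safe version of the command instead of running it.
    print(shlex.join(command))
```

Run against a management cluster, the printed command would ask Cluster API to reconcile the worker pool to the new size, assuming a `MachineDeployment` with that name exists.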

Started by the Kubernetes Special Interest Group (SIG) Cluster Lifecycle, the Cluster API project uses Kubernetes-style APIs and patterns to automate cluster lifecycle management for platform operators. CAPZ is the Azure provider implementation of Cluster API, and it is backed by Cluster API’s vibrant community of engineers from cloud providers, ISVs, and the wider FOSS community.

Since December, the community has continued growing, welcoming new members like Giant Swarm, getting closer to a v1alpha4 release, and starting to plan the v1beta1 release. The v1alpha4 cycle has focused mostly on stability and iterative improvements, paving the way for a 1.0 release. Even so, recent notable feature additions include support for Windows nodes and automatic remediation of unhealthy control plane machines via MachineHealthChecks.

As we look forward to the upcoming release, one capability that CAPZ users have requested is the ability to integrate Azure services with your Kubernetes cluster, such as turning on Azure Monitor to track the health and performance of your workloads, or enabling Azure Active Directory (AD) role-based access control (RBAC) to limit access to cluster resources based on a user’s identity or group membership. This is similar to the concept of AKS and AKS Engine addons that you might already be familiar with. But what if you could do this the same way across all your Kubernetes clusters?

Today, we’re excited to announce three new features for Azure Arc enabled Kubernetes that address the above-mentioned problems:

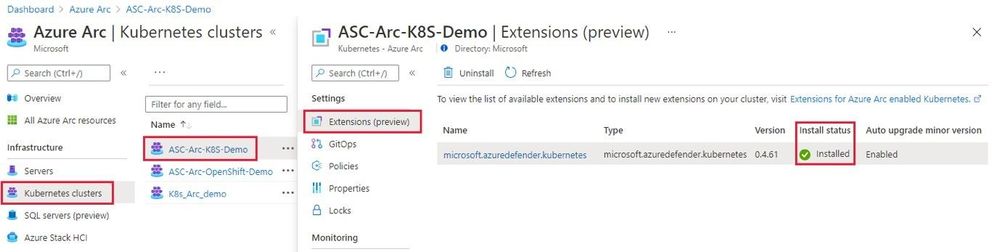

Cluster extensions introduce an Azure Resource Manager driven approach for deployment and lifecycle management of Azure Monitor and Azure Defender for Kubernetes. Because each cluster extension gets its own Azure Resource Manager representation, you can now also use Azure Policy for at-scale deployment of these extensions across all your CAPZ clusters.

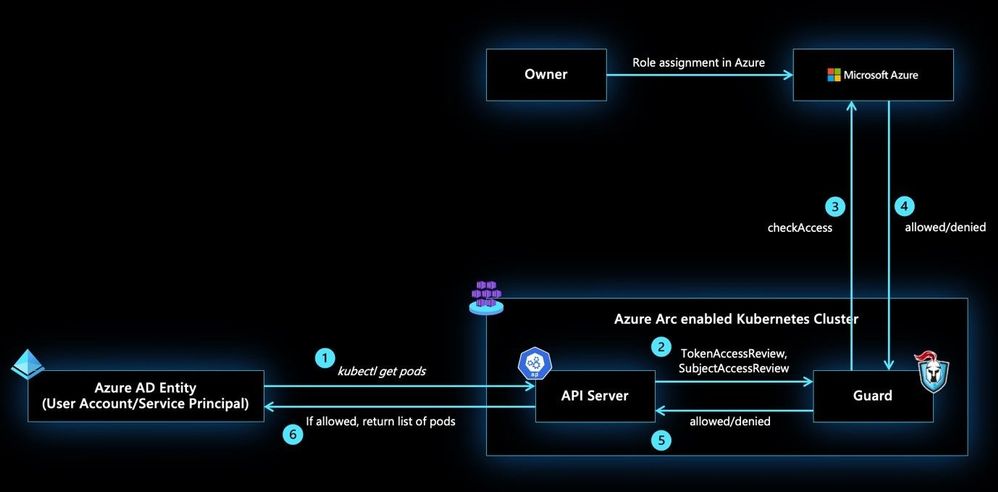

Azure RBAC for Arc enabled Kubernetes allows you to control authorization checks on your CAPZ clusters using role assignments on the Azure Arc enabled Kubernetes resource. These role assignments can be made at the cluster scope or the namespace scope to account for multi-tenant scenarios on the same cluster. In addition to the built-in roles defined as part of this feature, custom roles can be authored and used in role assignments to control permissions on Kubernetes resources at a more granular level.
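To make the difference between cluster scope and namespace scope concrete, here is a minimal sketch that builds the two scope strings a role assignment might target. It assumes the Azure Arc enabled Kubernetes resource is exposed as a `Microsoft.Kubernetes/connectedClusters` resource and that a namespace scope is formed by appending `/namespaces/<name>` to the cluster's resource ID. Treat that shape as an assumption to verify against the Azure RBAC documentation. The subscription ID, resource group, cluster name, and helper names are all placeholders.

```python
def cluster_scope(subscription_id: str, resource_group: str, cluster_name: str) -> str:
    """Resource ID of the Arc enabled Kubernetes cluster (assumed shape)."""
    return (
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Kubernetes/connectedClusters/{cluster_name}"
    )


def namespace_scope(subscription_id: str, resource_group: str,
                    cluster_name: str, namespace: str) -> str:
    """Scope limited to one namespace of the cluster (assumed shape)."""
    base = cluster_scope(subscription_id, resource_group, cluster_name)
    return f"{base}/namespaces/{namespace}"


if __name__ == "__main__":
    # Placeholder identifiers; none of these refer to real resources.
    sub = "00000000-0000-0000-0000-000000000000"
    print(cluster_scope(sub, "my-rg", "my-capz-cluster"))
    print(namespace_scope(sub, "my-rg", "my-capz-cluster", "team-a"))
```

A role assignment created at the first scope would apply across the whole cluster, while one created at the second would apply only to the `team-a` namespace, which is how several tenants can share a cluster with separate permissions.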

Custom Locations on top of Azure Arc enabled Kubernetes is envisioned as an evolution of the Azure location construct. It provides a way for tenant administrators to use their Azure Arc enabled Kubernetes clusters as target locations for deploying instances of Azure services. Examples of such services are Azure Arc enabled SQL Managed Instance and Azure Arc enabled PostgreSQL Hyperscale. As with Azure locations, end users within the tenant who have access to Custom Locations can deploy these Azure PaaS resources on their self-managed Kubernetes clusters, where they retain complete control over the specification of the CAPZ cluster.

Learn more about Cluster API + CAPZ

- Get started with Cluster API

- Explore the Cluster API documentation

- Explore the CAPZ documentation

Join the Cluster API community

- Join the community on Slack at #cluster-api and #cluster-api-azure

- Join the Cluster API office hours and watch recordings of past calls

Learn more about Azure Arc enabled Kubernetes

- Documentation on connecting your cluster to Azure Arc

- Cluster extensions documentation

- Azure RBAC documentation

- Custom Locations documentation
