
This article is the first part of a series that explores an end-to-end pipeline for deploying an air quality monitoring application using off-the-shelf sensors, the Azure IoT ecosystem, and Python. We will begin by looking at the problem, some terminology, the prerequisites, a reference architecture, and an implementation.

Indoor Air Quality – why does it matter and how to measure it with IoT?

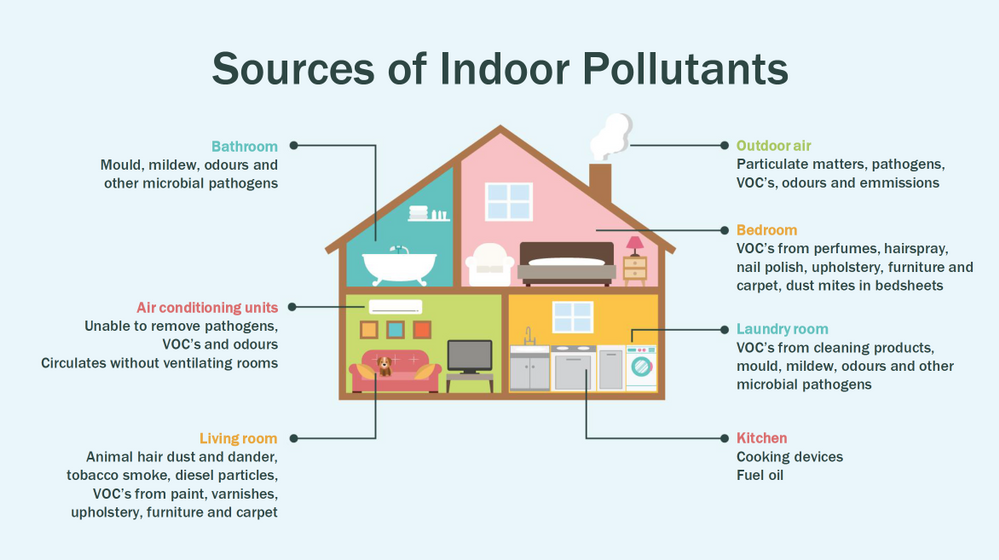

Most people think of air pollution as an outdoor problem, but indoor air quality has a major impact on health and well-being, since the average American spends about 90 percent of their time indoors. Proper ventilation is one of the most important considerations for maintaining good indoor air quality. Poor indoor air quality is known to be harmful to vulnerable groups such as the elderly, children, or those suffering from chronic respiratory and/or cardiovascular diseases. Here is a quick visual on some sources of indoor air pollution.

Post Covid-19, we are in a world where awareness of our indoor environments is key for survival. Here in Canada we are quite aware of the situation, which is why we have a set of guidelines from the Government of Canada and a recent white paper from Public Health Ontario. The American Medical Association has put up this excellent document for reference. So now that we know what the problem is, how do we go about solving it? To solve something we must be able to measure it, and currently we have some popular metrics to measure air quality, viz. IAQ and VOC.

So what are IAQ and VOC exactly?

Indoor air quality (IAQ) is the air quality within and around buildings and structures. IAQ is known to affect the health, comfort, and well-being of building occupants. IAQ can be affected by gases (including carbon monoxide, radon, volatile organic compounds), particulates, microbial contaminants (mold, bacteria), or any mass or energy stressor that can induce adverse health conditions. IAQ is part of indoor environmental quality (IEQ), which includes IAQ as well as other physical and psychological aspects of life indoors (e.g., lighting, visual quality, acoustics, and thermal comfort). In the last few years IAQ has received increasing attention from environmental governance authorities, and IAQ-related standards are getting stricter. Here is an IAQ blog infographic if you’d like to read more.

Volatile organic compounds (VOCs) are organic chemicals that have a high vapor pressure at room temperature. High vapor pressure correlates with a low boiling point, which relates to the number of the sample’s molecules in the surrounding air, a trait known as volatility. VOCs are responsible for the odor of scents and perfumes as well as pollutants. VOCs play an important role in communication between animals and plants, e.g. attractants for pollinators, protection from predation, and even inter-plant interactions. Some VOCs are dangerous to human health or cause harm to the environment. Anthropogenic VOCs are regulated by law, especially indoors, where concentrations are the highest. Most VOCs are not acutely toxic, but may have long-term chronic health effects. Refer to this and this for vivid details.

The point is, in a post-pandemic world, having a centralized air quality monitoring system is an absolute necessity. Collecting this data and using the insights from it is crucial to living better. And this is where Azure IoT comes in. In this series we are going to explore how to create the moving parts of this platform with ‘minimum effort’. In this first part, we are going to concentrate our efforts on the overall architecture, hardware/software requirements, and IoT Edge module creation.

Prerequisites

To accomplish our goal we will ideally need to meet a few basic criteria. Here is a short list.

- Air Quality Sensor (link)

- IoT Edge device (link)

- Active Azure subscription (link)

- Development machine

- Working knowledge of Python, SQL, Docker, JSON, IoT Edge runtime, VSCode

- Perseverance

Let’s go into a bit of detail about the aforementioned points, since there are many possibilities.

Air Quality Sensor

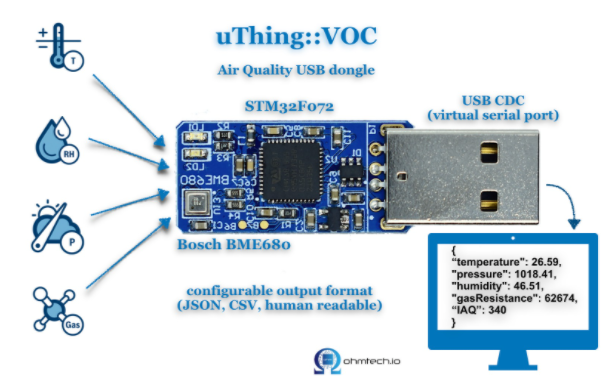

This is the sensor that emits the actual IAQ/VOC+ data. There are a lot of options in this category, and in principle they should produce the same results. However, the best sensors on the market are Micro-Electro-Mechanical Systems (MEMS). MEMS technology uses semiconductor fabrication processes to produce miniaturized mechanical and electro-mechanical elements that range in size from less than one micrometer to several millimeters. MEMS devices can vary from relatively simple structures with no moving elements to complex electromechanical systems with multiple moving elements. My choice was the uThing::VOC™ Air-Quality USB sensor dongle, mainly for its high-quality output and ease of interfacing: it is USB out of the box and does not require any installation. Have a look at the list of features available on this dongle. The main components are the BME680 sensor and a proprietary Bosch algorithm, which together do all the hard work. It’s basically plug-and-play. The data is emitted in JSON format on the serial port of your device at an interval of about 3 seconds (the timestamps in the monitoring output at the end of this article are 3 seconds apart). In my case the port was /dev/ttyACM0, but it could be different in yours.

IoT Edge device

This is the edge system where the sensor is plugged in. Typical choices are Windows or Linux. If you go with Windows, be aware that some of these steps may be different and you will have to figure those out. In my case I am using Ubuntu 20.04 installed on an Intel NUC. I chose the NUC because many IoT modules require an x86_64 machine, which rules out ARM devices (Jetson, Raspberry Pi, etc.). Technically this should work on any edge device with a USB port, but Windows, for example, has an issue mounting serial ports onto containers. I suggest sticking with Linux unless Windows is a client requirement.

Active Azure subscription

Surely you will need this one, but as we know Azure has an immense suite of products, and while ideally we want to have everything, it may not be practically feasible. For practical purposes you might have to ask for access to particular services, meaning you have to know ahead of time exactly which ones you want to use. Of course the list of required services will vary between use cases, so we will begin with just the bare minimum. We will need the following:

- Azure IoT Hub (link)

- Azure Container Registry (link)

- Azure blob storage (link)

- Azure Stream Analytics (link)(future article)

- Power BI / React App (link)(future article)

- Azure Linux VM (link)(optional)

A few points before we move to the next prerequisite. For IoT Hub you can use the free tier for experiments, but I recommend the standard tier instead. For ACR, get the usual tier and generate a username and password. For the storage account, use the standard tier. The ASA and Power BI products appear in the reference architecture but are not discussed in this article. The final service, Azure VM, is an interesting one. Potentially the whole codebase can be run on a VM, but this is only good for simulations. Still, it is a good idea to experiment with VMs first, as they have great integration and ease the learning curve.

Development machine

The development machine can be literally anything from which you have SSH access to the edge device. From an OS perspective it can be Windows, Linux, Raspbian, macOS, etc. Just remember two things: use a good IDE (e.g. VSCode) and make sure Docker can run on it, optionally with privileges. In my case I am using a StarTech KVM, so I can switch between my Windows machine and the actual edge device for development purposes, but it is not necessary.

Working knowledge of Python, SQL, Docker, JSON, IoT Edge runtime, VSCode

This is where it gets tricky. Having a mix of these skills is somewhat essential to creating and scaling this platform. However, I understand you may not be proficient in all of them. On that note, I can tell from experience that coming from a data engineering background has been extremely beneficial for me. In any case, you will need some Python, some SQL, and JSON. Even knowing how to use the VSCode IoT extension is non-trivial. One thing worth stressing is that good Docker knowledge is extremely important, as the edge module is in fact simply a Docker container that is deployed through the deployment manifest (IoT Edge runtime).

Perseverance

In an ideal world, you read a tutorial, implement it, it works, and you make merry. The real world unfortunately will bring challenges that you have not seen anywhere. Trust me on this: many times you will make good progress simply by not quitting what you are doing. That’s it. That is the secret ingredient. It’s like applying gradient descent to your own brain model of a concept. Anytime any of this doesn’t work, simply have belief in Azure and yourself. You will always find a way. Okay, enough of that. Let’s get down to business.

Reference Architecture

Here is a reference architecture that we can use to implement this platform. This is how I have done it. Please feel free to do your own.

Most of this is quite simple. Just go through the documentation for Azure and you should be fine. Following this we go to what everyone is waiting for – the implementation.

Implementation

In this section we will see how we can use these tools to our benefit. For the Azure resources I may not go through the entire creation or installation process as there are quite a few articles on the internet for doing those. I shall only mention the main things to look out for. Here is an outline of the steps involved in the implementation.

1. Create a resource group in Azure (link)

2. Create an IoT hub in Azure (link)

3. Create an IoT Edge device in Azure (link)

4. Install Ubuntu 18.04 or 20.04 on the edge device

5. Plug the USB sensor into the edge device and check for the blue light

6. Install Docker on the edge device

7. Install VSCode on the development machine

8. Create a conda/pip environment for development

9. Read the USB serial device and check that JSON arrives every few seconds

10. Install the IoT Edge runtime on the edge device (link)

11. Provision the device to Azure IoT using the connection string (link)

12. Check that the IoT Edge runtime is running properly on the edge device and in the portal

13. Create an IoT Edge solution in VSCode (link)

14. Add a Python module to the deployment (link)

15. Mount the serial port to the module in the deployment

16. Add the codebase to read data from the mounted serial port

17. Augment the sensor data with business data

18. Send the output as events to IoT Hub

19. Build and push the IoT Edge solution (link)

20. Create a deployment from the template (link)

21. Deploy the solution to the device

22. Monitor the endpoint to consume the output data as events

Okay, I know that is a long list. But you must have noticed that some are very basic steps. I mentioned them so everyone has a starting reference point for the sequence of steps to be taken. You have a high chance of success if you do it like this. Let’s go into some details now. It’s a mix of things, so I will just put them as flowing text.

90% of what’s mentioned in the list above can be done by following a combination of the documents in the official Azure IoT Edge documentation. I highly advise you to scour these documents with eagle eyes multiple times. The main reason is that, unlike other technologies where you can literally ‘stackoverflow’ your way through things, you will not have that luxury here. I have been following every commit in their git repo for years and can tell you the tools and documentation change almost every single day. That means your wits and this document are pretty much all you have in your arsenal. The good news is that Microsoft makes very good documentation, and even though it’s impossible to cover everything, they make an attempt to do it from multiple perspectives and use cases. Special mention to the following articles.

- Develop IoT module

- Deploy your first IoT Edge module

- Register an IoT Edge device in IoT Hub

- Install or uninstall Azure IoT Edge for Linux

- Understand Azure IoT Edge modules

- Develop your own IoT Edge modules

- How to deploy modules and establish routes

Once you are familiar with the ‘build, ship, deploy’ mechanism using the copious SimulatedTemperatureSensor module examples from Azure Marketplace, you are ready to handle the real thing. The only real challenges you will have are at steps 9, 15, 16, 17, and 18. Let’s see how we can make things easy there. For 9 I can simply run a cat command on the serial port.

cat /dev/ttyACM0

This gives me one line of output roughly every 3 seconds.

{"temperature": 23.34, "pressure": 1005.86, "humidity": 40.25, "gasResistance": 292401, "IAQ": 33.9, "iaqAccuracy": 1, "eqCO2": 515.62, "eqBreathVOC": 0.53}
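A line like the one printed by cat can be checked in Python before any Azure code is involved. The following is a minimal sketch, not the author's code: `parse_reading` and `EXPECTED_FIELDS` are hypothetical names, and treating every field of the sample as required is an assumption made only for illustration.

```python
import json

# Field names copied from the sample line above. Treating all of them as
# required is an assumption of this sketch.
EXPECTED_FIELDS = (
    "temperature", "pressure", "humidity", "gasResistance",
    "IAQ", "iaqAccuracy", "eqCO2", "eqBreathVOC",
)


def parse_reading(raw: bytes) -> dict:
    """Decode one line read from the serial port into a dict of readings."""
    reading = json.loads(raw.decode("utf-8").strip())
    if not isinstance(reading, dict):
        raise ValueError("expected a JSON object")
    missing = [name for name in EXPECTED_FIELDS if name not in reading]
    if missing:
        raise ValueError(f"reading is missing fields: {missing}")
    return reading


# The sample line from above, as it would arrive from the serial port.
sample = (
    b'{"temperature": 23.34, "pressure": 1005.86, "humidity": 40.25, '
    b'"gasResistance": 292401, "IAQ": 33.9, "iaqAccuracy": 1, '
    b'"eqCO2": 515.62, "eqBreathVOC": 0.53}\r\n'
)
reading = parse_reading(sample)
print(reading["IAQ"], reading["eqCO2"])  # prints: 33.9 515.62
```

Failing loudly on a malformed line at this stage makes it easier to tell a sensor problem apart from a deployment problem later on.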

The JSON line printed by cat is exactly the data that the module will receive once the serial port is successfully mounted onto it. The mount is declared in the module’s entry in the deployment manifest:

    "AirQualityModule": {
      "version": "1.0",
      "type": "docker",
      "status": "running",
      "restartPolicy": "always",
      "settings": {
        "image": "${MODULES.AirQualityModule}",
        "createOptions": {
          "Env": [
            "IOTHUB_DEVICE_CONNECTION_STRING=$IOTHUB_IOTEDGE_CONNECTION_STRING"
          ],
          "HostConfig": {
            "Dns": [
              "1.1.1.1"
            ],
            "Devices": [
              {
                "PathOnHost": "/dev/ttyACM0",
                "PathInContainer": "/dev/ttyACM0",
                "CgroupPermissions": "rwm"
              }
            ]
          }
        }
      }
    }

Notice the Devices block in the above extract from the deployment manifest. Using these keys/values we are able to mount the serial port onto the custom module aptly named AirQualityModule. So we got 15 covered.
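If the serial port differs between devices, the Devices entry can be generated rather than edited by hand. This is only a sketch under that assumption: `serial_device_mapping` and `create_options` are hypothetical helpers, and the keys are the ones shown in the manifest extract.

```python
import json


def serial_device_mapping(host_path: str, container_path: str = "") -> dict:
    """Build one HostConfig.Devices entry exposing a host device node."""
    return {
        "PathOnHost": host_path,
        # Default to the same path inside the container, as in the extract.
        "PathInContainer": container_path or host_path,
        "CgroupPermissions": "rwm",  # read, write, mknod
    }


def create_options(host_path: str) -> dict:
    """Return the part of createOptions that mounts the serial port."""
    return {"HostConfig": {"Devices": [serial_device_mapping(host_path)]}}


print(json.dumps(create_options("/dev/ttyACM0"), indent=2))
```

The printed fragment can be compared against the Devices block in the manifest to confirm the port name before deploying.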

Adding the codebase to the module is quite simple too. When the module is generated by VSCode, it automatically gives you the Dockerfile (Dockerfile.amd64) and a sample main.py. We will just create a copy of that file in the same repo and call it, say, air_quality.py. Inside this new file we will hotwire the code to read the device output. However, before modifying the code we must edit requirements.txt. Mine looks like this:

azure-iot-device

psutil

pyserial

azure-iot-device provides the edge SDK libraries, pyserial is for reading the serial port, and psutil is used further down to read the CPU temperature. The imports look like this:

    import json
    import sys
    import time
    from datetime import datetime

    # from influxdb import InfluxDBClient
    import psutil
    import serial
    from azure.iot.device import IoTHubModuleClient, Message

Quite self-explanatory. Notice that the InfluxDB import is commented out, meaning you can send these readings there too through the module. To cover 16 we will need the final three pieces of code. Here they are:

    message = ""
    # uart = serial.Serial('/dev/tty.usbmodem14101', 115200, timeout=11)  # macOS
    uart = serial.Serial('/dev/ttyACM0', 115200, timeout=11)  # Linux

    uart.write(b'J\n')          # send the 'J' command to the dongle
    message = uart.readline()   # read one line (one JSON document)
    uart.flushInput()
    if debug:  # `debug` is assumed to be a boolean flag defined elsewhere in the module
        print('message...')
        print(message)

    data_dict = json.loads(message.decode())

There, that’s it! With three pieces of code you have taken the data emitted by the sensor and turned it into your desired JSON format using Python. 16 is covered. For 17 we will just update the dictionary with business data. In my case it goes as follows: I am attaching a sensor name and coordinates to find me.

    data_dict.update({'sensorId': 'roomAQSensor'})
    data_dict.update({'longitude': -79.025270})
    data_dict.update({'latitude': 43.857989})
    # First 'acpitz' entry; index 1 of that entry is the current temperature.
    data_dict.update({'cpuTemperature': psutil.sensors_temperatures().get('acpitz')[0][1]})
    data_dict.update({'timeCreated': datetime.now().strftime("%Y-%m-%d %H:%M:%S")})
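The 'acpitz' lookup above raises an error on machines that do not report that chip. A more defensive variant might look like the following sketch. The names `cpu_temperature`, `enrich` and `Temp` are hypothetical, and `Temp` is a stand-in that mirrors the field order of psutil's temperature entries so the example runs without psutil or the hardware.

```python
from collections import namedtuple
from datetime import datetime

# Stand-in with the same field order as psutil's temperature entries
# (label, current, high, critical), so the sketch runs without psutil.
Temp = namedtuple("Temp", ["label", "current", "high", "critical"])


def cpu_temperature(sensors: dict, chip: str = "acpitz"):
    """Return the first current temperature for `chip`, or None if absent."""
    entries = sensors.get(chip) or []
    return entries[0].current if entries else None


def enrich(reading, sensor_id, longitude, latitude, sensors, now=None):
    """Return a copy of `reading` with the business fields added."""
    enriched = dict(reading)  # leave the caller's dict untouched
    enriched.update({
        "sensorId": sensor_id,
        "longitude": longitude,
        "latitude": latitude,
        "cpuTemperature": cpu_temperature(sensors),
        "timeCreated": (now or datetime.now()).strftime("%Y-%m-%d %H:%M:%S"),
    })
    return enriched


fake_sensors = {"acpitz": [Temp("", 27.8, 105.0, 105.0)]}
row = enrich({"IAQ": 33.9}, "roomAQSensor", -79.025270, 43.857989,
             fake_sensors, now=datetime(2021, 7, 15, 18, 33, 28))
print(row["cpuTemperature"], row["timeCreated"])  # prints: 27.8 2021-07-15 18:33:28
```

On the edge device, `psutil.sensors_temperatures()` would be passed as `sensors`; a missing chip then yields `None` for `cpuTemperature` instead of crashing the module.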

For 18 it is as simple as

    print('data dict...')
    print(data_dict)

    # module_client is an IoTHubModuleClient instance created earlier in the module.
    msg = Message(json.dumps(data_dict))
    msg.content_encoding = "utf-8"
    msg.content_type = "application/json"
    module_client.send_message_to_output(msg, "airquality")
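Steps 16 to 18 can be tied together in one loop. The sketch below is an illustration and not the author's module: `pump` is a hypothetical function that takes the port and the sender as arguments, so it can be tried with an in-memory stream instead of the real sensor and IoT Hub. Stopping on an empty read is a simplification made for this example.

```python
import io
import json


def pump(port, send, enrich=lambda reading: reading, max_messages=None):
    """Read JSON lines from `port`, enrich them and pass them to `send`.

    Returns the number of messages sent. Stops on an empty read (a timeout
    on a serial port, end of data on a file) or after `max_messages`.
    """
    sent = 0
    while max_messages is None or sent < max_messages:
        raw = port.readline()
        if not raw:
            break
        try:
            reading = json.loads(raw.decode("utf-8"))
        except (UnicodeDecodeError, json.JSONDecodeError):
            continue  # skip partial or garbled lines
        send(json.dumps(enrich(reading)))
        sent += 1
    return sent


# In-memory stand-ins for the serial port and the IoT Hub output.
fake_port = io.BytesIO(b'{"IAQ": 33.9}\nnot json\n{"IAQ": 35.1}\n')
outbox = []
count = pump(fake_port, outbox.append,
             enrich=lambda r: {**r, "sensorId": "roomAQSensor"})
print(count, outbox[0])  # prints: 2 {"IAQ": 33.9, "sensorId": "roomAQSensor"}
```

In the module itself, `port` would be the `serial.Serial` object and `send` a small function that wraps the payload in a `Message` and calls `module_client.send_message_to_output(msg, "airquality")`, as in the code above.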

Before doing step 19, two things must happen. First, you need to replace the default main.py in the Dockerfile with air_quality.py. Second, you must use proper entries in the .env file to generate the deployment and deploy successfully. We can quickly check that the Docker image exists before the actual deployment.

docker images

iotregistry.azurecr.io/airqualitymodule 0.0.1-amd64 030b11fce8af 4 days ago 129MB

Now you are good to deploy. Use this tutorial to help deploy successfully. At the end of step 22 this is what it looks like upon consuming the endpoint through VSCode.

    [IoTHubMonitor] Created partition receiver [0] for consumerGroup [$Default]
    [IoTHubMonitor] Created partition receiver [1] for consumerGroup [$Default]
    [IoTHubMonitor] [2:33:28 PM] Message received from [azureiotedge/AirQualityModule]:
    {
      "temperature": 28.87,
      "pressure": 1001.15,
      "humidity": 38.36,
      "gasResistance": 249952,
      "IAQ": 117.3,
      "iaqAccuracy": 1,
      "eqCO2": 661.26,
      "eqBreathVOC": 0.92,
      "sensorId": "roomAQSensor",
      "longitude": -79.02527,
      "latitude": 43.857989,
      "cpuTemperature": 27.8,
      "timeCreated": "2021-07-15 18:33:28"
    }
    [IoTHubMonitor] [2:33:31 PM] Message received from [azureiotedge/AirQualityModule]:
    {
      "temperature": 28.88,
      "pressure": 1001.19,
      "humidity": 38.35,
      "gasResistance": 250141,
      "IAQ": 115.8,
      "iaqAccuracy": 1,
      "eqCO2": 658.74,
      "eqBreathVOC": 0.91,
      "sensorId": "roomAQSensor",
      "longitude": -79.02527,
      "latitude": 43.857989,
      "cpuTemperature": 27.8,
      "timeCreated": "2021-07-15 18:33:31"
    }
    [IoTHubMonitor] Stopping built-in event endpoint monitoring...
    [IoTHubMonitor] Built-in event endpoint monitoring stopped.
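A quick sanity check on the monitored events is to compare the timeCreated values of consecutive messages. The sketch below assumes the timestamp format used in the module code; `gaps_in_seconds` is a hypothetical helper, and the two timestamps are taken from the output above.

```python
from datetime import datetime

FMT = "%Y-%m-%d %H:%M:%S"  # format used for timeCreated in the module code


def gaps_in_seconds(timestamps):
    """Return the time difference in seconds between consecutive timestamps."""
    parsed = [datetime.strptime(t, FMT) for t in timestamps]
    return [(later - earlier).total_seconds()
            for earlier, later in zip(parsed, parsed[1:])]


print(gaps_in_seconds(["2021-07-15 18:33:28", "2021-07-15 18:33:31"]))  # prints: [3.0]
```

A gap much larger than the sensor's reporting interval would suggest that readings are being dropped somewhere between the serial port and IoT Hub.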

Congratulations! You have successfully completed the most vital step in creating a scalable air quality monitoring platform from scratch using Azure IoT.

Future Work

Keep an eye out for a follow-up to this article, where I shall discuss how to continue the end-to-end pipeline and actually visualize the data in Power BI.

