
Hello Folks,

As we announced last month (Announcing the general availability of Azure shared disks and new Azure Disk Storage enhancements), Azure shared disks are now generally available.

Azure shared disks are the only shared block storage in the cloud that supports both Windows and Linux-based clustered or high-availability applications. A single disk can now be attached to multiple VMs, which lets you run applications such as SQL Server Failover Cluster Instances (FCI), Scale-out File Servers (SoFS), Remote Desktop Servers (RDS), and SAP ASCS/SCS on Windows Server. That, in turn, makes it easier to migrate applications currently running on-premises on Storage Area Networks (SANs) to Azure.

Shared disks are available on both Ultra Disks and Premium SSDs.

For this post, we’ll deploy a 2-node Windows Server Failover Cluster (WSFC) using Cluster Shared Volumes (CSV). Both VMs then have simultaneous write access to the disk, so the ReadWrite throttle is split across the two VMs and the ReadOnly throttle is not used. We’ll do it using the new Windows Admin Center failover clustering experience.
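To make the throttle split concrete, here is a small shell sketch. It assumes, purely for illustration, that both nodes drive the disk equally, so each one sees roughly half of the disk's ReadWrite IOPS. The helper name `per_vm_share` and the 2000 IOPS figure are illustrative values, not something Azure reports per VM.

```shell
# Hypothetical helper: even share of a disk-level ReadWrite throttle.
# Assumption: every VM with write access to the disk is equally busy.
per_vm_share() {
  local total="$1" writers="$2"
  echo $(( total / writers ))
}

per_vm_share 2000 2   # prints 1000
```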

Azure shared disks are supported on Windows Server 2008 and newer, and on the following Linux distros:

- SUSE SLE for SAP and SUSE SLE HA 15 SP1 and above

- Ubuntu 18.04 and above

- RHEL developer preview on any RHEL 8 version

- Oracle Enterprise Linux

Currently, only ultra disks and premium SSDs can be enabled as shared disks. Each managed disk that has shared disks enabled is subject to the following limitations, organized by disk type:

Ultra disks

Ultra disks have their own separate list of limitations, unrelated to shared disks. For ultra disk limitations, refer to Using Azure ultra disks.

Shared ultra disks have the following additional limitations:

- Currently limited to Azure Resource Manager or SDK support.

- Only basic disks can be used with some versions of Windows Server Failover Cluster. For details, see Failover clustering hardware requirements and storage options.

Shared ultra disks are available in all regions that support ultra disks by default, and do not require you to sign up for access to use them.

Premium SSDs

- Currently only supported in the West Central US region.

- Currently limited to Azure Resource Manager or SDK support.

- Can only be enabled on data disks, not OS disks.

- ReadOnly host caching is not available for premium SSDs with maxShares>1.

- Disk bursting is not available for premium SSDs with maxShares>1.

- When using Availability sets and virtual machine scale sets with Azure shared disks, storage fault domain alignment with virtual machine fault domain is not enforced for the shared data disk.

- When using proximity placement groups (PPG), all virtual machines sharing a disk must be part of the same PPG.

- Only basic disks can be used with some versions of Windows Server Failover Cluster. For details, see Failover clustering hardware requirements and storage options.

- Azure Backup and Azure Site Recovery support is not yet available.
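For comparison with the ultra disk used later in this post, a premium SSD shared disk could be created along these lines. This is only a sketch: the function name and the resource group and disk name arguments are placeholders, the region follows the West Central US restriction listed above, and the size and `--max-shares` values are example choices.

```shell
# Sketch: create a premium SSD data disk with sharing enabled.
# Resource group and disk name are supplied by the caller (placeholders).
create_premium_shared_disk() {
  local rg="$1" name="$2"
  az disk create \
    -g "$rg" \
    -n "$name" \
    --size-gb 1024 \
    -l westcentralus \
    --sku Premium_LRS \
    --max-shares 2
}
```

It would be called as `create_premium_shared_disk Demo-Cluster premiumshareddisk`, where the disk name is made up for this example.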

Let’s get on with the creation of our cluster. In my test environment, I have two Windows Server 2019 VMs that will be used as our cluster nodes. They are joined to a domain through a DC in the same virtual network on Azure. Windows Admin Center (WAC) is running on a separate VM, and all of these machines are accessed using an Azure Bastion server.

When creating the VMs, you need to enable Ultra Disk compatibility in the Disk section. If your shared ultra disk is already created, you can attach it as you create the VM. In my case, I will attach it to the existing VMs in the next step.

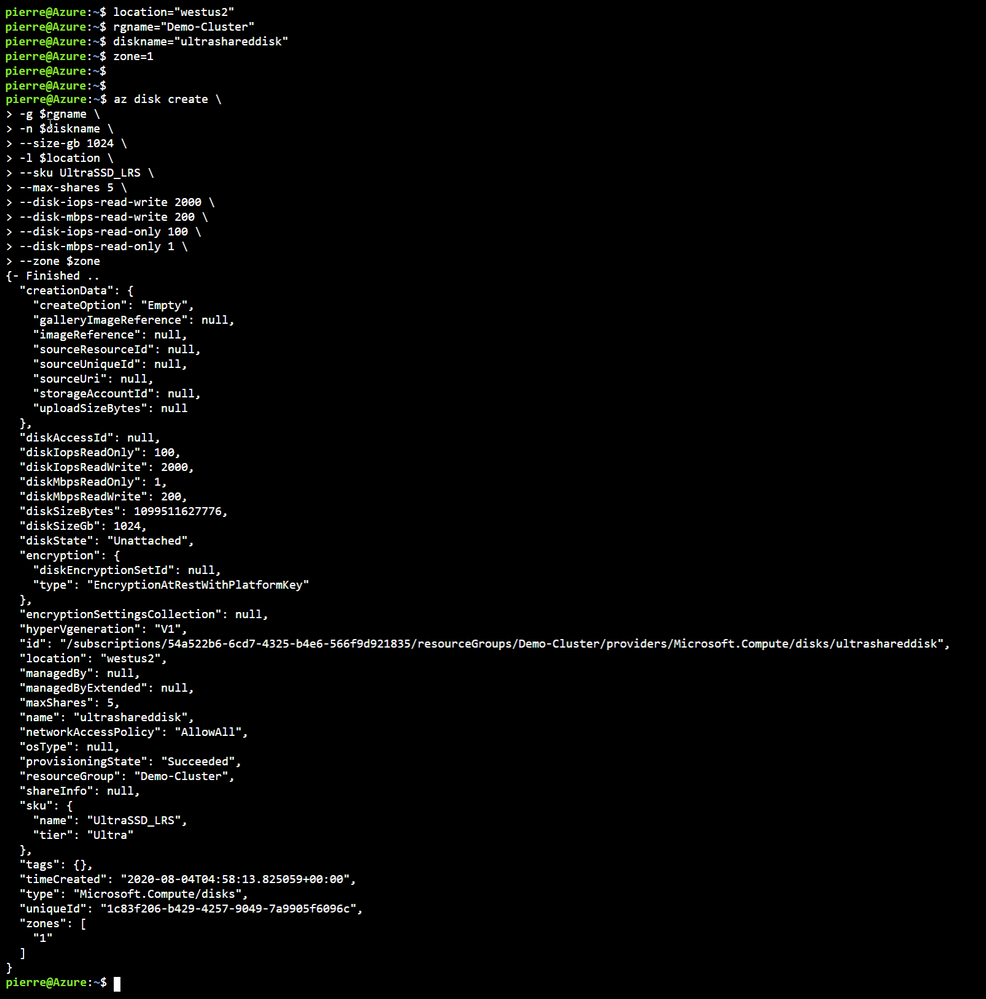
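If a VM already exists without that setting, the Azure CLI can turn it on afterwards. The sketch below assumes the VM's size and zone support ultra disks and that a short outage is acceptable, because the VM has to be deallocated before the update. The function name is hypothetical.

```shell
# Sketch: enable ultra disk compatibility on an existing VM.
# Each step runs only if the previous one succeeded.
enable_ultra_compat() {
  local rg="$1" vm="$2"
  az vm deallocate -g "$rg" -n "$vm" &&
  az vm update -g "$rg" -n "$vm" --ultra-ssd-enabled true &&
  az vm start -g "$rg" -n "$vm"
}
```

For example, `enable_ultra_compat Demo-Cluster node1`, where `node1` stands in for one of the cluster node VM names.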

First, we need to deploy an ultra disk as a shared disk. To deploy a managed disk with the shared disk feature enabled, you must set the “maxShares” parameter to a value greater than 1. This makes the disk shareable across multiple VMs. I used Cloud Shell through the portal and the following Azure CLI commands to perform that operation. Notice that we also need to set the zone parameter to the zone where the VMs are located (Azure shared disks across availability zones are not yet supported).

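    # Variables (bash does not allow spaces around "=")
    location="westus2"
    rgname="Demo-Cluster"
    diskname="ultrashareddisk"
    zone=1

    # Create a 1 TiB ultra disk that up to 5 VMs can share,
    # in the same availability zone as the VMs
    az disk create \
      -g "$rgname" \
      -n "$diskname" \
      --size-gb 1024 \
      -l "$location" \
      --sku UltraSSD_LRS \
      --max-shares 5 \
      --disk-iops-read-write 2000 \
      --disk-mbps-read-write 200 \
      --disk-iops-read-only 100 \
      --disk-mbps-read-only 1 \
      --zone "$zone"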

We end up with the following result:
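The same result can be checked from the CLI. The sketch below wraps `az disk show` in a small helper (the name `disk_is_shared` is made up here) that succeeds only when the disk reports a `maxShares` value greater than 1.

```shell
# Sketch: succeed only if the disk reports maxShares greater than 1.
disk_is_shared() {
  local rg="$1" name="$2" shares
  shares=$(az disk show -g "$rg" -n "$name" --query maxShares -o tsv) || return 1
  # Treat empty or non-numeric output as "not shared".
  case "$shares" in
    ''|*[!0-9]*) return 1 ;;
  esac
  [ "$shares" -gt 1 ]
}
```

With the variables from the previous step, `disk_is_shared "$rgname" "$diskname" && echo "disk is shareable"` would confirm the setting.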

Once the shared disk is created, we can attach it to BOTH VMs that will be our cluster nodes. I attached the disk to each VM through the Azure portal by navigating to the VM and, in the Disk management pane, clicking “+ Add data disk” and selecting the disk I created above.

Now that the shared disk is attached to both VMs, I use the WAC cluster deployment workflow to create the cluster.
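The portal is not the only option. As a rough CLI equivalent, the sketch below loops over VM names and attaches the same disk to each one with `az vm disk attach`. It assumes the VMs and the disk live in the same resource group, and the function name is hypothetical.

```shell
# Sketch: attach one shared disk to every VM listed after the disk name.
attach_shared_disk() {
  local rg="$1" disk="$2" vm
  shift 2
  for vm in "$@"; do
    az vm disk attach -g "$rg" --vm-name "$vm" --name "$disk" || return 1
  done
}
```

A call such as `attach_shared_disk Demo-Cluster ultrashareddisk node1 node2` would attach the disk to both nodes; `node1` and `node2` are placeholder VM names.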

To launch the workflow, from the All Connections page, click on “+Add” and select “Create new” on the server clusters tile.

You can create hyperconverged clusters running Azure Stack HCI, or classic failover clusters running Windows Server (in one site or across two sites). In my case, I’m deploying a traditional cluster in one site.

The cluster deployment workflow is included in Windows Admin Center without needing to install an extension.

At this point, just follow the prompts and walk through the workflow. Just remember that whenever the workflow asks for an account name and password, the username MUST be in the DOMAIN\USERNAME format.

Once I had walked through the workflow, I connected to Node 1 and added the disk to my Cluster Shared Volumes.

I then verified on the other node that I could see the Cluster Shared Volume.

That’s it!! My traditional WSFC is up and running and ready to host whatever application I need to migrate to Azure.

I hope this helped. Let me know in the comments if there are any specific scenarios you would like us to review.

Cheers!

Pierre
